Understanding Interaction in Virtual Reality

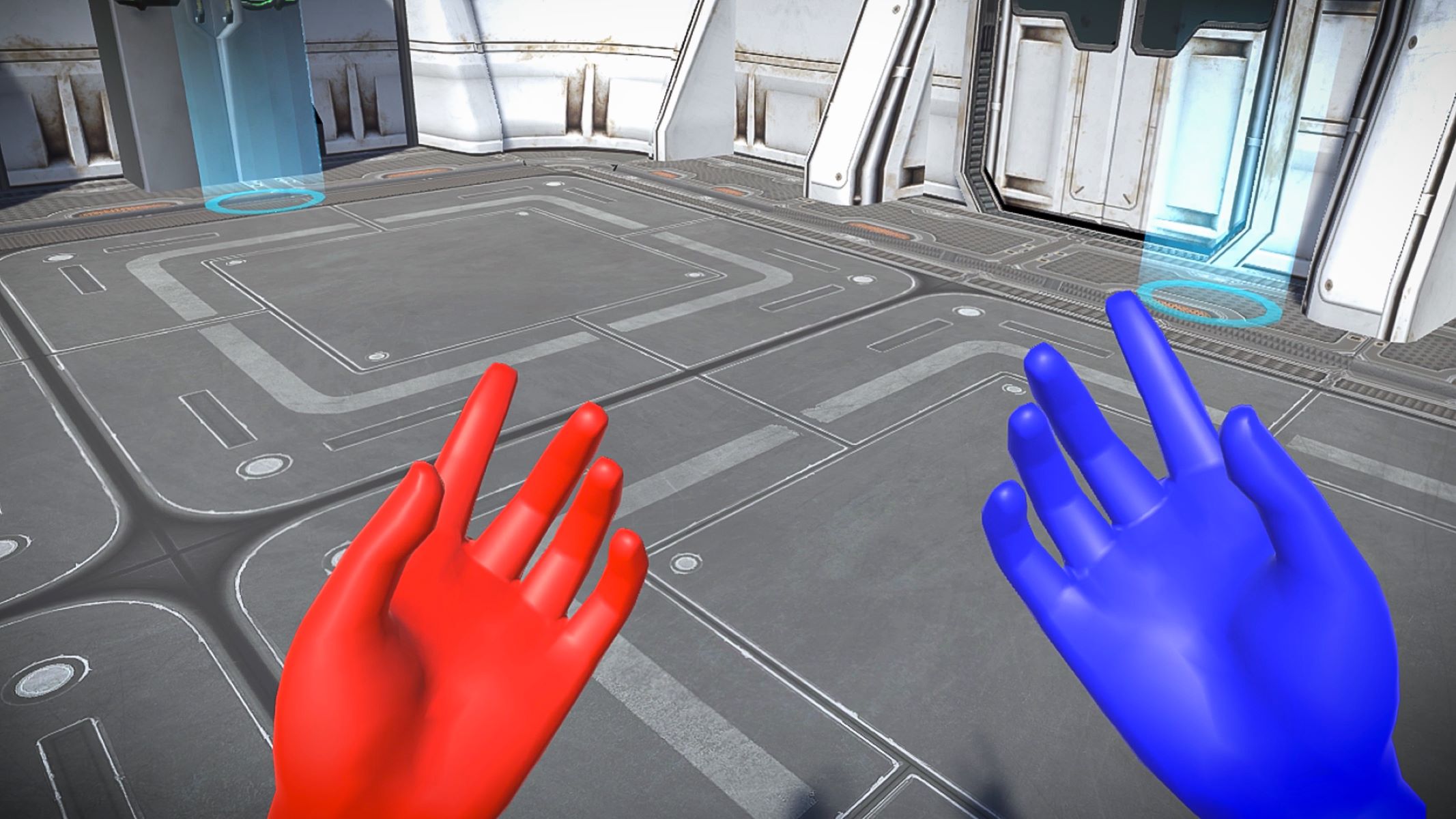

Virtual reality (VR) has revolutionized the way we interact with digital environments. Paired with Unity, the HTC Vive provides an immersive experience by letting users physically interact with objects in a virtual space. Understanding the principles behind interaction in virtual reality is crucial to creating realistic and engaging VR experiences.

Interaction in VR encompasses a wide range of actions, including picking up objects, pushing buttons, and manipulating the virtual world. The goal is to simulate real-world interactions as closely as possible, providing a sense of presence and allowing users to intuitively engage with the virtual environment.

To achieve this, Unity supports a variety of interaction techniques. One common approach relies on motion-tracked controllers, such as the HTC Vive's handheld controllers, which let users interact directly with virtual objects. Because the system tracks the position and orientation of each controller, the user's hand movements translate into natural and precise interaction.

Another key aspect of interaction in VR is the use of physics. Objects in the virtual environment should behave realistically when being interacted with. Unity’s physics engine allows developers to apply forces, constraints, and collisions to objects, enabling them to respond to user actions realistically.

Additionally, VR interaction often involves the use of user interfaces (UI). Whether it's menus, buttons, or indicators, UI elements provide crucial feedback and guidance to the user during interactions. Unity's UI system supports world-space canvases, which place menus and buttons as objects inside the scene, where users can see them and point at them in VR.

It’s important to design interactions that are comfortable and intuitive for users. This means considering factors such as the user’s reach, hand size, and ergonomics. Providing visual and auditory feedback during interactions can enhance the sense of presence and improve the overall user experience.

Understanding the principles and techniques involved in VR interaction is essential for creating captivating and immersive experiences in Unity with the HTC Vive. By leveraging Unity’s features and considering user comfort and realism, developers can create interactive virtual worlds that engage users and leave a lasting impression.

Setting Up the HTC Vive with Unity

Before you can start developing VR experiences with the HTC Vive in Unity, you need to set up the necessary hardware and software components. Here’s a step-by-step guide to help you get started:

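- Hardware Setup: Begin by setting up the HTC Vive headset, controllers, and base stations. Mount the base stations (the tracking sensors) so they have a clear view of the play area. You will calibrate tracking with SteamVR's Room Setup once the software is installed.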

- Software Installation: Install the SteamVR software and the necessary drivers for the HTC Vive on your computer.

- Unity Setup: Download and install the Unity game engine, preferably the latest version compatible with the HTC Vive. Create a new project or open an existing one.

- Importing the SteamVR Plugin: In the Unity editor, navigate to the Asset Store and search for the SteamVR Plugin. Import the plugin into your project.

- Setting Up the VR Camera: Remove or disable the scene's default Main Camera, then add the camera rig prefab from the SteamVR Plugin to your scene hierarchy. This prefab contains the components that track the headset and controllers.

- Configuring Interaction Variables: In the interaction scripts attached to your VR controllers, set up variables such as reach distance, grab strength, and release velocity. These are usually fields you define yourself (or settings exposed by an interaction toolkit), and they determine how users interact with objects in your VR scene.

- Testing the Setup: Connect your HTC Vive headset and controllers to your computer, make sure SteamVR is running, and enter Play mode in the Unity editor. Check that tracking is accurate, and test basic interactions such as grabbing and releasing objects.
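The interaction variables from the setup steps are not built into SteamVR or Unity. They live in whatever interaction script you write. Unity scripts are written in C#, but as an engine-agnostic illustration, here is a Python sketch of a hypothetical settings object that rejects nonsensical values. The field names and defaults are assumptions made for the example, not recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InteractionSettings:
    """Hypothetical tuning values for a controller interaction script."""
    reach_distance: float = 0.1          # metres from the controller within which objects can be grabbed
    grab_strength: float = 1.0           # 0..1, how firmly a held object follows the controller
    release_velocity_scale: float = 1.0  # multiplier applied to the controller velocity on release

    def __post_init__(self) -> None:
        # Reject values that would make the interaction behave nonsensically.
        if self.reach_distance <= 0:
            raise ValueError("reach_distance must be positive")
        if not 0.0 <= self.grab_strength <= 1.0:
            raise ValueError("grab_strength must be between 0 and 1")
        if self.release_velocity_scale < 0:
            raise ValueError("release_velocity_scale must be non-negative")


default_settings = InteractionSettings()
long_reach = InteractionSettings(reach_distance=0.3)
```

Freezing the object keeps one set of settings from being changed halfway through an interaction. To experiment with different values, create a new instance.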

By following these steps, you can quickly set up the HTC Vive with Unity and start developing immersive VR experiences. Remember to check for updates to the SteamVR software and Unity plugins regularly to take advantage of new features and improvements. Happy VR development!

Adding Interactable Objects to Your Scene

To create a truly immersive VR experience with the HTC Vive in Unity, you’ll want to populate your scene with objects that users can interact with. Here’s how you can add interactable objects to your scene:

- Create the Object: Start by creating or importing the 3D model for the object you want to make interactable. You can build simple shapes from Unity's built-in primitives or with the ProBuilder package, or create and import complex models from software like Blender.

- Add a Collider: In order for the object to interact with other objects and the VR controllers, you need to add a collider component to it. Unity provides a variety of colliders, such as box, sphere, and capsule colliders, that you can attach to your object.

- Add Rigidbody: If you want the object to have realistic physics behavior, attach a Rigidbody component to it. This will enable the object to respond to forces and collisions in the virtual environment.

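- Add Interaction Scripts: To make the object interactable, you'll need to add scripts that handle the interaction logic. Unity itself does not ship ready-made grab scripts, but interaction toolkits do. The SteamVR Plugin's Interaction System includes components such as Interactable and Throwable, and Unity's XR Interaction Toolkit provides XR Grab Interactable. You can also write your own. These scripts define how the object can be grabbed, released, and manipulated by the user.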

- Set Object Properties: Configure the properties of the interaction scripts to define the object’s behavior. For example, you can specify how far the user can reach to grab the object, how strong the grab force should be, and whether the object should snap to certain positions or rotations.

- Test and Iterate: Play the scene in Unity and test the interaction with the object. Make adjustments to the object’s collider, rigidbody, and interaction scripts as needed. Continuously test and iterate to achieve a satisfying and realistic interaction experience.
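Snapping, as described under Set Object Properties, comes down to two small calculations. If the object is close enough to a snap point, move it there. Then round its rotation to a fixed increment. The Python sketch below shows that logic as an engine-agnostic illustration (Unity itself would use C#). The function names, the 5 cm snap radius, and the 45-degree increment are hypothetical. In Unity, you would apply the results to the object's Transform when it is released.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def nearest_snap_point(position: Vec3, snap_points: Sequence[Vec3],
                       snap_radius: float = 0.05) -> Optional[Vec3]:
    """Return the closest snap point within snap_radius, or None if none is close enough."""
    best, best_dist = None, snap_radius
    for point in snap_points:
        dist = math.dist(position, point)
        if dist <= best_dist:
            best, best_dist = point, dist
    return best


def snap_angle(angle_degrees: float, increment: float = 45.0) -> float:
    """Round an angle to the nearest multiple of increment, wrapped to [0, 360)."""
    return (round(angle_degrees / increment) * increment) % 360.0


def snapped_position(position: Vec3, snap_points: Sequence[Vec3]) -> Vec3:
    # Keep the free position when no snap point is within range.
    target = nearest_snap_point(position, snap_points)
    return target if target is not None else position
```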

By following these steps, you can add interactable objects to your scene and provide users with a sense of presence and immersion in your VR experience. Remember to consider the scale, texture, and visual details of the objects to enhance their realism and interaction appeal. Have fun creating interactive virtual worlds with the HTC Vive in Unity!

Implementing Basic Interactions with Objects

One of the core aspects of creating an immersive VR experience with the HTC Vive in Unity is enabling users to interact with virtual objects. Here’s a step-by-step guide to implementing basic interactions with objects in your scene:

- Attach Interaction Scripts: Add interaction scripts to the VR controllers and the objects you want users to interact with. These scripts handle the grabbing and releasing logic, as well as other interaction behavior.

- Implement Grabbing Logic: In the interaction script attached to the VR controller, use Unity's input system to detect when the user presses the controller's grab button. To find out which object is within reach, give the controller a trigger collider and track interactable objects with OnTriggerEnter and OnTriggerStay. OnCollisionEnter serves the same purpose for non-trigger colliders. For these callbacks to fire, at least one of the two objects needs a Rigidbody.

- Apply Grabbing Mechanics: When the user grabs an object, update the object’s position and rotation to match the controller’s position and rotation. Consider using parenting or joint components to ensure that the object moves smoothly with the controller.

- Implement Releasing Logic: Similarly, use Unity's input system to detect when the user releases the grip button on the controller. Use OnTriggerExit (or OnCollisionExit) only to update which objects are within reach. An object leaving the controller's collider should not count as a release on its own.

- Release the Object: When the user releases the grip button, stop updating the object’s position and rotation based on the controller’s movement. You can also apply forces or velocities to the object to simulate throwing or dropping.

- Interact with Other Objects: Expand the interaction to include other objects in the scene. For example, you can implement button presses, object stacking, or puzzle-solving mechanics by detecting collisions or triggers between interactable objects.

- Add Visual Feedback: To enhance the realism and feedback during interactions, consider adding visual effects such as highlighting the grabbed object or displaying tooltips when the user gets close to an interactable object. These elements can provide guidance and improve the overall user experience.
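The grab and release steps above separate two concerns that are easy to conflate. Trigger events (OnTriggerEnter and OnTriggerExit in Unity) only track which objects are within reach, while the grip button decides when a grab starts and ends. The following Python sketch models that state machine independently of any engine. The class and method names are hypothetical stand-ins for the callbacks you would wire up in a Unity C# script.

```python
from typing import Optional, Set


class GrabController:
    """Engine-agnostic model of a controller's hover/grab/release logic."""

    def __init__(self) -> None:
        self.hovered: Set[str] = set()   # objects currently inside the controller's trigger volume
        self.held: Optional[str] = None  # object currently being held, if any

    def on_trigger_enter(self, obj: str) -> None:
        self.hovered.add(obj)

    def on_trigger_exit(self, obj: str) -> None:
        # Leaving the trigger volume does not release a held object;
        # it only means the object can no longer be picked up.
        self.hovered.discard(obj)

    def on_grip_pressed(self) -> Optional[str]:
        # Grab only if nothing is held and something is within reach.
        if self.held is None and self.hovered:
            # Deterministic choice for the example; a real script would
            # usually pick the object nearest to the controller.
            self.held = sorted(self.hovered)[0]
        return self.held

    def on_grip_released(self) -> Optional[str]:
        released, self.held = self.held, None
        return released  # the caller hands this object back to the physics engine
```

Keeping hover state separate from held state means the held object stays in the hand even when a fast swing briefly carries it outside the controller's trigger volume.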

Implementing basic interactions with objects in Unity using the HTC Vive is an exciting journey that allows users to engage with the virtual world in a natural and intuitive way. Remember to test and iterate on your interactions, ensuring that they are responsive, realistic, and enjoyable for users. Happy coding!

Grabbing and Throwing Objects

One of the key components of creating a realistic and immersive VR experience with the HTC Vive in Unity is the ability for users to grab and throw objects in the virtual environment. Here’s how you can implement grabbing and throwing mechanics:

- Attach Interaction Scripts: Make sure that both the VR controllers and the objects you want to interact with have the necessary interaction scripts attached to them.

- Implement Grabbing Logic: In the script attached to the VR controller, use Unity's input system to detect when the user presses the grip button. Use OnTriggerEnter and OnTriggerStay on a trigger collider (or OnCollisionEnter for non-trigger colliders) to find interactable objects within reach.

- Record Initial Grab Position: When the user initiates a grab, record the grabbed object's position and rotation relative to the controller, expressed in the controller's local space. This offset keeps the object from jumping to the controller's origin while it is held.

- Update Object Position: Continuously update the position and rotation of the grabbed object to match that of the VR controller. Take into account the offset recorded in the previous step to maintain a natural and realistic interaction.

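- Apply Release Mechanics: Create a method that releases the grabbed object when the user lets go of the grip button. Stop updating the object's position and rotation from the controller's movement and hand the object back to the physics engine. To simulate a throw, set the Rigidbody's velocity (and optionally its angular velocity) from the controller's recent motion. To drop the object, leave its velocity near zero.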

- Add Physics Constraints: To enhance the realism of grabbing and throwing objects, consider applying physics constraints to the grabbed object. These constraints can limit the rotation or movement of the object, creating a more natural and believable interaction.

- Adjust Physics Properties: Experiment with the mass and drag of the grabbed object's Rigidbody, and with the friction of its physics material, to achieve the desired behavior. Tweak these properties so the object feels appropriately weighted and responds well to user input.

- Fine-tune Feedback: Enhance the user experience by providing visual, audio, and haptic feedback during the grabbing and throwing process. Add effects such as controller vibration, sound effects, and particle systems to strengthen the sense of interaction and impact.

- Test and Iterate: Playtest your VR experience and gather user feedback to improve the grabbing and throwing mechanics. Fine-tune the parameters, physics properties, and feedback elements to provide users with a satisfying and immersive interaction.
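Two calculations carry most of the grab-and-throw steps above. The first stores the object's pose in the controller's local space at grab time and reapplies that offset every frame. The second estimates a throw velocity from the last few controller positions. Unity's Transform and Quaternion types do this math for you in C#. The Python sketch below spells it out with plain tuples as an engine-agnostic illustration. Quaternions are assumed to be unit length and ordered (w, x, y, z), and all function and class names are hypothetical.

```python
from collections import deque
from typing import Deque, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed unit length


def q_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b (apply b, then a)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def q_conj(q: Quat) -> Quat:
    w, x, y, z = q
    return (w, -x, -y, -z)  # inverse of a unit quaternion


def q_rotate(q: Quat, v: Vec3) -> Vec3:
    # With u the vector part of q: t = 2(u x v), v' = v + w*t + u x t.
    w, ux, uy, uz = q
    tx = 2 * (uy * v[2] - uz * v[1])
    ty = 2 * (uz * v[0] - ux * v[2])
    tz = 2 * (ux * v[1] - uy * v[0])
    return (v[0] + w * tx + (uy * tz - uz * ty),
            v[1] + w * ty + (uz * tx - ux * tz),
            v[2] + w * tz + (ux * ty - uy * tx))


def grab_offset(ctrl_pos: Vec3, ctrl_rot: Quat,
                obj_pos: Vec3, obj_rot: Quat) -> Tuple[Vec3, Quat]:
    """Express the object's pose in the controller's local space at grab time."""
    inv = q_conj(ctrl_rot)
    rel = (obj_pos[0] - ctrl_pos[0], obj_pos[1] - ctrl_pos[1], obj_pos[2] - ctrl_pos[2])
    return q_rotate(inv, rel), q_mul(inv, obj_rot)


def follow(ctrl_pos: Vec3, ctrl_rot: Quat,
           local_pos: Vec3, local_rot: Quat) -> Tuple[Vec3, Quat]:
    """Pose the held object so it keeps the offset recorded at grab time."""
    wx, wy, wz = q_rotate(ctrl_rot, local_pos)
    return (ctrl_pos[0] + wx, ctrl_pos[1] + wy, ctrl_pos[2] + wz), q_mul(ctrl_rot, local_rot)


class VelocityTracker:
    """Estimate throw velocity from the last few controller position samples."""

    def __init__(self, max_samples: int = 5) -> None:
        self.samples: Deque[Tuple[float, Vec3]] = deque(maxlen=max_samples)

    def add(self, time_s: float, position: Vec3) -> None:
        self.samples.append((time_s, position))

    def velocity(self) -> Vec3:
        if len(self.samples) < 2:
            return (0.0, 0.0, 0.0)
        (t0, p0), (t1, p1) = self.samples[0], self.samples[-1]
        dt = t1 - t0
        return ((p1[0] - p0[0]) / dt, (p1[1] - p0[1]) / dt, (p1[2] - p0[2]) / dt)
```

Averaging over a short window, rather than using only the last frame, smooths out tracking jitter at the moment of release. The window length is a tuning choice.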

Implementing grabbing and throwing mechanics in Unity with the HTC Vive adds a new level of realism and engagement to your VR experience. With careful attention to detail and user feedback, you can create interactions that make users feel like they are truly manipulating objects in a virtual world. Happy grabbing and throwing!

Applying Object Physics and Constraints

Applying physics and constraints to objects in a virtual reality (VR) environment is crucial for creating realistic and immersive experiences with the HTC Vive in Unity. By incorporating physics and constraints, you can simulate the behavior of objects and ensure that they interact believably with the user and the virtual environment. Here’s how you can apply object physics and constraints:

- Add a Rigidbody Component: Attach a Rigidbody component to the objects you want to interact with. The Rigidbody component enables objects to respond to physics simulations, such as gravity and collisions.

- Configure Rigidbody Properties: Adjust the mass, drag, and angular drag properties of the Rigidbody component to control how objects behave under different forces. Consider the size, weight, and material properties of the object to achieve a realistic physics simulation.

- Apply Collider Components: Attach Collider components to the objects. Colliders define the shape and size of the object’s physical representation, allowing for accurate collision detection and response with other objects.

- Use Physics Materials: Apply physics materials to the Collider components to control the friction and bounciness of object interactions. Adjusting these properties can make objects feel more or less slippery and affect how they respond to collisions.

- Implement Object Constraints: Apply constraints to restrict the movement of objects where needed. The Rigidbody component's Constraints settings let you freeze position or rotation along individual axes, for example to hold an object in a fixed position or to stop it from tipping over. Joint components, described next, create more flexible connections between objects.

- Set Up Object Joints: Use joint components to create connections between objects. Joints simulate physical connections, such as hinges or springs, so that objects move realistically in relation to one another. Unity provides Hinge, Fixed, Spring, Character, and Configurable joints. Experiment with different joint types and parameters to achieve the desired behavior.

- Combine Constraints and Joints: Combine constraints and joints to create complex interactions between objects. For example, a door can use a Hinge Joint for rotation with the joint's limits enabled, so it only swings through a certain range, and a spring on the same joint to pull it closed.

- Test and Fine-tune: Regularly test your physics and constraints in the VR environment to make sure objects behave as expected. Use Unity's Physics Debugger to visualize colliders and spot issues, then adjust physical properties and constraints to achieve the desired interactions.
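Unity's Hinge Joint handles limits and springs inside the physics engine. The behavior it aims for can be illustrated with a simplified one-axis model: a spring pulls the door toward a rest angle, damping bleeds off speed, and the limits clamp the angle. The Python sketch below is that simplified model, integrated with semi-implicit Euler steps. It is not Unity's solver, and the stiffness, damping, and limit values are arbitrary assumptions.

```python
from typing import List


def simulate_hinge(start_angle: float, min_angle: float, max_angle: float,
                   stiffness: float = 20.0, damping: float = 5.0,
                   rest_angle: float = 0.0, inertia: float = 1.0,
                   dt: float = 0.01, steps: int = 1000) -> List[float]:
    """Return the hinge angle (degrees) at each step of a damped, limited spring."""
    angle, velocity = start_angle, 0.0
    history = []
    for _ in range(steps):
        # Spring torque pulls toward the rest angle; damping opposes motion.
        torque = -stiffness * (angle - rest_angle) - damping * velocity
        velocity += (torque / inertia) * dt  # update velocity first (semi-implicit Euler)
        angle += velocity * dt
        # Limits: clamp the angle and stop motion at the limit.
        if angle < min_angle:
            angle, velocity = min_angle, 0.0
        elif angle > max_angle:
            angle, velocity = max_angle, 0.0
        history.append(angle)
    return history


# A door opened to 80 degrees and let go swings shut and stops at its 0-degree limit.
door_angles = simulate_hinge(start_angle=80.0, min_angle=0.0, max_angle=90.0)
```

In this model, stiffness sets how quickly the door swings back, damping sets how much it overshoots, and the limits stop it from passing through the frame. These roles loosely correspond to the spring and limit settings you tune on a Hinge Joint.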

By applying object physics and constraints in Unity with the HTC Vive, you can create realistic and engaging interactions in your VR experience. Carefully consider the properties and constraints of objects to match the desired behavior and achieve a high level of immersion. Experiment, test, and iterate to provide users with captivating and believable object interactions.

Creating Interactive UI Elements

Interactive user interface (UI) elements play a vital role in enhancing the user experience and providing intuitive navigation in virtual reality (VR) environments created with Unity and the HTC Vive. These elements allow users to interact with menus, buttons, and other visual indicators within the virtual space. Here’s how you can create interactive UI elements:

- Create 3D UI Objects: Start by designing and creating 3D models or prefabs for your UI elements. This can include buttons, sliders, panels, or any other interactive components you envision.

- Add Collider Components: Attach Collider components to the UI elements to enable collision detection and interaction. Ensure they match the shape and size of the visual representation accurately.

- Implement UI Interaction Scripts: Write scripts that control the behavior of the UI elements. These scripts should handle input detection from the VR controllers and respond accordingly, such as highlighting buttons or updating values on sliders.

- Use Unity UI Framework: Incorporate Unity’s UI framework to create interactive UI elements. Unity’s UI system provides useful components like buttons, sliders, and canvases that can be customized and integrated seamlessly into your VR experience.

- Handle Controller Input: Detect input from the VR controllers using Unity’s input system. Implement actions that map to UI interactions, such as button presses or touchpad swipes, and associate them with corresponding UI element behaviors.

- Create Visual Feedback: Provide visual feedback to users when interacting with UI elements. This can include highlighting buttons or changing the appearance of elements based on user input to indicate selection or activation.

- Enhance with Haptic Feedback: Consider adding haptic feedback to UI interactions. Utilize the HTC Vive’s haptic capabilities to provide subtle vibrations as users interact with different UI elements, adding a tactile component to the experience.

- Include Audio Feedback: Incorporate audio cues to enhance the interactivity of UI elements. Play sounds or provide audio feedback when users interact with buttons, sliders, or other UI elements, creating a more immersive and engaging experience.

- Test and Iterate: Thoroughly test your interactive UI elements in the VR environment. Gather user feedback and iterate on the design and behavior of the UI based on their experiences. Continuously refine and improve to ensure a seamless and intuitive user interface.
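For a slider built from 3D objects rather than Unity's UI components, the interaction script has to turn the controller's position into a value. One common approach projects the controller position onto the slider's track and clamps the result to the ends of the track. The Python sketch below shows that math as an engine-agnostic illustration (Unity scripts would use C#). The function name and parameters are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def slider_value(controller_pos: Vec3, track_start: Vec3, track_end: Vec3,
                 min_value: float = 0.0, max_value: float = 1.0) -> float:
    """Map a controller position onto a slider track and return the slider value."""
    axis = [e - s for s, e in zip(track_start, track_end)]
    rel = [p - s for s, p in zip(track_start, controller_pos)]
    length_sq = sum(a * a for a in axis)
    if length_sq == 0.0:
        return min_value  # degenerate track: start and end coincide
    # Project onto the track direction, then clamp to the ends of the track.
    t = sum(r * a for r, a in zip(rel, axis)) / length_sq
    t = max(0.0, min(1.0, t))
    return min_value + t * (max_value - min_value)
```

Because only the component along the track counts, small sideways or vertical hand movements do not change the value, which makes the slider feel steadier in VR.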

By creating interactive UI elements in Unity with the HTC Vive, you can provide users with intuitive control and navigation within the virtual environment. Incorporate visual, haptic, and audio feedback to enhance the interactivity and usability of the UI. Experiment, iterate, and gather user feedback to create a compelling and immersive VR user interface.

Using Raycasting for Object Selection

Raycasting is a powerful technique used in virtual reality (VR) development with Unity and the HTC Vive to enable object selection. By casting a virtual ray from the VR controller, you can detect and interact with objects in the virtual environment. Here’s how you can use raycasting for object selection:

- Set Up Raycasting: Attach a script to the VR controller that will handle the raycasting functionality. In this script, create a ray that starts at the controller's position and points along the controller's forward direction.

- Perform Raycasting: Use Unity's physics raycasting functions to determine whether the ray intersects any objects in the scene. Physics.Raycast reports the first collider hit, while Physics.RaycastAll returns every collider along the ray. You can set a maximum distance for the ray to limit the range of selection.

- Detect Object Interaction: Once the ray intersects an object, check whether the object is marked as interactable or has specific interaction scripts attached. This ensures that only relevant objects are selectable and prevents unintended interactions.

- Highlight Selected Objects: Provide visual feedback to indicate which objects are currently selected. Modify the material or color of the selected object to make it stand out from the rest of the scene.

- Handle User Input: Define how users can interact with the selected object using input from the VR controllers. For example, you can allow users to grab, rotate, or manipulate the object based on their input.

- Update Object Position and Rotation: Continuously update the position and rotation of the selected object based on the user’s input. Keep track of any offset between the VR controller and the object to maintain a natural and responsive interaction.

- Release the Object: Provide a way for users to release the selected object, either by pressing a specific button or performing a predefined action. Stop updating the object’s position and rotation when it is released.

- Fine-tune Raycasting Parameters: Experiment with the raycasting parameters, such as ray length or object layers, to ensure accurate and reliable object selection. Adjust these parameters to match the specific requirements and constraints of your VR experience.

- Test and Iterate: Test the raycasting functionality in your VR environment and gather user feedback. Iterate on the selection mechanics, visual feedback, and user interaction to create a smooth and intuitive object selection experience.
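Unity's Physics.Raycast performs the intersection test against real colliders and accepts a maximum distance and a layer mask. To show what that query computes, the Python sketch below implements a simplified stand-in. It tests a ray against sphere-shaped targets and returns the nearest hit within range whose layer is included in the mask. The SphereTarget type and the layer numbers are hypothetical, and spheres stand in for Unity's collider shapes to keep the sketch short.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SphereTarget:
    name: str
    center: Vec3
    radius: float
    layer: int  # layer index, tested against a bitmask in the same way as Unity layers


def ray_sphere(origin: Vec3, direction: Vec3, target: SphereTarget) -> Optional[float]:
    """Distance along a unit-length ray to the sphere surface, or None on a miss."""
    oc = [o - c for o, c in zip(origin, target.center)]
    b = sum(a * d for a, d in zip(oc, direction))
    c = sum(a * a for a in oc) - target.radius ** 2
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    t = -b - root
    if t < 0:
        t = -b + root  # the ray starts inside the sphere
    return t if t >= 0 else None


def raycast(origin: Vec3, direction: Vec3, targets: Sequence[SphereTarget],
            max_distance: float, layer_mask: int) -> Optional[Tuple[SphereTarget, float]]:
    """Return the nearest target hit within max_distance whose layer is in layer_mask."""
    length = math.sqrt(sum(d * d for d in direction))
    unit = tuple(d / length for d in direction)
    best = None
    for target in targets:
        if not (layer_mask >> target.layer) & 1:
            continue  # filtered out by the layer mask
        t = ray_sphere(origin, unit, target)
        if t is not None and t <= max_distance and (best is None or t < best[1]):
            best = (target, t)
    return best
```

Filtering by layer before the intersection test is cheap, and it mirrors how a layer mask in Unity keeps the pointer from selecting walls or other non-interactable geometry.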

By utilizing raycasting for object selection in Unity with the HTC Vive, you can provide users with a natural and accurate way to interact with virtual objects. Keep in mind factors like performance optimization, collision detection, and feedback mechanisms to create a seamless and satisfying selection process in your VR experience.

Implementing Object Interaction Feedback

Effective object interaction feedback is essential for creating engaging and intuitive virtual reality (VR) experiences with the HTC Vive in Unity. By providing visual, haptic, and auditory feedback, you can enhance the sense of presence and improve the user’s understanding of their interactions in the virtual environment. Here’s how you can implement object interaction feedback:

- Visual Feedback: Visual cues are crucial in conveying information about object interactions. Implement visual feedback by changing the color, material, or texture of objects when they are interacted with or selected. Highlight interactable objects to guide the user’s attention and indicate potential interaction points.

- Icons and Labels: Use icons or labels to provide additional information about objects and their interactive capabilities. Add tooltips or information panels that display relevant details when the user hovers over or interacts with objects.

- Haptic Feedback: Utilize the haptic capabilities of the HTC Vive controllers to provide tactile feedback during interactions. Trigger vibrations or pulsations to simulate the sensation of touch when interacting with objects. Vary the intensity and duration of haptic feedback based on the type and significance of the interaction.

- Audio Feedback: Including audio cues is an effective way to enhance object interaction feedback. Play sound effects or provide auditory feedback when users interact with objects or perform certain actions, such as grabbing, rotating, or activating an object. Use spatial audio techniques to simulate realistic sound positioning in the VR environment.

- Use Animation: Incorporate animations to provide feedback on object interactions. For example, when grabbing an object, animate its movement to mimic the user’s hand motion. Use animation to simulate physical responses, such as the object flexing or bending when applying force.

- Consider Physics Principles: Implement physics-based feedback to enhance the realism of object interactions. Apply forces, collisions, and gravity to simulate the responses and dynamics of objects realistically when users interact with them.

- Customize Feedback: Make the feedback customizable to accommodate individual preferences or simulate different interaction scenarios. Provide options for users to adjust the intensity, frequency, or type of feedback based on their comfort and immersion preferences.

- Test and Iterate: Regularly test the object interaction feedback in the VR environment and gather user feedback. Iterate on the feedback mechanisms, adjusting elements such as timing, intensity, and visual indicators based on user responses, to create a more intuitive and immersive interaction experience.
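Varying haptic intensity and duration by interaction, and letting users scale it, can be reduced to a small mapping function. The Python sketch below converts an impact speed into a pulse amplitude and duration, with a user preference multiplier. The speed thresholds and the 50 ms maximum duration are assumptions. The resulting numbers would be passed to whichever vibration call your VR SDK provides. The sketch does not call SteamVR or any other SDK.

```python
from typing import Optional, Tuple


def haptic_pulse(impact_speed: float, user_scale: float = 1.0,
                 min_speed: float = 0.2, max_speed: float = 3.0,
                 max_duration: float = 0.05) -> Optional[Tuple[float, float]]:
    """Map an impact speed (m/s) to (amplitude 0..1, duration in seconds).

    Returns None for impacts too gentle to be worth a pulse, or when the
    user has turned haptics off (user_scale <= 0).
    """
    if impact_speed < min_speed or user_scale <= 0.0:
        return None
    # Normalise the speed into 0..1 between the two thresholds.
    strength = min(1.0, (impact_speed - min_speed) / (max_speed - min_speed))
    amplitude = min(1.0, strength * user_scale)
    duration = max_duration * strength
    return amplitude, duration
```

In this version, the user preference scales only the amplitude, so a user who lowers the intensity still gets pulses of the same length.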

Implementing effective object interaction feedback in Unity with the HTC Vive can greatly enhance user engagement and immersion in your VR experience. By combining visual, haptic, and audio feedback and iterating on these elements, you can create a more intuitive and compelling interaction environment that captivates users and provides a sense of realism in the virtual world.

Handling Object Collision and Interaction Events

Handling object collision and interaction events is critical for creating realistic and interactive virtual reality (VR) experiences with the HTC Vive in Unity. By detecting and responding to collisions and interactions between objects, you can create dynamic and engaging interactions for users. Here’s how you can handle object collision and interaction events:

- Set Up Collision Detection: Ensure that the objects in your VR environment have Collider components attached to them. These colliders define the shape and size of the object for collision detection purposes.

- Define Interaction Zones: Identify specific areas on objects where interactions should trigger events. These can be designated as zones or specific collider components, such as buttons or handles, that are associated with interaction scripts.

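- Detect Collisions: Implement collision detection logic to identify when objects come into contact with each other. Unity provides callbacks such as OnCollisionEnter, which fires when solid colliders touch, and OnTriggerEnter, which fires when something enters a collider marked Is Trigger. For either callback to fire, at least one of the two objects must have a Rigidbody.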

- Handle Interaction Events: Write scripts to respond to interaction events triggered by collisions. This can involve executing specific actions, such as activating mechanisms, playing sound effects, or triggering animations, based on the nature of the collision or interaction.

- Implement Grab and Release Actions: When the user grabs or releases an object, use events and triggers to execute corresponding actions. Adjust the position and rotation of the object based on the user’s input during these events.

- Apply Force and Constraints: Use Unity’s physics system to apply forces or constraints to objects during collision or interaction events. This can create realistic responses, such as objects bouncing off each other or adhering to specific movements or limitations.

- Consider Physics Material Properties: Adjust the friction, bounciness, and other physics material properties of objects to control how they interact with each other. Different material settings can simulate various surface types and further enhance the realism of interactions.

- Customize Event Triggering: Tailor the conditions for event triggering to suit your VR experience. Consider factors such as object types, collision velocities, or proximity to fine-tune when and how events are triggered during collisions and interactions.

- Test and Iterate: Regularly test collision and interaction events in the VR environment to ensure desired behavior. Analyze user feedback and iterate on event handling scripts, physics properties, and object interactions to create more immersive and satisfying experiences.
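Customizing event triggering usually means filtering collisions by what was hit and how hard, then rate-limiting the response so a rattling object does not fire the same sound dozens of times. The Python sketch below models that filtering as an engine-agnostic illustration. In a Unity C# script, you might feed it from OnCollisionEnter, passing the other object's tag and collision.relativeVelocity.magnitude. The class names, fields, and thresholds are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class CollisionRule:
    min_speed: float                     # ignore contacts slower than this (m/s)
    cooldown: float                      # minimum seconds between triggers of this rule
    action: Callable[[str, float], None]
    last_fired: float = float("-inf")


@dataclass
class CollisionEvents:
    """Route collision reports to actions based on tag, speed, and cooldown."""
    rules: Dict[str, List[CollisionRule]] = field(default_factory=dict)

    def register(self, tag: str, rule: CollisionRule) -> None:
        self.rules.setdefault(tag, []).append(rule)

    def report(self, other_tag: str, relative_speed: float, time_s: float) -> int:
        """Call from your collision callback; returns how many actions fired."""
        fired = 0
        for rule in self.rules.get(other_tag, []):
            if relative_speed < rule.min_speed:
                continue  # too gentle to count
            if time_s - rule.last_fired < rule.cooldown:
                continue  # fired too recently
            rule.last_fired = time_s
            rule.action(other_tag, relative_speed)
            fired += 1
        return fired
```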

By carefully handling object collision and interaction events in Unity with the HTC Vive, you can create interactive and dynamic VR experiences. From activating mechanisms to triggering animations or sound effects, the proper recognition and response to collisions and interactions contribute to a more realistic and engaging virtual world.

Fine-tuning Object Interactions for a Better User Experience

Fine-tuning object interactions is a crucial step in creating a seamless and immersive virtual reality (VR) experience with the HTC Vive in Unity. By iterating and optimizing the interaction mechanics, you can enhance the user experience and create more intuitive and satisfying interactions. Here are some key considerations for fine-tuning object interactions:

- Reach and Accessibility: Place objects where users can reach them without straining. Consider the ergonomics of the user's movements and adjust the placement and size of objects accordingly.

- Interaction Affordances: Implement visual cues that indicate the interactive nature of objects. Use design elements such as button shapes, handles, or toggles to signal how users can interact with the objects.

- Responsiveness: Aim for immediate, responsive interactions that give users a sense of control and connection with the virtual objects. Minimize latency and keep the application running at the headset's refresh rate (90 Hz on the original HTC Vive), because dropped frames make interactions feel delayed.

- Collision Detection: Refine collision detection to ensure accurate and reliable interactions, so that objects do not pass through each other or behave erratically during collisions. For fast-moving objects such as thrown items, consider setting the Rigidbody's Collision Detection mode to Continuous to reduce tunneling.

- Physics Constraints: Fine-tune physics constraints applied to objects to create more realistic interactions. Adjust parameters such as stiffness, limits, or damping to achieve desired behavior and prevent objects from exhibiting unnatural movements.

- Object Weight and Scale: Consider the perceived weight and scale of objects to achieve a more convincing and immersive interaction experience. Adjust object mass in relation to the physics simulation and ensure that grabbing, throwing, and manipulation feel natural.

- Audio and Visual Feedback: Continuously refine audio and visual feedback elements. Ensure that the feedback is clear and meaningful, providing users with information and cues about their interactions. Consider user preferences and adjust the intensity or prominence of feedback accordingly.

- Comfort and Safety: Prioritize user comfort and safety by implementing interactions that minimize strain and reduce the risk of motion sickness or discomfort. Test and iterate on the mechanics to create a comfortable and enjoyable experience for a wide range of users.

- User Testing and Iteration: Regularly test the interactions with users and gather feedback to identify areas for improvement. Gain insights into user preferences, challenges, and suggestions to iteratively refine and enhance the object interactions based on real-world user experiences.
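Responsiveness is easiest to reason about as a per-frame time budget. At a refresh rate of 90 Hz, the rate of the original HTC Vive, each frame has 1000 / 90 ≈ 11.1 ms. The Python sketch below computes that budget and reports how many recorded frame times exceed it. The frame-time list stands in for numbers you would collect with Unity's Profiler or your own timing code, and the function names are hypothetical.

```python
from typing import Sequence, Tuple


def frame_budget_ms(refresh_hz: float) -> float:
    """Time available per frame, in milliseconds, at the given refresh rate."""
    return 1000.0 / refresh_hz


def over_budget(frame_times_ms: Sequence[float], refresh_hz: float = 90.0) -> Tuple[int, float]:
    """Return (number of frames over budget, fraction of frames over budget)."""
    budget = frame_budget_ms(refresh_hz)
    late = sum(1 for t in frame_times_ms if t > budget)
    fraction = late / len(frame_times_ms) if frame_times_ms else 0.0
    return late, fraction
```

A rising over-budget fraction after a change to an interaction (for example, more physics objects or heavier feedback effects) is a quick signal that the change is costing responsiveness.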

By fine-tuning object interactions in Unity with the HTC Vive, you can create a more immersive and enjoyable VR experience. Continuously iterate on the mechanics, responsiveness, and feedback elements to provide users with intuitive and satisfying interactions that feel natural and enhance their sense of presence in the virtual world.

Handling Inventory and Object Management

Properly managing inventory and objects is essential for creating immersive and interactive virtual reality (VR) experiences with the HTC Vive in Unity. By implementing inventory systems and object management techniques, you can provide users with a seamless and organized way to interact with and keep track of objects within the virtual environment. Here are some key considerations for handling inventory and object management:

- Create Inventory System: Design and implement an inventory system that allows users to collect and store objects. This system should provide a way for users to add and remove objects, as well as view and interact with the collected items.

- Inventory UI: Develop a user interface (UI) for the inventory system that is intuitive and visually appealing. Use buttons, icons, or thumbnails to represent the objects in the inventory, and provide clear indications of object selection and use.

- Item Information: Include relevant information about collected objects within the inventory UI. This can include item descriptions, properties, or usage instructions, providing users with a better understanding of each object’s purpose and functionality.

- Object Serialization: Serialize object data to retain their state across different scenes or save and load game sessions. Store information such as position, rotation, and object properties, so that objects can be correctly restored when the user re-enters the scene.

- Object Placement and Organization: Determine how users can place and organize objects within the virtual environment. Consider implementing object snapping features, grid systems, or specific locations where objects can be placed for improved organization and cleanliness.

- Object Interactions within Inventory: Define how users can interact with objects while they are in the inventory. This can include inspecting objects, combining items, or using objects in specific ways to solve puzzles or progress in the VR experience.

- Object Spawning: Develop mechanisms to dynamically spawn objects within the scene. Create conditions or triggers that initiate the appearance of specific objects based on user actions or predefined events, providing a sense of progression and discovery.

- Object Destruction or Removal: Determine how objects can be removed from or destroyed in the virtual environment. Implement features such as object deletion, disposal, or recycling to prevent clutter within the scene.

- Optimization: Consider performance techniques such as object pooling, which reuses objects instead of repeatedly instantiating and destroying them, and occlusion culling, which skips rendering objects hidden behind others. Both help keep frame times stable when the VR environment contains a large number of objects.

- User Testing and Iteration: Regularly test the inventory and object management systems with users to gather feedback and identify areas for improvement, then iterate on the design and functionality based on what you learn.
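The item information and in-inventory combining described above can be modeled with a small data structure. The sketch below is engine-agnostic Python rather than Unity C#; the `Item` and `Inventory` classes, the recipe table, and the example items are all hypothetical and only illustrate one possible design.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Item:
    """Hypothetical inventory entry: an ID plus the text shown in the inventory UI."""
    item_id: str
    description: str


@dataclass
class Inventory:
    items: dict = field(default_factory=dict)    # item_id -> Item
    recipes: dict = field(default_factory=dict)  # frozenset({id_a, id_b}) -> resulting Item

    def add(self, item: Item) -> None:
        self.items[item.item_id] = item

    def describe(self, item_id: str) -> str:
        """Return the description to display for a stored item."""
        return self.items[item_id].description

    def combine(self, first_id: str, second_id: str):
        """Replace two stored items with their combination if a recipe exists.

        Returns the new Item, or None if the combination is not allowed.
        """
        if first_id == second_id:
            return None
        if first_id not in self.items or second_id not in self.items:
            return None
        # frozenset makes the recipe order-independent: A+B == B+A
        result = self.recipes.get(frozenset((first_id, second_id)))
        if result is None:
            return None
        del self.items[first_id]
        del self.items[second_id]
        self.add(result)
        return result


# Example: combining a battery with an empty flashlight
inventory = Inventory()
inventory.add(Item("battery", "A charged battery."))
inventory.add(Item("flashlight", "A flashlight with no battery."))
inventory.recipes[frozenset(("battery", "flashlight"))] = Item(
    "lit_flashlight", "A working flashlight."
)
crafted = inventory.combine("flashlight", "battery")
```

Keying recipes by an unordered `frozenset` lets users combine items in either order, which is convenient when each hand may be holding one of them.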
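To illustrate the serialization idea, here is a minimal round-trip sketch in engine-agnostic Python: each object's position, rotation (as an x, y, z, w quaternion), and custom properties are written to JSON and read back. The `ObjectState` type and its fields are hypothetical; in a Unity project you would express the same idea in C#, for example with `JsonUtility`, and apply the restored values to each object's `Transform`.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ObjectState:
    """Hypothetical snapshot of one scene object's persistent state."""
    object_id: str
    position: tuple          # (x, y, z) in world units
    rotation: tuple          # quaternion (x, y, z, w)
    properties: dict = field(default_factory=dict)


def save_states(states) -> str:
    """Serialize a list of ObjectState snapshots to a JSON string."""
    return json.dumps([asdict(state) for state in states])


def load_states(text: str):
    """Rebuild ObjectState snapshots from a string produced by save_states."""
    restored = []
    for entry in json.loads(text):
        restored.append(
            ObjectState(
                object_id=entry["object_id"],
                # JSON has no tuple type, so arrays come back as lists
                position=tuple(entry["position"]),
                rotation=tuple(entry["rotation"]),
                properties=entry["properties"],
            )
        )
    return restored


crate = ObjectState("crate_01", (1.0, 0.5, -2.0), (0.0, 0.0, 0.0, 1.0), {"opened": True})
saved = save_states([crate])
```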
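Grid snapping, one of the placement options mentioned above, comes down to rounding each coordinate to the nearest multiple of a cell size measured from a grid origin. This engine-agnostic Python sketch assumes a uniform grid; `snap_to_grid`, the 0.25-unit default cell size, and the origin parameter are illustrative choices, not part of any Unity API.

```python
import math


def snap_to_grid(position, cell_size=0.25, origin=(0.0, 0.0, 0.0)):
    """Snap an (x, y, z) position to the nearest point of a uniform grid.

    cell_size and origin are hypothetical tuning values, in world units.
    """
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    snapped = []
    for p, o in zip(position, origin):
        # floor(x + 0.5) rounds halves up consistently, unlike Python's round(),
        # which rounds halves to the nearest even number
        steps = math.floor((p - o) / cell_size + 0.5)
        snapped.append(o + steps * cell_size)
    return tuple(snapped)
```

A common refinement is to snap only when the released object is within a small distance of a grid point, so that free placement still works elsewhere.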
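Object pooling, mentioned under Optimization, also gives spawning and removal a natural shape: spawning takes an object from the pool and removal returns it. The minimal sketch below is engine-agnostic Python with a hypothetical `ObjectPool` class; in Unity the factory would typically instantiate a prefab and releasing would deactivate the GameObject, and recent Unity versions also provide built-in pooling classes in the `UnityEngine.Pool` namespace.

```python
class ObjectPool:
    """Minimal object pool: reuse released objects instead of building new ones.

    `factory` is any zero-argument callable that creates a fresh object.
    """

    def __init__(self, factory, max_size=32):
        self._factory = factory
        self._free = []            # released objects waiting to be reused
        self._max_size = max_size  # cap on how many idle objects are kept
        self.created = 0           # how many objects the factory has built

    def acquire(self):
        """Spawn: reuse an idle object if there is one, otherwise build one."""
        if self._free:
            return self._free.pop()
        self.created += 1
        return self._factory()

    def release(self, obj):
        """Remove: return an object for later reuse; drop it if the pool is full."""
        if len(self._free) < self._max_size:
            self._free.append(obj)


pool = ObjectPool(factory=dict)
first = pool.acquire()
pool.release(first)
second = pool.acquire()
```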

By effectively handling inventory and object management in Unity with the HTC Vive, you can provide users with a seamless and organized way to interact with objects within the virtual environment. Implementing intuitive user interfaces, serialization, and interactive features will enhance the immersion and enjoyment of the VR experience.

Advanced Interaction Techniques with the HTC Vive

Combined with Unity, the HTC Vive supports a wide range of advanced interaction techniques that can increase immersion and engagement in virtual reality (VR) experiences. These techniques go beyond basic object interaction and give users more complex and realistic ways to interact with the virtual environment. Here are some advanced interaction techniques you can explore:

- Multi-Tool Interaction: Implement a multi-tool system that allows users to switch between different interaction modes or tools. Each tool can have unique functionalities, such as a paintbrush for drawing in 3D space, a laser pointer for precise selection, or a teleportation device for moving around the environment.

- Gestural Interaction: Use controller gestures or hand-pose recognition to trigger specific actions or interactions. For example, users can perform a gesture to activate an ability or cast a spell, which makes the experience feel more immersive and intuitive.

- Physics-based Interaction: Implement more advanced physics simulations so that objects respond realistically to interaction. Taking weight, momentum, and other physical properties into account makes objects feel more convincing to push, lift, throw, and manipulate.

- Hand Interaction: Where hand tracking is available for your hardware setup, use it to enable direct hand interaction within the VR environment. This allows users to reach out and touch objects or manipulate them using their natural hand movements.

- Object Customization: Provide options for users to customize and personalize objects within the VR environment. Enable color selection, texture mapping, or object modifications to allow users to create unique and personalized experiences.

- Body Interaction: Incorporate full-body tracking (for instance, with Vive Trackers worn on the feet and waist) to let users interact with objects using their entire body. For example, users can kick a ball or push and pull objects to solve puzzles or complete tasks.

- Speech Recognition: Integrate speech recognition technology to allow users to interact with objects or control the environment using voice commands. Users can speak commands to activate certain functionalities or trigger events within the VR experience.

- Social Interaction: Explore multiplayer capabilities to enable users to interact and collaborate with others within the VR environment. Users can take part in cooperative activities, solve puzzles together, or simply socialize, strengthening the sense of presence and shared experience.

- Emotional Interaction: Experiment with techniques that evoke emotional responses from users. For example, dynamically adjust lighting, sound, or visual effects based on user actions or contextual triggers to create a more immersive and emotionally engaging experience.

- Contextual Interaction: Implement interactions that are contextually relevant to the virtual environment or the objects within it. For example, users may need to perform certain gestures or actions to activate environmental effects, manipulate machinery, or solve interactive puzzles.
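A multi-tool system can be reduced to two pieces: tracking which tool is active, and routing the same controller input to a different behavior per tool. This engine-agnostic Python sketch uses a hypothetical `ToolBelt` class and returns strings in place of real actions such as drawing a stroke or starting a teleport; binding `next_tool` and `on_trigger` to actual Vive controller buttons is left to your input setup.

```python
from enum import Enum


class Tool(Enum):
    PAINTBRUSH = "paintbrush"
    LASER_POINTER = "laser_pointer"
    TELEPORTER = "teleporter"


# Placeholder behaviors: each returns a description instead of acting on a scene
TOOL_ACTIONS = {
    Tool.PAINTBRUSH: lambda target: f"paint stroke at {target}",
    Tool.LASER_POINTER: lambda target: f"select {target}",
    Tool.TELEPORTER: lambda target: f"teleport to {target}",
}


class ToolBelt:
    """Keeps track of the active tool and cycles through the available ones."""

    def __init__(self, tools):
        self._tools = list(tools)
        self._index = 0

    @property
    def active(self) -> Tool:
        return self._tools[self._index]

    def next_tool(self) -> Tool:
        # Wrap around so repeated presses of a "switch tool" button keep cycling
        self._index = (self._index + 1) % len(self._tools)
        return self.active

    def on_trigger(self, target) -> str:
        # The same physical trigger press means something different per tool
        return TOOL_ACTIONS[self.active](target)


belt = ToolBelt(Tool)
```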
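As a simple example of gestural interaction, the sketch below recognizes a quick left or right swipe from a short history of controller positions. It is engine-agnostic Python; the `detect_swipe` helper, the `(time, x, y, z)` sample format, the assumption that +x points to the user's right, and the distance and duration thresholds are all hypothetical and would need tuning in the headset.

```python
def detect_swipe(samples, min_distance=0.3, max_duration=0.5):
    """Classify a horizontal swipe from (time, x, y, z) controller samples.

    Returns "left", "right", or None. Distances are in metres and times in
    seconds; the default thresholds are illustrative starting points.
    """
    if len(samples) < 2:
        return None
    t_start, x_start, y_start, _ = samples[0]
    t_end, x_end, y_end, _ = samples[-1]
    if t_end - t_start > max_duration:
        return None  # too slow to count as a deliberate swipe
    dx = x_end - x_start
    dy = y_end - y_start
    if abs(dx) < min_distance or abs(dy) > abs(dx):
        return None  # too short, or more vertical than horizontal
    return "right" if dx > 0 else "left"
```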
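For speech recognition, the recognizer itself typically comes from a platform or third-party service (on Windows, for example, Unity provides `KeywordRecognizer` in `UnityEngine.Windows.Speech`); what the application adds is the mapping from recognized phrases to actions. This engine-agnostic Python sketch assumes the recognizer hands over plain text, and the `make_command_dispatcher` helper and example phrases are hypothetical.

```python
def _normalize(phrase: str) -> str:
    # Lower-case and collapse whitespace so "Open  Door " matches "open door"
    return " ".join(phrase.lower().split())


def make_command_dispatcher(commands):
    """Build a handler that runs the action mapped to a recognized phrase.

    `commands` maps phrases to zero-argument callables.
    """
    table = {_normalize(phrase): action for phrase, action in commands.items()}

    def handle(recognized_text: str) -> bool:
        action = table.get(_normalize(recognized_text))
        if action is None:
            return False  # unknown phrase: ignore it or show the user a hint
        action()
        return True

    return handle


events = []
handle_speech = make_command_dispatcher({
    "Open Door": lambda: events.append("door opened"),
    "lights on": lambda: events.append("lights on"),
})
```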
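One way to make weight noticeable during physics-based interaction is to pull a held object toward the hand with a spring-damper force instead of snapping it to the hand, so that heavier objects accelerate more slowly and lag behind fast motions. The sketch below is engine-agnostic Python using semi-implicit Euler integration; the `follow_hand` function and its stiffness and damping values are hypothetical, and in Unity you would more likely apply the equivalent force to a `Rigidbody` and let the physics engine integrate it.

```python
def follow_hand(position, velocity, hand, mass, dt, stiffness=200.0, damping=20.0):
    """Advance a held object one step toward the hand with a spring-damper force.

    Because acceleration is force / mass, a heavier object responds more slowly
    to the same hand motion. Vectors are (x, y, z) tuples; returns the new
    (position, velocity).
    """
    new_position, new_velocity = [], []
    for x, v, target in zip(position, velocity, hand):
        force = stiffness * (target - x) - damping * v  # spring toward hand, damped
        v = v + (force / mass) * dt                     # a = F / m
        new_velocity.append(v)
        new_position.append(x + v * dt)                 # semi-implicit Euler: use new v
    return tuple(new_position), tuple(new_velocity)


# Compare the first step for a light and a heavy object pulled toward the same hand
start, rest, hand = (0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
light_pos, _ = follow_hand(start, rest, hand, mass=1.0, dt=0.01)
heavy_pos, _ = follow_hand(start, rest, hand, mass=5.0, dt=0.01)
```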

By employing advanced interaction techniques with the HTC Vive in Unity, you can create immersive and engaging VR experiences that push the boundaries of user interaction. Experiment with these techniques and combine them creatively to deliver unique and captivating experiences for your users.

Tips and Tricks for Interacting with Items in Unity

Interacting with items in Unity using the HTC Vive can be a complex process that requires careful consideration and fine-tuning. Here are some tips and tricks to help you optimize and enhance your item interaction mechanics:

- Iterate and Refine: Fine-tune your item interaction mechanics through iteration and testing. Gather user feedback and make adjustments to ensure a seamless and enjoyable experience.

- Balance Realism and Ease of Use: Realism is valuable, but it should not come at the cost of comfort or clarity. When the two conflict, favor the interaction that is easier and more intuitive to use.

- Implement Haptic Feedback: Leverage the haptic feedback of the HTC Vive controllers to give users tactile confirmation during interactions. Use short vibrations or pulses for events such as grabbing an object, colliding with a surface, or pressing a button to strengthen immersion.

- Consider Accessibility: Account for users with different physical abilities. Provide options for adjusting interaction parameters or alternative input methods to accommodate a wide range of users.

- Optimize Performance: Use performance optimization techniques, such as object pooling and simple collider shapes instead of detailed mesh colliders where possible, to keep item interactions smooth and responsive and to maintain a stable frame rate throughout your VR experience.

- Integrate Audio Feedback: Use sound effects and audio cues to provide feedback during item interactions. Incorporate audio signals for successful actions or interactions to reinforce the user’s sense of accomplishment.

- Implement Contextual Interactions: Make interactions contextually relevant to the virtual environment. Consider the placement, appearance, and behavior of items to ensure consistency and logical interactions within the VR experience.

- Test Interactions in VR: Regularly test your item interactions in the headset itself to accurately assess how they feel and respond. This lets you identify and address issues that only appear during real-world use.

- Provide Visual Cues: Use visual cues, such as highlighting interactable objects or displaying instructional tooltips, to guide users and help them understand the available interaction options.

- Consider Motion Sickness: Take motion sickness into account when designing item interactions. Minimize abrupt and jerky movements, and provide comfort settings, such as snap turning as an alternative to smooth turning, that let users adjust interaction parameters to their own tolerance.

- Optimize Grabbing and Releasing: Refine the grabbing and releasing mechanics of objects to ensure smooth and natural interactions. Consider factors such as hand positions, object rotation, and collision responses to create realistic and intuitive interactions.
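Haptic feedback is often more convincing when its strength tracks the event that caused it. This sketch maps an impact speed to a short pulse with a normalized amplitude; it is engine-agnostic Python, and the `impact_pulse` helper, speed ceiling, and duration are hypothetical. Playing the pulse is left to your input layer; in Unity's XR input system, for example, `InputDevice.SendHapticImpulse` takes a channel, an amplitude between 0 and 1, and a duration in seconds.

```python
def impact_pulse(impact_speed, max_speed=3.0, min_amplitude=0.1, duration=0.05):
    """Map a collision speed (m/s) to a (duration_seconds, amplitude) pulse.

    Amplitude grows linearly with speed and is clamped to [min_amplitude, 1.0],
    so gentle touches are still felt and hard impacts never exceed full strength.
    """
    if impact_speed <= 0.0:
        return (0.0, 0.0)  # no contact speed: no pulse
    amplitude = min(impact_speed / max_speed, 1.0)
    return (duration, max(amplitude, min_amplitude))
```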
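A common visual cue is to highlight the closest object the hand could grab right now. This engine-agnostic Python sketch picks that object from a dictionary of positions; the `pick_highlight` helper and the 0.15 m reach radius are hypothetical, and exposing the radius as a user setting can also serve the accessibility tip above.

```python
import math


def pick_highlight(hand, interactables, reach=0.15):
    """Return the name of the closest interactable within `reach` metres, or None.

    `hand` is an (x, y, z) position and `interactables` maps names to positions.
    """
    best_name, best_distance = None, reach
    for name, position in interactables.items():
        distance = math.dist(hand, position)  # Euclidean distance (Python 3.8+)
        if distance <= best_distance:
            best_name, best_distance = name, distance
    return best_name
```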
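Snap turning is a widely used comfort setting: instead of rotating the view smoothly, each flick of the thumbstick or trackpad turns the user by a fixed angle. This engine-agnostic Python sketch shows the logic, including re-arming only after the input returns to center so that holding the input does not rotate the user every frame; the `SnapTurner` class, the 30 degree step, and the 0.7 dead zone are hypothetical values users could adjust.

```python
class SnapTurner:
    """Comfort-oriented snap turning driven by a horizontal input axis in [-1, 1]."""

    def __init__(self, step_degrees=30.0, dead_zone=0.7):
        self.step_degrees = step_degrees  # user-adjustable turn size
        self.dead_zone = dead_zone        # how far the input must move to count
        self._armed = True                # True once the input has returned to center

    def update(self, yaw_degrees, axis_x):
        """Return the new yaw for this frame given the current input value."""
        if abs(axis_x) < self.dead_zone:
            self._armed = True            # input released: allow the next snap
            return yaw_degrees
        if not self._armed:
            return yaw_degrees            # still held from the last snap: no turn
        self._armed = False
        step = self.step_degrees if axis_x > 0 else -self.step_degrees
        return (yaw_degrees + step) % 360.0
```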
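For grabbing and releasing, one detail that strongly affects feel is the velocity given to an object when it is let go. Using only the last frame's motion tends to amplify tracking jitter, so this engine-agnostic Python sketch averages over a short window of recent hand samples; the `release_velocity` helper, the `(time, x, y, z)` sample format, and the five-sample window are hypothetical.

```python
def release_velocity(samples, window=5):
    """Estimate throw velocity from the most recent (time, x, y, z) hand samples.

    Uses the displacement across the last `window` samples divided by the
    elapsed time, i.e. the average velocity over that window.
    """
    recent = samples[-window:]
    if len(recent) < 2:
        return (0.0, 0.0, 0.0)
    t_start, *p_start = recent[0]
    t_end, *p_end = recent[-1]
    elapsed = t_end - t_start
    if elapsed <= 0.0:
        return (0.0, 0.0, 0.0)  # bad timestamps: release without velocity
    return tuple((b - a) / elapsed for a, b in zip(p_start, p_end))


# Hand moving at +2 m/s in x and -1 m/s in z, sampled every 10 ms
hand_samples = [(i * 0.01, i * 0.02, 0.0, -i * 0.01) for i in range(10)]
throw = release_velocity(hand_samples)
```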

By applying these tips and tricks to your item interaction mechanics in Unity with the HTC Vive, you can create a more immersive, intuitive, and enjoyable virtual reality experience. Regular testing, performance optimization, and attention to user feedback are key to refining and perfecting your item interaction mechanics.