What Is Labeled Data?

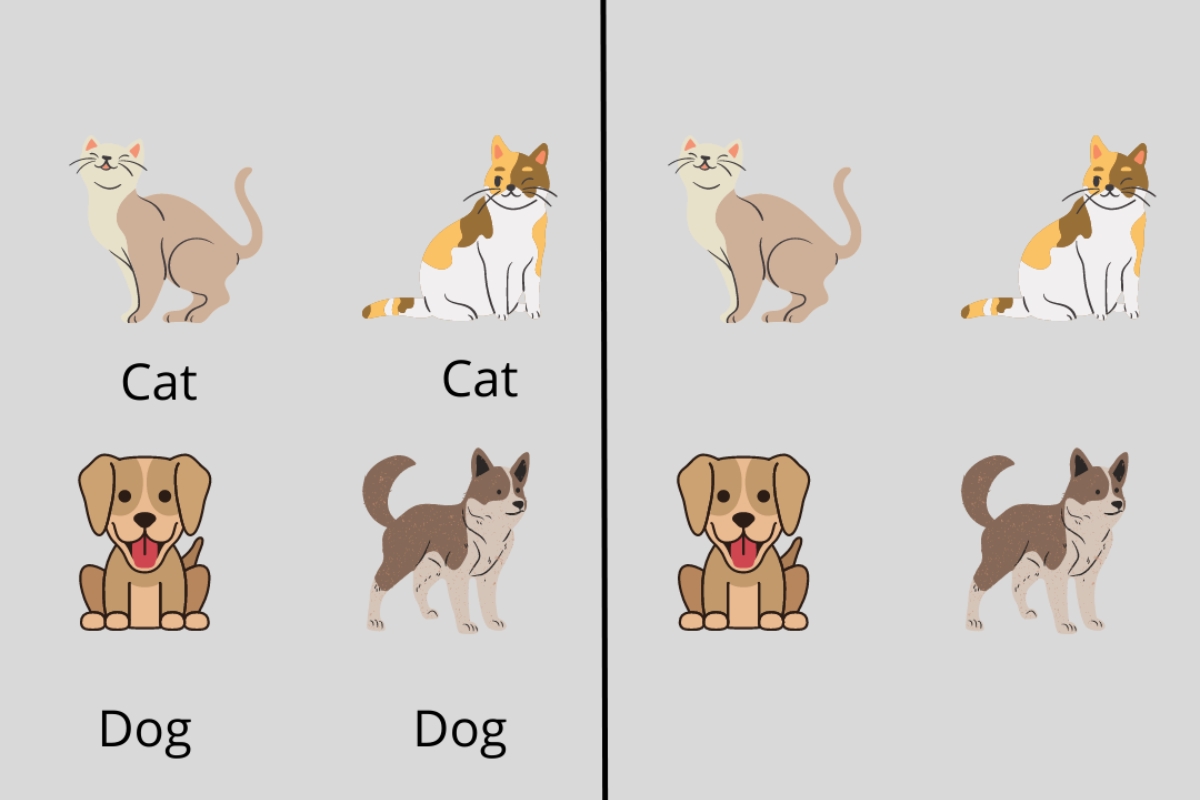

Labeled data is a fundamental concept in machine learning that refers to data that has been assigned a specific label or category. In the context of supervised learning, labeled data consists of input data, typically in the form of features or attributes, which are accompanied by corresponding output labels or target values. These labels serve as the ground truth or reference for the machine learning algorithm to learn and make predictions.

For example, in a binary classification task where the goal is to predict whether an email is spam or not, the labeled data would include a collection of emails along with their corresponding labels, indicating whether each email is spam or not. Similarly, in a regression problem to predict housing prices based on various features, the labeled data would contain the features of each house along with their respective sale prices.

Labeled data plays a crucial role in supervised learning as it provides the necessary information for the algorithm to learn patterns and make accurate predictions on new, unseen data. By observing the relationship between the input features and their corresponding labels, the algorithm can infer the underlying patterns and generalize its knowledge to make predictions on new data instances.

Creating labeled data typically requires human effort: domain experts or annotators manually assign labels to the data. This process can be time-consuming and resource-intensive, especially for large datasets. However, labeled data is highly valuable because it is the foundation for training supervised machine learning models, enabling them to make accurate predictions in real-world applications.

What Is Unlabeled Data?

Unlabeled data, as the name suggests, refers to data that does not have explicit labels or categories assigned to it. Unlike labeled data, which consists of both input features and corresponding output labels, unlabeled data only contains the input features without any associated labels.
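
As a rough illustration of the difference, the sketch below represents the same kind of record with and without labels. The field names (`num_links`, `has_attachment`) and the values are made up for the example and do not come from any real dataset.

```python
# Labeled data: each record pairs input features with a target label.
# (Hypothetical toy records; field names are illustrative only.)
labeled_emails = [
    ({"num_links": 7, "has_attachment": False}, "spam"),
    ({"num_links": 0, "has_attachment": True}, "not_spam"),
]

# Unlabeled data: the same kind of features, but no target attached.
unlabeled_emails = [
    {"num_links": 3, "has_attachment": False},
    {"num_links": 1, "has_attachment": True},
]

# Supervised algorithms usually consume features and labels separately.
features = [x for x, _ in labeled_emails]
labels = [y for _, y in labeled_emails]
```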

In many real-world scenarios, obtaining labeled data can be challenging or costly. It may require manual annotation by experts, which may not always be feasible due to time, budget, or privacy constraints. Unlabeled data, on the other hand, is often abundant and readily available, making it a valuable resource for machine learning tasks.

Although unlabeled data lacks explicit labels, it still contains valuable information and patterns that can be extracted and utilized. Unsupervised learning algorithms are specifically designed to analyze and uncover these inherent patterns within unlabeled data. These algorithms aim to find meaningful clusters, associations, or structures in the data without any predefined labels or knowledge of the target variable.

Unlabeled data can be used for various purposes, such as feature extraction, anomaly detection, or discovering hidden patterns. By analyzing the relationships and similarities between the data points, unsupervised learning algorithms can segment the data into groups or clusters based on their inherent characteristics.

For example, in customer segmentation, where the goal is to group customers based on their behaviors or preferences, unsupervised learning algorithms can process unlabeled data representing customer attributes and identify distinct clusters that share similar attributes. This can provide valuable insights for targeted marketing campaigns or personalized recommendations.
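
One way to picture this is with k-means, a widely used clustering algorithm (the text above does not prescribe a specific one). The sketch assumes two hypothetical customer attributes, visits per month and average spend, and starting centroids chosen by the caller; the function name `kmeans` and the data are illustrative.

```python
import math

def kmeans(points, centroids, iterations=10):
    """Lloyd's algorithm with caller-supplied starting centroids."""
    centroids = list(centroids)
    assignments = []
    for _ in range(iterations):
        # Assignment step: index of the nearest centroid for every point.
        assignments = [
            min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(len(centroids)):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return assignments, centroids

# Hypothetical customers: (visits per month, average spend). No labels involved.
customers = [(1, 20), (2, 25), (1, 22), (10, 200), (12, 220), (11, 210)]
segments, centers = kmeans(customers, centroids=[customers[0], customers[3]])
```

Here `segments` holds a cluster index for each customer. In practice the features would usually be scaled first so that one attribute does not dominate the distance.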

In addition, unlabeled data is often used to pre-train models. In this approach, a neural network is trained on a large amount of unlabeled data to learn general features or representations of the input. The pre-trained model can then be fine-tuned on a smaller labeled dataset for a specific task, such as image classification or text classification.

Overall, while unlabeled data may lack explicit labels, it still holds valuable information that can be leveraged through unsupervised learning techniques to extract patterns, gain insights, and improve the performance of machine learning models.

Difference Between Labeled and Unlabeled Data

There are several key differences between labeled and unlabeled data in the context of machine learning. These differences lie in the presence of labels, the level of human involvement, and the specific use cases for each type of data. Understanding these distinctions is crucial for effectively utilizing labeled and unlabeled data in machine learning tasks.

Presence of Labels: The primary difference between labeled and unlabeled data is the presence or absence of labels. Labeled data contains explicit annotations or categories assigned to the data instances, providing a clear indication of the desired output or target variable. On the other hand, unlabeled data lacks these explicit labels and requires algorithms to uncover underlying patterns or structures.

Level of Human Involvement: Creating labeled data typically requires human effort, with domain experts or annotators manually assigning labels to the data instances. This process requires expertise and can be time-consuming and resource-intensive, especially for large datasets. In contrast, unlabeled data does not require manual labeling, as it consists solely of input features without any associated labels.

Supervised vs. Unsupervised Learning: Labeled data is primarily used in supervised learning, where the goal is to train a model to predict or classify new, unseen data based on the provided labels. In supervised learning, the labeled data serves as the ground truth or reference for the model to learn and make accurate predictions. Unlabeled data, on the other hand, is utilized in unsupervised learning, where the algorithm aims to uncover patterns or structures within the data without any predefined labels. Unsupervised learning algorithms analyze the relationships and similarities between the data points to discover meaningful clusters or associations, providing valuable insights for various applications.

Availability and Cost: Labeled data can be valuable but often comes at a cost, as it requires manual annotation or expert knowledge. Obtaining large amounts of labeled data may be challenging or expensive, especially in certain domains. In contrast, unlabeled data is often readily available and abundant, as there is no requirement for explicit labeling. Unlabeled data can be obtained from various sources, such as sensor data, web scraping, or customer behavior logs.

Use Cases: Labeled data is commonly used for tasks that require accurate predictions or classifications, such as sentiment analysis, image recognition, or fraud detection. Unlabeled data, on the other hand, is utilized for tasks such as data exploration, feature extraction, anomaly detection, or clustering. Unsupervised learning algorithms leverage the inherent patterns and structures within unlabeled data to gain insights or improve the performance of machine learning models.

Understanding the differences between labeled and unlabeled data is essential for selecting appropriate machine learning approaches and utilizing the right data for specific tasks. Both types of data have their unique advantages and applications, and a combination of labeled and unlabeled data can often lead to improved model performance and insights in various machine learning applications.

The Importance of Labeled and Unlabeled Data in Machine Learning

Labeled and unlabeled data play crucial roles in machine learning, each contributing to different aspects of the learning process. These types of data provide valuable information for training models, extracting patterns, and making accurate predictions in various applications. Understanding the importance of labeled and unlabeled data is essential for developing effective machine learning solutions.

Labeled Data: Labeled data serves as the foundation for building and training supervised learning models. By providing the input features along with the corresponding labels or target values, labeled data allows machine learning algorithms to learn patterns and relationships. Supervised learning models leverage the labeled data to make accurate predictions on new, unseen data instances. Without labeled data, the models would not have a reference for learning and would not be able to generalize to new data effectively.

Labeled data is essential for a wide range of tasks, such as classification, regression, sentiment analysis, and fraud detection. It lets models learn the relationship between the input features and the desired output, so that they can make informed decisions and predictions. Acquiring labeled data often requires domain experts or annotators to assign labels manually, which makes it a valuable resource that can be time-consuming and costly to obtain.

Unlabeled Data: While unlabeled data lacks explicit labels, it is still valuable in machine learning. Unsupervised learning algorithms make use of unlabeled data to discover underlying patterns or structures within the data. These algorithms analyze the relationships, similarities, and distributions of the input features to find clusters, associations, or dependencies. Unlabeled data can be used for various tasks, such as data exploration, feature extraction, and anomaly detection. It provides insights into the inherent characteristics of the data and helps uncover hidden patterns or relationships that may not be apparent from labeled data alone.

Furthermore, unlabeled data is often abundant and readily available, making it a valuable resource, especially in scenarios where obtaining labeled data may be challenging or expensive. Unlabeled data can be used for pre-training models, where a neural network is initially trained on a large amount of unlabeled data to learn general features or representations of the input data. The pre-trained model can then be further fine-tuned using a smaller labeled dataset for specific tasks, improving the model’s performance.

Both labeled and unlabeled data have their unique contributions to machine learning. Labeled data provides the necessary information for models to learn and make accurate predictions, while unlabeled data helps uncover valuable insights and patterns. Leveraging the power of both types of data allows for more comprehensive learning, leading to the development of robust and accurate machine learning solutions.

Labeled Data in Supervised Learning

Labeled data plays a central role in supervised learning, a machine learning approach that aims to build models capable of making accurate predictions or classifications based on the input data. In supervised learning, the labeled data consists of input features or attributes along with their corresponding output labels or target values.

The labeled data provides the ground truth or reference for the machine learning algorithm to learn and make predictions. By observing the patterns and relationships between the input features and their corresponding labels, the algorithm can infer the underlying rules and generalize its knowledge to make predictions on new, unseen data instances.

In supervised learning, the labeled data is typically split into two subsets: a training set and a test set. The training set is used to fit the model, so that the algorithm learns the relationship between the input features and their corresponding labels. The trained model is then evaluated on the test set, which is held out during training and is therefore unseen by the model, to estimate how accurately it will predict on new data.
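
A minimal sketch of such a split, using only the standard library. The helper name `split_labeled_data`, the 80/20 ratio, and the fixed seed are assumptions made for the example, not a recommendation.

```python
import random

def split_labeled_data(examples, test_fraction=0.2, seed=0):
    """Shuffle a copy of the labeled examples and hold out a fraction for testing."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical labeled examples: (feature, label) pairs.
examples = [(i, i % 2) for i in range(10)]
train_set, test_set = split_labeled_data(examples)
```

Shuffling before splitting guards against any ordering in the original data leaking into the evaluation.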

Acquiring labeled data usually requires human effort. Domain experts or annotators manually assign the labels to the data instances based on their expertise or predefined criteria. This process can be time-consuming and resource-intensive, especially for large datasets. However, the labeled data serves as a valuable resource for building and training machine learning models.

Labeled data is essential for a wide range of supervised learning tasks, including classification and regression. In classification problems, the goal is to assign input samples to predefined categories or classes. For example, given a dataset of email messages, the labeled data would include both the email content as features and the corresponding labels indicating whether each email is spam or not. The algorithm can learn from this labeled data to classify new, unseen emails as spam or non-spam based on the learned patterns and relationships.

In regression tasks, the objective is to predict a continuous numerical output based on the input features. For instance, in a housing price prediction task, the labeled data would consist of features such as location, size, and number of bedrooms, along with the corresponding sale prices. The model can learn from this labeled data to make predictions on new houses based on their features, estimating their sale prices.
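
To make the regression case concrete, the sketch below fits a straight line to a single feature with ordinary least squares. The sizes and prices are invented, and a real housing model would use many more features and examples.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x

# Hypothetical labeled data: house size (features) and sale price (labels).
sizes_m2 = [50, 80, 100, 120]
prices_k = [110, 170, 210, 250]

slope, intercept = fit_line(sizes_m2, prices_k)
predicted_price_k = slope * 90 + intercept  # estimate for an unseen 90 m2 house
```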

Labeled data enables supervised learning algorithms to learn from the provided examples and generalize that knowledge to make accurate predictions on unseen data. It acts as a valuable guide for the model, allowing it to understand the relationship between the input features and the desired output. By leveraging labeled data, supervised learning models can provide insights, solve complex problems, and make informed decisions in various domains and applications.

Unlabeled Data in Unsupervised Learning

Unlabeled data plays a significant role in unsupervised learning, a machine learning approach where the goal is to uncover patterns, structures, or relationships within the data without the presence of explicit labels. In unsupervised learning, the unlabeled data comprises only the input features or attributes without any associated target values.

Unsupervised learning algorithms leverage unlabeled data to analyze the relationships, similarities, and distributions within the data. These algorithms aim to discover hidden patterns and structures, cluster similar data points, or find associations among the features.

Unlabeled data is highly valuable as it allows for exploration of the underlying characteristics of the data. It provides insights into the intrinsic properties and structures that may not be apparent from labeled data alone. By uncovering these patterns, unsupervised learning algorithms can gain a deeper understanding of the data and extract valuable information.

Clustering is one of the most common applications of unsupervised learning. Clustering algorithms group similar data points together based on their intrinsic characteristics. By examining the patterns within unlabeled data, these algorithms can identify distinct clusters or groups, revealing natural divisions in the data. Clustering has various applications, such as customer segmentation, social network analysis, and image segmentation.

Another application of unsupervised learning is anomaly detection. Anomalies are data points that significantly differ from the majority of the data. By analyzing the patterns within unlabeled data, unsupervised learning algorithms can identify these outliers or anomalies, which may indicate rare events, fraud attempts, or abnormal behavior in the data. Anomaly detection has applications in areas such as fraud detection, cybersecurity, and system monitoring.
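
As one simple example of label-free anomaly detection, the sketch below flags values that lie far from the median, measured in units of the median absolute deviation (MAD). The 0.6745 scaling and the 3.5 cutoff follow a common rule of thumb for this "modified z-score"; the transaction amounts are invented.

```python
import statistics

def mad_outliers(values, threshold=3.5):
    """Return values whose modified z-score exceeds the threshold."""
    median = statistics.median(values)
    deviations = [abs(v - median) for v in values]
    mad = statistics.median(deviations)
    if mad == 0:
        return []  # sketch only: no spread to measure against
    return [v for v in values if 0.6745 * abs(v - median) / mad > threshold]

# Hypothetical transaction amounts with one unusual value.
amounts = [10, 11, 9, 10, 12, 10, 95]
suspicious = mad_outliers(amounts)
```

The median and MAD are used instead of the mean and standard deviation because a single extreme value distorts them far less.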

Unsupervised learning is also employed for dimensionality reduction and feature extraction. Unlabeled data can have high dimensionality, with a large number of features. Unsupervised algorithms can identify the most informative features or reduce the dimensionality by transforming the data into a lower-dimensional representation. This simplifies the data while retaining the most relevant information, which makes downstream tasks such as visualization, classification, or regression easier.

Furthermore, unsupervised learning algorithms can reveal underlying structures, similarities, and relationships between entities in various domains. They provide a foundation for exploratory data analysis, enabling researchers and practitioners to gain insights, discover patterns, and generate hypotheses.

Unlabeled data is often abundant and readily accessible, making it an invaluable resource in unsupervised learning. Its analysis and utilization through unsupervised learning algorithms provide a deeper understanding of the data’s intrinsic characteristics, leading to valuable insights and discoveries.

Semi-Supervised Learning: Combining Labeled and Unlabeled Data

Semi-supervised learning is a machine learning approach that combines both labeled and unlabeled data to improve the performance of models. In many real-world scenarios, obtaining a large amount of labeled data can be costly or time-consuming. Semi-supervised learning addresses this challenge by leveraging the abundance of unlabeled data alongside a limited set of labeled data.

The underlying idea in semi-supervised learning is that unlabeled data contains valuable information and can assist in learning more robust and accurate models. By combining the labeled and unlabeled data, the learning algorithm can take advantage of the patterns and relationships present in the unlabeled data to generalize better, especially in cases where the labeled data may be limited or incomplete.

A common semi-supervised workflow starts by training a model on the labeled data, as in supervised learning. This initial model is then used to make predictions on the unlabeled data. These predictions are treated as pseudo-labels and are used to refine the model further. Self-training and co-training follow this pattern, while generative models offer a different way to exploit the unlabeled data.

In self-training, the model makes predictions on the unlabeled data and assigns pseudo-labels based on its confidence in the predictions. The high confidence predictions are then treated as additional labeled data and combined with the original labeled data for subsequent training iterations. This iterative process continues until the model converges or reaches a stopping criterion.
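
The loop described above can be sketched with a deliberately tiny classifier. Everything here is an assumption for illustration: the one-dimensional data, the nearest-class-mean model, the margin-based confidence score, and the 0.5 threshold.

```python
def class_means(labeled):
    """Mean feature value per class."""
    totals = {}
    for x, y in labeled:
        total, count = totals.get(y, (0.0, 0))
        totals[y] = (total + x, count + 1)
    return {y: total / count for y, (total, count) in totals.items()}

def predict_with_confidence(means, x):
    """Nearest class mean, with a 0..1 margin as confidence (needs two or more classes)."""
    ranked = sorted(means, key=lambda y: abs(x - means[y]))
    d1, d2 = abs(x - means[ranked[0]]), abs(x - means[ranked[1]])
    confidence = (d2 - d1) / (d1 + d2) if d1 + d2 else 0.0
    return ranked[0], confidence

def self_train(labeled, unlabeled, threshold=0.5, max_rounds=10):
    labeled, unlabeled = list(labeled), list(unlabeled)
    for _ in range(max_rounds):
        means = class_means(labeled)  # retrain on the current labeled pool
        confident, remaining = [], []
        for x in unlabeled:
            label, conf = predict_with_confidence(means, x)
            (confident if conf >= threshold else remaining).append((x, label))
        if not confident:  # stopping criterion: nothing new to add
            break
        labeled += confident  # pseudo-labels join the training data
        unlabeled = [x for x, _ in remaining]
    return labeled, unlabeled

final_labeled, still_unlabeled = self_train(
    labeled=[(1.0, "a"), (9.0, "b")],
    unlabeled=[1.5, 2.0, 8.0, 8.5, 5.2],
)
```

The ambiguous point near the middle never clears the threshold, so it stays unlabeled. A confident but wrong pseudo-label would be reinforced in later rounds, which is why the threshold matters.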

Co-training, on the other hand, trains two or more models on the same labeled data, with each model seeing a different subset of the features, often called a view. The models then make predictions on the unlabeled data, and each model's most confident predictions are used to label examples for the other model's training set. In this way the models teach one another, and the labeled dataset grows into a larger and more diverse training set over successive iterations.

Generative models, such as generative adversarial networks (GANs) or variational autoencoders (VAEs), can also be used in semi-supervised learning. These models learn the underlying distribution of the data from the unlabeled examples. Depending on the approach, the representations they learn are shared with a classifier trained on the labeled data, or synthetic examples that resemble the original data are used as an additional training signal. In both cases the aim is to give the model a better understanding of the data distribution and improve its performance.

Semi-supervised learning has shown promising results in various domains, including natural language processing, image classification, and speech recognition. By utilizing both labeled and unlabeled data, semi-supervised learning allows for more efficient use of available resources and can lead to improved model performance, particularly in scenarios where obtaining large amounts of labeled data is challenging.

Overall, semi-supervised learning provides an effective approach to leverage the abundance of unlabeled data alongside a limited set of labeled data. By combining the two, models can achieve better generalization and make more accurate predictions, addressing the limitations and constraints of fully supervised learning.

Techniques for Labeling Data

Labeling data is a vital step in supervised learning, as it provides the necessary annotations or categories that guide the machine learning algorithms during training. While manual labeling by domain experts is a common approach, it can be time-consuming and expensive, especially for large datasets. Thus, alternative techniques have been developed to alleviate the labeling burden and make the process more efficient.

Human Annotation: This traditional approach involves human annotators manually assigning labels to the data instances. Domain experts or trained annotators carefully review the data and determine the appropriate labels based on specific guidelines or predefined criteria. Although careful manual labeling can yield high-quality annotations, it is labor-intensive and costly, particularly for large datasets. Crowd-sourcing platforms like Amazon Mechanical Turk are often used to distribute the labeling tasks among multiple annotators simultaneously, speeding up the process.

Active Learning: This technique leverages the iterative process of training a model and selectively labeling additional samples to improve its performance. Initially, a small set of labeled data is used to train the model. The model then identifies data instances where it is uncertain or has low confidence in its predictions. These instances are then presented to human annotators for labeling, focusing on the most informative samples that would enhance the model’s knowledge and reduce uncertainty. By iteratively repeating this process, the model gradually improves, and the need for extensive manual labeling is reduced.
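
The selection step can be sketched with uncertainty sampling, one of several possible query strategies. The sketch assumes a binary classifier that outputs a probability for the positive class; the document IDs and probabilities are hypothetical.

```python
def select_for_annotation(probabilities, batch_size=2):
    """Pick the items whose predicted probability is closest to 0.5."""
    by_uncertainty = sorted(
        probabilities, key=lambda item: abs(probabilities[item] - 0.5)
    )
    return by_uncertainty[:batch_size]

# Hypothetical model outputs on unlabeled items: P(positive) per item.
model_probabilities = {"doc_a": 0.97, "doc_b": 0.52, "doc_c": 0.10, "doc_d": 0.41}
to_label = select_for_annotation(model_probabilities)
```

The selected items would be sent to annotators, added to the labeled set, and the model retrained before the next round.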

Transfer Learning and Pre-trained Models: Transfer learning is a technique where models trained on one task are leveraged to perform well on related but different tasks. Instead of labeling data from scratch, transfer learning allows models to utilize pre-trained weights and knowledge acquired from a different but relevant dataset. This significantly reduces the need for large amounts of labeled data. The pre-trained models are fine-tuned or adapted to the target task using a smaller labeled dataset. This approach is particularly useful when the target dataset is limited or when there are similarities between the source and target tasks.

Weak Supervision: Weak supervision is an approach where noisy or imperfect labels are used to train models. Rather than relying on a single expert or annotator, weak supervision combines multiple sources of noisy labels, heuristics, or rule-based methods to generate labels. For example, in text classification, weak supervision can involve using keyword-based rules or distant supervision by utilizing readily available metadata as proxy labels. While weak supervision may introduce noise or inaccuracies in the labels, it allows for a larger amount of labeled data to be generated in a shorter time frame.
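
A small sketch of the keyword-rule idea: several noisy rules each vote or abstain, and a majority vote produces the label. The rules, the label names, and the tie-handling choice are all assumptions for illustration; real systems often weight rules by estimated accuracy instead of counting votes equally.

```python
from collections import Counter

ABSTAIN = None

# Hypothetical labeling rules; each returns a label or abstains.
def rule_contains_free(text):
    return "spam" if "free" in text.lower() else ABSTAIN

def rule_many_exclamations(text):
    return "spam" if text.count("!") >= 3 else ABSTAIN

def rule_mentions_meeting(text):
    return "not_spam" if "meeting" in text.lower() else ABSTAIN

RULES = [rule_contains_free, rule_many_exclamations, rule_mentions_meeting]

def weak_label(text):
    """Majority vote over the rules that did not abstain; ties yield no label."""
    votes = [vote for vote in (rule(text) for rule in RULES) if vote is not ABSTAIN]
    if not votes:
        return ABSTAIN
    (top, top_count), *rest = Counter(votes).most_common()
    if rest and rest[0][1] == top_count:
        return ABSTAIN  # conflicting evidence: leave the example unlabeled
    return top
```

Examples that every rule abstains on, or where the rules disagree evenly, simply stay unlabeled.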

Multi-instance Learning: In certain scenarios, labeling individual instances is challenging or costly, but labels can be assigned to groups of instances, called bags. Multi-instance learning is a technique where instances are grouped into bags, and each bag is assigned a single label. In the standard formulation, a bag is positive if at least one of its instances is positive. This approach is commonly used in tasks such as object recognition, drug discovery, or anomaly detection. By labeling entire bags rather than individual instances, the reliance on costly instance-level annotations is reduced.
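
Under the standard multi-instance assumption, a bag is positive when at least one of its instances is positive, which is often approximated by taking the maximum instance score. The sketch below assumes per-instance scores already exist (for example from some instance-level model); the scores and the threshold are hypothetical.

```python
def bag_score(instance_scores):
    """A bag is as positive as its most positive instance."""
    return max(instance_scores)

def predict_bag(instance_scores, threshold=0.5):
    return bag_score(instance_scores) >= threshold

# Hypothetical bags: e.g. image patches scored for "contains the object".
bag_with_object = [0.1, 0.2, 0.9]
bag_without_object = [0.1, 0.3]
```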

These labeling techniques help streamline the process of obtaining labeled data and make it more efficient. By leveraging the expertise of human annotators, incorporating active learning, utilizing transfer learning and pre-trained models, and embracing weak supervision or multi-instance learning, the labeling process becomes more cost-effective and scalable. These techniques significantly aid machine learning tasks, reducing the reliance on extensive manual annotations and enabling the development of accurate models.

Challenges with Labeling Data

Labeling data is a critical and often challenging aspect of supervised learning. While labeled data is essential for training accurate machine learning models, there are various challenges associated with the labeling process that can impact the quality, efficiency, and effectiveness of the resulting models.

Subjectivity and Inter-annotator Agreement: Labeling data can involve subjective decisions, especially in cases where there is ambiguity or multiple interpretations. Different annotators may assign different labels to the same instance, resulting in low inter-annotator agreement. This subjectivity can introduce noise and inconsistencies into the labeled data, potentially affecting the performance of the models.
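
Agreement between two annotators is often quantified with Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is a plain implementation for two annotators; the label sequences are invented.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators on the same items."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Probability that both pick the same class if they labeled independently.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # both annotators used a single identical class
    return (observed - expected) / (1 - expected)

# Hypothetical annotations of the same ten items by two annotators.
annotator_1 = ["pos"] * 5 + ["neg"] * 5
annotator_2 = ["pos"] * 4 + ["neg", "pos"] + ["neg"] * 4
kappa = cohens_kappa(annotator_1, annotator_2)
```

Here the annotators agree on 8 of 10 items, but with balanced classes half of that agreement is expected by chance, so kappa comes out at roughly 0.6. A value of 1 means perfect agreement and 0 means agreement no better than chance.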

Expertise and Training: Labeling data often requires the involvement of domain experts or trained annotators who possess the necessary knowledge and understanding of the task. Ensuring the availability of skilled annotators and providing adequate training to maintain consistency and accuracy can be challenging, particularly in specialized or niche domains.

Time and Resource Constraints: The process of manual labeling can be time-consuming and resource-intensive, especially for large datasets. Annotators need sufficient time to review, analyze, and assign labels to the data accurately. This time requirement can become a bottleneck, especially when dealing with real-time or high-volume data streams.

Cost and Scalability: Acquiring labeled data can be costly, particularly when it involves paying skilled annotators or outsourcing the labeling tasks. The cost can be prohibitive, especially for resource-constrained organizations or projects. Scaling the labeling process to handle large datasets can also pose challenges, as it requires additional resources and infrastructure.

Labeling Bias: Human annotators can inadvertently introduce biases based on their background, experiences, or personal beliefs. These biases can manifest in the labeled data and subsequently affect the behavior and performance of the machine learning models. Addressing and mitigating labeling biases requires careful consideration and monitoring during the annotation process.

Data Imbalance: In some applications, labeled data can suffer from class imbalance, where the distribution of samples across different classes is highly skewed. This imbalance can impact the model’s ability to learn and make accurate predictions, as it may heavily favor the majority class and ignore the minority classes. Addressing data imbalance may require techniques such as oversampling, undersampling, or synthetic data generation.
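
Random oversampling, the simplest of these techniques, can be sketched as follows. The helper name `random_oversample`, the fixed seed, and the toy fraud data are assumptions for the example.

```python
import random
from collections import Counter

def random_oversample(examples, seed=0):
    """Duplicate randomly chosen minority examples until all classes match the largest."""
    rng = random.Random(seed)
    by_class = {}
    for features, label in examples:
        by_class.setdefault(label, []).append((features, label))
    target = max(len(group) for group in by_class.values())
    balanced = []
    for group in by_class.values():
        balanced.extend(group)
        # Sample with replacement to fill the gap (k is 0 for the largest class).
        balanced.extend(rng.choices(group, k=target - len(group)))
    return balanced

# Hypothetical imbalanced labeled data: 8 legitimate vs 2 fraudulent transactions.
transactions = [((i,), "legit") for i in range(8)] + [((100,), "fraud"), ((101,), "fraud")]
balanced = random_oversample(transactions)
class_counts = Counter(label for _, label in balanced)
```

Resampling is normally applied to the training set only, so that the test set still reflects the real class distribution.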

Quality Control: Ensuring the quality and consistency of the labeled data can be challenging, especially when multiple annotators are involved. Implementing rigorous quality control measures, such as regular feedback sessions, double-checking, or adjudication processes, is essential to maintain the integrity of the labeled dataset.

Overcoming these challenges requires careful planning, allocation of resources, and implementation of quality control measures. Employing techniques such as active learning, weak supervision, or transfer learning can also help reduce the burden of manual labeling and improve the efficiency and scalability of the process. By actively addressing these challenges, the resulting labeled data can be of higher quality, leading to more accurate and robust machine learning models.

Use Cases and Applications of Labeled and Unlabeled Data in Machine Learning

Both labeled and unlabeled data have significant applications and play crucial roles in various machine learning tasks. Understanding their use cases and applications is essential for leveraging these types of data effectively in solving real-world problems.

Labeled Data in Supervised Learning: Labeled data is fundamental for supervised learning tasks, where the goal is to train models to make accurate predictions or classifications. It is widely used in applications such as:

- Image and Object Recognition: Labeled images with corresponding object labels are used to train models for tasks such as image classification, object detection, and facial recognition.

- Natural Language Processing: Labeled text data, such as sentiment-labeled reviews or labeled text snippets, are used for tasks like sentiment analysis, named entity recognition, and text classification.

- Fraud Detection: Labeled data containing information about fraudulent and non-fraudulent activities are utilized to train models for identifying and preventing fraudulent behavior.

- Medical Diagnostics: Labeled medical data, like labeled medical images or patient records, can aid in diagnosing diseases, predicting outcomes, and improving patient care.

Unlabeled Data in Unsupervised Learning: Unlabeled data is utilized in unsupervised learning tasks, where the focus is on discovering patterns or structures within the data. Applications of unlabeled data include:

- Clustering and Customer Segmentation: Unlabeled data is used to uncover patterns or group similar data points together, enabling tasks such as customer segmentation, market analysis, and recommendation systems.

- Anomaly Detection: Unlabeled data is leveraged for identifying rare or unusual instances, helping detect anomalies in financial transactions, cybersecurity, or fault diagnosis.

- Feature Extraction: Unlabeled data is instrumental in extracting informative features or representations from complex data, aiding tasks such as image feature extraction, text embedding, and speech analysis.

- Data Exploration: Unlabeled data facilitates exploring and visualizing the inherent structures and relationships within the dataset, enabling researchers and analysts to gain insights and formulate hypotheses.

Combining Labeled and Unlabeled Data: Combining labeled and unlabeled data in semi-supervised learning allows for enhanced performance and efficiency in various applications, including:

- Text and Document Classification: Combining a small number of labeled documents with a large pool of unlabeled documents can improve the performance of text classification models, especially when labeled training data is limited.

- Image and Video Annotation: Combining labeled examples with unlabeled images or videos can aid in automating the process of annotation, reducing the manual effort required for generating large labeled datasets.

- Speech Recognition: Combining labeled speech data with unlabeled speech data can enhance the performance of speech recognition models, improving accuracy and reducing the need for extensive manual transcription.

- Drug Discovery: Combining labeled compounds with massive amounts of unlabeled chemical data can aid in drug discovery by identifying potential candidates and optimizing molecular properties.

By utilizing both labeled and unlabeled data in machine learning tasks, researchers and practitioners can harness the strengths of each type of data, leading to more accurate predictions, robust models, and insightful discoveries in a wide range of applications.