What are Data Packets?

Data packets are the fundamental building blocks of computer networks. They are small units of data that are packaged and transmitted over a network from one device to another. These packets contain not only the actual data being sent, but also additional information, such as the source and destination addresses, error-checking codes, and sequencing information.

Think of data packets as envelopes that hold pieces of a larger message. When you send a file or make an online request, the data is broken down into smaller chunks, known as packets, to efficiently travel across the network. Each packet is individually addressed and can take different routes to reach its destination. Once all the packets arrive, they are reassembled to reconstruct the original data.
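The envelope analogy can be sketched in a few lines of Python. This is a minimal illustration, not a real protocol: the `Packet` fields, the 8-byte chunk size, and the addresses are assumptions chosen to keep the example small.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    src: str        # source address (illustrative)
    dst: str        # destination address (illustrative)
    seq: int        # position of this chunk within the message
    total: int      # number of packets in the whole message
    payload: bytes  # the chunk of data carried by this packet


def packetize(message: bytes, src: str, dst: str, size: int = 8) -> list[Packet]:
    """Split a message into fixed-size chunks, each wrapped in its own Packet."""
    chunks = [message[i:i + size] for i in range(0, len(message), size)]
    return [Packet(src, dst, seq, len(chunks), chunk)
            for seq, chunk in enumerate(chunks)]


def reassemble(packets: list[Packet]) -> bytes:
    """Rebuild the message by sorting packets on their sequence number."""
    ordered = sorted(packets, key=lambda p: p.seq)
    return b"".join(p.payload for p in ordered)


message = b"Hello, packet-switched world!"
packets = packetize(message, "10.0.0.1", "10.0.0.2")

# Simulate out-of-order arrival by reversing the list before reassembly.
restored = reassemble(list(reversed(packets)))
print(restored == message)
```

Because every packet carries its own sequence number, the order of arrival does not matter to the receiver.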

Data packets are limited in size, usually ranging from a few hundred bytes to a few thousand bytes; on standard Ethernet, for example, a frame carries at most 1,500 bytes of payload. Breaking data into smaller packets lets many conversations share the same links and limits the cost of errors: if a packet is lost or corrupted during transmission, only that packet needs to be retransmitted rather than the entire message. Packets belonging to the same message can also take different paths to the destination.

These packets are transmitted using packet-switching protocols, such as the Internet Protocol (IP), which is the foundation of the Internet. IP defines the packet format and the addressing scheme: each packet carries the source and destination addresses, and routers along the way use the destination address to forward it. Other protocols, such as the Transmission Control Protocol (TCP), add reliable delivery on top of IP by establishing connections, numbering the data in sequence, detecting errors, and retransmitting lost segments.

Data packets are crucial for the efficient and reliable transmission of information in computer networks. They allow for the seamless transfer of data, regardless of the distance between devices or the complexity of the network infrastructure. By dividing data into smaller units and providing necessary information for routing and error detection, data packets make it possible for us to send emails, download files, stream videos, and engage in countless other online activities.

How are Data Packets Created?

Data packets are created through a process known as encapsulation. When data needs to be transmitted over a network, it is divided into smaller units called packets. This process involves several layers in the network stack, each playing a specific role in creating and preparing the packets for transmission.

The process of creating data packets starts at the application layer. The application layer takes the data from the user’s application, such as a web browser or email client, and breaks it into manageable chunks. These chunks are then passed down to the transport layer.

In the transport layer, the data is divided into segments, and each segment is encapsulated with the header of a transport layer protocol such as TCP or User Datagram Protocol (UDP). TCP ensures reliable delivery by establishing a connection between devices, numbering the data with sequence numbers, detecting errors with a checksum, and retransmitting anything that is lost or corrupted. UDP, on the other hand, is a connectionless protocol that carries no sequence numbers and does not retransmit; it offers less reliability but lower overhead, which suits applications like video streaming or VoIP.

Once the segments are created and encapsulated with the appropriate transport layer protocol, they are passed down to the network layer. In this layer, each segment is encapsulated in a packet by adding an Internet Protocol (IP) header that contains the source and destination IP addresses. Routers then use the destination address and their routing tables to forward the packet toward its destination.

After the packets are created at the network layer, they are passed down to the data link layer. This layer wraps each packet in a frame by adding a header and a trailer. The header contains the physical (MAC) addresses of the source and destination devices on the local link, and the trailer carries an error-checking code such as a cyclic redundancy check (CRC).

Finally, at the physical layer, the frames are converted into signals and transmitted over the network medium, such as Ethernet cables or radio waves. Once they reach the destination device, they are processed by the layers in reverse order. The data link layer checks for errors and removes the frame header and trailer, the network layer removes the IP header (reassembling any fragments), and the transport layer puts the segments back in order and hands the reconstructed data to the application.
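The layering described above can be sketched as a chain of functions, each adding or removing its own header. The header layouts here are deliberately simplified assumptions (real TCP, IP, and Ethernet headers contain many more fields), and the ports and addresses are illustrative.

```python
import struct
import zlib


def transport_encapsulate(data: bytes, src_port: int, dst_port: int, seq: int) -> bytes:
    # Toy transport header: source port, destination port, sequence number.
    return struct.pack("!HHI", src_port, dst_port, seq) + data


def network_encapsulate(segment: bytes, src_ip: bytes, dst_ip: bytes) -> bytes:
    # Toy network header: 4-byte source and destination addresses.
    return src_ip + dst_ip + segment


def link_encapsulate(packet: bytes, src_mac: bytes, dst_mac: bytes) -> bytes:
    # Toy frame: destination MAC, source MAC, payload, then a CRC-32 trailer.
    body = dst_mac + src_mac + packet
    return body + struct.pack("!I", zlib.crc32(body))


def link_decapsulate(frame: bytes) -> bytes:
    body, trailer = frame[:-4], frame[-4:]
    if struct.unpack("!I", trailer)[0] != zlib.crc32(body):
        raise ValueError("frame failed CRC check")
    return body[12:]  # strip the two 6-byte MAC addresses


def network_decapsulate(packet: bytes) -> bytes:
    return packet[8:]  # strip the two 4-byte IP addresses


def transport_decapsulate(segment: bytes) -> bytes:
    return segment[8:]  # strip ports (2 + 2 bytes) and sequence number (4 bytes)


data = b"GET /index.html"
segment = transport_encapsulate(data, 49152, 80, seq=1)
packet = network_encapsulate(segment, bytes([192, 0, 2, 1]), bytes([198, 51, 100, 7]))
frame = link_encapsulate(packet, b"\x02\x00\x00\x00\x00\x01", b"\x02\x00\x00\x00\x00\x02")

# The receiver unwraps the layers in reverse order.
received = transport_decapsulate(network_decapsulate(link_decapsulate(frame)))
print(received)
```

Each layer treats everything handed to it from above as opaque payload, which is why the receiving side can peel the headers off in the opposite order.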

The process of creating data packets involves multiple layers of the network stack working together to ensure efficient and reliable data transmission. By encapsulating the data at each layer with the necessary headers and trailers, data packets can be successfully transmitted over networks of varying complexities and distances, enabling us to communicate, share information, and access online services.

Structure of a Data Packet

A data packet has a specific structure that includes various components, each serving a specific purpose in the transmission and delivery of data. Understanding the structure of a data packet is vital in troubleshooting network issues, optimizing network performance, and ensuring successful data transmission.

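The structure of a data packet can vary depending on the network protocol being used. However, in general, a data packet consists of two main parts: the header and the payload. Some formats, such as Ethernet frames at the data link layer, also append a trailer after the payload.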

The header is located at the beginning of the data packet and contains critical information for routing and managing the packet. The IP header includes the source and destination IP addresses, which specify the sending and receiving devices. Inside the IP payload, the transport layer header carries its own control information; a TCP header, for example, contains port numbers, sequence numbers, and acknowledgment numbers used to ensure reliable data delivery.

In addition to the source and destination addresses, the header may include information such as the protocol used (e.g., TCP, UDP), Time To Live (TTL), and various flags that control how the packet should be handled by network devices along its path.
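As a concrete illustration of these fields, the following sketch decodes the fixed 20-byte portion of an IPv4 header using Python's `struct` module. The sample header is hand-built for the example (its checksum field is simply left at zero, and the addresses come from documentation ranges), so it shows the layout rather than real captured traffic.

```python
import socket
import struct


def parse_ipv4_header(raw: bytes) -> dict:
    """Decode the fixed 20-byte part of an IPv4 header (options are ignored)."""
    (ver_ihl, tos, total_len, ident, flags_frag,
     ttl, proto, checksum, src, dst) = struct.unpack("!BBHHHBBH4s4s", raw[:20])
    return {
        "version": ver_ihl >> 4,
        "header_length": (ver_ihl & 0x0F) * 4,          # IHL counts 32-bit words
        "total_length": total_len,
        "identification": ident,
        "dont_fragment": bool(flags_frag & 0x4000),
        "more_fragments": bool(flags_frag & 0x2000),
        "fragment_offset": (flags_frag & 0x1FFF) * 8,   # stored in 8-byte units
        "ttl": ttl,
        "protocol": {1: "ICMP", 6: "TCP", 17: "UDP"}.get(proto, str(proto)),
        "source": socket.inet_ntoa(src),
        "destination": socket.inet_ntoa(dst),
    }


# Hand-built sample: IPv4, 20-byte header, DF flag set, TTL 64, protocol TCP.
sample = struct.pack(
    "!BBHHHBBH4s4s",
    0x45, 0, 40, 0x1C46, 0x4000, 64, 6, 0,
    socket.inet_aton("192.0.2.1"), socket.inet_aton("198.51.100.7"),
)
fields = parse_ipv4_header(sample)
for name, value in fields.items():
    print(f"{name}: {value}")
```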

The payload, also known as the data field, is the actual data being transmitted. It could be a portion of a file, a web page, an email, or any other data that needs to be sent over the network. The payload can vary in size and content, depending on the application and the data being transmitted. It is important to note that the payload does not include the header or any additional control information.

Some formats add a trailer after the payload, at the very end of the unit; this is typical of data link layer frames such as Ethernet, whereas an IP packet itself has no trailer. The trailer usually contains an error-checking code, such as a cyclic redundancy check (CRC), which lets the receiver detect corruption that occurred during transmission. A CRC detects errors but does not correct them; a frame that fails the check is normally discarded, and recovery is left to higher-layer protocols.
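A trailer check of this kind can be sketched with the CRC-32 function in Python's standard `zlib` module. The 4-byte big-endian trailer layout is an assumption made for the example and does not reproduce the exact Ethernet frame check sequence encoding.

```python
import zlib


def add_trailer(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 computed over the payload."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def verify(frame: bytes) -> bool:
    """Recompute the CRC over the payload and compare it with the trailer."""
    payload, trailer = frame[:-4], frame[-4:]
    return zlib.crc32(payload).to_bytes(4, "big") == trailer


frame = add_trailer(b"payload bytes")

# Flip a single bit in the first byte to simulate corruption in transit.
corrupted = bytes([frame[0] ^ 0x01]) + frame[1:]

print(verify(frame))      # the intact frame passes
print(verify(corrupted))  # the damaged frame is rejected
```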

It is important to mention that data packets can be further encapsulated within each layer of the network stack. For example, at the data link layer, a data packet can be encapsulated within a frame, which includes the destination and source physical addresses and other control information specific to the data link layer protocol being used, such as Ethernet.

The structure of a data packet is designed to facilitate efficient and reliable transmission of data across computer networks. By including headers with necessary addressing and control information, along with a payload containing the actual data, data packets can be successfully routed, delivered, and reassembled at the destination device. The use of error-checking codes in the trailer adds another layer of reliability, ensuring that the data packet’s integrity is maintained throughout the transmission.

What is the Purpose of Data Packets?

Data packets serve a crucial role in computer networks, enabling the efficient and reliable transmission of information across devices and networks. The primary purpose of data packets is to break down the data into smaller units and add necessary information for routing, error detection, and reassembly at the destination device.

One of the key purposes of data packets is to optimize data transmission. By dividing data into smaller packets, networks can better handle congestion and distribute the load across multiple paths. This enables faster and more efficient transmission of data, especially in large networks where multiple devices are communicating simultaneously.

Data packets also facilitate reliable data delivery. By assigning sequence numbers and including error-checking codes in the headers, protocols like TCP ensure that packets arrive in the correct order and are free of errors. If a packet is lost or corrupted during transmission, protocols can request retransmission, improving the overall reliability of data delivery.

Another purpose of data packets is to facilitate network routing. Each packet contains source and destination IP addresses, allowing routers to choose the next hop toward the destination. Routing protocols keep the routers' tables up to date as links fail, recover, or change cost, so that traffic can be steered around outages.

Data packets also enable the reassembly of data at the destination device. By dividing the data into smaller units and assigning sequencing information, the destination device can reconstruct the original data from the received packets. This process ensures that large files or messages can be successfully transmitted and reassembled, even if individual packets take different routes or arrive at different times.

Furthermore, data packets allow for the implementation of quality of service (QoS) mechanisms. QoS prioritizes certain types of data, such as real-time audio or video streams, to ensure their smooth and uninterrupted delivery. Data packets can be marked with different priority levels, and network devices can use this information to prioritize the processing and transmission of critical data.

Last but not least, data packets also play a significant role in network security. By analyzing the headers and content of data packets, network security systems can detect and mitigate potential threats, such as unauthorized access, malware, or data breaches. Deep packet inspection techniques can be employed to examine the payload of packets and identify any suspicious or malicious activity.

How do Data Packets Travel in a Network?

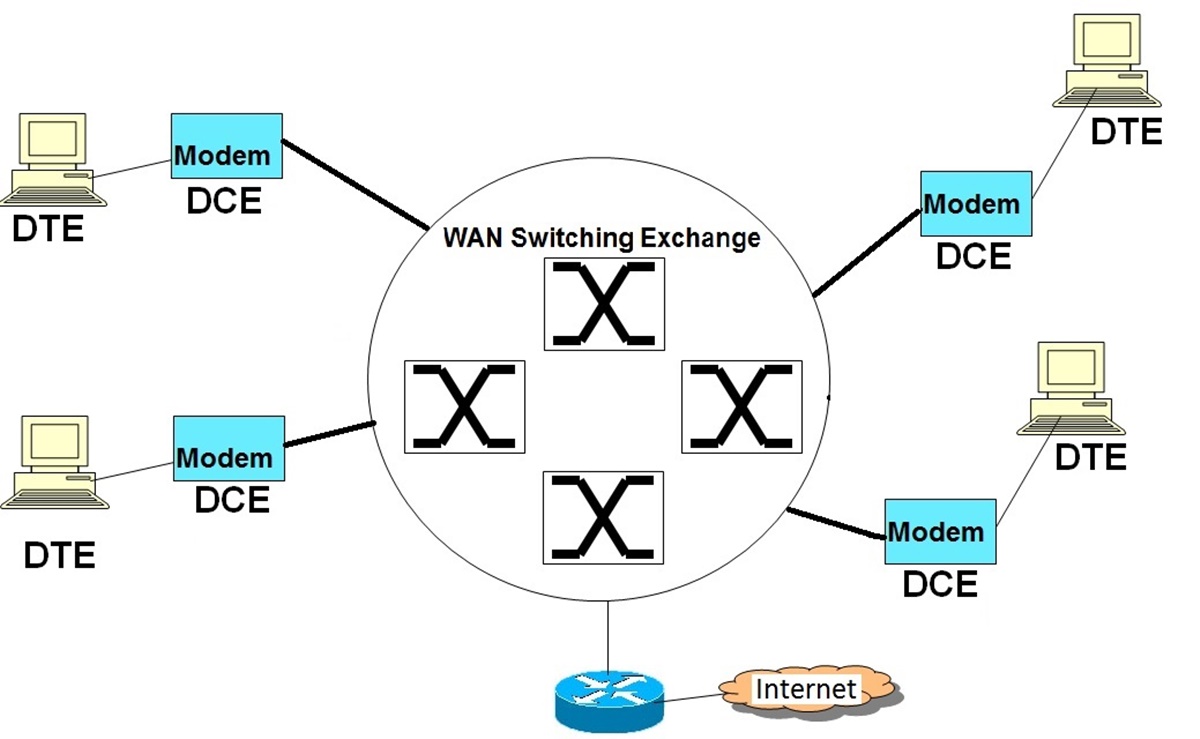

Data packets travel through a network using a process known as packet switching. This method allows for efficient and flexible transmission of data across various devices, routers, and network links. The journey of a data packet in a network involves several stages, including routing, forwarding, and reassembly at the destination device.

When a device wants to send data to another device on the network, it divides the data into smaller units called packets. Each packet carries the source and destination IP addresses in its header, allowing networking devices to determine the most appropriate path for the packet to reach its destination.

The packets are then sent from the source device to a local router. The router examines the destination IP address in the packet header and consults its routing table to determine the next hop, or the next router on the path to the destination. The router then forwards the packet to the next hop based on the routing information.

As the packets traverse the network, they may encounter multiple routers along the way. Each router examines the destination IP address in the packet header and makes forwarding decisions based on its routing table. This process continues until the packets reach their final destination.

During this journey, the packets can take different paths depending on the network's topology and conditions. With dynamic routing, routing protocols recalculate paths as the network changes, based on factors such as link cost and link availability. This allows traffic to be rerouted around failed or degraded links without manual intervention.

Upon arrival at the destination device, the packets are reassembled to reconstruct the original data. The destination device uses sequence numbers and other information in the packet headers to ensure that the packets are put back in the correct order. If any packets are missing or corrupted, a reliable protocol such as TCP recovers them: the receiver acknowledges what it has received, and the sender retransmits anything that goes unacknowledged.
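The receiving side of this process can be sketched as a small buffer keyed by sequence number. This is a simplified model, assuming one sequence number per packet and a known packet count; real TCP numbers individual bytes and uses acknowledgments rather than an explicit list of gaps.

```python
def receive(segments, expected_total):
    """Reorder (seq, payload) pairs and report which sequence numbers are missing."""
    buffer = {}
    for seq, payload in segments:
        buffer.setdefault(seq, payload)  # keep the first copy, ignore duplicates
    missing = [seq for seq in range(expected_total) if seq not in buffer]
    if missing:
        return None, missing  # cannot deliver until the gaps are filled
    return b"".join(buffer[seq] for seq in sorted(buffer)), missing


# Packet 1 was lost, packet 0 arrived twice, and the rest arrived out of order.
arrived = [(2, b"lo, "), (0, b"He"), (3, b"world"), (0, b"He")]
data, missing = receive(arrived, expected_total=4)
print(data, missing)

# After the missing packet is retransmitted, the message can be delivered.
arrived.append((1, b"l"))
data2, missing2 = receive(arrived, expected_total=4)
print(data2, missing2)
```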

It’s important to note that data packets can take different routes and may not necessarily arrive in the order they were sent. This is due to the nature of packet-switching, which allows packets to be transmitted independently and take different paths based on network conditions. However, the packets’ headers contain the necessary information to reassemble the data correctly at the destination.

The process of data packets traveling through a network is dynamic and flexible, allowing for efficient transmission and delivery of data across various devices and network links. Through packet switching and dynamic routing, data packets can navigate the network, reaching their destination and ensuring reliable and timely communication between devices.

Routing Data Packets

Routing is a critical process in computer networks that determines the optimal path for data packets to travel from the source to the destination. The goal of routing is to ensure efficient and reliable transmission of packets by considering factors such as network topology, congestion, and network policies.

When a device sends a data packet, it includes the destination IP address in the packet’s header. This address serves as the identifier for the intended recipient. The routing process begins with the source device’s local router, which examines the packet’s destination IP address.

The local router has a routing table that contains information about available network paths and associated metrics. Metrics can include factors such as hop count, bandwidth, delay, and reliability. Based on the destination IP address and the information in the routing table, the router determines the next hop, which is the next router on the path towards the destination.

Each router in the network follows a similar process. It examines the packet’s destination IP address, consults its routing table, and determines the next hop. This process repeats until the packet reaches its final destination.
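The lookup each router performs can be sketched with Python's `ipaddress` module. IP forwarding selects the most specific matching route (the longest prefix); the routing table entries and next-hop addresses below are made up for illustration.

```python
import ipaddress

# Hypothetical routing table: (destination network, next-hop address).
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "203.0.113.1"),    # default route
    (ipaddress.ip_network("10.0.0.0/8"), "10.255.0.1"),
    (ipaddress.ip_network("10.1.0.0/16"), "10.1.255.1"),
    (ipaddress.ip_network("10.1.2.0/24"), "10.1.2.254"),
]


def next_hop(destination: str) -> str:
    """Return the next hop of the matching route with the longest prefix."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in ROUTES if addr in net]
    net, hop = max(matches, key=lambda match: match[0].prefixlen)
    return hop


for dest in ["10.1.2.3", "10.1.9.9", "10.9.9.9", "8.8.8.8"]:
    print(dest, "->", next_hop(dest))
```

A linear scan is fine for a handful of routes; production routers typically use tries or dedicated hardware for the same lookup.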

There are two main types of routing: static routing and dynamic routing. Static routing involves manually configuring the routing table on each router, specifying the routes that packets should take. This type of routing is typically used in small networks with a simple network topology where changes in the network infrastructure are infrequent.

Dynamic routing, on the other hand, uses routing protocols to automate the process of updating and maintaining the routing tables on routers. These protocols exchange information between routers, allowing them to dynamically calculate the most efficient paths for packets in real-time. Examples of dynamic routing protocols include Border Gateway Protocol (BGP) for routing between autonomous systems and Open Shortest Path First (OSPF) for intra-domain routing.

Routing protocols consider various factors, such as link costs, network congestion, and network policies, to determine the best paths for packets. They adapt to changes in network topology and traffic conditions by recalculating routes as needed. This flexibility allows networks to dynamically adjust to changes and optimize the use of network resources.
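Link-state protocols such as OSPF compute routes by running a shortest-path algorithm over a map of link costs. The sketch below uses Dijkstra's algorithm on a small, invented four-router topology; the router names and costs are assumptions, and a real implementation would also handle the exchange of topology information between routers.

```python
import heapq


def shortest_paths(graph, source):
    """Return {destination: (total_cost, next_hop)} using Dijkstra's algorithm."""
    best = {source: (0, None)}
    heap = [(0, source, None)]
    while heap:
        cost, node, first_hop = heapq.heappop(heap)
        if cost > best[node][0]:
            continue  # stale queue entry; a cheaper path was already found
        for neighbor, link_cost in graph[node].items():
            new_cost = cost + link_cost
            # When leaving the source, the first hop is the neighbor itself.
            hop = neighbor if node == source else first_hop
            if neighbor not in best or new_cost < best[neighbor][0]:
                best[neighbor] = (new_cost, hop)
                heapq.heappush(heap, (new_cost, neighbor, hop))
    return best


# Hypothetical topology: each entry maps a router to its neighbors and link costs.
graph = {
    "A": {"B": 1, "C": 5},
    "B": {"A": 1, "C": 1, "D": 4},
    "C": {"A": 5, "B": 1, "D": 1},
    "D": {"B": 4, "C": 1},
}
table = shortest_paths(graph, "A")
for dest, (cost, hop) in sorted(table.items()):
    print(dest, cost, hop)
```

If the cost of a link changes, rerunning the function on the updated graph yields the new routing table, which mirrors how routes are recalculated after a topology change.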

In addition to dynamic routing protocols, other mechanisms can influence routing decisions. For example, policy-based routing allows network administrators to define specific routing policies based on criteria like traffic type, source or destination IP addresses, or application characteristics.

Overall, routing is a fundamental process that enables the efficient and reliable transmission of data packets in computer networks. By determining the optimal paths for packet delivery, routing ensures that data reaches its destination in a timely and efficient manner, regardless of the network size or complexity.

Fragmentation and Reassembly of Data Packets

Fragmentation and reassembly are important processes in the transmission of data packets over computer networks. These processes enable the efficient transfer of data when the packet size exceeds the maximum transmission unit (MTU) of a network link or when different network technologies have varying packet size limits.

When a device wants to send a data packet, it first checks the packet size. If the packet size exceeds the MTU of the outgoing network link, fragmentation occurs. Fragmentation involves breaking down the original data packet into smaller fragments that can fit within the MTU. Each fragment becomes an independent packet with its own header, and all fragments of the same original packet share that packet's identification number.

In IPv4, fragmentation can be performed by the sending device or by any router along the path whose outgoing link has a smaller MTU; in IPv6, only the sending device may fragment. Every fragment's header carries a fragment offset field that indicates the position of its data within the original packet, together with a flag that marks whether more fragments follow.

When a packet fragment arrives at a receiving device, the device stores the fragment until it receives all the remaining fragments. Once all the fragments are received, the receiving device can begin the reassembly process.

Reassembly involves combining the individual fragments to reconstruct the original data packet. The receiving device uses information present in the packet headers, such as the identification number and offset, to correctly order the fragments and eliminate any duplicate or overlapping data. Once the reassembly process is complete, the device can further process the data as needed.
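The following sketch models IPv4-style fragmentation and reassembly. It assumes a 20-byte header without options and represents each fragment as a small Python object rather than real packet bytes; as in IPv4, offsets are counted in 8-byte units and a "more fragments" flag marks every fragment except the last.

```python
from dataclasses import dataclass

HEADER_LEN = 20  # assumes an IPv4 header without options


@dataclass
class Fragment:
    ident: int   # identification value shared by all fragments of one packet
    offset: int  # position of this fragment's data, in 8-byte units
    more: bool   # "more fragments" flag; False only on the last fragment
    data: bytes


def fragment(payload: bytes, ident: int, mtu: int) -> list[Fragment]:
    # Data per fragment must be a multiple of 8 bytes (except in the last one).
    max_data = (mtu - HEADER_LEN) // 8 * 8
    frags = []
    for start in range(0, len(payload), max_data):
        chunk = payload[start:start + max_data]
        more = start + max_data < len(payload)
        frags.append(Fragment(ident, start // 8, more, chunk))
    return frags


def reassemble(frags: list[Fragment]) -> bytes:
    unique = {f.offset: f for f in frags}  # drop duplicate fragments
    ordered = sorted(unique.values(), key=lambda f: f.offset)
    if ordered[-1].more:
        raise ValueError("last fragment is missing")
    data = bytearray()
    for f in ordered:
        if f.offset * 8 != len(data):
            raise ValueError("a fragment in the middle is missing")
        data += f.data
    return bytes(data)


payload = bytes(i % 256 for i in range(4000))
frags = fragment(payload, ident=0x1C46, mtu=1500)
print([(f.offset, f.more, len(f.data)) for f in frags])
print(reassemble(list(reversed(frags))) == payload)
```

With a 1,500-byte MTU, each fragment carries at most 1,480 bytes of data, so the 4,000-byte payload is split into three fragments.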

Fragmentation and reassembly are necessary in situations where networks have different MTUs or when data is transmitted across multiple network links with varying packet size limits. For example, when data is sent from a network with a large MTU over an intermediate network with a smaller MTU, fragmentation may be required to ensure successful delivery of the data.

Although fragmentation and reassembly allow for the transmission of data packets across networks with different MTUs, they can introduce overhead and potential delays. Extra processing is required to fragment packets at the source device and reassemble them at the destination. Furthermore, if fragments are lost or delayed during transmission, the entire packet may need to be retransmitted, which can impact network performance.

Generally, it is best to avoid fragmentation whenever possible by ensuring that packets are no larger than the smallest MTU on the path they traverse. This can be achieved by configuring devices and applications to use appropriate MTU sizes and by relying on Path MTU Discovery, which lets the sender learn the smallest MTU along the path and size its packets accordingly.

Overall, fragmentation and reassembly play vital roles in enabling the transmission of data packets across networks with varying MTUs. When implemented efficiently, these processes allow for seamless delivery of data while considering the constraints imposed by network technologies and configurations.

Quality of Service for Data Packets

Quality of Service (QoS) is an essential aspect of data packet transmission in computer networks. It refers to the ability to prioritize certain types of data traffic, ensuring that critical or time-sensitive data receives higher levels of service and that network resources are allocated efficiently to guarantee reliable and timely delivery.

QoS mechanisms play a significant role in managing network traffic and minimizing delays, packet loss, and congestion. By assigning different levels of priority to various types of data packets, QoS ensures that important data, such as real-time audio or video streams, is given sufficient bandwidth and minimal latency.

There are several aspects of QoS that can be implemented to enhance the performance of data packet transmission:

- Traffic Classification: QoS allows for the classification of different types of data traffic based on factors such as the application, source, destination, or traffic characteristics. This classification enables network devices to identify and handle specific types of data differently, based on their prioritization policies.

- Traffic Queuing: QoS mechanisms implement various queuing algorithms to manage the order and scheduling of data packets. These algorithms prioritize packets based on their assigned level of service, ensuring that high-priority packets get processed and transmitted first.

- Packet Priority: QoS assigns different priority levels to data packets based on their importance or urgency. This allows for the preferential treatment of critical or real-time traffic over less time-sensitive data. For example, voice and video data may be assigned a higher priority to ensure uninterrupted communication or smooth streaming.

- Bandwidth Allocation: QoS mechanisms allocate bandwidth resources to different types of data traffic. By reserving a certain amount of bandwidth for high-priority data, QoS ensures that critical applications receive the necessary resources to operate effectively.

- Network Congestion Management: QoS helps manage network congestion by implementing techniques such as traffic shaping, traffic policing, and congestion avoidance mechanisms. These methods monitor and control data traffic to prevent network congestion and ensure fair allocation of network resources.
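Classification, priority, and queuing can be combined in a small strict-priority scheduler. This is a sketch under simplifying assumptions: the traffic classes and their ranking are invented for the example, and packets are represented by plain strings.

```python
import heapq
import itertools

# Assumed traffic classes; a lower number is served first.
PRIORITY = {"voice": 0, "video": 1, "web": 2, "bulk": 3}


class PriorityScheduler:
    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # preserves arrival order within a class

    def enqueue(self, traffic_class: str, packet: str) -> None:
        entry = (PRIORITY[traffic_class], next(self._counter), packet)
        heapq.heappush(self._heap, entry)

    def dequeue(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


sched = PriorityScheduler()
arrivals = [("bulk", "backup-1"), ("web", "page-1"), ("voice", "call-1"),
            ("bulk", "backup-2"), ("voice", "call-2")]
for traffic_class, packet in arrivals:
    sched.enqueue(traffic_class, packet)

order = [sched.dequeue() for _ in range(len(sched))]
print(order)
```

Strict priority is the simplest policy, but it can starve low-priority traffic when high-priority traffic is continuous; weighted schemes that give each class a share of the bandwidth are a common alternative.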

Implementing QoS mechanisms requires coordination and configuration within the network infrastructure. Various networking devices, such as routers and switches, need to be configured to recognize and handle different types of data traffic based on their assigned priorities. QoS policies can be defined and enforced at network boundaries, where traffic enters or exits a specific network segment.

QoS is particularly important in networks that experience heavy traffic, multiple applications competing for bandwidth, or a mix of real-time and non-real-time data. Examples of scenarios where QoS is vital include voice and video conferencing, real-time gaming, virtual private networks (VPNs), and enterprise networks that require reliable and efficient network performance.

By implementing QoS for data packets, networks can effectively manage and prioritize traffic, reduce delays and packet loss, ensure reliable data delivery, and provide a better user experience for applications that require consistent and high-quality network performance.

Security Considerations for Data Packets

The transmission of data packets over computer networks poses various security risks. It is essential to address these security considerations to protect the confidentiality, integrity, and availability of the transmitted data. Several security measures can be implemented to mitigate these risks and ensure the secure transmission of data packets.

Encryption: One of the primary security measures for data packets is encryption. Encryption converts the data into an unreadable format using cryptographic algorithms. This ensures that even if the packets are intercepted during transmission, the information they contain remains secure. Transport Layer Security (TLS), the successor to the now-deprecated Secure Sockets Layer (SSL), is widely used to safeguard the confidentiality of data packets.

Authentication: Ensuring that data packets come from a trusted source is vital to prevent unauthorized access and tampering. Authentication mechanisms, such as digital certificates, public key infrastructure (PKI), or secure key exchanges, can verify the identity of the sender and validate the integrity of the received data packets.

Integrity Verification: Data packets can be corrupted in transit or altered by malicious actors. Simple checksums detect accidental corruption, but an attacker who changes the data can recompute them; detecting deliberate tampering requires a keyed mechanism such as a message authentication code (MAC) or a digital signature. The receiver recomputes the value over the received data and compares it with the one included in the packet to verify the data's integrity.
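A keyed integrity check can be sketched with Python's standard `hmac` and `hashlib` modules. The example assumes both sides already share a secret key and that the 32-byte tag is simply appended to the payload; key exchange and the actual packet format are outside its scope.

```python
import hashlib
import hmac

TAG_LEN = 32  # size of a SHA-256 digest in bytes


def protect(key: bytes, payload: bytes) -> bytes:
    """Append an HMAC-SHA256 tag computed over the payload."""
    tag = hmac.new(key, payload, hashlib.sha256).digest()
    return payload + tag


def verify(key: bytes, message: bytes) -> bytes:
    """Return the payload if the tag matches; raise ValueError otherwise."""
    payload, tag = message[:-TAG_LEN], message[-TAG_LEN:]
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):  # constant-time comparison
        raise ValueError("integrity check failed")
    return payload


key = b"shared-secret-for-illustration-only"
message = protect(key, b"transfer 100 to account 42")
print(verify(key, message))

# An attacker who alters the payload cannot produce a valid tag without the key.
tampered = message.replace(b"100", b"900")
try:
    verify(key, tampered)
except ValueError as err:
    print(err)
```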

Firewalls and Intrusion Detection/Prevention Systems: Firewalls act as a barrier between internal networks and the external world, examining and filtering inbound and outbound network traffic. Intrusion detection and prevention systems (IDS/IPS) monitor network traffic for signs of malicious activities or intrusions. These security measures can help protect data packets from unauthorized access or attacks launched from external sources.

Virtual Private Networks (VPNs): VPNs provide a secure and private channel for transmitting data packets over public networks. By encrypting all traffic between the sender and receiver, VPNs protect the confidentiality and integrity of data packets even when transmitted over untrusted networks.

Access Control: Limiting access to network resources and ensuring appropriate permissions for users and devices is crucial for securing data packets. Implementing access control mechanisms, such as user authentication, authorization, and proper network segmentation, helps prevent unauthorized access to data packets and the network infrastructure as a whole.

Intrusion Prevention: Intrusion prevention systems can monitor network traffic for potential threats or malicious activities and take proactive measures to prevent them. These systems use various techniques, such as packet filtering, signature-based detection, and anomaly-based detection, to identify and block potentially harmful data packets before they reach their intended destination.

Packet Filtering: Packet filtering involves examining the header and contents of data packets and making decisions about whether to allow or block them based on predefined rules. Packet filters can be implemented at the network perimeter or on individual devices to control inbound and outbound traffic and prevent unauthorized access or malicious activities.
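A rule-based filter of this kind can be sketched as an ordered list in which the first matching rule decides the outcome. The rule fields, the example rule set, and the default-deny policy are assumptions for illustration and do not follow the syntax of any particular firewall.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    action: str                      # "allow" or "deny"
    src: str = "0.0.0.0/0"           # source network the rule applies to
    protocol: Optional[str] = None   # None matches any protocol
    dst_port: Optional[int] = None   # None matches any destination port

    def matches(self, pkt: dict) -> bool:
        return (ipaddress.ip_address(pkt["src"]) in ipaddress.ip_network(self.src)
                and self.protocol in (None, pkt["protocol"])
                and self.dst_port in (None, pkt["dst_port"]))


# Hypothetical rule set, evaluated top to bottom.
RULES = [
    Rule("deny", src="203.0.113.0/24"),          # block a known-bad network
    Rule("allow", protocol="tcp", dst_port=443),  # HTTPS
    Rule("allow", protocol="udp", dst_port=53),   # DNS
]


def filter_packet(pkt: dict, rules=RULES, default: str = "deny") -> str:
    """Return the action of the first matching rule, or the default action."""
    for rule in rules:
        if rule.matches(pkt):
            return rule.action
    return default


print(filter_packet({"src": "198.51.100.4", "protocol": "tcp", "dst_port": 443}))
print(filter_packet({"src": "203.0.113.9", "protocol": "tcp", "dst_port": 443}))
print(filter_packet({"src": "198.51.100.4", "protocol": "tcp", "dst_port": 23}))
```

Because evaluation stops at the first match, rule order matters: the deny rule for the blocked network must come before the broader allow rules.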

By addressing these security considerations and implementing appropriate security measures, the transmission of data packets can be safeguarded, protecting sensitive information and maintaining the integrity of network communications.

Common Protocols for Data Packets

Various protocols are used for the transmission of data packets across computer networks. These protocols define the rules and standards for how data is packaged into packets, how packets are addressed, and how they are transmitted and processed by network devices. Here are some of the most commonly used protocols for data packets:

Internet Protocol (IP): IP is the fundamental protocol used for transmitting data packets over the Internet. It provides the addressing scheme, packet format, and forwarding rules that allow packets to be delivered across network boundaries. IP defines how devices are addressed (the addresses themselves are assigned by administrators or by protocols such as DHCP) and handles the fragmentation and reassembly of packets.

Transmission Control Protocol (TCP): TCP is a connection-oriented transport layer protocol that provides reliable, ordered delivery of data. It establishes a connection between two network devices, performs sequencing and acknowledgment of segments, and provides flow control and congestion control to optimize network performance. TCP delivers data to the application in the correct order and recovers from loss or corruption by retransmitting the affected segments.

User Datagram Protocol (UDP): UDP is a connectionless transport layer protocol that provides a lightweight alternative to TCP. Unlike TCP, UDP does not include mechanisms for sequencing, acknowledgment, or error recovery. It is commonly used for applications that require low latency and do not require the reliability guarantees provided by TCP, such as real-time streaming or VoIP.

Internet Control Message Protocol (ICMP): ICMP is a network layer protocol that is primarily used for diagnostic and error reporting purposes. It is commonly associated with ping, which uses echo requests and replies to measure round-trip times, and with traceroute, which traces the path of packets by sending probes with increasing TTL values and collecting the ICMP Time Exceeded messages that routers return. ICMP messages are encapsulated within IP packets and help troubleshoot network connectivity or performance issues.

Address Resolution Protocol (ARP): ARP is used for mapping IPv4 addresses to MAC (Media Access Control) addresses in local network environments. It allows a device to determine the MAC address of another device based on its IP address, enabling proper communication over local networks. ARP messages are carried directly in data link layer frames, and the protocol is essential for communication between devices within the same network segment.

Internet Group Management Protocol (IGMP): IGMP is a network layer protocol used by devices to manage and communicate with multicast groups. It enables devices to join or leave multicast groups and receive multicast traffic. IGMP is commonly used for applications such as streaming media, online gaming, and video conferencing, where data needs to be simultaneously sent to multiple recipients.

Domain Name System (DNS): DNS is a protocol used to translate domain names, such as www.example.com, into their corresponding IP addresses. It allows devices to locate resources on the Internet by converting human-readable domain names into the numerical IP addresses required for communication between devices. DNS operates at the application layer and is vital for web browsing, email, and other Internet-based services.
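To show what a DNS request looks like on the wire, the sketch below builds the bytes of a standard query for an A record. It only constructs the message and does not send it; the query ID is an arbitrary value chosen for the example.

```python
import struct


def build_dns_query(name: str, query_id: int = 0x1234) -> bytes:
    """Build a DNS query message asking for the A record of `name`."""
    # Header: ID, flags (0x0100 = standard query, recursion desired),
    # then counts: 1 question, 0 answers, 0 authority, 0 additional records.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # Name: each label is prefixed by its length; a zero byte ends the name.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    # QTYPE 1 = A record (IPv4 address), QCLASS 1 = IN (Internet).
    question = qname + struct.pack("!HH", 1, 1)
    return header + question


query = build_dns_query("www.example.com")
print(len(query), query.hex())
```

A message like this is usually sent to a resolver as the payload of a UDP packet addressed to port 53.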

Simple Mail Transfer Protocol (SMTP): SMTP is an application layer protocol used for the transmission of email messages. It is responsible for submitting mail to a server and relaying it between mail servers. SMTP defines how messages are addressed and handed from one server to the next, allowing users to send email worldwide; retrieving mail from a mailbox is handled by other protocols, such as IMAP or POP3.

Hypertext Transfer Protocol (HTTP): HTTP is an application layer protocol used for the transfer of hypertext in the form of web pages, images, videos, and other resources. It enables the communication between web browsers and web servers, defining how requests and responses are formatted and exchanged. HTTP is the foundation of the World Wide Web and facilitates the access and retrieval of information on websites.

These are just a few examples of the common protocols used for the transmission of data packets over computer networks. Each protocol serves a specific purpose, whether it is providing reliable delivery of packets, addressing and routing packets, or facilitating the exchange of data between applications. Understanding these protocols is crucial for building and maintaining efficient and secure network communications.