Defining Time Series Data

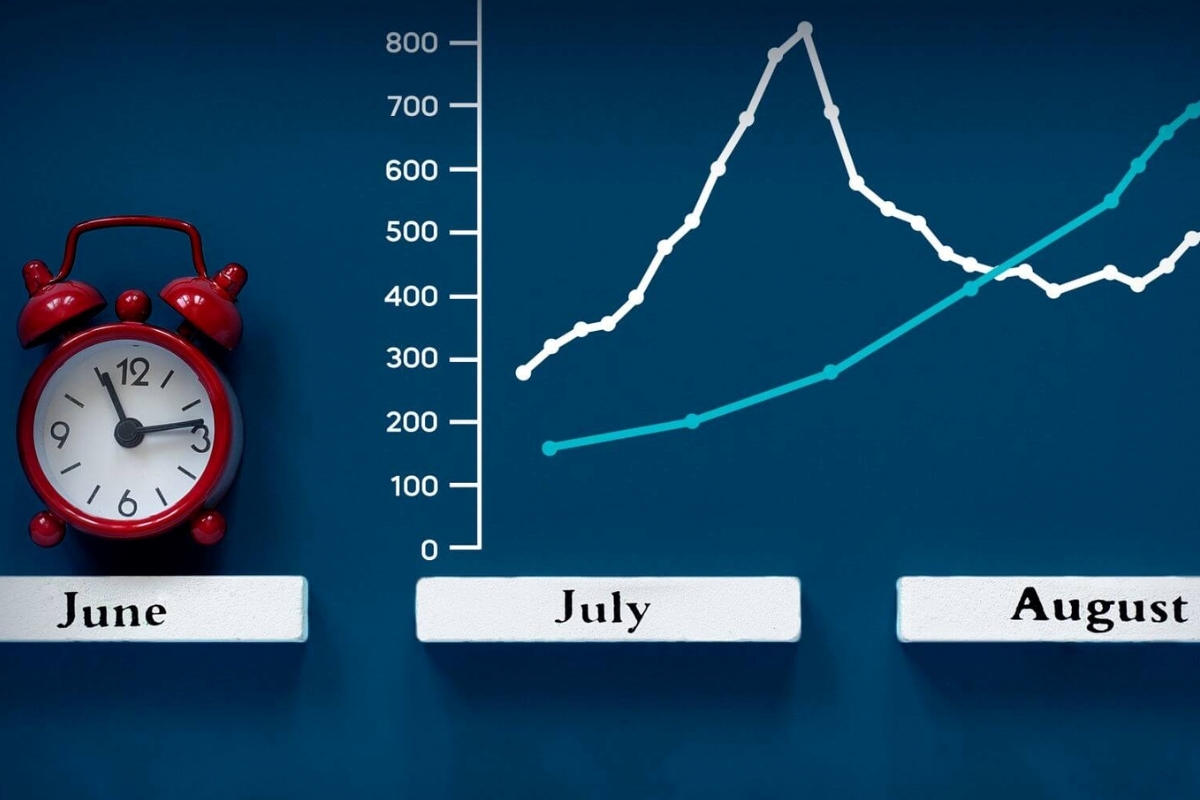

Time series data plays a crucial role in various fields, especially in the realm of machine learning. It refers to a sequence of observations recorded at successive points in time, often at regular intervals. Unlike traditional datasets, where each data point is typically assumed to be independent of the others, time series data has a temporal ordering in which neighboring observations are related, making it unique and challenging to analyze. Understanding the concept of time series data is fundamental when working with machine learning algorithms that rely on historical patterns and trends to make predictions.

At its core, time series data consists of two key elements: the time component and the corresponding value or measurement. The time component represents a specific point in time, such as the date, hour, or minute when the observation was recorded. On the other hand, the value represents the measurement or variable of interest at that particular time point.

For example, consider a dataset that records the monthly sales figures of a retail store over a five-year period. In this case, each data point in the dataset will consist of the time component (month and year) and the corresponding sales value. The sequential nature of time series data enables us to analyze past patterns, detect trends, and predict future values.
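The structure described above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library; the sales figures and the helper name `is_chronological` are made up for the example and are not taken from any real dataset.

```python
from datetime import date

# Hypothetical monthly sales figures (illustrative values, not real data).
# Each observation pairs a time component with a measured value.
monthly_sales = [
    (date(2023, 1, 1), 12500.0),
    (date(2023, 2, 1), 11800.0),
    (date(2023, 3, 1), 13400.0),
    (date(2023, 4, 1), 14100.0),
]


def is_chronological(series):
    """Return True if the timestamps are strictly increasing."""
    return all(earlier[0] < later[0] for earlier, later in zip(series, series[1:]))


for timestamp, value in monthly_sales:
    print(timestamp.strftime("%Y-%m"), value)

print("Chronological order:", is_chronological(monthly_sales))
```

Checking the ordering up front is a small safeguard, since most time series techniques assume the observations are sorted by time.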

Time series data can be found in a wide range of domains, including finance, economics, weather forecasting, medicine, and social sciences. In finance, for instance, stock prices are recorded over time and analyzed to identify patterns and make investment decisions. In weather forecasting, meteorologists use historical weather data to predict future weather conditions.

By leveraging the temporal patterns found in time series data, machine learning algorithms can be trained to make accurate predictions and forecasts. These algorithms take into account the historical data, such as past sales, stock prices, or weather conditions, to estimate future values and trends.

It is important to note that time series data may exhibit various characteristics that need to be considered during analysis. These characteristics include trends, seasonality, cyclic patterns, irregular fluctuations, and autocorrelation. Understanding these characteristics is crucial for selecting appropriate models and techniques for analyzing and predicting time series data.

Understanding Time Series Analysis

Time series analysis is a set of statistical techniques used to extract meaningful insights and patterns from time series data. By analyzing past observations, identifying trends, and forecasting future values, time series analysis provides valuable information for decision-making in various fields, including finance, economics, and weather forecasting.

One of the primary goals of time series analysis is to understand the underlying structure and behavior of the data. This involves examining the different components of a time series, such as trends, seasonality, and random fluctuations, and their impact on the overall pattern.

Trends refer to long-term changes or patterns in the data over time. They can be either upward (indicating growth) or downward (indicating a decline). By identifying and analyzing trends, analysts can gain insights into the overall direction and potential future values of the time series.

Seasonality is another important aspect of time series analysis. It refers to recurring patterns that occur at fixed time intervals, such as daily, weekly, or yearly. For example, retail sales may exhibit a seasonal pattern where sales are higher during holiday seasons or weekends. Understanding seasonality helps in anticipating and planning for future fluctuations in the data.

Random fluctuations, also known as noise or irregular components, represent random variations that cannot be attributed to any specific pattern or trend. These fluctuations often exist in time series data and can make it challenging to accurately forecast future values. Removing or reducing the impact of random fluctuations is an essential step in time series analysis.

There are various techniques and models available for time series analysis. These include simple statistical methods like moving averages and exponential smoothing, as well as more advanced techniques like autoregressive integrated moving average (ARIMA) models and decomposition methods such as STL (Seasonal-Trend decomposition using Loess). Each method has its own strengths and limitations, and the choice of technique depends on the specific characteristics of the time series data being analyzed.
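To make the two simplest methods concrete, here is a minimal sketch of a trailing moving average and simple exponential smoothing in plain Python. The function names, the sample series, and the choice to initialize the smoothed level with the first observation are assumptions made for illustration; other initializations are also common.

```python
def moving_average(values, window):
    """Trailing moving average; returns one value per full window."""
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


def simple_exponential_smoothing(values, alpha):
    """Smoothed level s_t = alpha * y_t + (1 - alpha) * s_(t-1), with s_0 = y_0."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = [values[0]]
    for y in values[1:]:
        smoothed.append(alpha * y + (1 - alpha) * smoothed[-1])
    return smoothed


series = [10.0, 12.0, 14.0, 16.0, 18.0]
print(moving_average(series, window=3))                # [12.0, 14.0, 16.0]
print(simple_exponential_smoothing(series, alpha=0.5)) # [10.0, 11.0, 12.5, 14.25, 16.125]
```

A larger window or a smaller `alpha` smooths more aggressively but reacts more slowly to recent changes.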

Time series analysis also involves evaluating the performance of forecasting models. This is done by comparing the predicted values with the actual values and assessing the accuracy and reliability of the forecasts. Common evaluation metrics include mean squared error (MSE), root mean squared error (RMSE), and mean absolute percentage error (MAPE).

Common Characteristics of Time Series Data

Time series data exhibits several common characteristics that distinguish it from traditional static datasets. Understanding these characteristics is essential for effectively analyzing and interpreting time series data.

1. Trend: Time series data often exhibits a trend, which refers to the long-term upward or downward movement in the data. Trends indicate the overall direction and pattern of the data and can provide insights into future values.

2. Seasonality: Seasonality refers to recurring patterns that occur at fixed time intervals. These patterns can be daily, weekly, monthly, or yearly. Understanding seasonality is crucial for predicting and planning for future fluctuations in the data.

3. Cyclicality: Cyclicality refers to patterns that occur over a longer time frame than seasonality and are not necessarily fixed in duration. These cycles can be influenced by economic or environmental factors and may repeat irregularly.

4. Autocorrelation: Autocorrelation is the correlation between a time series and lagged copies of itself. Time series data often exhibits autocorrelation, meaning that past observations carry information about future values. Autocorrelation can be used to identify and model relationships within the data.

5. Irregular Fluctuations: While trends, seasonality, and cyclicality provide some structure to the data, time series data also contains irregular fluctuations or noise. These fluctuations are random variations that cannot be attributed to any specific pattern or trend.

6. Non-Stationarity: Non-stationarity refers to time series data where the statistical properties, such as the mean and variance, change over time. Non-stationary data poses challenges for modeling and forecasting, and techniques like differencing or transformation may be used to make the data stationary.

7. Volatility: Volatility refers to the degree of variation or uncertainty in the data. In financial time series data, volatility is often used to measure the risk associated with the data and is important for investment and risk management decisions.

8. Outliers: Outliers are data points that deviate significantly from the general pattern of the data. These can be caused by measurement errors, anomalies, or other factors. Outliers should be carefully analyzed to determine if they should be included or removed from the analysis.

Understanding these common characteristics of time series data is vital for selecting appropriate modeling techniques, preprocessing the data, and interpreting the results of time series analysis.
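Autocorrelation and seasonality from the list above can be checked numerically. The following sketch computes the sample autocorrelation at a given lag using the common estimator that divides by the lag-0 sum of squares; the function name and the repeating test series are assumptions chosen for illustration.

```python
def autocorrelation(values, lag):
    """Sample autocorrelation of `values` at the given non-negative lag."""
    n = len(values)
    if not 0 <= lag < n:
        raise ValueError("lag must satisfy 0 <= lag < len(values)")
    mean = sum(values) / n
    denominator = sum((v - mean) ** 2 for v in values)
    if denominator == 0:
        raise ValueError("autocorrelation is undefined for a constant series")
    numerator = sum(
        (values[t] - mean) * (values[t - lag] - mean) for t in range(lag, n)
    )
    return numerator / denominator


# Hypothetical series that repeats every 4 steps (a pure seasonal pattern).
seasonal_series = [1.0, 2.0, 3.0, 2.0] * 6

for lag in (0, 2, 4):
    print(lag, round(autocorrelation(seasonal_series, lag), 3))
```

A strongly positive value at the seasonal lag (4 here) and a negative value at half that lag are typical signatures of a repeating pattern.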

Applications of Time Series Data in Machine Learning

Time series data plays a crucial role in machine learning, allowing us to harness the power of historical patterns and trends to make accurate predictions and forecasts. The applications of time series data in machine learning are diverse and span across various domains. Here are some notable applications:

1. Financial Forecasting: Time series analysis is widely used in finance for stock market forecasting, predicting exchange rates, and identifying market trends. By analyzing historical financial data, machine learning models can provide insights into future price movements and help traders make informed investment decisions.

2. Energy Demand Forecasting: Time series data is instrumental in predicting energy demand, optimizing power generation, and managing energy resources. Utilities and energy providers can leverage machine learning algorithms to forecast energy consumption patterns, ensuring efficient allocation of resources and reducing costs.

3. Weather Forecasting: Meteorologists rely on time series analysis to predict weather patterns, monitor climate change, and issue timely warnings. By analyzing historical weather data, machine learning models can identify trends and patterns to make accurate short-term and long-term weather forecasts.

4. Healthcare: Time series data is invaluable in healthcare for monitoring patient vitals, predicting disease outbreaks, and analyzing medical trends. By analyzing patient data over time, machine learning models can assist in early detection of diseases, treatment planning, and personalized medicine.

5. Supply Chain Optimization: Time series data is extensively used in supply chain management to forecast demand, optimize inventory levels, and improve logistics processes. Machine learning models can analyze historical sales data and external factors to predict future demand fluctuations, leading to cost savings and improved customer satisfaction.

6. Internet of Things (IoT): With the proliferation of IoT devices, time series data plays a vital role in analyzing sensor data, predicting equipment failures, and optimizing maintenance schedules. Machine learning models can process real-time sensor data to detect anomalies, predict equipment failures, and optimize operational efficiency.

7. Social Media Analysis: Time series data from social media platforms can be analyzed to understand user behavior, track sentiment, and predict trends. Machine learning algorithms can process social media data over time to identify patterns, predict consumer preferences, and optimize marketing strategies.

These are just a few examples of how time series data is utilized in machine learning. The ability to analyze and predict future values and trends from historical data makes time series analysis a powerful tool for making informed decisions in various industries and domains.

Preprocessing Time Series Data

Preprocessing time series data is a crucial step in preparing the data for analysis and modeling. It involves transforming raw time series data into a format that is more suitable for machine learning algorithms. The preprocessing techniques applied may vary depending on the characteristics of the data and the specific analysis objectives. Here are some common preprocessing steps for time series data:

1. Data Cleaning: The first step is to clean the data by handling missing values, outliers, and errors. Missing values can be filled using techniques such as forward filling, backward filling, or interpolation. Outliers can be detected and either removed or replaced with more appropriate values.

2. Resampling: Time series data may be collected at irregular intervals. Resampling involves converting the data into a regular time interval, such as hourly, daily, or monthly. This can be done through techniques like upsampling (increasing the frequency) or downsampling (decreasing the frequency). Resampling helps in achieving consistent and standardized data for analysis.

3. Normalization: Normalizing the data is essential when the values of the time series data have different scales or units. Common normalization techniques include min-max scaling or z-score normalization, which transform the data to a common scale without distorting the underlying patterns.

4. Differencing: Differencing is a technique used to remove trends by computing the differences between consecutive observations. This is helpful in making a time series stationary by eliminating the trend component, which can make the data easier to model and analyze.

5. Feature Engineering: Feature engineering involves creating additional features from the existing time series data. These features can capture seasonality, trends, or other patterns that may be useful for modeling. Common techniques include lagging (including past values as features), rolling statistics (e.g., rolling average), and Fourier transforms to identify frequency components.

6. Handling Seasonality: If the time series data exhibits a seasonal pattern, it is important to account for it. This can be done through techniques like seasonal decomposition, where the seasonality component is separated from the overall trend. Seasonal adjustments help in better understanding and modeling the patterns in the data.

7. Splitting into Training and Test Sets: To evaluate the performance of time series models, it is essential to split the data into training and test sets. The training set is used to build the model, while the test set is reserved for evaluating its performance on unseen data. Care must be taken to ensure that the training period precedes the test period to avoid data leakage.

Preprocessing time series data is a critical step that sets the foundation for accurate modeling and analysis. It helps in removing noise, handling missing values, and transforming the data into a suitable format for machine learning algorithms to extract meaningful insights.
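Several of the steps above can be sketched with plain Python lists. This is an illustrative sketch under stated assumptions: missing values are encoded as `None`, the raw values are invented, and the helper names are hypothetical. In practice a data-frame library would usually handle these operations.

```python
def forward_fill(values):
    """Replace None with the most recent observed value (leading None stays None)."""
    filled, last = [], None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled


def difference(values, lag=1):
    """Differences between observations that are `lag` steps apart."""
    return [values[t] - values[t - lag] for t in range(lag, len(values))]


def chronological_split(values, train_fraction=0.8):
    """Split so that every training observation precedes every test observation."""
    cut = int(len(values) * train_fraction)
    return values[:cut], values[cut:]


def min_max_scale(values, low, high):
    """Scale with externally supplied bounds so test data can reuse training bounds."""
    return [(v - low) / (high - low) for v in values]


raw = [100.0, None, 104.0, 107.0, None, 112.0, 115.0, 119.0, 121.0, 126.0]
clean = forward_fill(raw)
diffs = difference(clean)
train, test = chronological_split(clean)

# Bounds come from the training period only, to avoid leaking test information.
low, high = min(train), max(train)
scaled_train = min_max_scale(train, low, high)
scaled_test = min_max_scale(test, low, high)

print(clean)
print(diffs)
print(scaled_test)
```

Note that the scaled test values can fall outside the 0 to 1 range, because the scaling bounds are fitted on the training period alone.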

Exploratory Data Analysis for Time Series Data

Exploratory Data Analysis (EDA) is a crucial step in understanding the characteristics and patterns present in time series data. It involves visualizing and summarizing the data to gain insights into its structure, identify outliers or anomalies, and inform subsequent modeling and analysis. EDA helps in uncovering important features and relationships within the data. Here are some key techniques used in EDA for time series data:

1. Plotting Time Series: Visualizing the time series data is the first step in EDA. Time series plots represent the data on the y-axis and time on the x-axis. This allows us to observe trends, seasonality, and fluctuations within the data. Line plots, scatter plots, or other specialized plots like autocorrelation plots can be used to examine the data and identify any patterns or irregularities.

2. Descriptive Statistics: Calculating descriptive statistics such as mean, standard deviation, and percentiles provides insights into the central tendencies and variability of the time series data. These statistics can help identify outliers or unusual observations that may require further investigation.

3. Autocorrelation and Partial Autocorrelation Analysis: Autocorrelation measures the correlation between a time series and its lagged values. It helps in identifying the presence of serial correlation. Partial autocorrelation shows the direct relationship between an observation and a given lag after removing the indirect effects of the intermediate lags. Together, the two plots guide the choice of model order: the autocorrelation plot is typically used to suggest the order of the moving average component, and the partial autocorrelation plot the order of the autoregressive component.

4. Seasonal Decomposition: Seasonal decomposition separates a time series into its trend, seasonal, and residual components. This technique helps in understanding the underlying patterns and structures of the time series data. It allows analysts to examine and model the individual components for better forecasting and analysis.

5. Heatmaps and Correlation Plots: Heatmaps and correlation plots provide a visual representation of the relationships between multiple time series variables. These plots can help identify correlated or influential variables, facilitating feature selection and model building.

6. Histograms and Density Plots: Histograms and density plots represent the distribution of values within a time series. This helps in identifying the skewness, kurtosis, and potential data transformations required for modeling purposes.

7. Outlier Detection: Outliers can significantly impact the analysis and forecasting accuracy. EDA helps in identifying outliers using techniques such as box plots or statistical methods like the Z-score or interquartile range. Outliers can then be investigated to determine their validity and potential impact on the analysis.

By performing exploratory data analysis, analysts can gain a deeper understanding of the underlying patterns and characteristics of the time series data. It provides insights that guide further analysis, model selection, and feature engineering to develop accurate predictive models.
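As a small illustration of the descriptive statistics and outlier detection steps, the sketch below uses Python's standard `statistics` module and the interquartile range rule. The readings are invented, and the multiplier `k=1.5` is the conventional default rather than a requirement.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return (index, value) pairs lying more than k * IQR outside the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [(i, v) for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical sensor readings with one obvious spike.
readings = [20.0, 21.0, 19.0, 22.0, 20.0, 21.0, 95.0, 20.0, 19.0, 22.0, 21.0, 20.0]

print("mean:  ", round(statistics.mean(readings), 2))
print("median:", statistics.median(readings))
print("stdev: ", round(statistics.stdev(readings), 2))
print("outliers:", iqr_outliers(readings))
```

The gap between the mean and the median already hints at the spike, which the interquartile range rule then pinpoints by index.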

Time Series Forecasting Methods

Time series forecasting is a process of predicting future values or patterns based on historical data. Various methods and models are used to forecast time series data, depending on the specific characteristics and objectives of the analysis. Here are some commonly used time series forecasting methods:

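1. ARIMA (Autoregressive Integrated Moving Average): ARIMA is a popular and widely used forecasting method. It combines autoregressive (AR) and moving average (MA) components with differencing (the "integrated" part). Differencing removes trends so that the AR and MA terms can model the remaining autocorrelation structure. ARIMA models are therefore suited to series that are stationary or can be made stationary through differencing; seasonal patterns require the seasonal extension described under SARIMA below.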

2. Exponential Smoothing: Exponential smoothing methods forecast time series data by assigning exponentially decreasing weights to past observations. There are different variations of exponential smoothing, such as Simple Exponential Smoothing (SES), Holt’s Linear Exponential Smoothing, and Holt-Winters’ Exponential Smoothing that handle different patterns in the data, such as trend and seasonality.

3. AR (Autoregressive) Models: Autoregressive models use the past values of the time series to forecast future values. These models assume that the current value of the time series is linearly related to its previous values. The order of the autoregressive model (represented as AR(p)) specifies the number of past observations used for prediction.

4. MA (Moving Average) Models: Moving average models consider the weighted average of past forecast errors to predict future values. These models assume that the current value of the time series is a function of the past forecast errors. The order of the moving average model (represented as MA(q)) indicates the number of lagged forecast errors considered.

5. SARIMA (Seasonal ARIMA): SARIMA models extend the capabilities of ARIMA models to incorporate seasonality in the data. They include additional seasonal components in the model to account for the repeating patterns observed in the time series.

6. Prophet: Prophet is an open-source forecasting library released by Facebook (now Meta) in 2017. It is designed to handle time series data with various seasonality patterns and offers flexibility in modeling holidays and trend changepoints. Prophet uses an additive regression model with separate components for trend, seasonality, and holidays.

7. Long Short-Term Memory (LSTM) Neural Networks: LSTM networks are a type of recurrent neural network (RNN) well-suited to modeling and predicting the temporal dependencies present in time series data. LSTM networks can capture long-term patterns and handle complex relationships, making them effective for time series forecasting tasks.

8. Ensemble Methods: Ensemble methods combine multiple forecasting models to improve accuracy and robustness. Techniques such as model averaging, weighted averaging, or combining the forecasts using machine learning algorithms like Gradient Boosting or Random Forests can enhance the forecasting performance.

It is important to choose the appropriate forecasting method based on the characteristics of the time series data, such as trends, seasonality, and non-stationarity. Evaluating and comparing the performance of different forecasting methods using appropriate metrics is crucial in selecting the most suitable approach for accurate predictions.
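To illustrate the autoregressive idea from the list above, the following sketch fits an AR(1) model, y_t = c + phi * y_(t-1), by ordinary least squares and produces recursive forecasts. It is a minimal sketch, not a full ARIMA implementation: the function names are hypothetical, and the example series is generated without noise so that the fit recovers the known coefficients.

```python
def fit_ar1(values):
    """Least-squares estimates (c, phi) for y_t = c + phi * y_(t-1)."""
    previous, current = values[:-1], values[1:]
    n = len(previous)
    mean_prev = sum(previous) / n
    mean_curr = sum(current) / n
    sxx = sum((x - mean_prev) ** 2 for x in previous)
    if sxx == 0:
        raise ValueError("cannot fit AR(1) to a constant series")
    sxy = sum((x - mean_prev) * (y - mean_curr) for x, y in zip(previous, current))
    phi = sxy / sxx
    c = mean_curr - phi * mean_prev
    return c, phi


def forecast_ar1(last_value, c, phi, steps):
    """Recursive multi-step forecast: each prediction feeds the next one."""
    forecasts, current = [], last_value
    for _ in range(steps):
        current = c + phi * current
        forecasts.append(current)
    return forecasts


# Noise-free illustrative series generated with c = 2.0 and phi = 0.5.
series = [10.0]
for _ in range(7):
    series.append(2.0 + 0.5 * series[-1])

c, phi = fit_ar1(series)
next_values = forecast_ar1(series[-1], c, phi, steps=3)
print(round(c, 6), round(phi, 6))
print([round(v, 4) for v in next_values])
```

With real, noisy data the estimates would only approximate the underlying coefficients, and a higher order p may be needed.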

Evaluating Time Series Forecasting Models

Evaluating the performance of time series forecasting models is essential to assess their accuracy and reliability. By comparing the predicted values with the actual values, we can determine how well the model performs in capturing the underlying patterns and making accurate forecasts. Here are some commonly used evaluation techniques for time series forecasting models:

1. Mean Absolute Error (MAE): MAE measures the average absolute difference between the predicted values and the actual values. It provides a measure of the model’s ability to make accurate forecasts without considering the direction of the errors. A lower MAE indicates a better-performing model.

2. Mean Squared Error (MSE): MSE calculates the average squared difference between the predicted values and the actual values. Because it squares the differences, it penalizes large errors more heavily than small ones. However, it is expressed in squared units of the original data, making it harder to interpret.

3. Root Mean Squared Error (RMSE): RMSE is the square root of MSE and provides an interpretable metric in the same unit as the original data. It is widely used as an evaluation metric for time series forecasting models. Like MSE, a lower RMSE indicates better predictive performance.

4. Mean Absolute Percentage Error (MAPE): MAPE measures the average percentage difference between the predicted values and the actual values. It is useful for evaluating the accuracy of the forecast relative to the magnitude of the data. However, it is undefined when an actual value is zero and becomes unstable when actual values are close to zero.

5. Percentage of Accuracy: Percentage of Accuracy calculates the percentage of correct predictions within a specified tolerance range. It provides an intuitive measure of how well the model predicts values within an acceptable margin of error.

6. Visual Inspection of Residuals: Residuals are the differences between the predicted and actual values. Visual inspection of residuals can help identify patterns or biases in the model’s forecast. Plots such as residual histograms, box plots, or autocorrelation plots can provide insights into the model’s ability to capture the underlying structure of the time series data.

7. Forecast Error Decomposition: Decomposing the forecast errors into components such as bias, variance, or seasonality can provide a deeper understanding of the model’s performance. This allows us to identify the sources of error and make appropriate adjustments to improve the forecasting accuracy.

It is important to note that the choice of evaluation metric depends on the specific objectives and characteristics of the time series data. Some metrics may be more suitable for certain types of data or forecasting tasks. Additionally, out-of-sample testing or time-aware cross-validation, in which every training window precedes its test window, is recommended to assess the model’s generalization performance and avoid overfitting to the training data.
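The four error metrics listed above are straightforward to compute directly. The sketch below implements them in plain Python; the function names and the sample values are illustrative assumptions, and the MAPE helper deliberately raises an error when an actual value is zero.

```python
import math


def mae(actual, predicted):
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def mse(actual, predicted):
    """Mean squared error (in squared units of the data)."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean squared error (same units as the data)."""
    return math.sqrt(mse(actual, predicted))


def mape(actual, predicted):
    """Mean absolute percentage error, in percent."""
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return 100 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical actual and forecast values.
actual = [100.0, 200.0, 300.0, 400.0]
predicted = [110.0, 190.0, 330.0, 380.0]

print("MAE: ", mae(actual, predicted))
print("MSE: ", mse(actual, predicted))
print("RMSE:", round(rmse(actual, predicted), 3))
print("MAPE:", round(mape(actual, predicted), 3), "%")
```

Comparing MAE and RMSE on the same forecasts is a quick way to see whether a few large errors dominate: the further RMSE sits above MAE, the more uneven the errors are.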

Handling Seasonality and Trends in Time Series Data

Seasonality and trends are common patterns present in time series data that need to be addressed to make accurate forecasts. These patterns can significantly influence the behavior of the data and impact the performance of time series models. Here are some approaches for handling seasonality and trends in time series data:

1. Differencing: Differencing is a technique used to remove trends from the data. It involves computing the differences between consecutive observations to make the time series data stationary. By removing the trend component, differencing allows for better modeling and forecasting of the data. If the time series exhibits seasonality, seasonal differencing can be applied in addition to first-order differencing.

2. Seasonal Adjustment: Seasonal adjustment is the process of removing the seasonal component from the time series data. It aims to isolate the trend and other non-seasonal patterns. Common approaches include X-12-ARIMA and STL decomposition. Holt-Winters exponential smoothing, strictly a forecasting method, also estimates a seasonal component that can be used for this purpose. Seasonal adjustment can help in better understanding the underlying trend and making accurate forecasts.

3. Exogenous Variables: Incorporating exogenous variables can help capture additional factors that influence the time series data, such as economic indicators or weather conditions. By including these variables in the modeling process, we can account for their impact and improve the accuracy of the forecasts.

4. Time Series Decomposition: Time series decomposition separates the time series data into its individual components, including trend, seasonal, and residual components. This enables a deeper understanding of the underlying patterns and can guide the selection of appropriate modeling techniques. Decomposition methods like STL (Seasonal-Trend Decomposition using Loess) or classical decomposition provide insights into the individual components of the time series.

5. Seasonal Models: Seasonal forecasting models are specifically designed to handle data with observed seasonality. Models such as SARIMA (Seasonal ARIMA), seasonal Holt-Winters, or harmonic regression models can capture and incorporate the seasonal patterns in the data. These models consider the seasonality by including seasonal lags or parameters that capture the seasonal behavior.

6. Trend Models: Trend models focus on capturing the underlying long-term trend in the time series data. Models such as linear regression, polynomial regression, or exponential smoothing models can be used to model and forecast the trend component. Trend models are especially useful when the data exhibits a clear upward or downward trend over time.

7. Robust Models: Robust models are designed to handle time series data with irregular or changing patterns. These models can adapt to shifts in the trend, seasonality, or other characteristics. Examples of robust models include robust regression, robust exponential smoothing, or robust ARIMA models.

It is important to note that the approach for handling seasonality and trends depends on the specific characteristics of the time series data and the forecasting requirements. A careful analysis of the data and experimentation with different techniques can help identify the most appropriate approach for addressing seasonality and trends to obtain accurate forecasts.
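The effect of seasonal and first-order differencing can be seen on a small synthetic series. In this sketch the series is constructed as a linear trend plus a pattern that repeats every 4 steps; the helper name `lagged_difference` and all values are assumptions chosen so the result is easy to verify by hand.

```python
def lagged_difference(values, lag=1):
    """Differences between observations that are `lag` steps apart."""
    if lag < 1 or lag >= len(values):
        raise ValueError("lag must satisfy 1 <= lag < len(values)")
    return [values[t] - values[t - lag] for t in range(lag, len(values))]


# Synthetic series: linear trend (slope 2) plus a seasonal pattern of period 4.
season = [5, -3, 1, -3]
series = [2 * t + season[t % 4] for t in range(12)]

# Seasonal differencing at lag 4 cancels the repeating pattern,
# leaving only the trend's contribution over one season (2 * 4 = 8).
deseasonalized = lagged_difference(series, lag=4)

# First-order differencing then removes what is left of the trend.
detrended = lagged_difference(deseasonalized, lag=1)

print(series)
print(deseasonalized)
print(detrended)
```

Each differencing pass shortens the series by `lag` observations, which is worth keeping in mind when the available history is short.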

Advanced Techniques for Time Series Analysis

Time series analysis encompasses a wide range of advanced techniques that go beyond traditional methods to extract deeper insights and improve forecasting accuracy. These advanced techniques leverage sophisticated algorithms and models to handle complex patterns and relationships within the time series data. Here are some notable advanced techniques for time series analysis:

1. Machine Learning: Machine learning algorithms, such as support vector machines (SVM), random forests, or neural networks, can be applied to time series data. These algorithms can capture nonlinear relationships, handle large amounts of data, and adapt to changing patterns. Machine learning techniques can be used for feature selection, anomaly detection, and forecasting tasks.

2. Deep Learning: Deep learning models, particularly recurrent neural networks (RNNs) and their variant, long short-term memory (LSTM) networks, are effective for time series analysis. RNNs can capture temporal dependencies, but plain RNNs often struggle to learn long-range patterns because gradients tend to vanish during training. LSTM networks address this with gated memory cells, which let them retain information over longer horizons and capture complex relationships in time series data. They are particularly useful when faced with sequential data or time series with variable length.

3. Wavelet Analysis: Wavelet analysis is a signal processing technique that decomposes time series data into different frequency components. It can capture localized patterns and changes in the data across multiple scales. Wavelet analysis is useful for denoising, trend detection, and feature extraction in time series data.

4. State Space Models: State space models (SSM) represent time series data as a series of unobservable states and observable measurements. These models can handle complex dynamics, random effects, and latent variables. Widely used examples include linear Gaussian state space models, whose states are estimated with the Kalman filter, and hidden Markov models (HMM); both can be extended to incorporate seasonality and other temporal patterns.

5. Bayesian Time Series Analysis: Bayesian methods provide a probabilistic framework for time series analysis. These methods combine prior knowledge with observed data to estimate posterior distributions of the model parameters. Bayesian time series models, such as dynamic linear models (DLM), Bayesian structural time series (BSTS), or Gaussian processes, offer flexibility, uncertainty quantification, and the ability to update forecasts as new data becomes available.

6. Functional Data Analysis: Functional data analysis treats time series data as continuous functions rather than discrete observations. It focuses on characterizing the overall trajectory and shape of the data. Functional data analysis methods, such as functional principal component analysis (FPCA) or functional regression, provide insights into the underlying dynamics of time series data.

7. Ensemble Forecasting: Ensemble forecasting combines multiple models or variations of a single model to improve forecasting accuracy. By aggregating the forecasts from different models, ensemble methods can reduce individual model biases, capture diverse patterns, and provide robust forecasts. Techniques such as model averaging, weighted averaging, or stacking can be used for ensemble forecasting.

These advanced techniques offer powerful tools to tackle challenging aspects of time series analysis, providing more accurate forecasts, identification of complex patterns, and improved understanding of the underlying dynamics within the data. Understanding the strengths and limitations of these techniques is crucial in selecting the most suitable approach for specific time series analysis tasks.
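As one concrete instance of the state space idea, here is a minimal sketch of a scalar Kalman filter for a local level model, where a hidden level follows a random walk and each observation is the level plus noise. The parameter names (`process_var`, `obs_var`, `init_mean`, `init_var`) and the sample observations are assumptions for illustration; real applications would estimate the variances from data.

```python
def kalman_local_level(observations, process_var, obs_var, init_mean, init_var):
    """Filtered estimates of a random-walk level observed with noise."""
    mean, var = init_mean, init_var
    filtered = []
    for z in observations:
        # Predict: the level is carried forward, its uncertainty grows.
        var_pred = var + process_var
        # Update: blend prediction and observation using the Kalman gain.
        gain = var_pred / (var_pred + obs_var)
        mean = mean + gain * (z - mean)
        var = (1 - gain) * var_pred
        filtered.append(mean)
    return filtered


# Hypothetical noisy measurements of a level near 10.
observations = [10.2, 9.7, 10.1, 9.9, 10.4, 9.8]
estimates = kalman_local_level(
    observations, process_var=0.01, obs_var=0.25, init_mean=10.0, init_var=1.0
)
print([round(e, 3) for e in estimates])
```

A larger `obs_var` relative to `process_var` makes the filter trust its own prediction more, which yields smoother estimates.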

Challenges and Limitations of Time Series Data in Machine Learning

While time series data provides valuable insights and enables accurate predictions, it presents unique challenges and limitations in the context of machine learning. Understanding these challenges is crucial for effectively working with time series data and developing robust models. Here are some notable challenges and limitations of time series data in machine learning:

1. Temporal Dependency: Time series data exhibits temporal dependency, meaning that consecutive observations are correlated. This violates the assumption of independence in many machine learning algorithms and requires special consideration when selecting and applying models. Techniques such as autoregressive models or recurrent neural networks are designed to capture this temporal dependency effectively.

2. Seasonality and Trends: Time series data often contains seasonal patterns, trends, or other cyclical components. Modeling and handling these patterns accurately can be challenging and require specialized techniques such as SARIMA models or decomposition methods. Failure to account for seasonality and trends can lead to poor forecasting performance.

3. Data Sparsity: Time series data can be sparse, meaning that it may have missing values or irregularly spaced observations. Missing values introduce challenges in modeling and forecasting, and appropriate strategies for filling or handling missing values need to be employed. Irregular spacing of observations can make it difficult to apply certain algorithms that assume regular time intervals.

4. Data Stationarity: Stationarity is an essential assumption in many time series models. However, real-world time series data often exhibits non-stationarity, where the mean, variance, or other statistical properties change over time. Handling non-stationary data requires techniques such as differencing or decomposition to make the data stationary before modeling.

5. Overfitting: Time series data with limited observations and complex patterns can be prone to overfitting. Overfitting occurs when a model captures noise or idiosyncrasies in the data instead of the underlying patterns. Regularization techniques, time-aware cross-validation, and careful selection of model complexity can help mitigate the risk of overfitting and improve generalization performance.

6. Data Quality: Time series data can suffer from quality issues such as outliers, measurement errors, or missing values. These issues can impact the accuracy of models and forecasts. Proper data cleaning, outlier detection, and imputation techniques are necessary to ensure the integrity and reliability of the time series data.

7. Forecast Uncertainty: Time series forecasting involves extrapolating beyond the observed data into the future, and the uncertainty typically grows with the forecast horizon. Accurately quantifying and expressing forecast uncertainty is challenging but critical for decision-making and risk assessment. Bayesian approaches or ensemble techniques can help incorporate uncertainty into time series forecasts.

It is important to be aware of these challenges and limitations when working with time series data in machine learning. By understanding these factors, employing appropriate modeling techniques, and considering the specific characteristics of the data, it is possible to overcome these challenges and develop accurate and reliable models for time series analysis and forecasting tasks.
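Because of temporal dependency, validation splits for time series should keep every training window ahead of its test window. The sketch below generates expanding-window (rolling-origin) splits over index positions; the function name and parameters are hypothetical, and libraries such as scikit-learn offer a comparable utility.

```python
def expanding_window_splits(n_samples, n_splits, test_size):
    """Yield (train_indices, test_indices); training always precedes testing."""
    first_test_start = n_samples - n_splits * test_size
    if first_test_start < 1:
        raise ValueError("not enough samples for the requested splits")
    for k in range(n_splits):
        test_start = first_test_start + k * test_size
        train_indices = list(range(test_start))
        test_indices = list(range(test_start, test_start + test_size))
        yield train_indices, test_indices


splits = list(expanding_window_splits(n_samples=10, n_splits=3, test_size=2))
for train_indices, test_indices in splits:
    print("train:", train_indices, "test:", test_indices)
```

Each successive split trains on a longer history, which mimics how a deployed model would be refitted as new observations arrive.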