What is Cross Entropy Loss?

Cross Entropy Loss is a key concept in machine learning, particularly in the field of classification problems. It is a measurement of how well a particular classification model predicts the outcome of a given set of data. It quantifies the difference between the predicted probability distribution and the actual probability distribution.

To understand Cross Entropy Loss, it is essential to grasp the notion of entropy. Entropy defines the amount of uncertainty or information contained within a set of data. Higher entropy indicates higher uncertainty, while lower entropy signifies more predictable and structured information.

Cross Entropy Loss is derived from the concept of entropy and is commonly used in machine learning models that involve discrete outcomes, such as classification tasks. It measures the dissimilarity between the predicted probabilities assigned by the model and the actual labels of the data points.

By minimizing the Cross Entropy Loss, the model aims to adjust its parameters to maximize the likelihood of the correct classification. Essentially, the goal is to find the set of parameters that leads to the most accurate predictions.

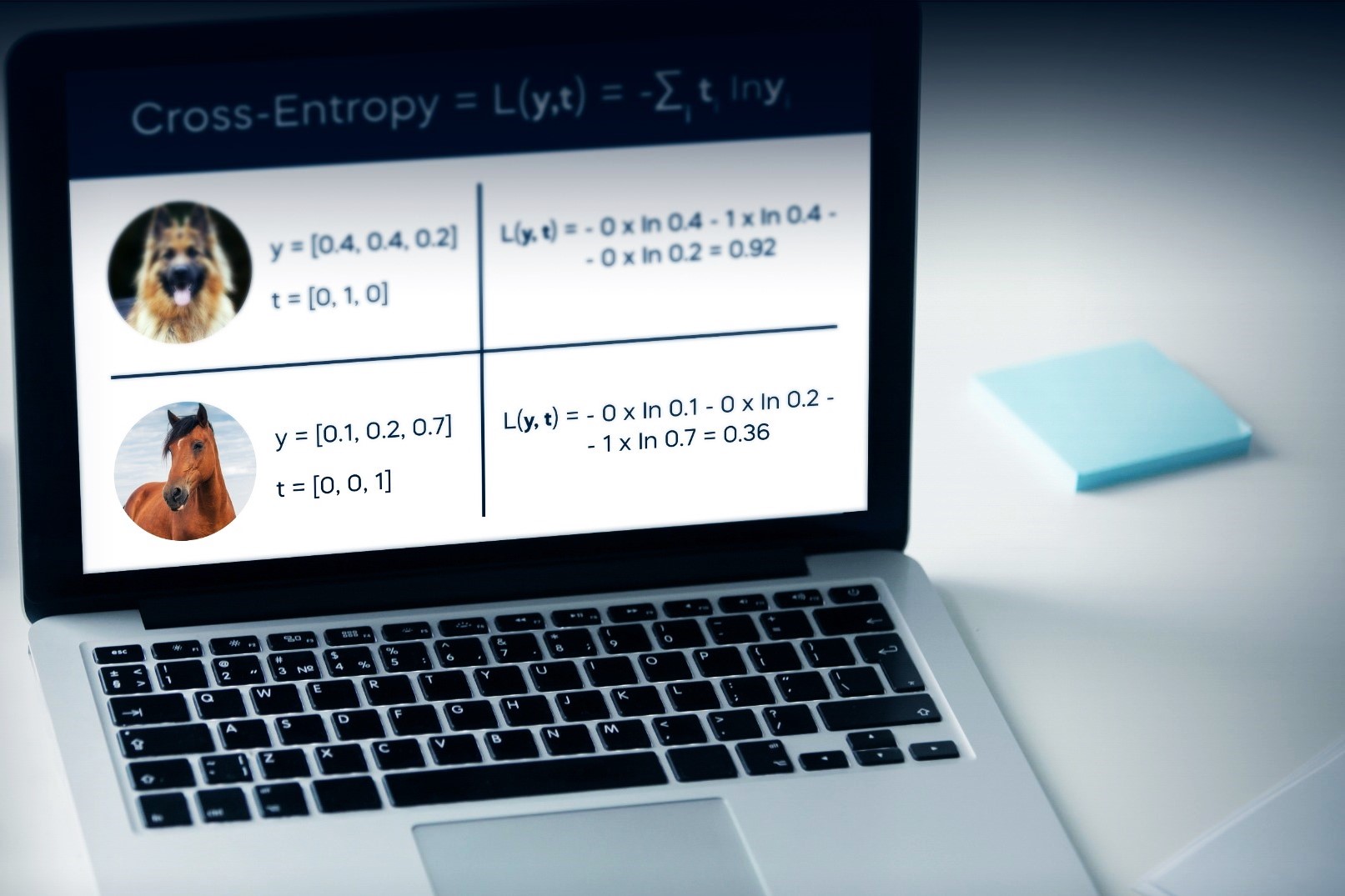

The Cross Entropy Loss is calculated using the formula:

CE = -∑(y * log(p) + (1-y) * log(1-p))

Where CE represents the Cross Entropy Loss, y is the actual label (0 or 1), and p is the predicted probability assigned by the model.

It is essential to note that Cross Entropy Loss is a positive scalar value, and a lower value indicates a better prediction performance. A perfect model would have a Cross Entropy Loss of 0, indicating that the predicted probabilities align perfectly with the actual labels.

However, in practice, it is rare to achieve a Cross Entropy Loss of 0. Instead, machine learning models strive to minimize the loss as much as possible to improve their predictive capabilities.

In the next section, we will explore how Cross Entropy Loss is utilized in classification problems and its significance in machine learning applications.

Understanding Entropy and Cross Entropy

To grasp the concept of Cross Entropy Loss, it is important to have a clear understanding of entropy and how it relates to machine learning.

Entropy, in the context of information theory, refers to the measure of uncertainty or randomness in a set of data. It is a fundamental concept that helps quantify the amount of information contained within a dataset. In machine learning, entropy is often used to assess the purity or impurity of a decision tree or to evaluate the performance of classification models.

In the context of binary classification, where there are two possible outcomes (e.g., true or false, positive or negative), the entropy is calculated using the following formula:

Entropy = -p * log2(p) – (1 – p) * log2(1 – p)

Where p represents the probability of one of the outcomes. A value of p close to 0 indicates high uncertainty and high entropy, while a value of p close to 1 signifies low uncertainty and low entropy.

Now, let’s delve into cross entropy. Cross entropy is a modification of entropy that compares the predicted probability distribution of a model with the actual probability distribution. In classification tasks, it allows us to measure the dissimilarity between the predicted and true labels.

Mathematically, the cross entropy between two probability distributions is calculated using the formula:

Cross Entropy = -∑(y * log(p) + (1 – y) * log(1 – p))

Here, y represents the actual label (either 0 or 1), and p denotes the predicted probability assigned by the model. The cross entropy value ranges from 0 to infinity, where a lower value indicates better model performance.

When the predicted and true labels perfectly match, the cross entropy becomes 0, indicating that the model’s predictions align perfectly with the actual outcomes. Conversely, as the predictions deviate from the true labels, the cross entropy value increases, signifying a larger discrepancy between the predicted and actual distributions.

Cross Entropy in Machine Learning

Cross Entropy is a widely used concept in machine learning, particularly in the context of classification problems. It serves as a fundamental loss function that guides the training process of models to make accurate predictions.

In machine learning, the goal is to develop models that can effectively classify data into different categories. Cross Entropy plays a crucial role in achieving this objective by quantifying the discrepancy between predicted probabilities and true labels.

When training a classification model, the parameters are adjusted iteratively to minimize the Cross Entropy Loss. This optimization process, often done through algorithms like gradient descent, helps the model learn the patterns and relationships within the data.

By minimizing the Cross Entropy Loss, the model learns to assign higher probabilities to the correct labels and lower probabilities to the incorrect labels. In other words, the model becomes more confident in its predictions, leading to improved performance on unseen data.

Cross Entropy Loss is preferred over other loss functions in classification tasks because it is more sensitive to the differences between predicted and actual probabilities. It penalizes larger deviations, allowing the model to focus on areas that require improvement.

Moreover, Cross Entropy Loss is suited for multi-class classification problems as well. In such scenarios, the loss is calculated across all classes, providing a comprehensive measure of the overall prediction accuracy.

It is worth noting that Cross Entropy Loss is not limited to classification tasks alone. It can also be applied to other machine learning domains, such as natural language processing, where the objective is to predict the probability distribution of the next word in a sentence.

Overall, Cross Entropy serves as a valuable tool in machine learning to optimize models for accurate classification. By quantifying the dissimilarity between predicted and true labels, it guides the learning process, leading to more robust and reliable predictions.

How Cross Entropy is Used in Classification Problems

Cross Entropy is widely used as a loss function in classification problems due to its effectiveness in guiding the training process and improving the accuracy of predictions. Let’s explore how Cross Entropy is utilized in the context of classification tasks.

In classification problems, the goal is to assign a specific label or category to each input data point. For example, predicting whether an email is spam or not, identifying the sentiment of a customer review, or classifying images into different object categories.

To train a classification model, we need a way to measure the discrepancy between the predicted probabilities and the true labels. This is where Cross Entropy Loss comes into play.

When encountering a classification problem, the model predicts the probabilities of each possible outcome for a given input. These probabilities are then compared with the true labels to calculate the Cross Entropy Loss.

The model aims to minimize the Cross Entropy Loss by adjusting its parameters. By doing so, it learns to assign higher probabilities to the correct labels and lower probabilities to incorrect ones.

For example, in a binary classification problem (with two possible labels), the Cross Entropy Loss is calculated as:

CE = -∑(y * log(p) + (1 – y) * log(1 – p))

Where y represents the actual label (either 0 or 1), and p denotes the predicted probability assigned by the model.

The model adjusts its parameters through optimization algorithms like gradient descent, which iteratively updates the parameters to minimize the Cross Entropy Loss.

By using Cross Entropy Loss as the objective function, the model learns to make more accurate predictions. It understands the relationship between the input features and the corresponding labels, ultimately improving its ability to classify new, unseen data.

Furthermore, Cross Entropy Loss can be extended to multi-class classification problems as well. In these scenarios, the loss is calculated across all possible classes, providing a comprehensive measure of the model’s overall accuracy.

Overall, Cross Entropy Loss forms a crucial component in classification problems, allowing models to refine their predictions and make accurate classifications. It serves as a guiding force in the training process, leading to improved performance in many real-world applications.

Calculating Cross Entropy Loss

The calculation of Cross Entropy Loss involves comparing the predicted probabilities assigned by a model with the true labels of the data points. This calculation provides a measure of the dissimilarity between the predicted and actual distributions.

To understand how Cross Entropy Loss is computed, let’s consider a binary classification problem with two possible outcomes (e.g., true or false, positive or negative). In this case, the Cross Entropy Loss can be calculated using the following formula:

CE = -∑(y * log(p) + (1 – y) * log(1 – p))

Here, CE represents the Cross Entropy Loss, y is the actual label (either 0 or 1), and p denotes the predicted probability assigned by the model.

To break it down further, when the actual label (y) is 1, the first term of the equation, y * log(p), will contribute to the loss calculation. If the predicted probability (p) is close to 0 (indicating a low confidence in the positive outcome), the loss will be high. Conversely, if the predicted probability is close to 1 (indicating a high confidence in the positive outcome), the loss will be low.

Similarly, when the actual label is 0, the second term, (1 – y) * log(1 – p), will contribute to the loss calculation. If the predicted probability is close to 1 (indicating a low confidence in the negative outcome), the loss will be high. Conversely, if the predicted probability is close to 0 (indicating a high confidence in the negative outcome), the loss will be low.

The Cross Entropy Loss is a positive scalar value. A lower value indicates a better prediction performance, as it signifies a smaller discrepancy between the predicted and actual distributions. In an ideal scenario, where the model’s predictions perfectly match the true labels, the Cross Entropy Loss would be 0.

It is important to note that the Cross Entropy Loss calculation can be extended to multi-class classification problems as well. In these cases, the loss is calculated across all possible classes and provides an overall measure of the model’s performance.

By minimizing the Cross Entropy Loss, typically through optimization algorithms like gradient descent, the model learns to adjust its parameters and improve its predictive capabilities.

In the next section, we will explore the properties of Cross Entropy Loss and its implications in the field of machine learning.

Properties of Cross Entropy Loss

The Cross Entropy Loss has several properties that make it a valuable tool in the realm of machine learning. Understanding these properties can help us better appreciate the significance of Cross Entropy Loss in model optimization and performance evaluation.

1. Non-negative value: The Cross Entropy Loss is always a non-negative scalar value. This means that the loss can never be negative, indicating that it serves as a measure of dissimilarity rather than similarity between the predicted and true distributions.

2. Asymmetry: Cross Entropy Loss is not symmetric. In other words, the loss value will differ depending on whether the predicted probability is higher or lower than the corresponding true label. This asymmetry ensures that larger deviations from the true label are penalized more heavily in the loss calculation.

3. High Loss for Incorrect Predictions: When the predicted probability deviates significantly from the true label, the Cross Entropy Loss will be high. This means that the model is penalized for making incorrect predictions, motivating it to adjust its parameters to improve accuracy.

4. Additivity: For multi-class classification problems, the Cross Entropy Loss can be calculated across all possible classes and summed to obtain an overall measure of the model’s performance. This additivity property allows for comprehensive evaluation of the model’s accuracy across various categories.

5. Continuous and Differentiable: The Cross Entropy Loss function is continuous and differentiable, allowing it to be used with optimization algorithms like gradient descent, which rely on these properties to iteratively update model parameters.

6. Sensitivity to Probabilities: Cross Entropy Loss is sensitive to the predicted probabilities assigned by the model. It places greater emphasis on larger deviations from the true labels, allowing the model to focus on areas that require improvement and adjust its predictions accordingly.

Understanding the properties of Cross Entropy Loss is essential when optimizing machine learning models. By leveraging these properties, practitioners can effectively guide the training process and evaluate the performance of classification models.

In the next section, we will explore the benefits and limitations of using Cross Entropy Loss in machine learning applications.

Benefits and Limitations of Cross Entropy Loss

Cross Entropy Loss offers several benefits in machine learning, making it a widely used loss function in classification tasks. However, it also comes with certain limitations that need to be considered. Let’s explore both the benefits and limitations of Cross Entropy Loss.

Benefits:

- Effective in classification tasks: Cross Entropy Loss provides a powerful tool for training classification models. It measures the dissimilarity between predicted and true distributions, allowing the model to learn and improve its predictive capabilities.

- Sensitive to differences: Cross Entropy Loss is highly sensitive to deviations between predicted and true probabilities. This property ensures that the model focuses on areas with larger discrepancies, leading to more accurate predictions.

- Allows for multi-class classification: Cross Entropy Loss can be extended to handle multi-class classification problems. By summing the loss across all classes, it provides an overall measure of the model’s performance, allowing for comprehensive evaluation.

- Supports optimization algorithms: The continuous and differentiable nature of Cross Entropy Loss allows it to be used effectively with optimization algorithms like gradient descent. This enables efficient parameter updates and model optimization.

Limitations:

- Imbalanced class distribution: In cases where the class distribution in the data is imbalanced, Cross Entropy Loss may prioritize the majority class. This can lead to biased predictions and a lower accuracy for the minority class.

- Insensitive to confidence levels: Cross Entropy Loss treats all incorrect predictions equally, regardless of the confidence level of the prediction. This means that a highly confident incorrect prediction is considered equally as a partially confident incorrect prediction.

- Vulnerability to outliers: Cross Entropy Loss can be sensitive to outliers in the data. Outliers with extreme probabilities can significantly impact the loss calculation, potentially distorting the model’s optimization process.

- Assumes independence of predictions: Cross Entropy Loss assumes that the predicted probabilities assigned to each class are independent of each other. However, in certain cases, the classes may have dependencies or relationships that are not captured by this assumption.

Despite these limitations, Cross Entropy Loss remains a popular and effective loss function in many classification problems. It offers valuable insights into the model’s performance and guides the learning process, leading to improved predictive capabilities.

In the next section, we will provide an example to illustrate how Cross Entropy Loss is applied in practice.

An Example of Cross Entropy Loss in Action

To illustrate the application of Cross Entropy Loss, let’s consider an example of a binary classification problem: predicting whether an email is spam or not.

In this scenario, let’s assume we have a dataset of 1000 emails, labeled as either spam (1) or not spam (0). We train a machine learning model to classify new emails as spam or not based on various features.

After training the model, we evaluate its performance using Cross Entropy Loss as the loss function. The model predicts the probabilities of each email being spam, and we compare these predictions with the true labels to calculate the loss.

Let’s say the model predicts the following probabilities for the first five emails:

- Email 1: Predicted probability of spam (p) = 0.8, True label (y) = 1

- Email 2: p = 0.2, y = 1

- Email 3: p = 0.6, y = 0

- Email 4: p = 0.9, y = 1

- Email 5: p = 0.3, y = 0

Using the formula for Cross Entropy Loss, we can calculate the loss for each email and then take the average to obtain the overall loss:

CE1 = -((1 * log(0.8)) + (1 – 1) * log(1 – 0.8))≈0.223

CE2 = -((1 * log(0.2)) + (1 – 1) * log(1 – 0.2))≈1.609

CE3 = -((0 * log(0.6)) + (1 – 0) * log(1 – 0.6))≈0.916

CE4 = -((1 * log(0.9)) + (1 – 1) * log(1 – 0.9))≈0.105

CE5 = -((0 * log(0.3)) + (1 – 0) * log(1 – 0.3))≈1.204

Overall Cross Entropy Loss = (CE1 + CE2 + CE3 + CE4 + CE5) / 5 ≈ 0.811

In this example, the overall Cross Entropy Loss is approximately 0.811, indicating the average dissimilarity between the predicted and true distributions. A lower value would suggest better predictive performance.

The model can then use methods like gradient descent to adjust its parameters and minimize the Cross Entropy Loss, thereby improving its ability to accurately classify emails as spam or not.

This example demonstrates how Cross Entropy Loss provides a quantitative measure of the model’s performance in classification problems. By optimizing this loss, the model can enhance its predictive capabilities and make more accurate predictions.

In the next section, we will explore the difference between Cross Entropy Loss and Mean Squared Error (MSE) as loss functions.

Cross Entropy vs. Mean Squared Error

Both Cross Entropy Loss and Mean Squared Error (MSE) are popular loss functions used in different types of machine learning problems. While they serve similar purposes, there are distinct differences between the two. Let’s explore the key contrasts between Cross Entropy and MSE.

Cross Entropy Loss:

Cross Entropy Loss is commonly used in classification problems, particularly when dealing with discrete outcomes. It quantifies the dissimilarity between predicted probabilities and true labels, guiding the model to make accurate predictions.

One main advantage of Cross Entropy Loss is that it is sensitive to the differences between predicted and true probabilities. It penalizes larger deviations, allowing the model to focus on areas that require improvement.

Cross Entropy Loss is well-suited for multi-class classification tasks as well, as it can be calculated across all possible classes, providing an overall evaluation of the model’s performance.

Mean Squared Error (MSE):

MSE, on the other hand, is commonly used in regression problems, where the goal is to predict continuous values. It measures the average squared difference between predicted and true values, evaluating the overall accuracy of the model’s predictions.

Unlike Cross Entropy Loss, which focuses on probability distributions, MSE calculates the discrepancy between actual values and the predictions themselves. This difference makes it a suitable choice for problems where magnitude and proximity of the predicted and true values are important.

MSE is sensitive to outliers, as the squared nature of the loss heavily penalizes large differences between predictions and true values. It may lead to the model being overly affected by extreme values in the dataset.

The choice between Cross Entropy Loss and MSE depends on the nature of the problem. If the goal is to make classification predictions with discrete outcomes, Cross Entropy Loss is more appropriate. However, if the objective is regression and predicting continuous values, MSE is typically the preferred choice.

It is worth noting that there are variations and alternative loss functions available for different scenarios, and the choice depends on the specific requirements and characteristics of the problem at hand.

In the next section, we will explore some tips for effectively working with Cross Entropy Loss in machine learning applications.

Tips for Working with Cross Entropy Loss

When working with Cross Entropy Loss in machine learning applications, there are several tips that can help improve model performance and optimize the training process. Let’s explore some of these tips:

1. Normalize Input Data: Standardize or normalize the input features before training the model. This can help prevent biases in the predictions and ensure that the model converges faster during the training process.

2. One-Hot Encoding: For multi-class classification problems, consider using one-hot encoding for the true labels. This ensures that the Cross Entropy Loss is calculated correctly across all classes.

3. Class Weighting: Handle imbalanced class distributions by applying class weighting. Assign higher weights to the minority class or samples to alleviate the bias towards the majority class during training and improve performance on the underrepresented class.

4. Regularization: Incorporate regularization techniques like L1 or L2 regularization to prevent overfitting. Regularization helps control the model’s complexity and encourages generalization to unseen data.

5. Learning Rate Tuning: Experiment with different learning rates during training to find an optimal value. A learning rate that is too large may cause the model to overshoot the minimum, while a learning rate that is too small may slow down the convergence.

6. Early Stopping: Implement early stopping to prevent overfitting and save computational resources. Monitor the validation loss during training and stop the process if the loss does not improve for a certain number of epochs.

7. Additional Evaluation Metrics: Alongside Cross Entropy Loss, consider using additional evaluation metrics like accuracy, precision, recall, or F1 score to gain a more comprehensive understanding of the model’s performance.

8. Model Selection: Compare the performance of different models by evaluating their Cross Entropy Loss and other metrics on a validation set. Select the model that demonstrates the lowest loss and the best overall performance.

9. Increasing Model Capacity: If your model is underperforming, consider increasing its capacity by adding more layers or neurons. This can help the model learn more complex patterns and improve its predictive capabilities.

10. Regular Monitoring and Retraining: Periodically evaluate the model’s performance on new data and consider retraining the model if necessary. This ensures that the model remains up-to-date and continues to perform well in evolving circumstances.

By following these tips, practitioners can enhance the effectiveness of their machine learning models when using Cross Entropy Loss. These strategies promote better training, improve model accuracy, and help achieve better predictions in classification tasks.