Early Computer Devices

Before the invention of the electronic computer, several mechanical and electromechanical devices laid the foundation for modern computing. These early computer devices, although cumbersome and limited in their capabilities, played a crucial role in paving the way for future technological advancements.

One such device was the abacus, which is believed to have originated in ancient Mesopotamia. This simple counting tool consisted of a series of rods or wires, each holding a set of movable beads. By manipulating the beads, users could perform basic arithmetic calculations.

Another important early device was the slide rule, which was widely used by mathematicians and engineers until the advent of electronic calculators. Developed in the 17th century, the slide rule used sliding logarithmic scales to let users multiply, divide, and find roots quickly, though only to a few significant digits.
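The slide rule’s speed comes from logarithms: adding the logarithms of two numbers gives the logarithm of their product, so sliding one logarithmic scale along another turns multiplication into addition. The short Python sketch below illustrates that idea. The function name and the three-digit rounding are illustrative assumptions meant to mimic how precisely a physical rule can be read, not a model of any particular instrument.

```python
import math


def slide_rule_multiply(a: float, b: float, digits: int = 3) -> float:
    """Multiply two positive numbers the way a slide rule does: add logarithms."""
    if a <= 0 or b <= 0:
        raise ValueError("logarithmic scales only cover positive numbers")
    # Sliding one scale along the other adds the lengths log10(a) and log10(b).
    total_length = math.log10(a) + math.log10(b)
    product = 10 ** total_length
    # A physical rule can only be read to a few significant digits,
    # so round the result to mimic that limited precision (an assumption).
    return float(f"{product:.{digits}g}")
```

Calling `slide_rule_multiply(1.23, 4.56)` gives the product rounded to three significant digits, which is roughly the precision an engineer could expect from a typical rule.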

Charles Babbage, often referred to as the “father of computing,” made significant contributions to early computer development. In the 19th century, he designed the Difference Engine, a mechanical device for tabulating polynomial functions by repeated addition. Although no complete Difference Engine was built during his lifetime, Babbage’s ideas laid the groundwork for future computer designs.
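The Difference Engine takes its name from the method of finite differences, which produces successive values of a polynomial using nothing but addition. The Python sketch below shows the principle under simple assumptions: the function name and the list-of-registers layout are illustrative, and the code models the arithmetic only, not Babbage’s mechanism.

```python
def tabulate_by_differences(initial_values, steps):
    """Tabulate a polynomial using only additions.

    initial_values holds [f(0), first difference, second difference, ...].
    For a polynomial of degree n, the n-th difference is constant.
    """
    registers = list(initial_values)  # one column per difference order
    table = []
    for _ in range(steps):
        table.append(registers[0])
        # Add each higher-order difference into the column before it.
        # Going in ascending order means each column sees the old value
        # of the column after it, as the method requires.
        for i in range(len(registers) - 1):
            registers[i] += registers[i + 1]
    return table


# f(x) = x*x + x + 41 starts at 41, with first difference 2 and second difference 2.
values = tabulate_by_differences([41, 2, 2], 5)
```

Here `values` holds f(0) through f(4), and no multiplication was needed to produce them, which is what made the method suitable for a machine built from adding wheels.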

Following Babbage’s work, Ada Lovelace, a mathematician and writer, published a set of notes in 1843 suggesting that such machines could manipulate symbols and not just numbers. The notes include a worked procedure for computing Bernoulli numbers on the Analytical Engine, which is why she is often described as the world’s first computer programmer.

In the late 19th and early 20th centuries, numerous calculating and tabulating machines were invented, such as the electromechanical punched-card tabulators developed by Herman Hollerith for the 1890 US Census. These machines used punched cards to store and process data, greatly improving efficiency in data analysis.

In the 1930s and 1940s, electromechanical computers emerged, using relays, switches, and rotating shafts to perform calculations. One notable example is the Harvard Mark I, a massive electromechanical device completed in 1944 and used during World War II to aid in military calculations.

While these early computer devices were limited in terms of processing power and storage capacity, they played an instrumental role in laying the groundwork for the electronic computers that would revolutionize the world.

Next, we turn to Babbage’s Analytical Engine, a critical milestone on the path toward electronic computing.

The Analytical Engine

The Analytical Engine, conceived by Charles Babbage in the 19th century, was a groundbreaking invention that can be considered a precursor to the modern electronic computer. Babbage’s designs for the Analytical Engine incorporated concepts that would revolutionize computing and lay the foundation for future technological advancements.

The Analytical Engine was designed to be a general-purpose mechanical computer that could perform complex calculations. It featured several key components, including a memory unit called the “store” that held numbers and intermediate results, a processing unit known as the “mill” that performed arithmetic operations, and input and output devices. Instructions were supplied separately on punched cards rather than held in the store.

One of the remarkable features of the Analytical Engine was its use of punched cards for input. These cards, borrowed from the Jacquard loom, which used them to control weaving patterns, supplied both data and instructions to the machine. Babbage also planned output devices, including a printer and a card punch.

Babbage’s design for the Analytical Engine also included the concept of a “control unit” that would manage the execution of instructions and coordinate the various components of the machine. This concept of a central control unit would become a fundamental aspect of modern computer architecture.

Another groundbreaking aspect of the Analytical Engine was its use of a program to control its operation. Babbage envisioned a system where users could prepare programs on punched cards to instruct the machine on specific tasks, including repeating steps and choosing between alternatives based on earlier results. This concept, known as “programmability,” was a revolutionary idea that anticipated the programmable computers of the 20th century.

Unfortunately, due to various challenges, including financial constraints and the complexity of the design, the Analytical Engine was never fully built during Babbage’s lifetime. However, his visionary ideas and concepts influenced future inventors and engineers, who would bring his vision to life in the form of electronic computers.

The Atanasoff-Berry Computer

The Atanasoff-Berry Computer, or ABC, is considered one of the earliest electronic digital computers. Conceived and built by physicist John Atanasoff and his student Clifford Berry in the late 1930s and early 1940s, the ABC laid the foundation for the development of modern computing.

The ABC utilized binary arithmetic and electronic switches known as vacuum tubes to perform calculations. It employed a combination of mechanical and electronic components to store data and perform operations, making it a significant departure from earlier mechanical computing machines.

One of the key innovations of the ABC was the use of binary representation for numbers. By using only two digits, 0 and 1, the ABC made calculations more efficient and simplified the hardware required for computation. This binary system would later become the foundation of digital computing and is still used in modern computers today.
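To see why two digits simplify the hardware, note that adding binary numbers needs only a handful of logical operations per digit position. The Python sketch below adds two binary strings one digit at a time using XOR, AND, and OR, the same operations a chain of one-bit adders performs. It illustrates binary arithmetic in general; the function name is an assumption, and the code does not reproduce the ABC’s actual circuits.

```python
def add_binary(a_bits: str, b_bits: str) -> str:
    """Add two binary strings digit by digit, as a chain of one-bit adders would."""
    width = max(len(a_bits), len(b_bits))
    a_bits, b_bits = a_bits.zfill(width), b_bits.zfill(width)
    carry = 0
    result = []
    # Work from the least significant (rightmost) digit to the most significant.
    for a_ch, b_ch in zip(reversed(a_bits), reversed(b_bits)):
        a, b = int(a_ch), int(b_ch)
        result.append(str(a ^ b ^ carry))       # sum bit for this position
        carry = (a & b) | (carry & (a ^ b))     # carry into the next position
    if carry:
        result.append("1")
    return "".join(reversed(result))


total = add_binary("1011", "0110")  # 11 + 6 in binary
```

Every step uses only the values 0 and 1, so each digit position can be built from simple on/off switching elements rather than ten-state decimal wheels.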

Another notable feature of the ABC was its parallel arithmetic. The machine was built for one job, solving systems of simultaneous linear equations, and it was not programmable. To speed up that job, it contained a bank of 30 add-subtract units that operated on 30 numbers at the same time. This early use of parallelism allowed for faster computation than a single arithmetic unit could provide.

The ABC also introduced the concept of regenerative memory, which allowed the computer to store and retrieve information electronically. This memory system used capacitors to store and maintain data, a significant advancement over earlier mechanical storage methods.

Despite its innovative design, the ABC never became fully reliable: its arithmetic worked, but the card-based system for holding intermediate results produced occasional errors. Work stopped in 1942 when Atanasoff left for wartime research, and the machine was later dismantled. However, the ideas and concepts developed by Atanasoff and Berry influenced later computer pioneers.

The significance of the ABC was recognized later when a legal battle ensued over the patent for the electronic computer. In a landmark decision in 1973, a federal court in Honeywell v. Sperry Rand invalidated the ENIAC patent held by John Mauchly and J. Presper Eckert, finding that key ideas in it had been derived from Atanasoff’s work on the ABC. The ruling is the main reason Atanasoff and Berry are now widely credited as pioneers of electronic digital computing.

The Atanasoff-Berry Computer may not have achieved widespread recognition or commercial success, but its contributions to the field of computing should not be underestimated. It was a crucial milestone in the journey towards the creation of the computers we rely on today.

The ENIAC

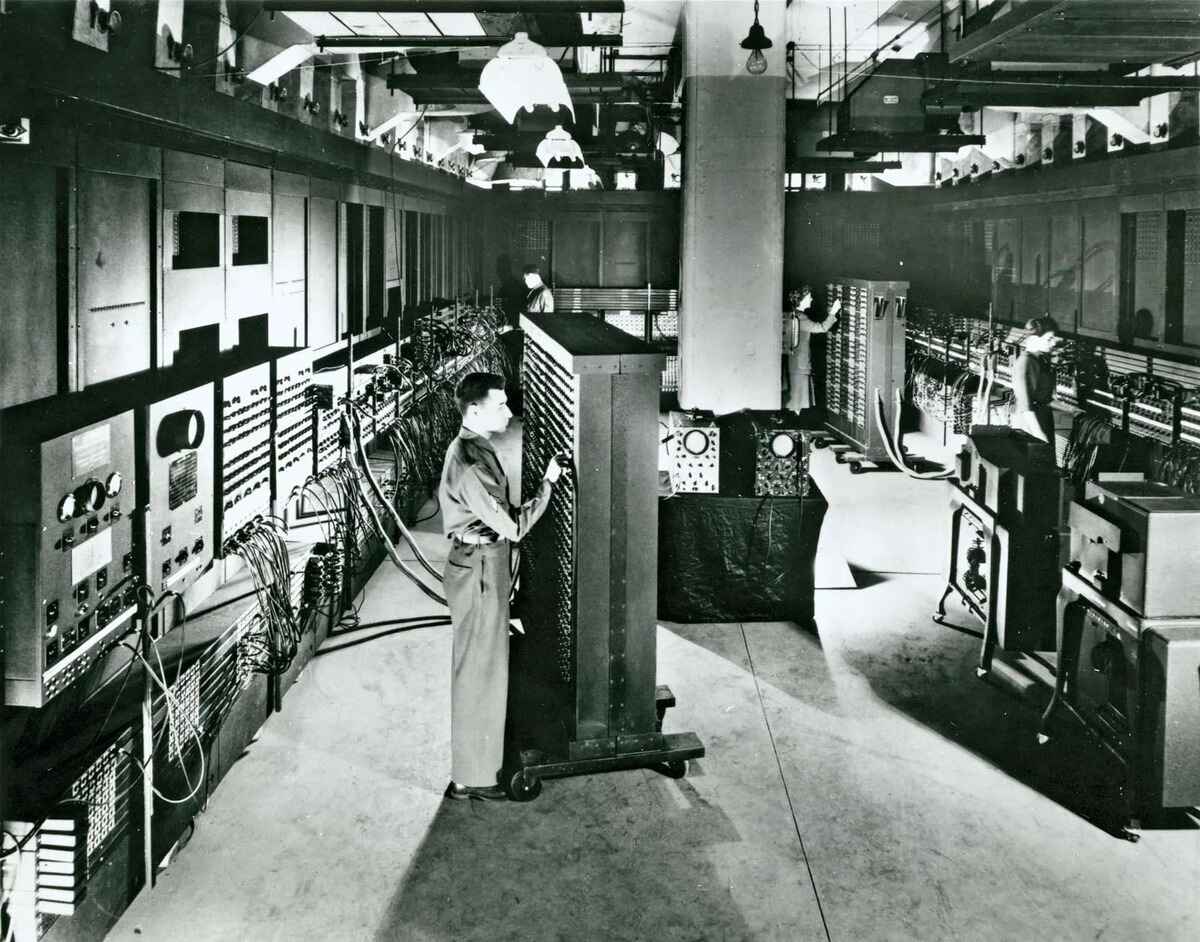

The ENIAC (Electronic Numerical Integrator and Computer) is widely recognized as one of the world’s first general-purpose electronic digital computers. Developed during World War II by John Mauchly and J. Presper Eckert at the University of Pennsylvania, the ENIAC revolutionized computing by introducing several innovative features and advancing the capabilities of electronic machines.

Completed in 1945 and publicly unveiled in 1946, the ENIAC was an enormous machine, occupying a large room and consisting of roughly 18,000 vacuum tubes along with thousands of switches and interconnecting cables. It was specifically designed to assist in military calculations, such as the trajectory analysis of artillery shells, but its impact would extend far beyond its original purpose.

One of the major breakthroughs of the ENIAC was its ability to perform calculations at unprecedented speeds. It was capable of executing about 5,000 additions or subtractions per second, a tremendous advancement compared to the mechanical and electromechanical calculators of the time. This increased speed and computational power opened up new possibilities for scientific research, engineering, and data processing.

The ENIAC was also programmable. Instead of being built for a single task, the ENIAC utilized a system of patch cords and switches that allowed the machine to be set up for different calculations. This flexibility meant that the ENIAC could be repurposed for various applications, making it a truly versatile computing machine.

Despite its groundbreaking capabilities, the ENIAC had its limitations. Programming the machine was a complex and time-consuming task that required physically rewiring the system. Additionally, the ENIAC had very little internal storage: its 20 accumulators each held a single ten-digit decimal number, and it relied on punched cards for input and output.

Nevertheless, the ENIAC’s impact on the field of computing was immense. Its success paved the way for further developments in electronic computing, leading to the creation of smaller, faster, and more efficient machines. The experience gained from designing and operating the ENIAC directly influenced the development of subsequent computers, such as the EDVAC and the UNIVAC.

The ENIAC marked a significant shift from mechanical and electromechanical computing to electronic computing. Its introduction heralded a new era in which computers would become essential tools for scientific research, engineering, business, and many other fields.

The story of the ENIAC is not only a testament to the ingenuity of its creators but also a crucial milestone in the ongoing evolution of computing technology.

The EDVAC and the Von Neumann Architecture

The Electronic Discrete Variable Automatic Computer (EDVAC) was a significant milestone in the development of modern computers and played a vital role in advancing the field of electronic computing. Designed in the mid-1940s at the University of Pennsylvania’s Moore School by the team behind the ENIAC, including J. Presper Eckert and John Mauchly, the EDVAC is closely tied to the stored-program design now known as the Von Neumann architecture. John von Neumann, who consulted on the project, described that design in his 1945 “First Draft of a Report on the EDVAC.”

Prior to the EDVAC, computers like the ENIAC were programmed by physically reconfiguring the machine’s hardware, a time-consuming and error-prone process. The Von Neumann architecture, named after John von Neumann, introduced a revolutionary approach by using stored programs and electronic memory to hold both data and instructions.

The heart of the EDVAC was its memory unit, which stored binary data as acoustic pulses circulating in mercury delay lines. This memory allowed the computer to store and retrieve both data and instructions, making it more flexible and independent of external devices. The idea of having a unified memory for data and program code became a hallmark of modern computer architecture.

With the Von Neumann architecture, programs were stored as a series of instructions in memory. These instructions were fetched by the computer’s Central Processing Unit (CPU) and executed in a sequential manner. This approach significantly improved the programmability and versatility of computers, as it allowed for the efficient execution of a wide range of tasks without the need for physical rewiring.
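The fetch-and-execute cycle described above can be sketched as a toy machine in Python. Everything here is an illustrative assumption: the four-instruction set, the single accumulator, and the tuple encoding are invented for the example and do not correspond to the EDVAC’s real instruction format. The point is only that instructions and data sit in the same memory and that a program counter steps through them in sequence.

```python
def run(memory, max_steps=1000):
    """Run a toy stored-program machine until HALT and return the final memory."""
    memory = list(memory)  # work on a copy so the caller's program is untouched
    pc = 0                 # program counter: address of the next instruction
    acc = 0                # accumulator: the single working register
    for _ in range(max_steps):
        opcode, operand = memory[pc]   # fetch the instruction at the counter
        pc += 1                        # advance to the next address by default
        if opcode == "LOAD":           # decode and execute
            acc = memory[operand]
        elif opcode == "ADD":
            acc += memory[operand]
        elif opcode == "STORE":
            memory[operand] = acc
        elif opcode == "JUMP":
            pc = operand
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r} at address {pc - 1}")
    raise RuntimeError("step limit reached without HALT")


# Instructions (addresses 0-3) and data (addresses 4-6) share one memory.
program = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", 0),
    2,  # address 4: first operand
    3,  # address 5: second operand
    0,  # address 6: result is written here
]
final_memory = run(program)
```

Changing what the machine does means changing the contents of `program`, not the `run` function, which is the software analogue of replacing rewiring with a stored program.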

Another important contribution of the EDVAC was the use of binary representation for both data and instructions. This binary format simplified data processing and allowed for the efficient manipulation of information within the computer’s circuits. By adopting a universal binary system, the EDVAC laid the foundation for future computer systems.

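Stored-program machines also made higher-level programming practical. Short Code, proposed by John Mauchly in 1949 and implemented on the BINAC and later the UNIVAC I rather than on the EDVAC itself, is often cited as one of the first high-level programming languages. It made programming more accessible by using symbolic instructions that were easier to read and write than machine code.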

The Von Neumann architecture, as demonstrated by the EDVAC, set the standard for many of today’s computers. This architecture is still widely used, with computers storing both data and program instructions in electronic memory, and fetching and executing instructions sequentially.

In addition to its technical achievements, the EDVAC also played a pivotal role in advancing the field of computer science. The work conducted on the EDVAC and the concept of the Von Neumann architecture led to further developments in computer design, such as the IAS machine built at Princeton under von Neumann, and to the advancement of programming languages.

The EDVAC’s contributions to computing and its influence on subsequent computer designs cemented its place in history as a significant milestone in the ongoing evolution of electronic computers.

The Manchester Baby and the EDSAC

The Manchester Baby, also known as the Small Scale Experimental Machine (SSEM), holds a significant place in the history of computing as the first computer to run a program stored in electronic memory. Built at the University of Manchester, it ran its first program in June 1948 and demonstrated the stored-program approach that the Electronic Delay Storage Automatic Calculator (EDSAC) would soon put into regular service.

The Manchester Baby was a groundbreaking machine that demonstrated the practicality and effectiveness of the stored-program concept. It utilized a cathode ray tube (CRT) as its primary storage mechanism, where both program instructions and data were stored in the form of electrical charges on the face of the CRT. This breakthrough enabled the Baby to fetch and execute instructions stored in its memory, marking a significant departure from earlier machines that relied on hardwiring for each calculation.

Although the Manchester Baby had limited capabilities and was primarily used for testing and proving the concept of the stored-program computer, its success showed that such machines were practical. The EDSAC, designed independently by Maurice Wilkes and his team at the University of Cambridge, ran its first program in May 1949 and is generally regarded as the first practical stored-program computer to enter regular service.

Where the Manchester Baby used CRT storage, the EDSAC used a mercury delay line memory system with more capacity, which allowed for longer programs to be stored and executed. It also offered improvements in speed and reliability, making it more practical for general-purpose computing tasks.

The EDSAC was considered a significant breakthrough in computer architecture and programming. It featured a compact instruction set with single-letter mnemonic codes, a loader known as the “initial orders” that translated those codes into binary, and a library of reusable subroutines, paving the way for more efficient and accessible software development. Additionally, the EDSAC’s ability to handle complex calculations reliably opened up new possibilities for scientific research, engineering, and other disciplines.

The success of the Manchester Baby and the EDSAC demonstrated the power and versatility of the stored-program computer model. They inspired further developments in computer design, including the EDSAC 2 at Cambridge and the Ferranti Mark 1, a commercial machine based on the University of Manchester’s work that is often described as the world’s first commercially available general-purpose electronic computer.

Today, the Manchester Baby and the EDSAC are celebrated for their contributions to the field of computing. They laid the foundation for modern computer architecture and programming, shaping the development of subsequent generations of computers, and setting the stage for the digital revolution that has transformed nearly every aspect of our lives.

The IBM 650 and the First Commercially Successful Computer

The IBM 650 stands as a significant milestone in computing history as the first commercially successful computer. Introduced by IBM in 1954, the IBM 650 played a crucial role in advancing the adoption of computers in businesses, scientific institutions, and government organizations.

The IBM 650 was built from vacuum tubes and used punched cards for input and output. It was a fully electronic computer whose main memory was a rotating magnetic drum, a design that traded access speed for low cost and helped keep the machine affordable.

One of the key factors behind the success of the IBM 650 was its ability to be programmed for a wide range of applications. It supported a variety of mathematical and scientific computations and could be used to handle payroll, accounting, and inventory systems. This versatility made it attractive for a wide range of industries, leading to its widespread adoption.

Another crucial aspect of the IBM 650’s success was its affordability. With a price tag significantly lower than earlier computers, the IBM 650 became accessible to organizations with limited budgets. This accessibility and affordability played a major role in helping businesses and academia embrace computer technology.

The IBM 650 featured a relatively user-friendly programming system. It could be programmed with the Symbolic Optimal Assembly Program (SOAP), whose symbolic instructions were easier to read and write than the numeric machine code of earlier computers. This made programming less complex and more accessible to a broader range of users.

The success of the IBM 650 helped IBM establish a dominant position in the early computer market. Nearly 2,000 IBM 650 systems were produced between 1954 and 1962, solidifying IBM’s reputation as a leading computer manufacturer.

The impact of the IBM 650 extended beyond its immediate success. It paved the way for further advancements and inspired the development of more powerful and sophisticated computer systems. It also demonstrated the value of computers in a commercial context, leading businesses to recognize their potential for improving productivity and efficiency.

In many ways, the IBM 650 marked the transition from computers being highly specialized machines to becoming commonplace tools that could be used by various industries and organizations. Its commercial success played a pivotal role in shaping the trajectory of computer technology and set the stage for the widespread adoption of computers in the years to come.

The Transistor and the Birth of Modern Computing

The invention of the transistor in 1947 by John Bardeen, Walter Brattain, and William Shockley at Bell Labs revolutionized the field of electronics and played a pivotal role in the birth of modern computing. The transistor, a small solid-state device that can amplify and switch electronic signals, replaced the bulkier and less efficient vacuum tubes that were used in early computers.

The advent of the transistor marked a significant breakthrough in electronics. Transistors were smaller, more reliable, and consumed much less power than vacuum tubes. These advantages opened up new possibilities for creating smaller, faster, and more efficient electronic devices.

In the context of computing, the transistor’s impact was transformative. Early computers, such as the ENIAC and the UNIVAC, relied on vacuum tubes, which were large, delicate, and generated significant amounts of heat. The introduction of transistors allowed for the development of smaller, more reliable, and more energy-efficient computers.

Miniaturization became a defining characteristic of modern computers, as transistors enabled the creation of increasingly compact and powerful systems. Transistors also allowed for the improvement of computing speed and processing power, as more transistors could be packed into a smaller space, enabling more calculations to be performed in a shorter amount of time.

Furthermore, the transistor facilitated the mass production and commercialization of computers. The reduced size, lower power consumption, and increased reliability of transistor-based computers made them more affordable and accessible to a wider market. This led to the proliferation of computers in businesses, research institutions, and eventually in homes.

The transistor’s impact on computer technology extended beyond size and power. It also paved the way for integrated circuits, which combined multiple transistors and other electronic components onto a single semiconductor material. Integrated circuits, or chips, became the foundation of modern computer systems, allowing for more complex and powerful computers to be developed.

The transistor and integrated circuits also led to the development of personal computers. The increased affordability and miniaturization made it possible to create computers that could fit on a desk and be used by individuals for various tasks, such as word processing, number crunching, and entertainment.

The birth of modern computing owes a great debt to the invention of the transistor. Its impact on electronics and computer technology paved the way for the incredible advancements we witness today. Without the transistor, the evolution of computers and the digital age as we know it would not have been possible.

The IBM 704 and the FORTRAN Language

The IBM 704, introduced by IBM in 1954, was a significant breakthrough in computer technology and played a crucial role in the development of programming languages. It was the first mass-produced computer with hardware support for floating-point arithmetic, and it was the machine for which the FORTRAN language was created.

The IBM 704 was a vacuum tube-based computer that offered significant improvements in speed and memory capacity compared to its predecessors. It featured a magnetic core memory system, which provided faster and more reliable data storage and retrieval.

One of the most notable aspects of the IBM 704 was its role in the development of the FORTRAN language. The IBM team, led by John W. Backus, created FORTRAN (short for “Formula Translation”) as a high-level programming language specifically designed for scientific and engineering applications.

Prior to FORTRAN, programming was typically done in machine language or assembly language, which required intricate knowledge of the computer’s hardware and was time-consuming to write and debug. FORTRAN introduced a more accessible and efficient approach by allowing programmers to express mathematical formulas and scientific equations directly in their code.

The development of FORTRAN improved the productivity of programmers and made complex scientific calculations much easier to implement. It introduced numerous features, such as arithmetic expressions, looping constructs, and subroutines, which simplified the programming process and enhanced code readability.

The success of FORTRAN is evident from its widespread adoption and continued use to this day. It became the standard language for scientific and engineering computing and played a crucial role in advancing scientific research and engineering design.

The IBM 704, powered by the versatility of FORTRAN, became a popular choice for scientific and research institutions. Its fast processing capabilities and efficient programming language allowed scientists and engineers to tackle complex calculations and simulations more effectively than ever before.

The impact of the IBM 704 and FORTRAN extends beyond their direct contributions. They laid the foundation for subsequent developments in programming languages, inspiring the creation of new languages and frameworks that have shaped the evolution of computer software.

Overall, the IBM 704 and FORTRAN were groundbreaking innovations that propelled the field of computer science forward. Their introduction revolutionized programming, making computers more accessible and efficient for scientific and engineering applications, and set the stage for future advancements in computer languages and software development.

The IBM 7090 and the ALGOL Language

The IBM 7090, introduced by IBM in 1959, was a significant milestone in the development of computer technology and played a crucial role in the advancement of programming languages. It was among the first commercial transistorized computers, and it ran high-level languages including FORTRAN and ALGOL, the latter of which had a lasting influence on software development.

The IBM 7090 was a transistor-based computer that offered improved performance and enhanced memory capacity compared to previous models. It was designed for scientific and technical applications, and its speed and computational power made it a popular choice among research institutions and government agencies.

One of the key contributions of the IBM 7090 was its support for the ALGOL (Algorithmic Language) programming language. ALGOL, developed in the late 1950s, was designed to be a universal language for scientific computing. It aimed to provide a standardized and efficient means of expressing algorithms and mathematical procedures.

The ALGOL language introduced significant innovations, such as block structure, nested lexical scope, recursion, and a formal syntax specification written in what became known as Backus-Naur Form. It provided a clear and structured approach to programming, facilitating the development of complex software systems.

ALGOL was highly influential and became a foundation for subsequent programming languages. It influenced the design of languages such as Pascal, C, and Java, and its principles and concepts are still evident in modern programming paradigms.

The IBM 7090, with its support for ALGOL, enabled programmers to create more sophisticated and efficient software. It empowered scientists, engineers, and researchers to tackle complex computational tasks with greater ease and accuracy.

The capabilities of the IBM 7090 and the ALGOL language led to significant advancements in scientific and technical computing. Together, they became a catalyst for breakthroughs in various fields, including physics, chemistry, weather forecasting, and aerospace engineering.

Beyond its immediate impact, the IBM 7090 and the ALGOL language set the stage for the further evolution of programming languages. They demonstrated the value of high-level languages in enhancing productivity and facilitating software development, leading to the creation of more powerful and versatile programming tools.

The legacy of the IBM 7090 and ALGOL extended beyond their time. They laid the groundwork for the development of subsequent computer systems and inspired further advancements in programming languages and software engineering techniques.

The IBM 7090 and ALGOL were key contributors to the progress of computer technology, enabling scientists and engineers to push the boundaries of what was possible and paving the way for future innovations in computing.

The IBM System/360 and the Rise of Mainframe Computers

The IBM System/360, introduced by IBM in 1964, marks a significant milestone in the history of computers and played a pivotal role in the rise of mainframe computing. This groundbreaking system revolutionized computer architecture and set the industry standard for decades to come.

The IBM System/360 was designed to address the growing complexity and diversity of computational needs across various industries. It introduced a family of compatible computers that shared a common architecture, allowing for seamless compatibility and software portability. This flexibility made the System/360 attractive to organizations of all sizes, from small businesses to large enterprises.

Prior to the System/360, each computer line typically had its own incompatible architecture, so moving to a larger machine often meant rewriting software. The IBM System/360 changed this landscape by offering a range of models that could handle a wide variety of tasks, supported a vast array of applications, and ran the same programs.

One of the key design choices of the System/360 was its extensive use of microcode, a layer of low-level instructions that controlled the internal operations of the computer. On most models this microcode was held in read-only storage, which let machines with very different hardware present the same instruction set to programmers.

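The System/360 family also helped bring virtual memory into commercial use. The concept, which allows a computer to address more memory than is physically available, had been pioneered on the Manchester Atlas in the early 1960s. IBM offered it on the System/360 Model 67 and made it standard in the later System/370 line. This feature greatly improved the efficiency of memory management and enabled the execution of larger and more complex programs.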
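The core mechanism of virtual memory is address translation: a program’s addresses are split into a page number and an offset, and a table maps each page to wherever it currently sits in physical memory. The Python sketch below shows that lookup under assumed values. The 4,096-byte page size, the dictionary used as a page table, and the function name are all illustrative choices, not details of any IBM system.

```python
PAGE_SIZE = 4096  # bytes per page (an assumed, illustrative value)


def translate(virtual_address: int, page_table: dict) -> int:
    """Map a virtual address to a physical address through a page table."""
    # Split the address into the page it falls in and the position in that page.
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    frame = page_table.get(page_number)
    if frame is None:
        # A real system would now load the page from disk and retry.
        raise LookupError(f"page fault: page {page_number} is not in physical memory")
    return frame * PAGE_SIZE + offset


# Hypothetical mapping: virtual page 0 lives in frame 5, virtual page 1 in frame 2.
page_table = {0: 5, 1: 2}
physical = translate(4100, page_table)  # page 1, offset 4
```

Because only the pages listed in the table need to be in physical memory at any moment, a program can be written as if it had far more memory than the machine actually contains.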

Furthermore, the IBM System/360 established IBM’s dominance in the mainframe computer market. The System/360 family offered a wide range of processing power, from smaller models suitable for small businesses to powerful mainframes capable of handling massive data processing tasks. This versatility and scalability solidified IBM’s position as a leading provider of mainframe computers.

The impact of the IBM System/360 extended beyond hardware advancements. It also led to innovations in software development and established standards for programming languages and operating systems. For example, the System/360 introduced the high-level programming language PL/I, which became widely used in scientific and business applications.

The rise of mainframe computers with the System/360 gave organizations the ability to process and manage large amounts of data efficiently. Mainframes became the backbone of major industries such as banking, government, and research, where the processing power and reliability of these systems were indispensable.

The IBM System/360 paved the way for subsequent advancements in computing, including the development of supercomputers and distributed computing networks. It also influenced the design of future computer architectures and played a significant role in shaping the landscape of the computing industry.

The IBM System/360 and its impact on mainframe computing represent a critical chapter in the history of computers. Its contributions in terms of hardware, software, and industry adoption were transformative and left a lasting legacy that continues to influence modern computing systems.

The First Personal Computers

The advent of personal computers in the mid-1970s revolutionized the computing landscape, bringing the power of computing to individuals in their homes and offices. These early personal computers laid the foundation for the widespread use of computers by everyday people, marking a significant milestone in the history of technology.

One of the first personal computers was the Altair 8800, introduced in 1975 by Micro Instrumentation and Telemetry Systems (MITS). It was a build-it-yourself computer kit that captured the imagination of hobbyists and computer enthusiasts. Despite its primitive design and lack of a keyboard or display, the Altair 8800 sparked a wave of interest and inspired others to enter the personal computer market.

In 1976, Steve Wozniak and Steve Jobs released the Apple I, which Wozniak designed. It was sold as a fully assembled circuit board, unlike the kit computers of the time, but buyers still had to supply their own case, keyboard, power supply, and display. Building on the Apple I, they went on to create the Apple II in 1977, which brought personal computing to a wider audience with its integrated keyboard and case, color graphics, and expandability.

The Commodore PET, introduced in 1977, was another early personal computer that gained popularity. With its integrated keyboard and cassette tape drive for storage, the Commodore PET offered a complete solution for home and small-business users.

However, it was the IBM Personal Computer (IBM PC) introduced in 1981 that truly solidified the concept of personal computing. The IBM PC set a standard for compatibility and provided a strong base for software development. Its open architecture allowed third-party manufacturers to create and sell hardware and software that expanded the capabilities of the system.

As personal computers continued to evolve, more companies entered the market and competition intensified. Compaq, Tandy, and Atari were among the influential companies that introduced popular personal computers in the 1980s.

These early personal computers, though limited in processing power and storage capacity by today’s standards, paved the way for the technological advancements that followed. They brought computing to a wider audience and enabled individuals to perform tasks such as word processing, calculations, and gaming from the comfort of their own homes or offices.

The introduction of personal computers also prompted a shift in the software industry. With a growing user base, software developers began creating applications tailored to individual needs, from productivity software to games, stimulating innovation and economic growth.

The affordability and accessibility of personal computers became key factors in driving computer literacy and, more broadly, technological literacy. As the technology progressed and prices dropped, personal computers became essential tools for education, business, and personal use, shaping the digital age we live in today.

The impact of the first personal computers cannot be overstated. They transformed computing from being a highly specialized and exclusive field to becoming an integral part of mainstream society, fueling the democratization of technology and opening up endless possibilities for individuals and businesses alike.

The Microprocessor Revolution

The microprocessor revolution, which began in the early 1970s, marked a turning point in the history of computing. The invention and widespread adoption of microprocessors made computers smaller, more powerful, and more affordable, with far-reaching effects on industry and society as a whole.

A microprocessor is a single integrated circuit that contains the functions of a central processing unit (CPU). In 1971, Intel introduced the Intel 4004, the world’s first commercially available microprocessor. Originally designed for a desktop calculator, this 4-bit chip showed that a programmable CPU could fit on a single piece of silicon, and it laid the foundation for the microprocessor revolution.

In the years following the release of the Intel 4004, rapid advancements were made in microprocessor technology. Manufacturers like Intel, Motorola, and Texas Instruments developed increasingly powerful and efficient microprocessors, bolstering the capabilities of personal computers and other electronic devices.

The microprocessor revolution resulted in the miniaturization of computers. Prior to microprocessors, most computers were room-sized mainframes or cabinet-sized minicomputers that required substantial power and cooling. With the advent of microprocessors, computers could fit on a desk and, eventually, in the palm of your hand, making them accessible to individuals and facilitating widespread adoption.

The impact of microprocessors extended beyond personal computing. They contributed to the development of embedded systems, enabling computers to be integrated into everyday objects such as cars, appliances, and medical devices. This integration has led to increased automation, improved efficiency, and enhanced functionality in various industries.

The microprocessor revolution also fueled the growth of the software industry. With more powerful processors, software developers were able to create increasingly sophisticated applications to meet a wide range of needs. From business software to video games, microprocessors have been instrumental in driving innovation and expanding the capabilities of computers.

Furthermore, the increasing affordability of microprocessors played a critical role in the democratization of technology. As costs decreased, more people gained access to computers and electronic devices, breaking down barriers to information and creating new opportunities for education, communication, and entrepreneurship.

Continual advancements in microprocessor technology have led to exponential increases in computing power, with modern microprocessors featuring billions of transistors and enabling complex tasks like artificial intelligence and high-performance computing.

The microprocessor revolution has reshaped nearly every aspect of our lives, from how we work and communicate to how we relax and entertain ourselves. It has transformed industries, led to the creation of entirely new ones, and connected people across the globe in ways unimaginable just a few decades ago.

As microprocessor technology continues to evolve, it will open up new possibilities for innovation and keep shaping the world we live in.

The Advent of the Desktop Computer

The advent of the desktop computer, beginning in the 1970s and gaining significant momentum in the 1980s, marked a transformative era in computing history. These compact and affordable machines brought the power of computing into the homes and offices of everyday individuals, sparking a revolution that would shape the modern digital age.

One of the key contributors to the rise of the desktop computer was the development of integrated circuits and microprocessors. These advancements led to the creation of smaller, more efficient, and more powerful computer systems. Companies like Apple, IBM, and Commodore seized this opportunity and introduced groundbreaking desktop computers that changed the computing landscape.

The Apple II, introduced in 1977, was notable for its user-friendly design and emphasis on business and educational use. With its color graphics and expandability, the Apple II found success in various industries and educational institutions.

In 1981, IBM released the IBM Personal Computer (IBM PC), which became a standard in the industry. Built on an open architecture, the IBM PC allowed third-party manufacturers to develop compatible hardware and software, creating a robust ecosystem that propelled the desktop computer to new heights of popularity.

The popularity of the IBM PC gave rise to a multitude of companies, including Compaq and Dell, that offered their own IBM PC-compatible desktop computers. This increased competition not only drove down prices but also spurred technological advancements and innovation.

The desktop computer provided individuals with the ability to perform tasks such as word processing, data analysis, and gaming from the convenience of their own homes or offices. It facilitated more efficient and streamlined workflows, transformed communication and entertainment, and empowered users to explore their creativity and potential.

Furthermore, the desktop computer gave rise to a wide range of software applications tailored to individual needs. Word processors, spreadsheets, database management systems, and graphic editing software became commonly used, revolutionizing work processes and enabling new levels of productivity.

The desktop computer also played a vital role in bringing the internet to the general public. With the release of user-friendly operating systems and web browsers in the 1990s, individuals could easily connect to the internet and access a wealth of information and communication platforms. This connectivity ushered in an era of global communication, e-commerce, and online collaboration.

Over time, the desktop computer continued to evolve, becoming more powerful and feature-rich. Advances in hardware, such as increased processor speed and expanded memory capacity, allowed for more complex tasks and the development of visually captivating multimedia content.

Today, while mobile devices have gained popularity, the desktop computer remains a fundamental tool in various fields, including business, research, and creative industries. Its power, versatility, and larger display size make it indispensable for complex computing tasks and applications that require extensive processing power.

The advent of the desktop computer transformed society, enabling individuals to harness the power of computing on a personal level. It democratized access to information, communication, and computing capabilities, shaping the way we live, work, and interact in the modern digital era.

The Rise of the Laptop

The rise of the laptop computer, which began in the 1980s, accelerated in the 1990s, and continues to the present day, marked a significant shift in computing technology and user mobility. Laptops, also known as notebooks, offered the power and functionality of a desktop computer in a portable and compact form factor, transforming the way people work, communicate, and access information.

The widespread adoption of laptops was made possible by advancements in microprocessor technology, miniaturization of components, and improvements in battery life. These developments allowed manufacturers to create portable computers that could rival the performance of desktop systems.

One of the earliest laptops was the GRiD Compass, introduced in 1982. It was among the first portable computers to use a clamshell design, with a built-in display that folded down over the keyboard. Though expensive and targeted primarily at military and business professionals, the GRiD Compass set the stage for future laptop designs.

In the 1990s, several manufacturers, such as IBM, Toshiba, and Compaq, brought the concept of the laptop to a broader audience. They introduced lighter and more affordable models with improved battery life, making laptops increasingly practical for business travelers, students, and professionals.

The arrival of Intel Pentium processors in laptops in the mid-1990s greatly enhanced performance, allowing for more demanding tasks such as multimedia editing and 3D gaming. This, coupled with Windows releases that added laptop-oriented features such as power management, solidified the appeal of portable computing for a wide range of users.

Advancements in display technology also played a crucial role in the success of laptops. The introduction of active-matrix liquid crystal displays (LCDs), also known as TFT screens, offered improved brightness, clarity, and color reproduction. This made laptops more visually appealing and provided a better user experience.

In the 2000s, laptops became more affordable and accessible to the general public. Manufacturers like Dell, HP, and Lenovo introduced a wider range of models catering to different user needs, from budget-friendly options to high-performance systems for professionals and gamers.

The increasing popularity of wireless connectivity, with the widespread adoption of Wi-Fi technology, further contributed to the rise of laptops. Users could now connect to the internet and access online resources without the need for physical network connections, allowing for greater mobility and convenience.

In recent years, advancements in laptops have continued, with the introduction of faster processors, solid-state drives (SSDs) for faster storage, and improved graphics capabilities. Ultrabooks and 2-in-1 convertibles have emerged, offering even greater portability and versatility, blurring the lines between laptops and tablets.

The rise of the laptop has had a profound impact on how people work, learn, and stay connected. Laptops have become essential tools for professionals, students, and casual users alike, allowing for productivity on the go and enabling seamless communication and collaboration.

Today, laptops remain a prevalent computing device, complementing the rising popularity of mobile devices like smartphones and tablets. With their combination of portability, power, and versatility, laptops continue to evolve, adapting to meet the changing needs of users in an increasingly mobile and interconnected world.

The Birth of the Smartphone

The birth of the smartphone marked a transformative moment in the history of technology and communication. Combining the functionalities of a mobile phone with advanced computing capabilities, the smartphone revolutionized the way people communicate, access information, and interact with the world around them.

The origins of the smartphone can be traced back to the early 1990s when mobile phones began adding additional features beyond voice communication. Devices like the IBM Simon, released in 1994, were among the first to incorporate features such as a touchscreen, email capabilities, and basic applications.

However, it was the BlackBerry that helped popularize the concept of the smartphone. The line began in 1999 as a two-way email pager, and phone-capable models followed in the early 2000s. The BlackBerry offered mobile email access, advanced messaging capabilities, and a full QWERTY keyboard, appealing primarily to business professionals.

In 2007, Apple revolutionized the smartphone industry with the introduction of the iPhone. The iPhone combined a sleek design, a multi-touch screen, and an intuitive user interface powered by Apple’s mobile operating system, later named iOS. The App Store, launched in 2008, gave developers a platform for creating a wide range of applications, making the iPhone a truly versatile device.

Following the success of the iPhone, manufacturers such as Samsung and HTC adopted Android, the mobile operating system developed by Google, with the first Android phone going on sale in 2008. Android-based devices offered a more customizable and varied user experience, which increased competition and innovation in the smartphone industry.

The smartphone’s rise to prominence was further fueled by advancements in hardware and software. Processors became faster, memory capacities expanded, and display technologies improved, delivering stunning visuals and seamless user experiences. Built-in sensors and GPS capabilities opened up possibilities for location-based services and augmented reality applications.

The integration of wireless connectivity, such as Wi-Fi and cellular data, enabled instant access to the internet and a myriad of online services. Social media platforms, messaging apps, and streaming services became integral parts of people’s daily lives, allowing for unprecedented connectivity and entertainment on the go.

Smartphones also played a significant role in the proliferation of mobile photography and video. The introduction of high-quality built-in cameras, combined with advancements in image processing and editing software, allowed users to capture, edit, and share their moments instantaneously.

The impact of smartphones extended beyond communication and entertainment. They disrupted various industries, such as transportation with ride-sharing apps, finance with mobile banking, and healthcare with telehealth services. Smartphones became indispensable tools for productivity, enabling users to manage their schedules, access documents, and collaborate with others on the fly.

As technology continues to progress, smartphones are becoming increasingly powerful, incorporating advanced features such as artificial intelligence and biometric authentication, including fingerprint and facial recognition. They are at the center of the Internet of Things (IoT) ecosystem, connecting and controlling smart devices in homes and cities.

The birth of the smartphone brought about a new era of mobile computing and transformed the way we live, work, and play. Its constant connectivity and limitless possibilities have made it an indispensable device in the modern digital age, bridging gaps and bringing the world to our fingertips.

The Modern Age of Computing

The modern age of computing encompasses a vast array of technological advancements and innovations that have transformed nearly every aspect of our lives. This era is characterized by exponential growth in computing power, connectivity, and the widespread integration of technology into various industries and everyday activities.

One of the central drivers of the modern age of computing is the concept of cloud computing. The ability to store and access vast amounts of data remotely through cloud services has revolutionized the way businesses operate and individuals store and share information. Cloud computing has enabled seamless collaboration, enhanced data security, and the development of new services and applications.

The rise of powerful mobile devices, particularly smartphones, has extended the reach of computing and connectivity to an unprecedented scale. Mobile apps and platforms have become integral to our lives, providing personalized experiences, instant access to information, and a myriad of services at our fingertips. The combination of mobility, high-speed internet, and powerful processing capabilities has spurred the growth of the mobile industry and changed the way we communicate, work, and entertain ourselves.

The advent of artificial intelligence (AI) and machine learning has further propelled the modern age of computing. AI algorithms can now analyze vast amounts of data, detect patterns, and make predictions that, for some tasks, are remarkably accurate. Applications of AI range from virtual assistants and personalized recommendations to self-driving vehicles and cutting-edge research in areas like healthcare and finance.

The Internet of Things (IoT) has also emerged as a defining characteristic of the modern age of computing. It refers to the interconnectedness of physical devices, sensors, and actuators, allowing them to communicate and exchange data. IoT has led to the development of smart homes, smart cities, and industrial automation, streamlining processes, optimizing resource utilization, and creating enhanced user experiences.

Quantum computing is another frontier that holds tremendous promise for the future of computing. Quantum computers harness quantum properties such as superposition and entanglement and, for certain classes of problems, could perform calculations far faster than classical computers. While still in its infancy, quantum computing has the potential to revolutionize areas such as cryptography, optimization, and drug discovery.

Data privacy and cybersecurity have also become critical concerns in the modern age of computing. With the increasing amount of data being generated and stored, protecting sensitive information and ensuring digital privacy have become paramount. Governments and organizations worldwide are grappling with the complex task of establishing regulations and implementing robust security measures.

The modern age of computing has fostered immense collaboration and innovation. Open-source software, code repositories, and online communities have facilitated knowledge sharing and collective problem-solving. Start-ups and entrepreneurs have thrived in this environment, developing disruptive technologies and seizing opportunities to make lasting impacts on industries and society.

Looking ahead, the modern age of computing holds immense potential. Advancements in quantum computing, AI, robotics, 5G connectivity, and immersive technologies continue to push the boundaries of what is possible. The integration of technology into healthcare, education, transportation, and beyond promises to reshape industries, improve quality of life, and tackle global challenges.

Ultimately, the modern age of computing represents an era of unprecedented innovation, connectivity, and digital transformation. It has connected people across the globe, empowered individuals and businesses alike, and set the stage for exciting advancements that will continue to shape our future.