Definition of False Positives

When it comes to machine learning and data analysis, false positives are a crucial concept to understand. In simplest terms, a false positive occurs when a machine learning model incorrectly identifies an instance or sample as belonging to a certain class or category, when it shouldn’t. This means that the model has generated a positive result for a particular condition, even though the condition is not actually present.

False positives can have significant consequences in various fields, such as healthcare, finance, and cybersecurity. For instance, in healthcare, a false positive diagnosis may lead to unnecessary medical procedures or treatments, causing unnecessary stress for patients and increasing healthcare costs. In finance, it might result in false credit card fraud alerts, inconveniencing customers and potentially damaging relationships with financial institutions. In cybersecurity, false positives can trigger unnecessary security alerts or even block legitimate user access, disrupting business operations.

It is important to note that false positives are directly related to the concept of accuracy in machine learning. Accuracy refers to the proportion of correctly classified instances or samples out of the total number of instances or samples. Therefore, a high false positive rate can significantly impact the accuracy of a model, leading to potential problems in decision making based on the model’s predictions.

One common example of false positives is in spam email filters. When these filters incorrectly classify legitimate emails as spam, they generate a false positive. This can result in important emails being filtered out and sent to the spam folder, potentially causing users to miss crucial information or opportunities.

To mitigate the impact of false positives, it is essential to strike a balance between sensitivity (the ability to correctly identify positive instances) and specificity (the ability to correctly identify negative instances). Achieving this balance requires fine-tuning the model and optimizing the classification threshold.

Understanding True Positives

In machine learning, the concept of true positives is the opposite of false positives. A true positive occurs when a machine learning model correctly identifies an instance or sample as belonging to a certain class or category. It means that the model has generated a positive result for a particular condition, and the condition is indeed present.

True positives are an essential measure of the model’s accuracy and effectiveness. They represent the instances where the model’s predictions align with the ground truth or the actual state of the data. The ability to correctly identify positive instances is crucial in various applications, such as medical diagnosis, fraud detection, and sentiment analysis.

For example, in medical diagnosis, a true positive would mean that the model correctly identifies a patient as having a specific disease or condition, aiding healthcare professionals in providing appropriate treatment. In fraud detection, a true positive occurs when the model accurately identifies a fraudulent transaction, helping financial institutions prevent financial losses. In sentiment analysis, a true positive signifies that the model correctly recognizes positive sentiment in text, enabling businesses to gauge customer satisfaction.

In evaluating the performance of a machine learning model, true positives are typically considered along with other evaluation metrics such as true negatives, false positives, and false negatives. These metrics are used to construct a confusion matrix, which provides a comprehensive overview of the model’s performance by illustrating the classification outcomes.

It is important to understand that the balance between false positives and true positives can vary depending on the specific application. In some cases, minimizing false positives is crucial to avoid unnecessary costs or inconveniences, while in other cases, maximizing true positives is the priority, even if it means tolerating a higher rate of false positives. The appropriate trade-off depends on the potential impacts and consequences of false positives and the desired outcome of the task at hand.

Ultimately, understanding true positives helps us evaluate and improve the performance of machine learning models. By accurately identifying positive instances, we can gain valuable insights and make informed decisions based on the model’s predictions.

Understanding False Negatives

In the realm of machine learning and data analysis, false negatives are an important concept to grasp. A false negative occurs when a machine learning model incorrectly identifies an instance or sample as not belonging to a certain class or category, when it should have. In other words, a false negative means that the model has generated a negative result for a particular condition, even though the condition is actually present.

False negatives can have significant implications in various fields, including healthcare, security, and quality control. In healthcare, for instance, a false negative diagnosis could lead to a delay in treatment or the overlooking of a serious condition, potentially jeopardizing a patient’s health. In security applications, false negatives might result in undetected suspicious activities or threats, putting individuals or organizations at risk. In quality control, false negatives can lead to the acceptance of defective products, compromising safety and customer satisfaction.

It is important to note that false negatives are directly related to the concept of sensitivity or recall in machine learning. Sensitivity measures the model’s ability to correctly identify positive instances out of all the instances that should have been identified as positive. A high false negative rate can significantly impact sensitivity, indicating a higher likelihood of missing positive instances and potentially leading to critical errors in decision-making.

One common example of false negatives is in medical screenings. When a screening test fails to detect a disease or condition that is actually present, it generates a false negative result. This can create a false sense of security, as patients may mistakenly believe that they are healthy, leading to a delay in diagnosis and treatment.

To address false negatives, it is crucial to strike a balance between specificity (the ability to correctly identify negative instances) and sensitivity. Trade-offs need to be carefully considered depending on the specific application and the potential risks associated with false negatives. Adjusting the model’s classification threshold, improving feature selection, or utilizing different algorithms or techniques can help mitigate the impact of false negatives.

Understanding false negatives is essential for evaluating the performance and reliability of machine learning models. By identifying instances where the model fails to recognize positive conditions, we can refine the model, enhance its accuracy, and ensure better decision-making based on its predictions.

Understanding the Confusion Matrix

In machine learning, the confusion matrix is a valuable tool for evaluating the performance of a classification model. It provides a comprehensive summary of the model’s predictions and the actual ground truth, allowing us to assess the accuracy and effectiveness of the model.

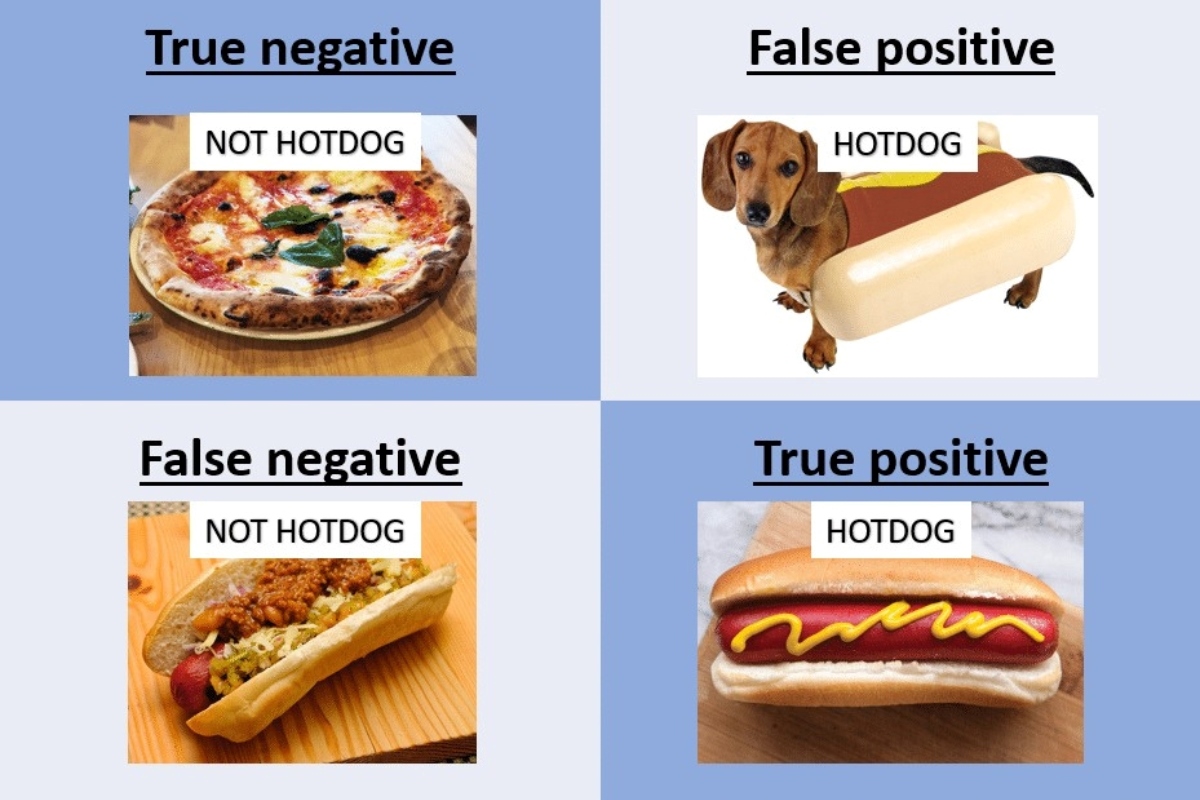

The confusion matrix is a square matrix that visually represents the four possible outcomes of a classification task: true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN).

The rows of the confusion matrix represent the true classes or labels, while the columns represent the predicted classes or labels. This arrangement provides a clear breakdown of how the model classified instances into each category.

Here is a breakdown of the four components of the confusion matrix:

True Positives (TP): These are the instances that are correctly classified as positive by the model. In other words, the model predicted the presence of a condition, and it was correct.

True Negatives (TN): These are the instances that are correctly classified as negative by the model. The model predicted the absence of a condition, and it was correct.

False Positives (FP): These are the instances that are incorrectly classified as positive by the model. The model predicted the presence of a condition, but it was actually absent.

False Negatives (FN): These are the instances that are incorrectly classified as negative by the model. The model predicted the absence of a condition, but it was actually present.

The confusion matrix allows us to gain insights into the strengths and weaknesses of the model. By analyzing its components, we can determine the accuracy, precision, recall, and other evaluation metrics that provide a comprehensive understanding of the model’s performance.

For example, precision measures the proportion of correctly predicted positive instances out of all the predicted positive instances. Recall, also known as sensitivity, measures the proportion of correctly predicted positive instances out of all the actual positive instances. These metrics can be calculated using the values in the confusion matrix.

Through the confusion matrix, we can also identify if the model is more prone to false positives or false negatives. This knowledge can help us make adjustments to improve the model’s accuracy and optimization for better decision-making.

Understanding the confusion matrix provides a comprehensive overview of the performance of a classification model. It serves as a roadmap for evaluating and fine-tuning the model to enhance its accuracy, precision, recall, and overall effectiveness.

The Importance of False Positive Rate

The false positive rate (FPR) is a crucial metric in machine learning and statistical analysis. It measures the proportion of negative instances that are incorrectly classified as positive by a model. The FPR is particularly important because it directly affects the accuracy and reliability of a classification model.

In many real-world scenarios, the cost or impact of false positives can be significant. False positives can lead to unnecessary actions, wastage of resources, and detrimental effects on decision-making processes. For example, in medical diagnostics, a high false positive rate can result in unnecessary medical tests or treatments, causing patient anxiety and additional healthcare costs.

The FPR is closely linked to the concept of precision, which measures the proportion of correctly predicted positive instances out of all the predicted positive instances. A high FPR can lower the precision of a model, indicating the presence of a larger number of false positives in the predictions. Precision is crucial in applications where avoiding false positives is critical, such as fraud detection or spam filtering.

On the contrary, reducing false positives can increase customer trust, improve the user experience, and minimize unnecessary interventions. In applications such as security systems, a high false positive rate can result in the blocking of legitimate users, leading to inconvenience and frustration.

It is worth noting that reducing the false positive rate often involves a trade-off with the false negative rate. A more conservative approach to minimize false positives may increase the chances of false negatives. Therefore, the desired balance between false positive and false negative rates depends on the specific domain and the associated risks or costs related to each type of error.

By understanding the importance of the false positive rate, data analysts and machine learning practitioners can make informed decisions during model development, optimization, and evaluation. They can adjust classification thresholds, modify feature selection techniques, or explore alternative algorithms to strike the right balance between precision, recall, and the overall performance of the model.

Ultimately, managing the false positive rate is crucial in ensuring the accuracy and reliability of machine learning models in various applications. By minimizing false positives, organizations can improve efficiency, reduce costs, and enhance the overall user experience.

Common Causes of False Positives

False positives can occur in machine learning models and statistical analysis due to various reasons. Understanding these common causes can help developers and data analysts identify and address them, ensuring more accurate and reliable predictions.

1. Noisy or unrepresentative data: When the training dataset contains noisy or unrepresentative samples, the model may learn incorrect patterns and generate false positives. It is crucial to carefully pre-process and clean the data to eliminate irrelevant or misleading information.

2. Imbalanced class distribution: In datasets where one class is significantly more prevalent than others, the model may have a bias towards the majority class, leading to an increased number of false positives for the minority class. Techniques such as oversampling or undersampling can help balance the dataset and mitigate this issue.

3. Overfitting: Overfitting occurs when a model is overly complex and learns the training data too well, resulting in poor generalization to unseen data. This can lead to false positives as the model becomes too sensitive to noise or outliers. Regularization techniques and cross-validation can help prevent overfitting, improving the model’s performance.

4. Incorrect feature selection: Choosing irrelevant or insufficient features can contribute to false positives. It is important to carefully select features that have a strong correlation with the target variable and discard irrelevant or redundant features.

5. Insufficient training data: When the training dataset is small or lacks diversity, the model may not learn the underlying patterns effectively. This can lead to false positives as the model fails to capture the true characteristics of the data. Augmenting the dataset or collecting additional data can help address this issue.

6. Biased training data: If the training dataset is biased and does not adequately represent the real-world distribution, the model may generate false positives when exposed to new data. It is crucial to ensure that the training data is diverse, representative, and free from any inherent biases.

7. Inappropriate threshold selection: The threshold for classification plays a significant role in determining the occurrence of false positives. Selecting an incorrect threshold can result in a higher false positive rate. Adjusting the threshold based on the application’s requirements and the associated trade-offs can help mitigate this issue.

By addressing these common causes of false positives, data experts can improve the performance and reliability of their machine learning models. Analyzing and mitigating these causes can lead to more accurate predictions, reducing the occurrence of false positives in various applications.

Techniques to Reduce False Positives

Reducing the occurrence of false positives is crucial for improving the accuracy and reliability of machine learning models. Fortunately, there are several effective techniques that can be employed to mitigate the impact of false positives. These techniques help refine the model’s performance and enhance its ability to correctly identify positive instances.

1. Adjusting classification thresholds: The classification threshold determines the point at which the model classifies an instance as positive or negative. By adjusting the threshold, it is possible to balance the trade-off between false positives and false negatives. Increasing the threshold can lead to a decrease in the false positive rate at the cost of potentially increasing the false negative rate.

2. Feature selection and engineering: Carefully selecting and engineering relevant features can improve the model’s ability to differentiate between positive and negative instances. This helps reduce the occurrence of false positives by providing the model with more informative and discriminative features.

3. Ensemble methods: Ensemble methods, such as bagging, boosting, or random forests, combine multiple models to make predictions. These methods can be effective in reducing false positives by aggregating the opinions of multiple models, thereby increasing the overall prediction accuracy.

4. Regularization techniques: Regularization methods, such as L1 or L2 regularization, help prevent overfitting and improve model generalization. Regularization can reduce the model’s sensitivity to noise and outliers, thus reducing false positives.

5. Cross-validation: Cross-validation is a technique used to evaluate the model’s performance by partitioning the dataset into multiple subsets and training the model on different combinations of these subsets. It helps identify the optimal model configuration and reduces the risk of overfitting, resulting in fewer false positives.

6. Handling class imbalance: When dealing with imbalanced datasets, where one class is significantly more prevalent than the others, employing techniques such as oversampling, undersampling, or generating synthetic samples can help balance the dataset and minimize false positives for the minority class.

7. Fine-tuning hyperparameters: Hyperparameters control the behavior and performance of the model. Fine-tuning these hyperparameters, such as the learning rate, regularization factor, or number of layers, can optimize the model and reduce false positives.

8. Incorporating domain knowledge: Incorporating domain knowledge and expertise can play a significant role in reducing false positives. By understanding the specific application and the nature of the data, experts can guide feature selection, threshold determination, and model optimization to improve the model’s performance.

By implementing these techniques, data analysts and machine learning practitioners can effectively reduce the occurrence of false positives, enhancing the accuracy and reliability of their models. These approaches, combined with continuous evaluation and validation, contribute to the continuous improvement of the model’s performance.

Evaluation Metrics for False Positives

When it comes to assessing the performance of machine learning models in terms of false positives, several evaluation metrics can provide valuable insights. These metrics help quantify the occurrence of false positives and measure the model’s accuracy in identifying positive instances correctly.

1. False Positive Rate (FPR): The false positive rate measures the proportion of negative instances that are incorrectly classified as positive by the model. It is calculated as the ratio of false positives to the sum of true negatives and false positives. A lower FPR indicates a higher accuracy in avoiding false positives.

2. Precision: Precision, also known as positive predictive value, measures the proportion of correctly predicted positive instances out of all the predicted positive instances. It is calculated as the ratio of true positives to the sum of true positives and false positives. High precision indicates a low rate of false positives.

3. Specificity: Specificity measures the proportion of correctly predicted negative instances out of all the actual negative instances. It is calculated as the ratio of true negatives to the sum of true negatives and false positives. High specificity indicates a low rate of false positives for negative instances.

4. Receiver Operating Characteristic (ROC) Curve: The ROC curve visualizes the trade-off between the true positive rate and the false positive rate at different classification thresholds. It helps determine an optimal threshold for balancing the true positive rate and the false positive rate, facilitating decision-making in real-world scenarios.

5. Area Under the ROC Curve (AUC): The AUC summarizes the overall performance of the model across all possible classification thresholds. It provides a single metric to compare models, with a higher AUC indicating better discrimination between positive and negative instances and a lower false positive rate.

6. Average Precision: In scenarios with imbalanced datasets, where the majority class dominates, average precision can be a useful metric. It calculates the average precision value over multiple recall levels, providing a more comprehensive measure of the model’s performance and its ability to handle false positives in different scenarios.

7. F1 Score: The F1 score is the harmonic mean of precision and recall. It captures the balance between precision and recall, considering both false positives and false negatives. A high F1 score indicates a low rate of false positives while also taking into account false negatives.

It is important to consider these evaluation metrics collectively to gain a comprehensive understanding of the model’s performance in relation to false positives. Depending on the specific application, different metrics may be prioritized, allowing for informed decision-making and model optimization.

By utilizing these evaluation metrics, data analysts and machine learning practitioners can assess the efficacy of their models in handling false positives and make informed decisions to improve their performance, accuracy, and reliability.

Challenges in Dealing with False Positives

While reducing false positives is a crucial goal in machine learning and data analysis, there are several challenges that organizations and data practitioners face in effectively dealing with them. These challenges can impact the accuracy and reliability of models and require careful consideration and mitigation strategies.

1. Imbalanced cost of false positives: False positives can have variable costs depending on the specific application. Determining the acceptable level of false positives requires a thorough understanding of the potential consequences and risks associated with each type of error. Balancing the costs associated with false positives and false negatives can be challenging, as their impacts can differ significantly depending on the context.

2. Lack of ground truth: Accurate evaluation of false positives heavily relies on having a reliable ground truth or labeled dataset. However, in some cases, obtaining a complete and representative ground truth can be challenging or time-consuming. This can make it difficult to measure and analyze false positives accurately and improve models accordingly.

3. Data quality and noise: Low-quality or noisy data can contribute to an increased occurrence of false positives. Inadequate data cleaning and preprocessing techniques may lead to misinterpretation of irrelevant or misleading signals by the model. Cleaning and curating the data to ensure its quality and reliability is essential to minimize false positives.

4. Contextual complexity: Certain applications exhibit inherent contextual complexity, making it challenging to distinguish true positives from false positives accurately. For example, in natural language processing tasks, sarcasm, irony, or context-dependent meanings can present difficulties in correctly identifying positive instances, leading to higher false positives.

5. Adversarial attacks: In security-related applications, adversaries can deliberately manipulate input data to deceive the model and increase false positive rates. Adversarial attacks pose a major challenge in maintaining the accuracy of models, requiring additional defenses and robustness measures.

6. Impact of class imbalance: Class imbalance, where one class significantly outweighs the others, can lead to skewed predictions and higher false positives. Addressing class imbalance requires techniques such as oversampling, undersampling, or generating synthetic samples to ensure a balanced representation of all classes in the data.

7. Overfitting and model complexity: Overfitting occurs when a model performs excellently on the training data but fails to generalize to unseen data, resulting in increased false positives. Balancing model complexity and optimizing regularizers can help reduce overfitting and improve the generalization capabilities of the model.

Overcoming the challenges associated with false positives requires a combination of careful data handling, robust model development, and continuous evaluation and improvement. By addressing these challenges, organizations can enhance the accuracy and reliability of their models, leading to more effective decision-making and improved results.

Real-world Examples of False Positives

False positives can occur in various real-world scenarios, impacting different industries and domains. Understanding these examples can shed light on the potential consequences and challenges associated with false positives. Here are a few notable examples:

1. Spam Email Filters: Spam email filters often use machine learning models to classify emails as either spam or legitimate. However, these filters occasionally classify legitimate emails as spam, resulting in false positives. This can lead to important emails being missed or sent to the spam folder, causing inconvenience and potential loss of crucial information.

2. Medical Diagnostics: In medical diagnostics, false positives can lead to unnecessary medical procedures or treatments. For example, in breast cancer screenings, a false positive result from a mammogram can result in additional testing such as biopsies, causing anxiety and unnecessary expenses for patients.

3. Credit Card Fraud Detection: Credit card companies employ machine learning algorithms to flag potentially fraudulent transactions. However, false positive alerts can occur, leading to inconveniences for customers who may experience declined payments or have to verify their identities. False positives in fraud detection systems can negatively impact customer experience and trust.

4. Airport Security: Airport security systems use various screening methods, including machine learning models, to identify potential threats and contraband items. False positives in these systems can result in delays and unnecessary screening procedures for passengers, leading to inconvenience and increased waiting times.

5. Facial Recognition Technology: Facial recognition technology is widely used for identity verification and security purposes. However, false positives can occur when the system incorrectly identifies an individual as someone else, leading to potential privacy concerns and security breaches.

6. Autonomous Vehicles: False positives in autonomous vehicles’ object detection systems can lead to unnecessary braking or swerving, disrupting the flow of traffic and potentially causing accidents. Balancing the false positive rate in these systems is essential to ensure safety on the road.

7. Sentiment Analysis: Sentiment analysis, which aims to determine the sentiment or emotions expressed in text, can produce false positives when classifying positive sentiment. For instance, classifying sarcastic or ironic statements as positive sentiment can lead to inaccuracies in measuring customer satisfaction or perception.

These real-world examples highlight the importance of minimizing false positives in various industries and applications. Addressing the challenges associated with false positives and continuously improving the accuracy and reliability of machine learning models are crucial steps to avoid unnecessary costs, inconvenience, and potential risks.