What Is F-Score?

The F-Score is an evaluation metric commonly used in machine learning to measure the performance of a classification model. It combines precision and recall into a single value that summarizes how well the model identifies the positive class. Because true negatives do not enter the calculation, it says nothing about how well negative samples are classified.

Precision and recall are two important metrics in binary classification. Precision measures the proportion of true positive predictions out of all positive predictions, while recall measures the proportion of true positive predictions out of all actual positive samples. While precision focuses on the accuracy of positive predictions, recall focuses on the completeness of the positive predictions.

The most commonly reported F-Score is the F1-Score, which is the harmonic mean of precision and recall and gives equal importance to both metrics. Unlike an arithmetic mean, the harmonic mean is pulled toward the smaller of the two values, so a model scores well only when precision and recall are both high. By taking into account both precision and recall, the F-Score provides a balanced measure of a model’s performance.

The formula to calculate the F-Score is as follows:

F-Score = 2 * (precision * recall) / (precision + recall)

The F-Score ranges from 0 to 1, with a higher value indicating better model performance. A perfect F-Score of 1 means that the model has achieved perfect precision and perfect recall, identifying all positive samples without any false positives or false negatives.
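As a minimal sketch of the formula above, the following Python function (the name `f1_score` is chosen here for illustration) computes the harmonic mean and contrasts it with a simple arithmetic mean. Returning 0 when both inputs are 0 is a convention assumed here; the formula itself is undefined in that case.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (the F1-Score)."""
    if precision + recall == 0:
        # Assumed convention: define the score as 0 when both inputs are 0.
        return 0.0
    return 2 * (precision * recall) / (precision + recall)


# A hypothetical model with perfect precision but very low recall.
precision, recall = 1.0, 0.1
arithmetic_mean = (precision + recall) / 2

print(f"arithmetic mean: {arithmetic_mean:.2f}")              # 0.55
print(f"F1 (harmonic):   {f1_score(precision, recall):.2f}")  # 0.18
```

The harmonic mean stays close to the weaker of the two values, which is why a model cannot reach a high F-Score by excelling at only one of precision or recall.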

One of the main advantages of the F-Score is that it provides a single value to compare different models or algorithms. It allows data scientists and researchers to easily assess and compare the performance of different classification models, helping them choose the most suitable model for their specific problem.

However, it’s important to note that the F-Score may not be the ideal metric in all scenarios. It is particularly useful when the costs of false positives and false negatives are roughly equal, and both precision and recall are equally important. In some cases, domain-specific considerations may require the prioritization of precision or recall over the other.

Furthermore, the F-Score must be interpreted with the class distribution in mind. It ignores true negatives, which is why it is often preferred over accuracy when the positive class is rare, but its value depends on which class is designated as positive and on how common that class is. As a result, F-Scores measured on datasets with different class ratios are not directly comparable. When performance across all decision thresholds matters, alternative evaluation metrics like area under the receiver operating characteristic curve (AUC-ROC) or the precision-recall curve may be more appropriate.
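To illustrate how class imbalance affects different metrics, the sketch below uses made-up counts for a degenerate classifier that predicts the negative class for every sample. The counts and the zero-division convention are assumptions for illustration only.

```python
# Hypothetical test set: 10 positive and 990 negative samples.
# The "model" predicts negative for everything, so it never finds a positive.
tp, fp, fn, tn = 0, 0, 10, 990

accuracy = (tp + tn) / (tp + fp + fn + tn)

# Count-based form of F1: 2*TP / (2*TP + FP + FN).
# Assumed convention: the score is 0 when the denominator is 0.
denominator = 2 * tp + fp + fn
f1 = 2 * tp / denominator if denominator else 0.0

print(f"accuracy: {accuracy:.2f}")  # 0.99
print(f"F1:       {f1:.2f}")        # 0.00
```

Accuracy looks excellent because the negative class dominates, while the F-Score of 0 exposes that no positive sample was found.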

Precision, Recall, and F-Score

Precision, recall, and F-Score are three important metrics used in machine learning to evaluate the performance of classification models. Understanding these metrics is essential for assessing the accuracy and effectiveness of a model.

Precision, also known as positive predictive value, measures the proportion of correctly predicted positive instances out of all instances predicted as positive. It is calculated using the formula:

Precision = TP / (TP + FP)

Here, TP represents true positive, which refers to the number of correctly predicted positive instances, and FP represents false positive, which refers to the number of instances falsely predicted as positive.

Recall, also known as sensitivity or true positive rate, measures the proportion of correctly predicted positive instances out of all actual positive instances. It is calculated using the formula:

Recall = TP / (TP + FN)

Here, FN represents false negative, which refers to the number of instances that are wrongly predicted as negative while they are actually positive.

The F-Score, also known as the F1-Score, combines precision and recall into a single metric, providing a comprehensive evaluation of a model’s performance. It is calculated using the harmonic mean of precision and recall, as shown by the formula:

F-Score = 2 * (precision * recall) / (precision + recall)

The F-Score ranges from 0 to 1, with a higher value indicating better model performance. A perfect F-Score of 1 means that the model has achieved perfect precision and perfect recall, identifying all positive instances without any false positives or false negatives.
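The three formulas above can be sketched in Python starting from raw labels. The helper names and the tiny label lists are hypothetical, and returning 0 for an empty denominator is an assumed convention.

```python
from typing import Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int],
                     positive: int = 1) -> Tuple[int, int, int, int]:
    """Count (TP, FP, TN, FN) for the chosen positive label."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1
            else:
                fp += 1
        else:
            if actual == positive:
                fn += 1
            else:
                tn += 1
    return tp, fp, tn, fn


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Apply the precision, recall, and F1 formulas to confusion counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0  # assumed 0 if nothing predicted positive
    recall = tp / (tp + fn) if (tp + fn) else 0.0     # assumed 0 if no actual positives
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Made-up labels: 1 = positive, 0 = negative.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]

tp, fp, tn, fn = confusion_counts(y_true, y_pred)
print(tp, fp, tn, fn)                   # 3 1 3 1
print(precision_recall_f1(tp, fp, fn))  # (0.75, 0.75, 0.75)
```

Note that TN is counted but never used by any of the three metrics.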

Precision and recall are interconnected metrics that reflect different aspects of a model’s performance. Precision focuses on reducing the number of false positives, while recall emphasizes minimizing false negatives. Depending on the context and requirements of the classification task, one metric might take priority over the other.

For example, in a spam email classification system, high precision is desirable to minimize the chances of legitimate emails being marked as spam. On the other hand, in a disease diagnosis system, high recall is important to ensure that all actual positive cases are correctly identified, even if it means accepting some false positives.

The F-Score provides a balance between precision and recall, making it a useful metric in many classification tasks. It allows researchers and data scientists to compare and select models based on both precision and recall performance simultaneously.

However, it’s crucial to consider the specific characteristics of the dataset and the requirements of the problem at hand. In certain cases, alternative evaluation metrics may be more appropriate to assess the performance of classification models.

Calculating Precision

Precision is a crucial metric for evaluating the performance of a classification model. It measures the accuracy of positive predictions, indicating the proportion of correctly predicted positive instances out of all instances predicted as positive.

The formula to calculate precision is:

Precision = TP / (TP + FP)

where TP represents the number of true positive instances, and FP represents the number of false positive instances.

To understand precision in a classification context, let’s consider an example. Suppose we have built a model to classify emails as either spam or not spam. We evaluate the model using a test dataset and obtain the following results:

- True Positive (TP): 200 emails correctly classified as spam

- False Positive (FP): 30 emails incorrectly classified as spam

- True Negative (TN): 800 non-spam emails correctly classified

- False Negative (FN): 50 spam emails incorrectly classified as not spam

Using these values, we can calculate precision as follows:

- Precision = TP / (TP + FP)

- Precision = 200 / (200 + 30)

- Precision = 0.87

In this example, the precision of the classification model for identifying spam emails is 0.87, or 87%. This means that out of all the emails predicted as spam, 87% of them were correctly classified.
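The arithmetic for the spam example can be checked with a few lines of Python. Accuracy is included only as a point of comparison, to show which counts each metric uses; the variable names are illustrative.

```python
# Counts from the spam-classification example.
tp, fp, tn, fn = 200, 30, 800, 50

# Precision uses only the emails that were predicted as spam.
precision = tp / (tp + fp)

# Accuracy, for comparison, uses all four counts.
accuracy = (tp + tn) / (tp + fp + tn + fn)

print(f"precision: {precision:.4f}")  # 0.8696
print(f"accuracy:  {accuracy:.4f}")   # 0.9259
```

Changing TN or FN would alter accuracy but leave precision untouched, since neither count appears in the precision formula.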

A higher precision value means that a smaller share of the model’s positive predictions are false positives. High precision is desirable in scenarios where false positives have significant consequences or where accurate identification of positive instances is crucial.

However, it’s important to note that precision alone does not provide a complete picture of a model’s performance. It should be considered alongside other metrics such as recall and F-Score to have a comprehensive evaluation.

Calculating precision is essential for assessing the effectiveness of a classification model. By understanding the proportion of correctly predicted positive instances, data scientists can determine if their model is achieving the desired level of accuracy in positive predictions.

Calculating Recall

Recall, also known as sensitivity or true positive rate, is a crucial metric for evaluating the performance of a classification model. It measures the ability of the model to identify all actual positive instances correctly.

The formula to calculate recall is:

Recall = TP / (TP + FN)

where TP represents the number of true positive instances, and FN represents the number of false negative instances.

To understand recall in a classification context, let’s consider an example. Suppose we have built a model to detect fraudulent transactions. When evaluating the model using a test dataset, we obtain the following results:

- True Positive (TP): 150 fraudulent transactions correctly identified

- False Negative (FN): 50 fraudulent transactions missed or not identified

- True Negative (TN): 800 legitimate transactions correctly identified

- False Positive (FP): 20 legitimate transactions incorrectly identified as fraudulent

Using these values, we can calculate recall as follows:

- Recall = TP / (TP + FN)

- Recall = 150 / (150 + 50)

- Recall = 0.75

In this example, the recall of the classification model for detecting fraudulent transactions is 0.75, or 75%. This means that the model correctly identified 75% of the total fraudulent transactions present in the dataset.
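The same calculation for the fraud example, sketched in Python. The miss rate (1 - recall), precision, and F1 are computed from the same counts for context; the variable names are illustrative.

```python
# Counts from the fraud-detection example.
tp, fn, tn, fp = 150, 50, 800, 20

recall = tp / (tp + fn)        # share of fraudulent transactions caught
miss_rate = fn / (tp + fn)     # share of fraudulent transactions missed (1 - recall)
precision = tp / (tp + fp)     # share of fraud alerts that were correct
f1 = 2 * precision * recall / (precision + recall)

print(f"recall:    {recall:.4f}")     # 0.7500
print(f"miss rate: {miss_rate:.4f}")  # 0.2500
print(f"precision: {precision:.4f}")  # 0.8824
print(f"F1:        {f1:.4f}")         # 0.8108
```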

A higher recall value indicates that the model is better at capturing all positive instances, minimizing the number of false negatives. High recall is desirable in scenarios where it is crucial to identify as many positive instances as possible, even if it means accepting some false positives.

However, it’s important to note that recall alone does not provide a complete picture of a model’s performance. It should be considered alongside other metrics such as precision and F-Score to have a comprehensive evaluation.

Calculating recall is an essential step in assessing the effectiveness of a classification model. By understanding the proportion of correctly identified positive instances, data scientists can determine if their model is achieving the desired level of recall in capturing all actual positive cases.

Understanding the F-Score

The F-Score, also known as the F1-Score, is a metric utilized in machine learning that combines precision and recall into a single value. It provides a balanced measure of a classification model’s performance by considering both the accuracy and completeness of positive predictions.

The F-Score is calculated using the harmonic mean of precision and recall, which gives equal importance to both metrics. The formula to calculate the F-Score is:

F-Score = 2 * (precision * recall) / (precision + recall)

A higher F-Score indicates better model performance, with a perfect score of 1 signifying that the model achieved perfect precision and perfect recall, identifying all positive instances without any false positives or false negatives.

By incorporating both precision and recall, the F-Score provides a more comprehensive evaluation of a model’s effectiveness compared to using either metric alone. It highlights the trade-off between precision and recall and allows data scientists to assess the overall performance of a classification model.

When the F-Score is high, it suggests that the model has both good accuracy in predicting positive instances and the ability to capture most of the actual positive instances. This is particularly valuable in situations where both precision and recall are equally important.

However, it’s important to note that the F-Score may not always be the ideal metric for evaluating a classification model. Its applicability depends on the specific requirements and objectives of the problem at hand.

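In cases where precision is of utmost importance, such as in spam email classification, a higher weight can be given to precision over recall. On the other hand, in scenarios where recall is more critical, such as in disease diagnosis, a higher weight can be assigned to recall over precision. This weighting is expressed by the generalized F-beta score, F-beta = (1 + beta^2) * (precision * recall) / (beta^2 * precision + recall), where beta below 1 favors precision, beta above 1 favors recall, and beta equal to 1 gives the F1-Score.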
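One standard way to weight precision and recall differently is the F-beta score, which reduces to F1 when beta is 1. The sketch below is a minimal implementation; the function name, the example values, and the zero-division convention are assumptions for illustration.

```python
def fbeta_score(precision: float, recall: float, beta: float = 1.0) -> float:
    """F-beta: beta < 1 weights precision more, beta > 1 weights recall more."""
    if precision == 0 and recall == 0:
        return 0.0  # assumed convention for the undefined case
    beta_sq = beta ** 2
    return (1 + beta_sq) * precision * recall / (beta_sq * precision + recall)


# A hypothetical model that is more precise than it is complete.
precision, recall = 0.9, 0.6

print(f"F0.5: {fbeta_score(precision, recall, beta=0.5):.4f}")  # 0.8182
print(f"F1:   {fbeta_score(precision, recall, beta=1.0):.4f}")  # 0.7200
print(f"F2:   {fbeta_score(precision, recall, beta=2.0):.4f}")  # 0.6429
```

Because this model's precision exceeds its recall, the precision-weighted F0.5 is the highest of the three and the recall-weighted F2 the lowest.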

Furthermore, the F-Score should be read with the class distribution in mind. Because it ignores true negatives, it depends on which class is designated as positive and on how common that class is. In situations where the dataset is imbalanced, with a significantly larger number of instances in one class than the other, a given F-Score is therefore not directly comparable to the same score on a dataset with a different class ratio.

Therefore, it’s essential to carefully consider the specific requirements, objectives, and characteristics of the dataset when interpreting and utilizing the F-Score as an evaluation metric in machine learning.

Advantages of the F-Score

The F-Score, also known as the F1-Score, offers several advantages as an evaluation metric in machine learning. Understanding these advantages can help data scientists and researchers assess the performance of classification models more effectively.

1. Comprehensive Performance Assessment: The F-Score combines precision and recall into a single value, providing a balanced measure of a model’s performance. It considers both the accuracy and completeness of positive predictions, allowing for a more holistic evaluation of the model’s effectiveness.

2. Single Metric for Model Comparison: The F-Score provides a single value that can be used to compare and assess different models or algorithms. Data scientists can easily evaluate the performance of various classification models and select the most suitable one based on the F-Score.

3. Trade-off Between Precision and Recall: The F-Score highlights the trade-off between precision and recall. It helps in finding the right balance between accurately predicting positive instances (precision) and capturing as many actual positive instances as possible (recall).

4. Awareness of False Positives and False Negatives: By incorporating both precision and recall, the F-Score penalizes both false positives and false negatives. This is valuable in domains where both kinds of error carry a significant cost.

5. Simple to Understand and Communicate: The F-Score formula is straightforward, making it easy to understand and communicate model performance. Its value ranges from 0 to 1, with a higher score indicating better performance, making it accessible to both technical and non-technical stakeholders.

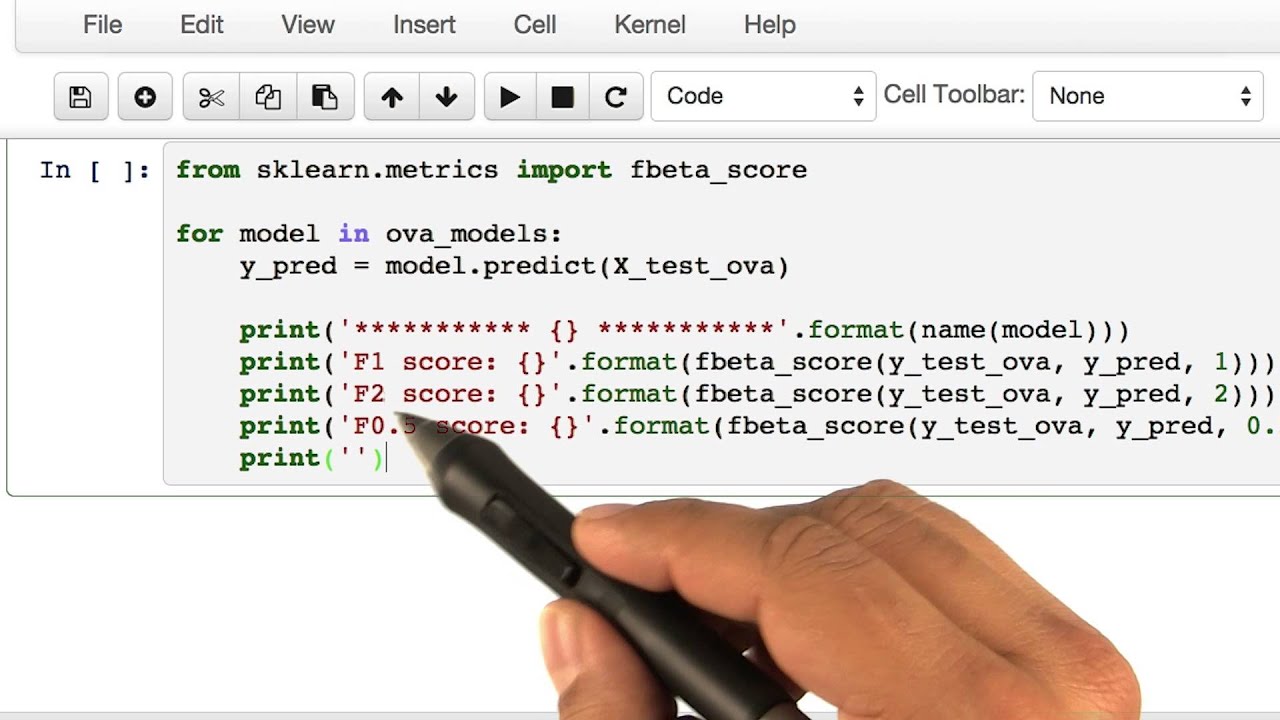

6. Flexibility in Weighting Precision and Recall: The generalized F-beta score allows for flexibility in weighting precision and recall. Depending on the specific requirements of the classification task, data scientists can choose beta below 1 to prioritize precision or above 1 to prioritize recall; beta equal to 1 gives the standard F1-Score.

7. Integration with Machine Learning Frameworks: The F-Score is widely implemented in popular machine learning frameworks and libraries, making it readily available for evaluation and optimization. Its usage is supported by a range of tools, making the implementation process seamless and efficient.

While the F-Score offers many advantages, it’s crucial to consider the specific characteristics of the dataset and the objectives of the classification task. It may not always be the ideal metric, and alternative evaluation metrics like area under the receiver operating characteristic curve (AUC-ROC) or precision-recall curve should be considered when appropriate.

Limitations of the F-Score

The F-Score, while a useful metric in evaluating classification models, has certain limitations that should be considered when interpreting its results. Understanding these limitations helps ensure a comprehensive evaluation of a model’s performance.

1. Sensitivity to Class Distribution: The F-Score does not assume a balanced dataset, and it is often preferred over accuracy when the positive class is rare. However, because it ignores true negatives, its value depends on which class is designated as positive and on the prevalence of that class. In real-world scenarios, datasets are often imbalanced, with significantly more instances in one class than the other, so F-Scores measured on datasets with different class ratios are not directly comparable.

2. No Account of True Negatives: The F-Score prioritizes the balance between precision and recall, but it does not consider true negatives and therefore does not reflect the overall accuracy of the model. It is possible to have a model with satisfactory precision and recall that nonetheless classifies the negative class poorly. Thus, it is crucial to consider other evaluation metrics in conjunction with the F-Score to obtain a more complete picture of the model’s performance.

3. Context-Specific Requirements: The F1-Score weights precision and recall equally, which may not align with the specific requirements of every classification task. Depending on the domain and the consequences of false positives or false negatives, precision or recall may need to be prioritized. In such cases, the weighted F-beta score, or an analysis of the full precision-recall or Receiver Operating Characteristic (ROC) curve across decision thresholds, may be more appropriate.

4. Limited to Binary Classification: The F-Score is primarily designed for binary classification problems, where there are two classes. Extending it to multi-class classification tasks often requires modifications or using variant metrics like the micro-average or macro-average F-Score. These adaptations can introduce additional complexities and may not fully capture the intricacies of multi-class classification.

5. Lack of Insight into Class Imbalances: The F-Score does not provide detailed information regarding class imbalances. It does not differentiate between cases where both classes are accurately predicted and cases where one class dominates the predictions. This limitation can impact the interpretation of model performance, particularly in situations where the minority class is of high interest.

6. Insensitivity to Specific Misclassifications: The F-Score, being a combination of precision and recall, does not distinguish between different types of misclassifications. It treats all false positives and false negatives equally. In cases where specific types of misclassifications are of higher concern, additional evaluation metrics or post-processing techniques may be necessary to address these nuances.

Despite these limitations, the F-Score remains a widely used metric due to its simplicity and ability to provide a balanced assessment of model performance. It serves as a valuable tool in evaluating classification models, but careful consideration of the specific context and requirements of the classification task is necessary for a thorough evaluation.
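The macro-average and micro-average variants mentioned in the list above can be sketched in plain Python. The labels and helper names are made up for illustration, and the score for a class with no true positives, false positives, or false negatives is assumed to be 0.

```python
from collections import Counter


def per_class_counts(y_true, y_pred):
    """Count true positives, false positives, and false negatives per class."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for actual, predicted in zip(y_true, y_pred):
        if actual == predicted:
            tp[actual] += 1
        else:
            fp[predicted] += 1  # predicted class gained a false positive
            fn[actual] += 1     # actual class gained a false negative
    return tp, fp, fn


def f1_from_counts(tp, fp, fn):
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0  # assumed 0 when undefined


y_true = ["cat", "cat", "cat", "dog", "dog", "bird"]
y_pred = ["cat", "cat", "dog", "dog", "dog", "cat"]

labels = sorted(set(y_true) | set(y_pred))
tp, fp, fn = per_class_counts(y_true, y_pred)

# Macro: compute F1 per class, then average (every class counts equally).
per_class_f1 = {c: f1_from_counts(tp[c], fp[c], fn[c]) for c in labels}
macro_f1 = sum(per_class_f1.values()) / len(labels)

# Micro: pool the counts over all classes, then compute one F1.
micro_f1 = f1_from_counts(sum(tp.values()), sum(fp.values()), sum(fn.values()))

print(f"macro F1: {macro_f1:.4f}")  # 0.4889
print(f"micro F1: {micro_f1:.4f}")  # 0.6667
```

The macro average is dragged down by the "bird" class, which is never predicted correctly, while the micro average is dominated by the more frequent classes. For single-label multi-class data like this, micro-averaged F1 equals accuracy.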

Adjusting the F-Score for Imbalanced Datasets

The F-Score, while widely used in evaluating classification models, needs care when dealing with imbalanced datasets. In scenarios where the number of instances in one class significantly outweighs the other, a model can neglect the minority class, and the F-Score itself changes depending on which class is treated as positive. To address this, several adjustments can be made to the data, the model, or the evaluation.

1. Stratified Sampling: When splitting the dataset into training and testing sets, it is important to use stratified sampling. This ensures that each set preserves the class proportions of the full dataset, so the minority class is represented in both. With a representative test set, the F-Score is more likely to provide meaningful insights into model performance.

2. Oversampling and Undersampling: Oversampling and undersampling techniques are used to adjust the class distribution in the dataset. Oversampling involves duplicating instances from the minority class to increase its representation, while undersampling reduces the instances from the majority class to achieve a balanced dataset. Resampling should be applied to the training data only, so that the F-Score is still measured on a test set with the original class distribution.

3. Synthetic Minority Over-sampling Technique (SMOTE): SMOTE is a popular oversampling technique used to generate synthetic samples for the minority class. It creates new instances by interpolating between existing minority class samples. This helps to overcome the scarcity of instances in the minority class and provides a better representation of both classes during model training. As with other resampling methods, the synthetic samples belong in the training set only.

4. Cost-Sensitive Learning: Assigning different misclassification costs to each class can help balance the impact of class imbalance on the F-Score. By assigning higher costs to misclassifications in the minority class, the model is encouraged to pay more attention to correctly predicting instances from the minority class, thus reducing the bias caused by class imbalance.

5. Performance Metrics Specific to Imbalanced Datasets: In addition to the F-Score, alternative performance metrics are available that are well suited to the challenges of imbalanced datasets. These include the area under the precision-recall curve (AUC-PRC), which explicitly considers precision and recall trade-offs, and the Matthews correlation coefficient (MCC), which takes into account true positives, true negatives, false positives, and false negatives.

6. Algorithmic Techniques: Some machine learning algorithms offer built-in mechanisms to handle imbalanced datasets. Examples include decision trees with class-weighted criteria or ensemble methods like Random Forest with balanced selection strategies. These techniques inherently address class imbalance to some extent and can help improve model performance and the F-Score.
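Two of the data-level adjustments in the list above, stratified splitting and random oversampling, can be sketched with the standard library alone. This is an illustrative implementation on made-up labels, not a substitute for a tested library routine; the function names and the 25% test fraction are assumptions.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.25, seed=0):
    """Return (train_idx, test_idx) with each class's share preserved in both."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return train_idx, test_idx


def random_oversample(indices, labels, seed=0):
    """Duplicate minority-class indices at random until all classes are equal in size."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index in indices:
        by_class[labels[index]].append(index)
    target = max(len(group) for group in by_class.values())
    balanced = []
    for group in by_class.values():
        balanced.extend(group)
        balanced.extend(rng.choices(group, k=target - len(group)))
    return balanced


# Hypothetical dataset: 20 positives (10%) and 180 negatives.
labels = [1] * 20 + [0] * 180

train_idx, test_idx = stratified_split(labels)
# Oversample the training portion only; the test set keeps the original ratio.
train_balanced = random_oversample(train_idx, labels)

print(len(test_idx), sum(labels[i] for i in test_idx))              # 50 5
print(len(train_balanced), sum(labels[i] for i in train_balanced))  # 270 135
```

The test set keeps the original 10% positive rate, so an F-Score measured on it reflects the distribution the model will actually face.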

It’s important to note that the choice of adjustments depends on the specific characteristics of the dataset and the objectives of the classification task. It is advisable to experiment with different techniques and evaluate their impact on the F-Score to determine the most suitable approach for addressing class imbalance.
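As one way to compare evaluation choices, the sketch below computes the F-Score and the Matthews correlation coefficient (MCC) from the counts of the fraud-detection example, first with fraud as the positive class and then with the roles of the classes swapped. The helper names are illustrative, and returning 0 for a zero denominator is an assumed convention.

```python
import math


def f1_from_counts(tp, fp, fn):
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0


def mcc(tp, fp, tn, fn):
    """Matthews correlation coefficient from the four confusion counts."""
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denominator if denominator else 0.0


# Fraud treated as the positive class.
tp, fn, tn, fp = 150, 50, 800, 20
f1_fraud = f1_from_counts(tp, fp, fn)
mcc_fraud = mcc(tp, fp, tn, fn)

# Legitimate treated as the positive class: TP<->TN and FP<->FN swap roles.
f1_legit = f1_from_counts(tn, fn, fp)
mcc_legit = mcc(tn, fn, tp, fp)

print(f"F1  (fraud positive): {f1_fraud:.4f}")   # 0.8108
print(f"F1  (legit positive): {f1_legit:.4f}")   # 0.9581
print(f"MCC (fraud positive): {mcc_fraud:.4f}")  # 0.7730
print(f"MCC (legit positive): {mcc_legit:.4f}")  # 0.7730
```

The F-Score changes when the positive class changes, whereas MCC uses all four counts symmetrically and stays the same.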

Application of the F-Score in Machine Learning

The F-Score, with its ability to balance precision and recall, finds broad application in various areas of machine learning. It serves as a valuable metric in evaluating the performance of classification models and aids in decision-making processes. Here are some key applications of the F-Score:

1. Binary Classification: The F-Score is most commonly used in binary classification tasks, where there are two classes. It allows data scientists to assess the effectiveness of a model in correctly predicting positive instances while minimizing both false positives and false negatives.

2. Model Comparison: The F-Score offers a single metric to compare and select the most suitable classification model for a given problem. By evaluating the F-Scores of different models, data scientists can make informed decisions regarding the algorithm or approach to employ for optimal performance.

3. Feature Selection: The F-Score can serve as the scoring criterion in wrapper-style feature selection, where candidate feature subsets are compared by the F-Score of a model trained on each. Note that the per-feature "F-score" reported by many filter methods is usually the ANOVA F-statistic or the Fisher score, a different quantity that happens to share the name; it ranks individual features by how well they separate the classes.

4. Hyperparameter Tuning: Hyperparameters, such as the decision threshold or the weight assigned to the positive class, can significantly impact the F-Score. Data scientists can use techniques like grid search with cross-validation to find the values that maximize the F-Score on validation data, resulting in an improved model.

5. Anomaly Detection: Anomaly detection involves identifying instances that deviate significantly from the usual patterns. The F-Score can be utilized to evaluate the performance of anomaly detection models, considering both precision and recall in flagging anomalies effectively.

6. Fraud Detection: The F-Score finds particular application in fraud detection systems, where correctly identifying fraudulent instances while minimizing false positives is crucial. The F-Score aids in evaluating the accuracy and completeness of fraud detection models, helping organizations make informed decisions to mitigate financial risks.

7. Medical Diagnosis: In medical diagnosis systems, accurate identification of diseases is critical. The F-Score is utilized to assess the performance of classification models employed in detecting various medical conditions, ensuring a balance between minimizing false negatives and false positives.

8. Natural Language Processing: In sentiment analysis or text classification tasks, the F-Score can be employed to evaluate the performance of machine learning models in classifying text-based data. This is particularly important when balancing the accuracy and completeness of positive predictions.

The F-Score, due to its ability to provide a balanced assessment of model performance, finds application in numerous fields and plays a significant role in machine learning evaluation and decision-making processes. However, it’s important to consider the specific characteristics and requirements of each application area to ensure the appropriate utilization of the F-Score and other complementary evaluation metrics.
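As an illustration of tuning a decision threshold against the F-Score, the sketch below sweeps candidate thresholds over made-up model scores and keeps the one with the highest F1. The data and function name are hypothetical; in practice the sweep would be run on a validation set rather than on the final test set.

```python
def f1_at_threshold(y_true, scores, threshold):
    """F1 when every sample with score >= threshold is predicted positive."""
    tp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 1)
    fp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 0)
    fn = sum(1 for y, s in zip(y_true, scores) if s < threshold and y == 1)
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0  # assumed 0 when undefined


# Hypothetical validation labels and predicted probabilities.
y_true = [0, 0, 0, 0, 1, 0, 1, 1, 0, 1]
scores = [0.05, 0.10, 0.20, 0.30, 0.35, 0.40, 0.60, 0.70, 0.80, 0.90]

# Use each observed score as a candidate threshold.
candidates = sorted(set(scores))
best_threshold = max(candidates, key=lambda t: f1_at_threshold(y_true, scores, t))

print(f"F1 at default 0.5: {f1_at_threshold(y_true, scores, 0.5):.2f}")  # 0.75
print(f"best threshold:    {best_threshold}")                            # 0.35
print(f"F1 at best:        {f1_at_threshold(y_true, scores, best_threshold):.2f}")  # 0.80
```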

Other Evaluation Metrics in Machine Learning

While the F-Score is a widely used and valuable metric in machine learning, it is important to consider other evaluation metrics to gain a comprehensive understanding of model performance. These additional metrics provide different insights and can be used in various scenarios. Here are some other common evaluation metrics:

1. Accuracy: Accuracy measures the overall correctness of predictions by calculating the proportion of correct predictions out of all instances. While accuracy is a straightforward metric, it can be misleading in the presence of imbalanced datasets. It is best used when the classes are evenly distributed.

2. Precision and Recall: Precision and recall, which are also used in the calculation of the F-Score, focus on the positive class. Precision measures the proportion of correctly predicted positive instances out of all predicted positive instances, while recall measures the proportion of correctly predicted positive instances out of all actual positive instances. These metrics are valuable when controlling false positives or false negatives is critical.

3. Area Under the Curve (AUC): The AUC is commonly used in binary classification problems, particularly when evaluating models that produce probabilities instead of binary predictions. It calculates the area under the Receiver Operating Characteristic (ROC) curve, which graphs the true positive rate against the false positive rate. A higher AUC value indicates a better-performing model.

4. Log Loss: Log loss, also known as cross-entropy loss, is used to evaluate models that produce probability distributions. It measures how well the predicted probabilities match the true class labels. A lower log loss value indicates better model calibration and a closer alignment with the true class probabilities.

5. Mean Average Precision (mAP): mAP is commonly used in information retrieval and object detection tasks. Average precision summarizes the precision-recall curve for a single query or class by averaging precision over recall levels, and mAP is the mean of these values across all queries or classes. mAP is valuable when ranking and retrieving relevant instances are crucial.

6. Cohen’s Kappa: Cohen’s Kappa measures the agreement between two annotators or evaluators. It takes into account the possibility of agreement by chance and provides a score that ranges from -1 to 1. A score above 0 indicates agreement beyond chance, while a score below 0 indicates less agreement than would be expected by chance. Cohen’s Kappa is useful when evaluating inter-rater agreement in tasks such as human annotation or agreement between multiple models.

7. Mean Squared Error (MSE): MSE is commonly used to evaluate regression models. It measures the average squared difference between the predicted and actual values. A lower MSE indicates a better-fitting model.

8. Root Mean Squared Error (RMSE): RMSE is the square root of the MSE. It expresses the typical size of the prediction error in the same unit as the target variable, and because errors are squared before averaging, it penalizes large errors more heavily than the mean absolute error does.

While the F-Score is a valuable metric, considering these additional evaluation metrics provides a more comprehensive assessment of a model’s performance. The choice of metric(s) depends on the specific problem, dataset characteristics, and the importance of different performance aspects within the given context.
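For the two regression metrics in the list, a short sketch on made-up values shows how MSE and RMSE are computed. The mean absolute error (MAE) is not discussed above and is included here only as a point of comparison.

```python
import math

# Hypothetical regression targets and predictions.
actual = [3.0, 5.0, 7.0, 9.0]
predicted = [2.0, 5.0, 8.0, 13.0]

errors = [p - a for a, p in zip(actual, predicted)]  # [-1.0, 0.0, 1.0, 4.0]

mse = sum(e * e for e in errors) / len(errors)
rmse = math.sqrt(mse)
mae = sum(abs(e) for e in errors) / len(errors)

print(f"MSE:  {mse:.4f}")   # 4.5000
print(f"RMSE: {rmse:.4f}")  # 2.1213
print(f"MAE:  {mae:.4f}")   # 1.5000
```

RMSE is larger than MAE here because the single large error of 4 is squared before averaging and so carries more weight.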