Understanding Dimensionality Reduction

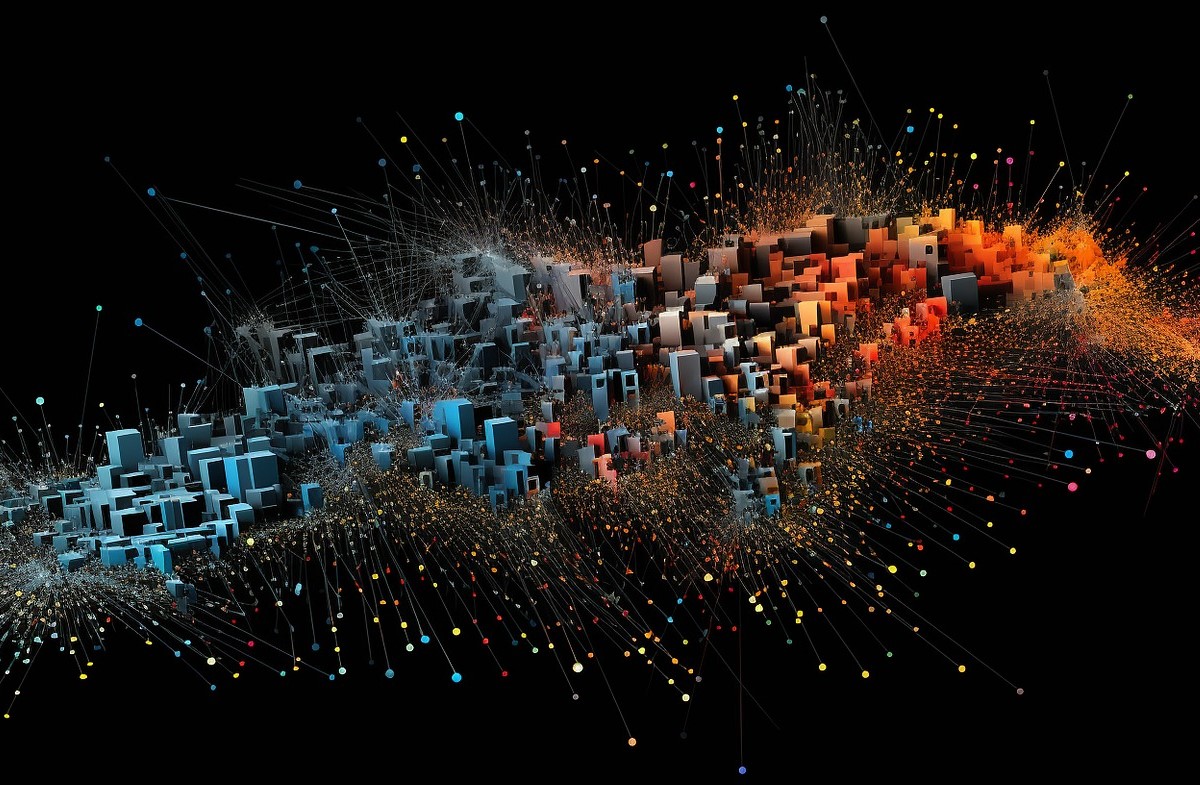

Dimensionality reduction is a technique in machine learning and data analysis that aims to reduce the number of features or variables in a dataset. It involves transforming a dataset with a large number of dimensions into a lower-dimensional space while preserving its essential characteristics. By doing so, dimensionality reduction makes the data more manageable, easier to visualize, and reduces the complexity of machine learning models.

In a high-dimensional dataset, each feature represents a different aspect of the data. However, when the number of features becomes excessive, it can lead to the curse of dimensionality. The curse of dimensionality refers to the problems that arise when working with high-dimensional data, including increased computational complexity, decreased efficiency of algorithms, overfitting, and difficulties in interpretation.

Dimensionality reduction helps to overcome these challenges in one of two ways: by identifying the most informative features and discarding irrelevant or redundant ones, or by constructing a smaller set of new features that summarize the original ones. In both cases it exposes the underlying structure of the data and simplifies its representation. This reduces computational costs and can improve the performance and interpretability of machine learning models.

There are two primary approaches to dimensionality reduction: feature selection and feature extraction. Feature selection aims to select a subset of the original features, while feature extraction creates new features that are combinations or transformations of the original ones. Both approaches have their strengths and weaknesses and are suitable for different scenarios.

Dimensionality reduction techniques can be broadly categorized into linear and nonlinear methods. Linear methods, such as Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA), assume that the underlying data has linear structures. They project the data onto a lower-dimensional linear subspace chosen to retain as much of the variance (PCA) or as much of the class separability (LDA) as possible. Nonlinear methods, such as t-SNE (t-Distributed Stochastic Neighbor Embedding) and Isomap, can capture nonlinear relationships and preserve neighborhood information.

Furthermore, feature extraction methods such as autoencoders use neural networks to learn compact, lower-dimensional representations of the data. These methods have gained popularity because they can capture complex patterns and nonlinear relationships.

Overall, dimensionality reduction plays a crucial role in data preprocessing and analysis. It enables the extraction of meaningful information from high-dimensional data, improves the performance of machine learning algorithms, and aids in data visualization. With the advancement of techniques and algorithms, it continues to be an active area of research in the field of machine learning and data analysis.

Why is Dimensionality Reduction Important?

Dimensionality reduction is an essential technique in the field of machine learning and data analysis. It offers a range of benefits that make it crucial in a variety of domains and applications.

One of the primary reasons why dimensionality reduction is important is that it helps to address the curse of dimensionality. The curse of dimensionality refers to the challenges that arise when working with high-dimensional data, such as increased computational complexity, decreased efficiency of algorithms, overfitting, and difficulties in interpretation. By reducing the number of features or variables in a dataset, dimensionality reduction mitigates these issues and makes the data more manageable and easier to analyze.

Another key advantage of dimensionality reduction is improved model performance. High-dimensional data can lead to models that are too complex, resulting in overfitting. Overfitting occurs when a model learns the noise in the training data along with the genuine patterns, leading to poor generalization on unseen data. Reducing the dimensionality lowers the model’s complexity and therefore the risk of overfitting, which can improve its ability to generalize and make accurate predictions on new data.

Dimensionality reduction is also important for data visualization. Visualizing high-dimensional data is challenging, if not impossible, for humans. By reducing the dimensions, the data can be projected into lower-dimensional spaces that can be visualized on a graph or plot. This enables humans to gain insights and understand the underlying patterns and structures within the data.

Furthermore, dimensionality reduction can aid in feature selection and feature engineering. It helps identify the most relevant and informative features in a dataset, which can be crucial for improving model performance and interpretability. By discarding irrelevant or redundant features, dimensionality reduction streamlines the feature space, making it easier to analyze and interpret the relationships between variables.

In addition to the above benefits, dimensionality reduction can also lead to significant improvements in computational efficiency. Reducing the number of features in a dataset reduces the computational resources required for analysis and model training. This is particularly important when working with large datasets or limited computing resources, as it enables faster computation and reduces the overall processing time.

Challenges in High Dimensional Data

High-dimensional data refers to datasets that contain a large number of features or variables. While high-dimensional data can provide a wealth of information, it also presents unique challenges that need to be addressed. Understanding and overcoming these challenges is crucial for effective data analysis and model building.

One of the primary challenges in high-dimensional data is the curse of dimensionality. As the number of features increases, the volume of the feature space grows exponentially, so a fixed number of samples becomes increasingly sparse and scattered. For example, covering just 10 distinct values for each of 10 features already requires ten billion combinations, far more than most datasets contain. Consequently, it becomes harder to estimate meaningful relationships and patterns in the data.
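One way to see this sparsity effect is to look at how distances behave as the dimension grows. The sketch below assumes NumPy and uniformly distributed points; the function name `relative_contrast` is ours, for illustration. It measures (max - min) / min of the distances from a random query point to a set of random points. As the dimension grows this ratio shrinks, meaning the nearest and farthest points end up at almost the same distance, which undermines any method that relies on "nearby" points.

```python
import numpy as np

def relative_contrast(dim, n_points=500, seed=0):
    """Return (max - min) / min of the Euclidean distances from one random
    query point to n_points uniform random points in the unit hypercube."""
    rng = np.random.default_rng(seed)
    points = rng.random((n_points, dim))
    query = rng.random(dim)
    dists = np.linalg.norm(points - query, axis=1)
    return (dists.max() - dists.min()) / dists.min()

for dim in (2, 10, 100, 1000):
    print(f"dim={dim:5d}  relative contrast={relative_contrast(dim):.3f}")
```

In two dimensions the farthest point is many times farther away than the nearest one; in a thousand dimensions the gap is only a small fraction of the typical distance.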

Another challenge in high-dimensional data is increased computational complexity. As the number of features grows, so do the computational resources required to process and analyze the data; for algorithms that partition the feature space into a grid, for instance, the number of cells grows exponentially with the number of dimensions. Algorithms that are efficient in low-dimensional spaces may become prohibitively expensive and time-consuming in high-dimensional spaces. This can significantly impact the speed and efficiency of data analysis.

Overfitting is another challenge that arises in high-dimensional data. Overfitting occurs when a model becomes too complex and learns noise or irrelevant features in the training data. In high-dimensional spaces, models have a higher risk of overfitting as there are more opportunities for the model to find spurious correlations. This can lead to poor generalization on unseen data and result in inaccurate predictions.

Interpretability is a significant challenge in high-dimensional data. With an increasing number of features, it becomes difficult to understand and interpret the relationships between variables. Visualizing and comprehending high-dimensional data is challenging, if not impossible, for humans. This impedes our ability to gain insights and understand the underlying patterns and structures.

Furthermore, high-dimensional data can suffer from the problem of multicollinearity, where features are highly correlated with each other. Multicollinearity can lead to unstable model estimates and affect the interpretability of the results. It can also make it challenging to identify the specific contributions of individual features in predicting the target variable.

Dealing with missing data is also a significant challenge in high-dimensional datasets. With many features, a larger fraction of samples is likely to have at least one missing value, which makes it harder to impute or handle missing values effectively. Moreover, missing data can introduce bias and affect the validity of the analysis.

Addressing these challenges requires careful consideration and the application of appropriate dimensionality reduction techniques. Dimensionality reduction methods help overcome the curse of dimensionality, improve computational efficiency, reduce the risk of overfitting, and enhance interpretability; missing values, however, usually have to be imputed or otherwise handled before most of these methods can be applied. It is crucial to select the most suitable dimensionality reduction technique based on the characteristics of the data and the specific analysis objectives.

Techniques for Dimensionality Reduction

There are several techniques available for dimensionality reduction that help transform high-dimensional data into a lower-dimensional space. These techniques can be broadly categorized into linear and nonlinear methods, each with its own strengths and assumptions.

Principal Component Analysis (PCA): PCA is a widely used linear method for dimensionality reduction. It identifies the directions (principal components) in which the data varies the most and projects the data onto these components. The resulting projections capture most of the variability in the data while reducing the dimensionality. PCA assumes that the underlying structure of the data is linear.

Linear Discriminant Analysis (LDA): LDA is also a linear method, but it incorporates information about the class labels of the data points. It aims to find a projection that maximizes the separation between different classes while minimizing the within-class scatter. LDA is commonly used in classification tasks to preserve class separability and reduce dimensionality.

t-SNE (t-Distributed Stochastic Neighbor Embedding): t-SNE is a nonlinear method that is particularly effective in preserving the local neighborhood relationships in the data. It constructs a lower-dimensional representation by modeling the similarity between data points in the high-dimensional space and the low-dimensional space. t-SNE is commonly used for visualizing high-dimensional data and exploring clusters or patterns.

Autoencoders: Autoencoders are a type of neural network that learns to compress and reconstruct the input data. They consist of an encoder network that maps the input to a lower-dimensional representation and a decoder network that reconstructs the original data from the compressed representation. Autoencoders are highly effective in capturing complex patterns and non-linear relationships in the data.

Isomap: Isomap is a nonlinear dimensionality reduction technique that aims to preserve the geodesic distances between data points, that is, distances measured along the underlying manifold rather than straight through the ambient space. It approximates these distances with shortest paths through a neighborhood graph and constructs a lower-dimensional representation that preserves them as closely as possible. Isomap is often used in applications where the data exhibits nonlinear structures.

Feature selection: Feature selection methods aim to identify a subset of the original features that are most relevant for the analysis or predictive task. Various techniques, such as filter, wrapper, and embedded methods, can be used to evaluate the importance of features and select the most informative ones. Feature selection can be particularly useful when interpretability is important.

It is important to note that the choice of dimensionality reduction technique depends on the characteristics of the data, the specific analysis goals, and the underlying assumptions of the techniques. Experimentation and evaluation of different methods are often necessary to determine the most suitable approach for a given dataset.

Principal Component Analysis (PCA)

Principal Component Analysis (PCA) is a popular linear dimensionality reduction technique that allows us to transform a high-dimensional dataset into a lower-dimensional space while preserving the most important patterns and structures within the data. PCA achieves this by identifying the directions, known as principal components, along which the data exhibits the maximum variability. These principal components form a new orthogonal basis in the transformed feature space.

The first principal component is the direction in which the data varies the most. It also defines the line through the mean of the data that best fits the data, minimizing the sum of squared perpendicular distances from each data point to the line. Subsequent principal components capture the remaining variability in decreasing order of importance, with the constraint that each is orthogonal to the previous components; as a result, the projections of the data onto different components are uncorrelated.

PCA has several applications, including dimensionality reduction, data visualization, noise reduction, and feature extraction. By projecting the data onto the lower-dimensional space spanned by the principal components, PCA can help us visualize high-dimensional data in two or three dimensions, enabling easier interpretation and exploration. It can also provide insights into the most significant features driving the variability of the data.

PCA assumes that the underlying structure of the data is linear. Thus, it may not be suitable for datasets with non-linear relationships. Additionally, PCA does not consider any class information or labels during the transformation process, making it an unsupervised technique. However, PCA can still be beneficial in supervised learning tasks by reducing the dimensionality and removing noise from the data, thereby improving the efficiency and performance of subsequent machine learning algorithms.

The steps involved in PCA are as follows:

- Standardize the original dataset to ensure that all features have mean zero and unit variance.

- Compute the covariance matrix of the standardized data.

- Calculate the eigenvectors and eigenvalues of the covariance matrix.

- Sort the eigenvalues in descending order and select the top k eigenvectors corresponding to the largest eigenvalues.

- Construct the projection matrix using the selected eigenvectors.

- Transform the standardized data into the lower-dimensional space by multiplying it with the projection matrix.
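The six steps above translate almost line for line into NumPy. The following is a minimal sketch, assuming NumPy is available and that no feature is constant (otherwise the standardization divides by zero); the function name `pca` and the synthetic data are ours, for illustration. It is not a replacement for a tested library implementation.

```python
import numpy as np

def pca(X, k):
    """Project X (n_samples x n_features) onto its top-k principal components.

    Returns the projected data and the corresponding eigenvalues
    (the variance captured by each component).
    """
    # 1. Standardize each feature to mean 0 and variance 1 (assumes no constant features).
    X_std = (X - X.mean(axis=0)) / X.std(axis=0)
    # 2. Covariance matrix of the standardized data (n_features x n_features).
    cov = np.cov(X_std, rowvar=False)
    # 3. Eigendecomposition; eigh is meant for symmetric matrices and returns ascending eigenvalues.
    eigvals, eigvecs = np.linalg.eigh(cov)
    # 4. Sort in descending order and keep the indices of the k largest eigenvalues.
    order = np.argsort(eigvals)[::-1][:k]
    # 5. Projection matrix: one selected eigenvector per column.
    W = eigvecs[:, order]
    # 6. Project the standardized data.
    return X_std @ W, eigvals[order]

# Usage: 6 observed features generated from 2 hidden factors plus a little noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(300, 2))
X = latent @ rng.normal(size=(2, 6)) + 0.1 * rng.normal(size=(300, 6))
Z, top_eigvals = pca(X, k=2)
print(Z.shape)  # (300, 2)
```

Because the eigenvectors are orthonormal, the projected columns are uncorrelated and the variance of each equals its eigenvalue, which is a quick sanity check on any PCA implementation.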

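It is important to note that PCA is sensitive to the scale of the original features: a feature measured in large units will dominate the leading components simply because its variance is numerically larger. Therefore, when features are measured on different scales, the data should be standardized before performing PCA so that every feature contributes on an equal footing.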

Overall, PCA is a powerful technique for dimensionality reduction and data analysis. It allows us to capture the most relevant patterns and structures in high-dimensional data and provides a compact representation that facilitates visualization and understanding. Its simplicity and effectiveness make it a widely used tool in various fields, including image processing, signal processing, and data mining.

Linear Discriminant Analysis (LDA)

Linear Discriminant Analysis (LDA) is a dimensionality reduction technique that aims to find a linear projection of the data that maximizes the separation between different classes while minimizing the within-class scatter. LDA is particularly useful in classification tasks and can effectively reduce the dimensionality of the data while preserving the class discriminability.

The primary objective of LDA is to identify a set of linear combinations of the input features, known as discriminant functions or linear discriminants. Unlike principal components, these directions are generally not orthogonal to one another. They are chosen to maximize the ratio of between-class variance to within-class variance. In other words, LDA seeks a projection to a lower-dimensional space that maximizes the separability between classes while minimizing the variability within each class.
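Written out for a single projection direction w, this ratio is usually called Fisher's criterion. In the notation below (ours, for illustration) there are C classes, class c has N_c samples and mean μ_c, and μ is the overall mean:

```latex
J(\mathbf{w}) = \frac{\mathbf{w}^\top S_B \,\mathbf{w}}{\mathbf{w}^\top S_W \,\mathbf{w}},
\qquad
S_B = \sum_{c=1}^{C} N_c \,(\boldsymbol{\mu}_c - \boldsymbol{\mu})(\boldsymbol{\mu}_c - \boldsymbol{\mu})^\top,
\qquad
S_W = \sum_{c=1}^{C} \sum_{\mathbf{x} \in \text{class } c} (\mathbf{x} - \boldsymbol{\mu}_c)(\mathbf{x} - \boldsymbol{\mu}_c)^\top
```

Setting the gradient of J to zero gives the generalized eigenvalue problem S_B w = λ S_W w; when S_W is invertible this is the ordinary eigenvalue problem for S_W^{-1} S_B, and the value of J at each eigenvector equals its eigenvalue λ.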

Unlike Principal Component Analysis (PCA), which is an unsupervised technique, LDA is a supervised learning technique that takes into account class labels or categorical information during the dimensionality reduction process. This allows LDA to exploit the class separability information in the data. By incorporating class labels, LDA can effectively model and preserve the discriminative information, leading to improved classification performance.

The steps involved in LDA are as follows:

- Compute the mean vectors for each class and the overall mean vector of the data.

- Calculate the between-class scatter matrix: for each class, take the outer product of the difference between the class mean and the overall mean with itself, weight it by the number of samples in that class, and sum over all classes.

- Compute the within-class scatter matrix by calculating the scatter matrix of each class around its own mean and summing them up.

- Find the eigenvalues and eigenvectors of the matrix obtained by taking the inverse of the within-class scatter matrix and multiplying it with the between-class scatter matrix.

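- Select the top k eigenvectors corresponding to the largest eigenvalues as the linear discriminants. Because the between-class scatter matrix has rank at most C - 1 for C classes, at most C - 1 discriminants carry useful information.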

- Project the original data onto the subspace spanned by the selected linear discriminants.
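The LDA steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not a library-grade implementation: it assumes the within-class scatter matrix is invertible (more samples than features, no perfectly collinear features), and the function name `lda` and the synthetic three-class data are ours.

```python
import numpy as np

def lda(X, y, k):
    """Project X (n_samples x n_features) onto its top-k linear discriminants."""
    overall_mean = X.mean(axis=0)
    n_features = X.shape[1]
    S_W = np.zeros((n_features, n_features))  # within-class scatter
    S_B = np.zeros((n_features, n_features))  # between-class scatter
    for c in np.unique(y):
        X_c = X[y == c]
        mean_c = X_c.mean(axis=0)
        centered = X_c - mean_c
        S_W += centered.T @ centered                  # scatter of class c around its own mean
        diff = (mean_c - overall_mean).reshape(-1, 1)
        S_B += len(X_c) * (diff @ diff.T)             # weighted by the class size N_c
    # Eigen-decompose S_W^{-1} S_B; solve() avoids forming the inverse explicitly.
    # The product is not symmetric, so use eig. Its eigenvalues are real in theory,
    # so only the real parts are kept (round-off can add tiny imaginary parts).
    eigvals, eigvecs = np.linalg.eig(np.linalg.solve(S_W, S_B))
    order = np.argsort(eigvals.real)[::-1][:k]
    W = eigvecs[:, order].real
    return X @ W

# Usage: three Gaussian classes in 4 dimensions, reduced to C - 1 = 2 discriminants.
rng = np.random.default_rng(0)
means = np.array([[0, 0, 0, 0], [4, 0, 0, 0], [0, 4, 0, 0]], dtype=float)
X = np.vstack([rng.normal(m, 1.0, size=(100, 4)) for m in means])
y = np.repeat([0, 1, 2], 100)
Z = lda(X, y, k=2)
print(Z.shape)  # (300, 2)
```

If S_W is singular, for example when there are more features than samples, a common workaround is to use a pseudo-inverse or to add a small multiple of the identity to S_W before solving.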

LDA assumes that the classes have approximately Gaussian distributions and that the covariance matrices of the classes are equal. If these assumptions are violated, LDA may not give optimal results. Therefore, it is important to assess the data’s appropriateness for LDA before applying the technique.

LDA has various applications, including face recognition, image classification, and bioinformatics. It is commonly used to reduce the dimensionality of the data while preserving the discriminative information necessary for classification. By selecting the most informative linear discriminants, LDA can effectively represent the data in a lower-dimensional space, improving computational efficiency and classification accuracy.

Overall, Linear Discriminant Analysis is a powerful technique for dimensionality reduction and classification. By considering class information, it can effectively extract the most discriminative features and provide a compact representation of the data. While it has underlying assumptions, LDA is widely used in machine learning and pattern recognition tasks due to its ability to enhance class separability and improve classification performance.

t-SNE (t-Distributed Stochastic Neighbor Embedding)

t-SNE (t-Distributed Stochastic Neighbor Embedding) is a dimensionality reduction technique widely used for visualizing and exploring high-dimensional data. Unlike linear methods such as Principal Component Analysis (PCA), t-SNE is a nonlinear technique that aims to preserve the local structure and relationships between data points in the lower-dimensional space.

The primary objective of t-SNE is to represent faithfully the similarities and dissimilarities between data points, based on their proximity in the high-dimensional space. It does this by constructing a probability distribution over pairs of points in the original space, in which nearby points receive high probability. It then defines a similar distribution over pairs of points in the lower-dimensional space, using a heavy-tailed Student's t-distribution (which gives the method its name). Finally, it minimizes the Kullback-Leibler divergence between the two distributions with gradient descent from a random initialization, which yields a good, though not necessarily globally optimal, embedding.
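For reference, the standard formulation of these two distributions is shown below. Here x_i are the n original points, y_i are their low-dimensional counterparts, and σ_i is a per-point Gaussian bandwidth chosen so that each point's conditional distribution has the user-specified perplexity:

```latex
p_{j \mid i} = \frac{\exp\left(-\lVert x_i - x_j \rVert^2 / 2\sigma_i^2\right)}
                    {\sum_{k \neq i} \exp\left(-\lVert x_i - x_k \rVert^2 / 2\sigma_i^2\right)},
\qquad
p_{ij} = \frac{p_{j \mid i} + p_{i \mid j}}{2n}

q_{ij} = \frac{\left(1 + \lVert y_i - y_j \rVert^2\right)^{-1}}
              {\sum_{k \neq l} \left(1 + \lVert y_k - y_l \rVert^2\right)^{-1}},
\qquad
\mathrm{KL}(P \,\Vert\, Q) = \sum_{i \neq j} p_{ij} \log \frac{p_{ij}}{q_{ij}}
```

The heavy tails of the Student's t kernel in q_ij let moderately dissimilar points sit farther apart in the embedding, which counters the "crowding" that occurs when many neighbors must be fitted into only two or three dimensions.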

t-SNE has gained popularity for its ability to preserve the local structure of the data, allowing for effective visualization and clustering analysis. It is particularly useful for revealing clusters, outliers, and underlying patterns in the data. By mapping high-dimensional data to a two- or three-dimensional space, t-SNE provides a visual representation that helps users gain insights and interpret the complex relationships within the data.

One important factor to consider when using t-SNE is the perplexity parameter. Perplexity is a hyperparameter that determines the balance between focusing on local versus global aspects of the data. A higher perplexity value considers a wider range of neighbors, potentially resulting in more global structure being preserved in the lower-dimensional space. On the other hand, a lower perplexity focuses more on local neighborhood relationships. Selecting an appropriate perplexity value is crucial for obtaining meaningful visualizations.
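To make the role of perplexity concrete, the sketch below implements only the calibration step: for each point it binary-searches the Gaussian precision so that the perplexity 2^H of that point's neighbor distribution matches the requested value. It assumes NumPy and exact pairwise distances; the function name `conditional_probabilities` and the tolerance values are ours. The later stages of t-SNE (symmetrizing P, defining Q, and gradient descent on the KL divergence) are deliberately omitted.

```python
import numpy as np

def conditional_probabilities(X, perplexity=30.0, tol=1e-5, max_iter=100):
    """Return the t-SNE conditional similarities p_{j|i} as an n x n matrix.

    For each point i, the precision beta_i = 1 / (2 * sigma_i**2) is found by
    binary search so that the perplexity 2**H(P_i) of row i matches the
    requested value. Each row sums to 1 and the diagonal is 0.
    """
    n = X.shape[0]
    sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=2)
    target_entropy = np.log2(perplexity)  # perplexity = 2 ** entropy (in bits)
    P = np.zeros((n, n))
    for i in range(n):
        d = np.delete(sq_dists[i], i)  # squared distances to all other points
        beta, beta_lo, beta_hi = 1.0, 0.0, np.inf
        for _ in range(max_iter):
            p = np.exp(-beta * (d - d.min()))  # shifting by d.min() avoids underflow
            p /= p.sum()
            entropy = -np.sum(p * np.log2(np.maximum(p, 1e-300)))
            if abs(entropy - target_entropy) < tol:
                break
            if entropy > target_entropy:
                # Too flat (too many effective neighbors): sharpen the Gaussian.
                beta_lo = beta
                beta = beta * 2.0 if np.isinf(beta_hi) else (beta + beta_hi) / 2.0
            else:
                # Too peaked (too few effective neighbors): widen the Gaussian.
                beta_hi = beta
                beta = (beta + beta_lo) / 2.0
        P[i, np.arange(n) != i] = p
    return P

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
P = conditional_probabilities(X, perplexity=10.0)
```

Perplexity can therefore be read as a smooth "effective number of neighbors": raising it spreads each row's probability mass over more points, which is why larger values emphasize broader structure.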

It is important to note that t-SNE is computationally expensive and may not scale well to very large datasets. Additionally, the visualization produced by t-SNE can vary with different random seeds, so multiple runs may be required to check stability and consistency. Furthermore, t-SNE does not preserve global distances: the sizes of clusters and the distances between them in the embedding may not reflect the original data accurately.

t-SNE has found applications in various fields, including image processing, natural language processing, and bioinformatics. It has been utilized for tasks such as visualizing high-dimensional image and text data, exploring gene expression patterns, and analyzing document similarity.

Autoencoders

Autoencoders are a type of neural network architecture used for unsupervised learning and dimensionality reduction. They are particularly effective in capturing complex patterns and non-linear relationships in the data. Autoencoders consist of an encoder network and a decoder network, which work together to learn a compressed representation of the input data.

The encoder network takes the input data and maps it to a lower-dimensional representation, often referred to as the encoding or latent space. This compressed representation captures the essential features and information of the input data. The decoder network then reconstructs the original input from the encoded representation. The goal of the autoencoder is to minimize the difference between the input and the reconstructed output during training.

By learning to compress and reconstruct the data, autoencoders implicitly capture the most salient features and patterns. The dimensionality reduction capability of autoencoders lies in the bottleneck layer, which has a lower dimensionality than the input and the output layers. The reduced dimensionality of the bottleneck layer allows for a compact representation of the data.
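The encoder, bottleneck, and decoder described above can be illustrated without a deep learning framework. The sketch below is a deliberately tiny autoencoder written in NumPy with hand-derived gradients: a single tanh bottleneck layer, a linear decoder, mean squared reconstruction error, and plain full-batch gradient descent. The architecture, learning rate, epoch count, and the function name `train_autoencoder` are all our assumptions for illustration; practical autoencoders are usually built with a framework such as PyTorch or TensorFlow.

```python
import numpy as np

def train_autoencoder(X, code_dim=2, lr=0.05, epochs=4000, seed=0):
    """Train a one-hidden-layer autoencoder with full-batch gradient descent.

    Encoder: code  = tanh(X @ W1 + b1)   (the bottleneck, code_dim units)
    Decoder: X_hat = code @ W2 + b2      (linear reconstruction)
    Loss:    mean squared reconstruction error
    """
    rng = np.random.default_rng(seed)
    n_features = X.shape[1]
    W1 = rng.normal(0.0, 0.1, size=(n_features, code_dim))
    b1 = np.zeros(code_dim)
    W2 = rng.normal(0.0, 0.1, size=(code_dim, n_features))
    b2 = np.zeros(n_features)
    losses = []
    for _ in range(epochs):
        # Forward pass.
        code = np.tanh(X @ W1 + b1)
        X_hat = code @ W2 + b2
        err = X_hat - X
        losses.append(np.mean(err ** 2))
        # Backward pass: gradients of the mean squared error.
        d_Xhat = 2.0 * err / err.size
        d_W2 = code.T @ d_Xhat
        d_b2 = d_Xhat.sum(axis=0)
        d_pre = (d_Xhat @ W2.T) * (1.0 - code ** 2)  # tanh'(z) = 1 - tanh(z)**2
        d_W1 = X.T @ d_pre
        d_b1 = d_pre.sum(axis=0)
        # Gradient-descent update.
        W1 -= lr * d_W1
        b1 -= lr * d_b1
        W2 -= lr * d_W2
        b2 -= lr * d_b2

    def encode(data):
        """Map data to the learned bottleneck representation."""
        return np.tanh(data @ W1 + b1)

    return encode, losses

# Usage: 5 standardized features that really depend on only 2 hidden factors.
rng = np.random.default_rng(1)
latent = rng.normal(size=(200, 2))
X = latent @ rng.normal(size=(2, 5)) + 0.05 * rng.normal(size=(200, 5))
X = (X - X.mean(axis=0)) / X.std(axis=0)
encode, losses = train_autoencoder(X)
codes = encode(X)
print(codes.shape, f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

The two-unit bottleneck forces the network to summarize five features with two numbers; the falling reconstruction loss shows it has found a compact representation of the data.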

One of the key advantages of autoencoders is their ability to capture complex and non-linear relationships in the data. This is achieved through the use of hidden layers with non-linear activation functions, such as sigmoid or ReLU, which enable the autoencoder to learn intricate patterns and representations.

Autoencoders can be further enhanced by adding regularization techniques, such as adding noise to the input, to make them more robust and capable of handling noisy or incomplete data. Regularized autoencoders, like denoising autoencoders or sparse autoencoders, can help improve the generalization performance and remove unwanted noise or irrelevant features from the data.

While autoencoders are mostly used for unsupervised learning and dimensionality reduction, they can also be adapted for semi-supervised learning and feature extraction in supervised learning tasks. By utilizing the compressed latent space learned by the autoencoder, it is possible to extract meaningful features that can aid in improving the performance of subsequent supervised learning models.

Autoencoders have been successfully applied to various domains, including image recognition, natural language processing, and anomaly detection. They have been employed for tasks such as image denoising, document representation learning, and fraud detection.

It is important to note that training autoencoders can be computationally expensive, especially for large datasets and complex architectures. Furthermore, the performance of autoencoders heavily relies on the selection of hyperparameters, such as the number of hidden layers and the dimensionality of the bottleneck layer. Careful tuning and experimentation are often required to achieve optimal results.

Isomap

Isomap is a non-linear dimensionality reduction technique that aims to preserve the geometric structure of the data, measured along its underlying manifold, in a lower-dimensional space. It is particularly effective for capturing non-linear relationships and uncovering the underlying manifold or structure in the data.

The key idea behind Isomap is to approximate the geodesic distances between data points, that is, the shortest-path distances along the underlying manifold. By approximating these intrinsic distances, Isomap can represent the data in a lower-dimensional space while preserving both local neighborhood relationships and the global shape of the manifold.

The main steps involved in Isomap are as follows:

- Construct a k-nearest neighbor graph by connecting each data point to its k nearest neighbors based on a chosen distance metric.

- Compute the pairwise geodesic distances between data points using graph-based techniques, such as Dijkstra’s algorithm. These distances represent the shortest paths along the edges of the k-nearest neighbor graph.

- Perform classical multidimensional scaling (MDS) on the geodesic distance matrix to obtain the lower-dimensional representation. MDS aims to find a configuration of points in the lower-dimensional space that preserves the pairwise distances as closely as possible.
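The three steps above fit in a short NumPy sketch. One substitution to note: for brevity it computes all-pairs shortest paths with the Floyd-Warshall algorithm instead of running Dijkstra's algorithm from every point; both give the same geodesic distances, but Dijkstra scales better on large sparse graphs. The function name `isomap` and the arc example are ours, and the dense distance matrices limit this sketch to small datasets.

```python
import numpy as np

def isomap(X, n_neighbors=5, n_components=2):
    """Minimal Isomap: k-NN graph -> geodesic distances -> classical MDS."""
    n = X.shape[0]
    dists = np.sqrt(np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=2))
    # 1. Symmetric k-nearest-neighbor graph; absent edges have infinite length.
    graph = np.full((n, n), np.inf)
    np.fill_diagonal(graph, 0.0)
    nearest = np.argsort(dists, axis=1)[:, 1:n_neighbors + 1]  # column 0 is the point itself
    for i in range(n):
        graph[i, nearest[i]] = dists[i, nearest[i]]
        graph[nearest[i], i] = dists[i, nearest[i]]
    # 2. Geodesic distances as shortest paths through the graph (Floyd-Warshall).
    geo = graph.copy()
    for k in range(n):
        geo = np.minimum(geo, geo[:, [k]] + geo[[k], :])
    if np.isinf(geo).any():
        raise ValueError("neighbor graph is disconnected; try a larger n_neighbors")
    # 3. Classical MDS: double-center the squared distances, keep the top eigenvectors.
    J = np.eye(n) - np.ones((n, n)) / n
    B = -0.5 * J @ (geo ** 2) @ J
    eigvals, eigvecs = np.linalg.eigh(B)
    order = np.argsort(eigvals)[::-1][:n_components]
    return eigvecs[:, order] * np.sqrt(np.maximum(eigvals[order], 0.0))

# Usage: points on a 270-degree arc. Its two ends are close in straight-line
# distance but far apart along the curve; Isomap should "unroll" the arc.
t = np.linspace(0.0, 1.5 * np.pi, 60)
X = np.column_stack([np.cos(t), np.sin(t)])
Y = isomap(X, n_neighbors=4, n_components=1)
print(abs(np.corrcoef(Y[:, 0], t)[0, 1]))
```

The one-dimensional embedding is almost perfectly correlated with the position t along the arc, which is what preserving geodesic rather than straight-line distances is meant to achieve.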

Isomap has several advantages over other dimensionality reduction techniques. By accounting for the underlying manifold structure of the data, it can capture non-linear relationships that linear methods such as PCA miss and reveal meaningful patterns. Because it preserves geodesic distances between all pairs of points, it also tends to retain more of the global structure of the data than purely local methods such as t-SNE.

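However, there are a few limitations to consider when using Isomap. Firstly, Isomap assumes that the data lie on a single connected manifold. If the data contain multiple disconnected manifolds, the neighbor graph becomes disconnected, geodesic distances between the components are undefined, and Isomap might fail or produce misleading results. Additionally, Isomap can be sensitive to the choice of k, the number of nearest neighbors used in the construction of the graph: too small a k can disconnect the graph, while too large a k, or noisy data, can create "short-circuit" edges that cut across the manifold and distort the geodesic distances. Choosing an appropriate value for k is crucial to capturing the local neighborhood relationships accurately.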

Isomap has found applications in various fields, including image processing, motion capture, and bioinformatics. It has been used for tasks such as facial expression recognition, gait analysis, and analysis of molecular dynamics.

Overall, Isomap is a valuable tool for non-linear dimensionality reduction. By preserving the intrinsic distances and local neighborhood relationships, it provides a lower-dimensional representation that aids in visualization, pattern discovery, and analysis of complex datasets.

Feature Selection vs. Feature Extraction

When dealing with high-dimensional data, it is often necessary to reduce the number of features for efficient and effective analysis. Two common approaches for achieving this are feature selection and feature extraction. While both methods aim to reduce the dimensionality of the data, they differ in their approaches and objectives.

Feature Selection: Feature selection involves identifying and selecting a subset of the most informative features from the original feature set. The selected features are considered the most relevant and contribute significantly to the analysis or prediction task at hand. Feature selection can help remove irrelevant or redundant features, enhance model interpretability, and reduce computational complexity.

There are different strategies for performing feature selection:

- Filter methods: These methods use statistical measures or scores to rank individual features based on their relevance to the target variable. Features are selected based on predetermined criteria, such as the correlation coefficient, the chi-square test, or mutual information.

- Wrapper methods: These methods involve training a model with different subsets of features and evaluating the performance of the model based on selected criteria, such as accuracy or cross-validation error. Features are selected based on the model’s performance.

- Embedded methods: These methods incorporate feature selection as part of the learning algorithm. For example, some machine learning algorithms, like LASSO or decision trees, have built-in mechanisms for feature selection that identify the most important features during the training process.
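As a concrete example of the filter strategy, the sketch below ranks features by the absolute Pearson correlation between each feature and the target and keeps the top k. It assumes NumPy and a numeric target; the function name `select_by_correlation` and the synthetic data (where only features 0 and 2 matter) are ours, for illustration.

```python
import numpy as np

def select_by_correlation(X, y, k):
    """Filter-method sketch: return the indices of the k features with the
    largest absolute Pearson correlation with y, highest first."""
    X_c = X - X.mean(axis=0)
    y_c = y - y.mean()
    corr = (X_c.T @ y_c) / (np.linalg.norm(X_c, axis=0) * np.linalg.norm(y_c))
    return np.argsort(np.abs(corr))[::-1][:k]

# Usage: six features, but the target depends only on features 0 and 2.
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 6))
y = 3.0 * X[:, 0] - 2.0 * X[:, 2] + 0.1 * rng.normal(size=500)
print(select_by_correlation(X, y, k=2))  # [0 2]
```

Because each feature is scored on its own, a filter like this is fast and model-agnostic, but it only detects linear, one-feature-at-a-time relationships; wrapper and embedded methods can account for interactions between features at a higher computational cost.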

Feature Extraction: Feature extraction aims to transform the original feature space into a new, lower-dimensional space by creating new features that are combinations or transformations of the original features. The information from the original features is condensed into a smaller set of more informative features.

Some popular feature extraction techniques include:

- Principal Component Analysis (PCA): PCA is a technique that linearly transforms the original features into a new set of uncorrelated features known as principal components. The principal components capture the maximum variability in the data. PCA is effective for capturing global patterns but may not preserve class-specific information.

- Linear Discriminant Analysis (LDA): LDA is a technique that aims to find a projection that maximizes the class separability in the transformed feature space. It creates new features that discriminate between different classes while minimizing the within-class scatter.

- Autoencoders: Autoencoders, mentioned earlier, are neural networks that learn to compress and reconstruct the input data. The bottleneck layer of the autoencoder represents the extracted features or the compressed representation of the input data.

Feature extraction methods fit a transformation to the data, either in closed form (as with PCA and LDA) or by training a model (as with autoencoders). The extracted features can then be used for subsequent analysis or modeling.

It is important to note that both feature selection and feature extraction have their strengths and weaknesses. Feature selection is useful when interpretability is important or when the datasets are relatively small. On the other hand, feature extraction is useful when the inherent structure or patterns in the data need to be captured or when a compact representation is desired.

The choice between feature selection and feature extraction depends on the specific characteristics of the data, the analysis objectives, the interpretability requirements, and the available computational resources.

Evaluation of Dimensionality Reduction Methods

Evaluating the performance and effectiveness of dimensionality reduction methods is crucial to determine their suitability for a specific dataset and analysis task. Various evaluation measures and techniques can be employed to assess the quality of the reduced-dimensional representation and understand the impact on downstream tasks.

One common approach to evaluating dimensionality reduction methods is to consider how well they preserve the intrinsic structure and important information in the data. If the dimensionality reduction method successfully captures the main patterns and relationships, it will result in a reduced-dimensional representation that retains the essential characteristics of the original data.

Several evaluation measures can be used to quantify the quality of dimensionality reduction:

- Reconstruction error: For methods like PCA and autoencoders, the reconstruction error can be calculated by comparing the original data with the reconstructed data. A lower reconstruction error indicates that the reduced representation retains more of the information in the original data.

- Preservation of distances: The preservation of pairwise distances is another important aspect to evaluate. When reducing dimensionality, it is crucial to maintain the relative distances between data points. Measures like stress or relative error can quantify the level of distortion introduced in the reduced space compared to the original space.

- Preservation of global or local structures: The ability of the dimensionality reduction method to preserve global or local structures can be assessed using clustering or classification evaluation. If the reduced-dimensional representation still allows effective separation of clusters or accurate classification, it indicates successful preservation of structural information.

- Visualization and interpretability: Visual inspection can provide valuable insights into the quality of dimensionality reduction. If the reduced-dimensional representation can be visually interpreted and reveals meaningful patterns, it suggests that the method has captured important information in the data.

- Impact on downstream tasks: Evaluating the performance of downstream tasks, such as classification or regression, with and without dimensionality reduction can provide insights into the impact of the reduction method. If the performance remains stable or improves after dimensionality reduction, it indicates that the method has effectively captured the relevant information while removing noise or irrelevant features.
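
The first two measures in the list above can be computed directly. The sketch below is a minimal illustration in Python with NumPy, assuming PCA implemented via the singular value decomposition and synthetic data lying near a 2-D plane inside 10 dimensions. The helper names (`pca_fit`, `reconstruction_error`, `normalized_stress`) are hypothetical, and the stress used here is one common normalized variant: the square root of the summed squared differences between original and reduced pairwise distances, divided by the summed squared original distances.

```python
import numpy as np

def pca_fit(X, k):
    """Return the column means and the top-k principal axes of X (rows are samples)."""
    mean = X.mean(axis=0)
    # Rows of Vt are the principal axes, ordered by decreasing singular value.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:k]

def reconstruction_error(X, mean, axes):
    """Mean squared error between X and its reconstruction from k PCA coordinates."""
    Z = (X - mean) @ axes.T      # reduced k-dimensional coordinates
    X_hat = Z @ axes + mean      # mapped back to the original space
    return np.mean((X - X_hat) ** 2)

def pairwise_distances(X):
    diff = X[:, None, :] - X[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

def normalized_stress(X_high, X_low):
    """sqrt(sum (d_ij - d'_ij)^2 / sum d_ij^2) over pairs i < j; 0 means no distortion."""
    D, D_low = pairwise_distances(X_high), pairwise_distances(X_low)
    iu = np.triu_indices(len(X_high), k=1)
    return np.sqrt(((D[iu] - D_low[iu]) ** 2).sum() / (D[iu] ** 2).sum())

# Synthetic data: 200 points near a 2-D plane embedded in 10 dimensions, plus small noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2)) @ rng.normal(size=(2, 10)) + 0.05 * rng.normal(size=(200, 10))

results = {}
for k in (1, 2, 5):
    mean, axes = pca_fit(X, k)
    Z = (X - mean) @ axes.T
    results[k] = (reconstruction_error(X, mean, axes), normalized_stress(X, Z))
    print(f"k={k}: reconstruction MSE={results[k][0]:.4f}, stress={results[k][1]:.3f}")
```

Both numbers should drop sharply once k reaches the true dimension of the plane (2 here) and change little afterwards, which is the kind of elbow one looks for when choosing the number of components.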

It is important to note that the evaluation of dimensionality reduction methods is highly dependent on the specific dataset and the analysis task. Careful consideration must be given to the evaluation measures selected and their relevance to the specific objectives and requirements of the analysis.

Furthermore, it is advisable to compare multiple dimensionality reduction methods and evaluate their performance on different evaluation measures. This helps in selecting the most appropriate method for the specific dataset and analysis task, considering factors such as preservation of structure, computational complexity, and interpretability.
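
As a sketch of such a comparison, the code below scores two linear methods on the same synthetic data with two measures: the normalized stress of pairwise distances (global structure) and the average fraction of each point's 10 nearest neighbors that are still among its 10 nearest neighbors after the reduction (local structure). Gaussian random projection is used only as a simple second method to compare against; the data, the neighborhood size, and the helper names are illustrative assumptions.

```python
import numpy as np

def pairwise_distances(X):
    diff = X[:, None, :] - X[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

def normalized_stress(D_high, D_low):
    iu = np.triu_indices(len(D_high), k=1)
    return np.sqrt(((D_high[iu] - D_low[iu]) ** 2).sum() / (D_high[iu] ** 2).sum())

def knn_overlap(D_high, D_low, n_neighbors=10):
    """Mean fraction of each point's nearest neighbors that are kept after reduction."""
    # Column 0 of each argsort is the point itself (distance 0), so it is skipped.
    nn_high = np.argsort(D_high, axis=1)[:, 1:n_neighbors + 1]
    nn_low = np.argsort(D_low, axis=1)[:, 1:n_neighbors + 1]
    return np.mean([len(set(a) & set(b)) / n_neighbors for a, b in zip(nn_high, nn_low)])

def pca_reduce(X, k):
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:k].T

def random_projection(X, k, rng):
    # Entries drawn from N(0, 1/k), so squared distances are preserved in expectation.
    R = rng.normal(size=(X.shape[1], k)) / np.sqrt(k)
    return X @ R

rng = np.random.default_rng(1)
# Synthetic data: 200 points near a 3-D subspace of a 50-dimensional space.
X = rng.normal(size=(200, 3)) @ rng.normal(size=(3, 50)) + 0.05 * rng.normal(size=(200, 50))
D = pairwise_distances(X)

scores = {}
for name, Z in [("PCA", pca_reduce(X, 3)), ("random projection", random_projection(X, 3, rng))]:
    D_low = pairwise_distances(Z)
    scores[name] = (normalized_stress(D, D_low), knn_overlap(D, D_low))
    print(f"{name}: stress={scores[name][0]:.3f}, 10-NN overlap={scores[name][1]:.2f}")
```

On data like this, where almost all variance lies in a few directions, PCA should score better on both measures. Random projection needs no fitting, and its distance distortion shrinks as the target dimension grows, so the ranking can change with the task and the number of dimensions kept.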

Applications of Dimensionality Reduction

Dimensionality reduction techniques have a wide range of applications across various fields, owing to their ability to simplify data representation and improve the efficiency and performance of machine learning models. Let’s explore some key applications of dimensionality reduction:

Data Visualization: One of the primary applications of dimensionality reduction is data visualization. High-dimensional data is difficult to interpret and visualize directly, but once it is projected onto two or three dimensions it can be plotted and explored visually. Techniques like PCA, t-SNE, and Isomap are often used for this projection, enabling visualization and exploration of complex datasets.
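
A minimal sketch of the projection step for visualization, assuming PCA via NumPy's SVD and synthetic data with three clusters in 20 dimensions. The code computes 2-D coordinates that could be passed to any plotting library (plotting itself is omitted), together with the share of total variance the two axes retain, a quick check of how faithful the picture is.

```python
import numpy as np

rng = np.random.default_rng(2)
# Synthetic data: three clusters of 50 points each in 20 dimensions.
centers = rng.normal(scale=5.0, size=(3, 20))
labels = np.repeat(np.arange(3), 50)
X = centers[labels] + rng.normal(size=(150, 20))

Xc = X - X.mean(axis=0)
_, s, Vt = np.linalg.svd(Xc, full_matrices=False)
coords_2d = Xc @ Vt[:2].T                        # x/y positions for a scatter plot
retained = (s[:2] ** 2).sum() / (s ** 2).sum()   # fraction of total variance shown

print(f"the 2-D view retains {retained:.0%} of the variance")
for c in range(3):
    # Cluster centers as they would appear in the plot.
    print(c, coords_2d[labels == c].mean(axis=0).round(2))
```

A low retained share suggests that the plot may hide structure that exists in the full space.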

Machine Learning: Dimensionality reduction plays a vital role in machine learning. High-dimensional datasets can lead to increased computational complexity, overfitting, and reduced model performance. Techniques like PCA, LDA, and autoencoders compress the feature space into fewer derived features, which lowers training cost and can improve model generalization. These techniques perform feature extraction; feature selection methods serve the same purpose by keeping a subset of the original features, which also tends to keep models easier to interpret.
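
The sketch below shows dimensionality reduction as a preprocessing step. A minimal PCA (a hypothetical class that only mimics the common `fit`/`transform` convention, not any library's implementation) is fitted on the training rows alone and then applied unchanged to the test rows, so no information from the test set leaks into the features. The nearest-centroid classifier and the synthetic two-class data, where 100 features carry one informative direction, are illustrative assumptions.

```python
import numpy as np

class PCA:
    """Minimal PCA that learns a mean and k principal axes from the data passed to fit()."""

    def __init__(self, k):
        self.k = k

    def fit(self, X):
        self.mean_ = X.mean(axis=0)
        _, _, Vt = np.linalg.svd(X - self.mean_, full_matrices=False)
        self.components_ = Vt[: self.k]
        return self

    def transform(self, X):
        # Reuses the mean and axes learned in fit(), e.g. from the training set only.
        return (X - self.mean_) @ self.components_.T

def nearest_centroid_fit(Z, y):
    classes = np.unique(y)
    return classes, np.stack([Z[y == c].mean(axis=0) for c in classes])

def nearest_centroid_predict(Z, classes, centroids):
    sq_dist = ((Z[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    return classes[np.argmin(sq_dist, axis=1)]

# Synthetic data: 400 samples, 100 features, classes separated along one hidden direction u.
rng = np.random.default_rng(1)
n, d = 400, 100
y = rng.integers(0, 2, size=n)
u = rng.normal(size=d)
u /= np.linalg.norm(u)
X = rng.normal(size=(n, d)) + np.outer(3.0 * (2 * y - 1), u)

train, test = np.arange(300), np.arange(300, n)
pca = PCA(k=5).fit(X[train])                 # fitted on training rows only
Z_train, Z_test = pca.transform(X[train]), pca.transform(X[test])

classes, centroids = nearest_centroid_fit(Z_train, y[train])
accuracy = np.mean(nearest_centroid_predict(Z_test, classes, centroids) == y[test])
print(f"test accuracy using 5 PCA components instead of {d} features: {accuracy:.2f}")
```

Fitting the reduction on the full dataset before splitting would let the test rows influence the learned axes, which can make the reported accuracy optimistic.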

Image and Face Recognition: In image analysis tasks, dimensionality reduction techniques are used to extract meaningful features for image representation and recognition. Techniques like PCA, LDA, and convolutional autoencoders are employed to reduce the dimensionality of image data, removing noise and irrelevant information while preserving important visual features. This enables efficient image compression, improved recognition accuracy, and faster image processing.
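
Eigenfaces-style compression can be sketched with PCA in NumPy. The 16x16 "images" below are synthetic mixtures of four smooth patterns plus pixel noise, standing in for real face or image data (an assumption). Each image is stored as k=4 coefficients plus one shared mean image and k basis images, and the code reports how many stored values that saves and how much error it costs.

```python
import numpy as np

rng = np.random.default_rng(2)
h, w, n = 16, 16, 300
# Hypothetical image data: each image mixes 4 smooth patterns at random, plus pixel noise.
yy, xx = np.mgrid[0:h, 0:w] / h
patterns = np.stack([
    np.sin(2 * np.pi * xx),
    np.cos(2 * np.pi * yy),
    xx * yy,
    np.exp(-((xx - 0.5) ** 2 + (yy - 0.5) ** 2) * 10),
])
images = rng.normal(size=(n, 4)) @ patterns.reshape(4, -1) + 0.02 * rng.normal(size=(n, h * w))

mean = images.mean(axis=0)
_, s, Vt = np.linalg.svd(images - mean, full_matrices=False)
k = 4
eigenimages = Vt[:k].reshape(k, h, w)   # principal axes viewed as images ("eigenfaces" for faces)
codes = (images - mean) @ Vt[:k].T      # each image is now described by k numbers
recon = codes @ Vt[:k] + mean

stored_before = images.size
stored_after = codes.size + Vt[:k].size + mean.size
rel_error = np.linalg.norm(images - recon) / np.linalg.norm(images - mean)
print(f"stored values: {stored_before} -> {stored_after}, relative error {rel_error:.3f}")
```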

Natural Language Processing (NLP): In NLP, dimensionality reduction methods are used to transform high-dimensional text data, such as sparse term-document matrices, into a lower-dimensional vector space. Latent Semantic Analysis (LSA) and Latent Dirichlet Allocation extract latent topics from text corpora, while word embeddings such as word2vec capture semantic relationships between words in dense, low-dimensional vectors. The reduced-dimensional representations facilitate tasks such as document similarity, topic modeling, sentiment analysis, and document clustering.
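
A toy sketch of Latent Semantic Analysis: build a term-document count matrix, keep the top k=2 singular vectors, and compare documents by cosine similarity in that reduced space. The five short documents are hypothetical and assumed to be already tokenized with stop words removed; a real pipeline would usually apply a weighting such as TF-IDF and use a sparse truncated SVD.

```python
import numpy as np

docs = [
    "cat kitten pet",
    "cat dog pet",
    "dog food",               # shares no word with the first document
    "stocks bonds market",
    "stocks market trading",
]
vocab = sorted({w for doc in docs for w in doc.split()})
index = {w: i for i, w in enumerate(vocab)}

A = np.zeros((len(vocab), len(docs)))   # rows = terms, columns = documents
for j, doc in enumerate(docs):
    for w in doc.split():
        A[index[w], j] += 1

k = 2
U, s, Vt = np.linalg.svd(A, full_matrices=False)
doc_vecs = (s[:k, None] * Vt[:k]).T     # each document as a k-dimensional vector

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

print("raw counts, doc 0 vs doc 2:", cosine(A[:, 0], A[:, 2]))
print("LSA space,  doc 0 vs doc 2:", round(cosine(doc_vecs[0], doc_vecs[2]), 3))
print("LSA space,  doc 0 vs doc 3:", round(cosine(doc_vecs[0], doc_vecs[3]), 3))
```

Documents 0 and 2 share no words, so their raw cosine similarity is 0, yet they point in the same direction of the reduced space because both share words with document 1; the finance documents end up in an orthogonal direction. The toy corpus makes the separation perfectly clean, which real corpora will not be.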

Bioinformatics: In the field of bioinformatics, dimensionality reduction techniques are employed to analyze large-scale biological datasets and extract meaningful biological features. They aid in identifying gene expression patterns, clustering similar samples, classifying biological samples, and understanding the relationships between genes or proteins. Techniques like PCA, LDA, and non-negative matrix factorization (NMF) are widely used in gene expression analysis, protein structure prediction, and functional genomics.
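
Non-negative matrix factorization can be sketched with the multiplicative update rules of Lee and Seung for the squared Frobenius error, implemented here in NumPy. The "expression" matrix below (60 samples by 40 genes, built from 3 hypothetical non-negative gene programs) is synthetic; the iteration count, the small `eps` guard against division by zero, and the random initialization are assumptions rather than tuned choices.

```python
import numpy as np

def nmf(V, k, n_iter=500, eps=1e-10, seed=0):
    """Approximate a non-negative matrix V (n x m) as W (n x k) @ H (k x m), with W, H >= 0,
    using Lee-Seung multiplicative updates for the squared Frobenius error."""
    rng = np.random.default_rng(seed)
    n, m = V.shape
    W = rng.random((n, k)) + eps
    H = rng.random((k, m)) + eps
    for _ in range(n_iter):
        # Multiplying by non-negative ratios keeps every entry non-negative.
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H

def relative_error(V, W, H):
    return np.linalg.norm(V - W @ H) / np.linalg.norm(V)

# Synthetic data: 60 samples x 40 genes generated from 3 non-negative "programs" plus noise.
rng = np.random.default_rng(4)
V = rng.random((60, 3)) @ rng.random((3, 40)) + 0.01 * rng.random((60, 40))

W, H = nmf(V, k=3)
print(f"relative error after 500 iterations: {relative_error(V, W, H):.3f}")
# Each row of H is a gene program; W[i] gives how strongly sample i expresses each program.
print("top genes of program 0:", np.argsort(H[0])[::-1][:5])
```

Because every factor stays non-negative, each sample is described as an additive mix of programs, which is the property that makes NMF components comparatively easy to read in expression data.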

Anomaly Detection: Dimensionality reduction techniques play an important role in anomaly detection by helping to identify deviations or outliers in high-dimensional data. A low-dimensional model fitted to typical data captures the expected patterns, so points that deviate from those patterns are reconstructed poorly and stand out. PCA and autoencoders are commonly used this way, often alongside dedicated detectors such as Isolation Forest, in domains including fraud detection, cybersecurity, and network analysis.
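
A minimal sketch of the reconstruction-error approach, assuming PCA in NumPy and synthetic data: typical points lie near a 3-D subspace of a 20-dimensional space, and the anomalies are drawn without that structure. The anomaly score is the squared distance between a point and its PCA reconstruction, and the threshold (the 99th percentile of training scores) is an illustrative choice, not a recommended setting.

```python
import numpy as np

rng = np.random.default_rng(3)
d, k = 20, 3
basis = np.linalg.qr(rng.normal(size=(d, k)))[0]   # orthonormal d x k basis of typical data

def sample_typical(n):
    # Points spread along the 3-D subspace, plus small isotropic noise.
    return 3 * rng.normal(size=(n, k)) @ basis.T + 0.1 * rng.normal(size=(n, d))

X_train = sample_typical(500)
mean = X_train.mean(axis=0)
_, _, Vt = np.linalg.svd(X_train - mean, full_matrices=False)
P = Vt[:k]                                         # learned principal axes

def anomaly_score(X):
    Xc = X - mean
    return np.sum((Xc - Xc @ P.T @ P) ** 2, axis=1)   # squared reconstruction error

threshold = np.quantile(anomaly_score(X_train), 0.99)
X_new_typical = sample_typical(100)
X_anomalies = 3 * rng.normal(size=(5, d))          # ignore the subspace structure
flags_typical = anomaly_score(X_new_typical) > threshold
flags_anom = anomaly_score(X_anomalies) > threshold
print("flagged typical points:", flags_typical.sum(), "of", len(flags_typical))
print("flagged anomalies:", flags_anom.sum(), "of", len(flags_anom))
```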

These are just a few examples of the numerous applications of dimensionality reduction across different domains. The choice of dimensionality reduction technique depends on the specific requirements of the application, the characteristics of the data, and the desired output. Dimensionality reduction continues to be a fundamental tool in the analysis of high-dimensional data, enabling efficient processing, visualization, and extraction of valuable insights.