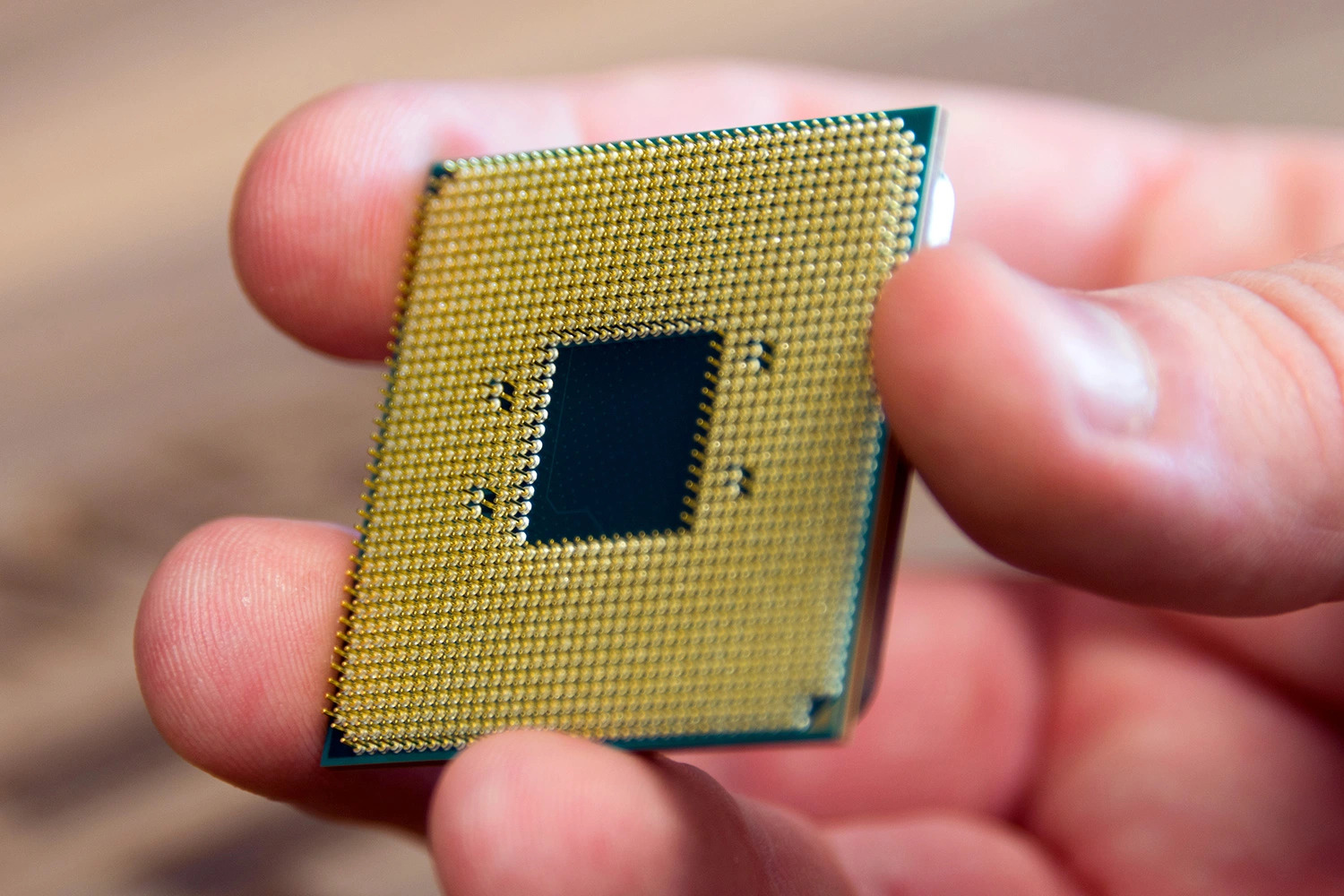

What Is a CPU? (Central Processing Unit)

A CPU, or Central Processing Unit, is a crucial component of a computer system. Often referred to as the “brain” of the computer, the CPU is responsible for executing instructions and performing calculations, which is why it is often regarded as the most important part of a computing device.

The primary function of a CPU is to process and carry out instructions given by the computer’s operating system and applications. It performs tasks such as fetching, decoding, and executing instructions, as well as managing the flow of data within the computer system.

The CPU is made up of several key components that work together to perform these tasks. One of the most important components is the control unit, which coordinates the activities of all other components, ensuring that instructions are executed in the correct order.

Another critical component is the Arithmetic Logic Unit (ALU), which performs mathematical calculations and logical operations, such as addition, subtraction, multiplication, and comparison. The ALU is responsible for carrying out the fundamental operations that drive the processing capabilities of the CPU.
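The ALU’s job can be sketched in a few lines. The Python below is a minimal model that assumes an 8-bit datapath and three status flags (zero, negative, carry). The operation names and the `alu` and `compare` functions are hypothetical and do not correspond to any specific processor. The sketch also shows a common design idea: a comparison is a subtraction whose result is discarded while its flags are kept.

```python
from typing import NamedTuple

class AluResult(NamedTuple):
    value: int      # 8-bit result
    zero: bool      # result == 0
    negative: bool  # top bit set (two's-complement sign)
    carry: bool     # unsigned overflow out of bit 7 (a borrow, for SUB)

MASK = 0xFF  # assumption: an 8-bit datapath

def alu(op: str, a: int, b: int) -> AluResult:
    """Perform one hypothetical ALU operation on two 8-bit operands."""
    a &= MASK
    b &= MASK
    if op == "ADD":
        full = a + b
        carry = full > MASK
    elif op == "SUB":
        full = a - b
        carry = a < b  # borrow needed
    elif op == "MUL":
        full = a * b
        carry = full > MASK  # product did not fit in 8 bits
    elif op == "AND":
        full, carry = a & b, False
    elif op == "OR":
        full, carry = a | b, False
    else:
        raise ValueError(f"unsupported operation: {op}")
    value = full & MASK  # keep only the low 8 bits, as the hardware would
    return AluResult(value, value == 0, bool(value & 0x80), carry)

def compare(a: int, b: int) -> str:
    """Unsigned comparison: subtract, ignore the result, read the flags."""
    flags = alu("SUB", a, b)
    if flags.zero:
        return "equal"
    return "less" if flags.carry else "greater"

print(alu("ADD", 200, 100))  # AluResult(value=44, zero=False, negative=False, carry=True)
```

Real ALUs do this in combinational logic within a single cycle, and many CPUs hand multiplication to a separate, dedicated unit.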

The CPU also includes registers, small storage areas within the processor, which hold data and instructions that are currently being processed. These registers play a crucial role in speeding up the execution of instructions by providing quick access to frequently used information.

In addition to its core components, the CPU interacts with other essential components of a computer system, such as memory, input/output devices, and storage devices. It coordinates the movement of data between these components and ensures efficient communication and operation of the entire system.

The performance of a CPU depends on various factors, including its clock speed, the number of cores, cache size, and architecture. Clock speed is the number of clock cycles the CPU completes per second. All else being equal, a higher clock speed results in faster processing, although how much work a CPU does in each cycle varies from design to design.

CPU architecture, on the other hand, defines the organization and design of the processor, impacting its efficiency and capability to execute instructions. Different architectures, such as x86, ARM, and PowerPC, are utilized in various types of computing devices, catering to different computing needs.

How Does a CPU Work?

The inner workings of a CPU can be complex, but understanding the basic principles can provide insight into how it operates. At its core, a CPU follows a simple cycle of fetching, decoding, and executing instructions.

The first step in this cycle is the fetching stage. The CPU uses the program counter, a register that holds the memory address of the next instruction, to retrieve that instruction from memory (usually by way of the instruction cache) and bring it into the CPU for decoding. The program counter is then advanced to point at the following instruction.

Once the instruction is fetched, the CPU moves on to the decoding stage. During this stage, the CPU analyzes the instruction to determine what specific operation needs to be executed. This involves breaking the instruction into its fields, such as the opcode (operation code), which specifies the action to be performed, and the operands, which identify the registers, memory addresses, or values the action applies to.
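To illustrate decoding, the sketch below splits a 16-bit instruction word into fields using bit shifts and masks. The instruction format (a 4-bit opcode, a 4-bit register number, and an 8-bit immediate value) and the opcode table are invented for this example; real instruction sets such as x86 and ARM use their own, far more elaborate encodings.

```python
# Hypothetical 16-bit instruction format (an assumption for illustration only):
#   bits 15..12  opcode
#   bits 11..8   destination register
#   bits  7..0   8-bit immediate operand
OPCODES = {0x1: "LOAD_IMM", 0x2: "ADD_IMM", 0xF: "HALT"}  # hypothetical opcode table

def decode(word: int) -> tuple[str, int, int]:
    """Split one instruction word into (mnemonic, register, immediate)."""
    opcode = (word >> 12) & 0xF  # top 4 bits
    reg = (word >> 8) & 0xF      # next 4 bits
    imm = word & 0xFF            # low 8 bits
    if opcode not in OPCODES:
        raise ValueError(f"illegal opcode {opcode:#x} in word {word:#06x}")
    return OPCODES[opcode], reg, imm

print(decode(0x132A))  # ('LOAD_IMM', 3, 42)
```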

Once the instruction is decoded, the CPU proceeds to the execution stage. In this stage, the CPU carries out the desired operation based on the decoded instruction. This can involve performing arithmetic calculations, logical operations, or accessing data from memory.

Throughout this process, the CPU interacts with various components to perform its tasks. It communicates with the memory subsystem to fetch and store data, accessing RAM (Random Access Memory), which temporarily holds the instructions and data of running programs.

The CPU also utilizes registers, small on-chip storage units, to hold data and instructions that are currently being processed. Registers provide quick access to frequently used data, speeding up the execution of instructions. They act as temporary storage for intermediate results during calculations.

Additionally, the CPU interfaces with input/output devices, such as keyboards, mice, and monitors, to receive inputs and display outputs. It coordinates the flow of data between these devices and the memory, ensuring smooth communication and operation.

The entire process of fetching, decoding, and executing instructions is repeated continuously, forming a loop that allows the CPU to work through a program one instruction after another. This cycle is paced by the CPU’s clock, whose speed is measured in gigahertz (GHz, billions of cycles per second). All else being equal, a higher clock speed allows for faster processing and execution of instructions.
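The fetch, decode, and execute loop can be modeled with a toy interpreter. This sketch assumes a made-up four-register machine: the program is a Python list of tuples rather than binary machine code, so "decoding" is just unpacking the opcode and operands, and the mnemonics `LOADI`, `ADD`, `SUBI`, `JNZ`, and `HALT` are hypothetical. The sample program multiplies 6 by 4 through repeated addition.

```python
def run(program: list[tuple], num_registers: int = 4, max_steps: int = 1000) -> list[int]:
    """Run a toy program on a hypothetical machine and return the final registers."""
    regs = [0] * num_registers
    pc = 0  # program counter: index of the next instruction in "memory"
    for _ in range(max_steps):
        instr = program[pc]  # FETCH the instruction the PC points to
        pc += 1              # advance the PC to the following instruction
        op, *args = instr    # DECODE: separate the opcode from its operands
        # EXECUTE
        if op == "LOADI":    # LOADI rd, value
            rd, value = args
            regs[rd] = value
        elif op == "ADD":    # ADD rd, rs1, rs2
            rd, rs1, rs2 = args
            regs[rd] = regs[rs1] + regs[rs2]
        elif op == "SUBI":   # SUBI rd, value
            rd, value = args
            regs[rd] -= value
        elif op == "JNZ":    # JNZ rs, target: jump if register rs is non-zero
            rs, target = args
            if regs[rs] != 0:
                pc = target  # a jump simply overwrites the program counter
        elif op == "HALT":
            return regs
        else:
            raise ValueError(f"unknown opcode {op!r}")
    raise RuntimeError("step limit reached without HALT")

program = [
    ("LOADI", 0, 0),   # 0: r0 = 0 (accumulator)
    ("LOADI", 1, 6),   # 1: r1 = 6
    ("LOADI", 2, 4),   # 2: r2 = 4 (loop counter)
    ("ADD", 0, 0, 1),  # 3: r0 = r0 + r1
    ("SUBI", 2, 1),    # 4: r2 = r2 - 1
    ("JNZ", 2, 3),     # 5: if r2 != 0, jump back to address 3
    ("HALT",),         # 6: stop
]
print(run(program))  # [24, 6, 0, 0]
```

A real CPU overlaps these stages for successive instructions rather than finishing one instruction before fetching the next, but the program counter plays the same role: execution normally continues at the next address, and a jump replaces it.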

Understanding how a CPU works is essential in recognizing its crucial role in a computer system. By efficiently carrying out instructions and performing calculations, the CPU enables the functioning of various software applications and the overall execution of tasks on a computing device.

Components of a CPU

A CPU (Central Processing Unit) consists of several key components that work together to execute instructions and perform calculations. Each component has a specific role in the overall functioning of the CPU.

1. Control Unit: The control unit acts as the “brain” of the CPU, responsible for coordinating and controlling the execution of instructions. It manages the flow of data between different components, ensuring instructions are executed in the correct order.

2. Arithmetic Logic Unit (ALU): The ALU is responsible for performing mathematical calculations and logical operations. It can carry out tasks such as addition, subtraction, multiplication, and comparison. The ALU is an integral component that drives the processing capabilities of the CPU.

3. Registers: Registers are small, high-speed storage units within the CPU. They are used to hold data and instructions that are currently being processed. Registers provide quick access to frequently used information, enabling faster execution of instructions and calculations.

4. Cache Memory: Cache memory is a small but fast memory that is located within the CPU. It acts as a buffer between the CPU and the main memory (RAM), storing frequently accessed data and instructions. By providing quick access to this data, cache memory helps reduce the time it takes for the CPU to retrieve information, improving overall system performance.

5. Bus Interface: The bus interface connects the CPU to other components of the computer system, such as memory, input/output devices, and storage devices. It manages the transfer of data between these components, ensuring smooth communication and operation of the entire system.

6. Instruction Decoder: The instruction decoder is responsible for decoding instructions fetched from memory. It analyzes each instruction and determines what specific operation needs to be executed. By breaking the instruction down into smaller parts, the decoder enables the CPU to understand and execute the desired action.

7. Clock Generator: The clock generator provides timing signals to synchronize the activities of different components in the CPU. It generates regular pulses, known as clock cycles, that determine the speed at which instructions are executed. The clock speed, measured in gigahertz (GHz), impacts the overall performance of the CPU.

These components work together harmoniously to ensure the smooth operation of the CPU. Their collaboration enables the CPU to execute instructions, perform calculations, and manage data within a computer system.

Clock Speed and Performance

The clock speed of a CPU (Central Processing Unit) plays a critical role in determining its performance. Clock speed is the number of clock cycles a CPU completes per second, measured in gigahertz (GHz); a 3 GHz CPU completes three billion cycles every second.

A higher clock speed generally results in faster processing, because the CPU completes more cycles, and therefore more instructions, within a given time frame. This means that tasks and operations can be completed more quickly, leading to a smoother and more efficient user experience.

However, it is important to note that clock speed alone does not guarantee superior performance. Other factors, such as the architecture and design of the CPU, also contribute to its overall processing capabilities.

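CPUs with the same clock speed can therefore perform quite differently because of their microarchitecture. Microarchitecture refers to the specific design and organization of the internal components of a CPU, including the number and efficiency of its execution units. A common way to express the difference is instructions per cycle (IPC), the average number of instructions a CPU completes in each clock cycle.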
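A standard way to see why clock speed alone is not enough is the processor performance equation: execution time equals the instruction count divided by the product of instructions per cycle (IPC) and clock frequency. The sketch below applies it to three hypothetical CPUs running the same 6 billion instructions. The IPC values are invented for illustration and are not measurements of real chips.

```python
def cycle_time_ns(clock_ghz: float) -> float:
    """Duration of one clock cycle. With f in GHz, 1 / f is in nanoseconds."""
    return 1.0 / clock_ghz

def execution_time_s(instructions: float, ipc: float, clock_ghz: float) -> float:
    """Time = instructions / (instructions per cycle * cycles per second)."""
    cycles_per_second = clock_ghz * 1e9
    return instructions / (ipc * cycles_per_second)

workload = 6e9  # instruction count of a hypothetical program
# (name, IPC, clock in GHz): all three CPUs are hypothetical
for name, ipc, ghz in [("CPU A", 2.0, 3.0), ("CPU B", 3.0, 3.0), ("CPU C", 1.5, 4.0)]:
    t = execution_time_s(workload, ipc, ghz)
    print(f"{name}: {ghz} GHz, cycle {cycle_time_ns(ghz):.3f} ns, IPC {ipc} -> {t:.2f} s")
```

Under these assumptions CPU B matches CPU A’s clock but finishes in about two thirds of the time, while CPU C’s higher clock is cancelled out by its lower IPC, so it takes as long as CPU A.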

Furthermore, the efficiency of instruction execution and the presence of multiple cores also impact performance. A CPU with multiple cores can run several tasks truly in parallel, one per core, which improves multitasking and speeds up software designed to split its work across cores.

While a higher clock speed can lead to faster performance, there are limitations to consider. Running at higher clock speeds, which often also requires higher voltages, generates more heat, and excess heat can affect the stability and reliability of the CPU. To mitigate this, cooling mechanisms such as heatsinks and fans are used to dissipate heat and keep the CPU within safe operating temperatures.

Additionally, it is crucial to ensure that other components, such as memory and storage, can keep up with the speed of the CPU. Imbalances in performance across different components can limit the overall system performance, as the CPU may be waiting for data or instructions from other slower components.

CPU Cores and Multithreading

CPU (Central Processing Unit) cores and multithreading are essential aspects of modern processors that significantly impact performance and efficiency. Let’s explore how these features contribute to the overall functionality of a CPU.

A CPU core is an independent processing unit within a CPU, capable of fetching and executing its own stream of instructions and performing calculations. A CPU may have a single core, two cores (dual-core), four cores (quad-core), or many more, and each core can work on a different task at the same time.

Having multiple cores allows for parallel processing, where different cores can simultaneously execute instructions and carry out calculations. This improves overall performance by dividing the workload among multiple cores, enabling the CPU to handle more tasks efficiently.

For example, in a quad-core CPU, tasks can be distributed among the four cores, allowing for faster and more efficient execution. This is especially beneficial for tasks that can be parallelized, such as multimedia editing, gaming, and running multiple applications simultaneously.
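How much extra cores help depends on how much of a task can actually be parallelized. A standard model for this, not taken from this article, is Amdahl’s law: if a fraction p of the work can be spread across n cores, the best possible speedup is 1 / ((1 − p) + p / n). The sketch below assumes the parallel part divides perfectly across cores and ignores coordination overhead, so real speedups are usually lower.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup when only part of a task can run in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction  # this part always runs on one core
    return 1.0 / (serial + parallel_fraction / cores)

for p in (0.5, 0.9, 0.99):
    print(f"{p:.0%} parallel on 4 cores -> {amdahl_speedup(p, 4):.2f}x")
```

The loop prints speedups of about 1.60x, 3.08x, and 3.88x: even on a quad-core CPU, a task that is only half parallel gains far less than a fourfold boost.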

Multithreading is another important concept that enhances CPU performance. In hardware multithreading, a single core holds the state (such as registers and the program counter) of two or more threads, that is, independent sequences of instructions, so it can make progress on several of them without the operating system having to swap them in and out.

Some multithreaded cores switch rapidly between threads, for example whenever the current thread stalls while waiting for data; others, using Simultaneous Multithreading (SMT), issue instructions from several threads in the same clock cycle. This is particularly useful in multitasking environments, where many threads are ready to run at once.

Thread-level parallelism, facilitated by multithreading, improves the efficiency of processors by reducing the time a core sits idle while one thread waits for memory or input/output operations. Multithreading keeps more of the CPU’s execution resources busy, boosting overall throughput.
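The idle-time argument can be made concrete with a toy cycle-count model. In this sketch each thread is a string of hypothetical operations: `c` is a compute operation that occupies its thread for one cycle, and `m` is a memory load that blocks its thread for an assumed 4 cycles. The core issues at most one operation per cycle, taking it from the first thread that is not stalled. This resembles switch-on-stall (fine-grained) multithreading more than true SMT, which can issue from several threads in the same cycle, and it is an illustration, not a model of any real core.

```python
def cycles_to_finish(streams: list[str], mem_latency: int = 4) -> int:
    """Cycles until every op of every thread has completed on one toy core.

    streams: one string per thread; 'c' = 1-cycle compute op,
    'm' = load that blocks its thread for mem_latency cycles (assumed values).
    """
    pos = [0] * len(streams)       # next op to issue for each thread
    ready_at = [0] * len(streams)  # first cycle each thread may issue again
    cycle = 0
    while any(p < len(s) for p, s in zip(pos, streams)):
        for i, s in enumerate(streams):
            if pos[i] < len(s) and ready_at[i] <= cycle:
                op = s[pos[i]]
                pos[i] += 1
                ready_at[i] = cycle + (mem_latency if op == "m" else 1)
                break  # at most one op issued per cycle
        cycle += 1  # if no thread was ready, this cycle was idle
    return max(ready_at, default=0)

alone = cycles_to_finish(["cmcm"])
together = cycles_to_finish(["cmcm", "cmcm"])
print(alone, 2 * alone, together)  # 10 20 12
```

Run one after the other, two such threads take 20 cycles; interleaved, the second thread fills the cycles the first spends waiting on memory, and both finish in 12.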

It is important to note that the effectiveness of multithreading depends on several factors, including the availability of parallel tasks, the software’s ability to parallelize tasks, and the CPU’s implementation of multithreading, such as Simultaneous Multithreading (SMT), which Intel markets as Hyper-Threading.

Cache Memory and Its Importance

Cache memory is a small but crucial component of a CPU (Central Processing Unit) that plays a significant role in enhancing overall system performance. Let’s explore what cache memory is and why it is important.

Cache memory is a type of high-speed memory that is located closer to the CPU than main memory (RAM). It acts as a buffer between the CPU and the main memory, storing frequently accessed data and instructions.

The primary purpose of cache memory is to reduce the time it takes for the CPU to retrieve data and instructions. Since cache memory is faster to access than RAM, having the required data and instructions readily available in the cache allows the CPU to fetch and execute them more quickly.

Cache memory operates on the principle of locality. Data and instructions that were accessed recently are likely to be accessed again soon (temporal locality), and data stored close to a recently accessed address is likely to be accessed soon as well (spatial locality). By keeping this data in the cache, the CPU can avoid the need to fetch it from the slower main memory.
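Locality can be observed with a small cache simulator. The sketch below models a direct-mapped cache, in which each memory block can live in exactly one cache line. The sizes (64 lines of 64 bytes, 4 KiB in total) are arbitrary assumptions; real CPU caches are larger and usually set-associative. Sequential reads benefit from spatial locality because one miss brings in a whole line; repeated reads of the same few blocks benefit from temporal locality; reads that each land in a new block get no benefit at all.

```python
def count_hits(addresses, num_lines: int = 64, line_size: int = 64) -> int:
    """Simulate a direct-mapped cache and return how many accesses hit."""
    tags = [None] * num_lines  # tag of the block held by each line; None = empty
    hits = 0
    for addr in addresses:
        block = addr // line_size   # which memory block contains this byte
        index = block % num_lines   # the only line this block may occupy
        tag = block // num_lines    # distinguishes blocks sharing that line
        if tags[index] == tag:
            hits += 1
        else:
            tags[index] = tag       # miss: load the block, evicting the old one
    return hits

sequential = range(0, 4096, 4)     # 1024 consecutive 4-byte reads
strided = range(0, 64 * 1024, 64)  # 1024 reads, each in a different block
reused = [0, 64, 128] * 10         # the same three blocks read repeatedly

print(count_hits(sequential), count_hits(strided), count_hits(reused))  # 960 0 27
```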

Cache memory is organized into multiple levels, with each level having a different size and proximity to the processor’s cores. The first level, known as L1 cache, is the smallest and closest to each core’s execution units. It is typically split into separate instruction and data caches, allowing instructions and data to be accessed simultaneously.

As we move to higher cache levels, such as L2 and L3 caches, the size increases, but the access speed becomes slower. However, even the larger and slower caches are faster than accessing data from RAM, providing a significant performance boost.
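The benefit of stacking cache levels can be quantified with the average memory access time (AMAT): each level’s hit time plus its miss rate times the average cost of going one level further out. The sketch below assumes hypothetical latencies (4, 12, and 40 cycles for L1, L2, and L3, and 200 cycles for RAM) and hypothetical local miss rates; real values vary widely between processors and workloads.

```python
def average_access_time(levels: list[tuple[float, float]], memory_latency: float) -> float:
    """Average memory access time (AMAT) for a multi-level cache.

    levels: (hit_time, local_miss_rate) for each cache level, L1 first.
    Each level's miss penalty is the average access time of everything beyond it.
    """
    time = memory_latency
    for hit_time, miss_rate in reversed(levels):  # work inward from RAM to L1
        time = hit_time + miss_rate * time
    return time

# Hypothetical latencies in CPU cycles and local miss rates for L1, L2, L3.
hierarchy = [(4, 0.10), (12, 0.40), (40, 0.50)]
print(round(average_access_time(hierarchy, 200), 2))  # 10.8
print(average_access_time([], 200))                   # 200 (no cache at all)
```

Under these assumptions, the hierarchy brings the average cost of a memory access from 200 cycles down to about 11, even though L2 and L3 are each slower than L1.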

The importance of cache memory can be seen in its impact on the CPU’s efficiency. By reducing memory latency and improving data access times, cache memory helps in avoiding memory bottlenecks and CPU idle time.

In addition, cache memory also alleviates the load on the main memory, reducing the need to constantly access it. This leads to a more efficient utilization of system resources and overall improved performance.

It is worth noting that cache effectiveness depends on the principle of locality and the size of the cache. Larger cache sizes can accommodate more data and instructions, reducing cache misses (failures to find data in the cache) and improving overall performance.

Overall, cache memory plays a crucial role in bridging the speed gap between the CPU and main memory. Its ability to store frequently accessed data and instructions allows the CPU to operate more efficiently, delivering faster and more responsive computing experiences.

CPU Architecture and Instruction Set

CPU (Central Processing Unit) architecture refers to the organization and design of the internal components of a processor. It determines how instructions are executed, how data is processed, and how different components of the CPU interact with each other.

One significant aspect of CPU architecture is the instruction set, which defines the set of instructions that a CPU can understand and execute. Each CPU architecture has its own unique instruction set, consisting of specific operations and commands.

The instruction set includes basic operations, such as arithmetic calculations and logical operations, as well as more complex instructions for executing branching, looping, and data manipulation. Different instruction sets have different capabilities, allowing CPUs to cater to specific computing needs.

Two common instruction set architectures are CISC (Complex Instruction Set Computing) and RISC (Reduced Instruction Set Computing).

CISC architecture encompasses a rich set of complex instructions, which can perform multiple operations in a single instruction. This reduces the number of instructions required to perform a task but can result in more complex decoding and execution processes. x86 is a widely used CISC architecture.

RISC architecture, on the other hand, focuses on simplicity by providing a smaller set of simple, uniform instructions. A task may require more RISC instructions than CISC ones, but each instruction is easier to decode and typically completes quickly, which makes RISC designs well suited to pipelining. ARM is a popular example of a RISC architecture.
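The CISC and RISC styles can be contrasted with a toy example. Below, `ADDM` is an invented CISC-style instruction that adds two values in memory in a single step, and `expand_to_load_store` rewrites it as the load, add, and store sequence a load/store (RISC-style) machine needs, because such a machine only does arithmetic on registers. This is loosely similar to how modern x86 cores break complex instructions into simpler internal micro-operations, but every mnemonic and function here is hypothetical.

```python
def expand_to_load_store(instr: tuple[str, str, str]) -> list[tuple[str, ...]]:
    """Rewrite the hypothetical ADDM (memory[dst] += memory[src]) as RISC-style steps."""
    op, dst, src = instr
    if op != "ADDM":
        raise ValueError(f"only ADDM is modeled here, got {op!r}")
    return [
        ("LOAD", "r1", dst),        # r1 <- memory[dst]
        ("LOAD", "r2", src),        # r2 <- memory[src]
        ("ADD", "r1", "r1", "r2"),  # r1 <- r1 + r2 (registers only)
        ("STORE", "r1", dst),       # memory[dst] <- r1
    ]

def run_cisc(memory: dict, instr: tuple[str, str, str]) -> None:
    """Execute one ADDM: a single instruction reads and writes memory."""
    _, dst, src = instr
    memory[dst] = memory[dst] + memory[src]

def run_risc(memory: dict, program: list[tuple[str, ...]]) -> None:
    """Execute load/store-style steps; arithmetic touches registers only."""
    regs = {}
    for op, *args in program:
        if op == "LOAD":
            regs[args[0]] = memory[args[1]]
        elif op == "ADD":
            regs[args[0]] = regs[args[1]] + regs[args[2]]
        elif op == "STORE":
            memory[args[1]] = regs[args[0]]
        else:
            raise ValueError(f"unknown op {op!r}")

cisc_mem = {"x": 5, "y": 7}
run_cisc(cisc_mem, ("ADDM", "x", "y"))
risc_program = expand_to_load_store(("ADDM", "x", "y"))
risc_mem = {"x": 5, "y": 7}
run_risc(risc_mem, risc_program)
print(cisc_mem == risc_mem, len(risc_program))  # True 4
```

Both versions compute the same result; the CISC style needs one more complex instruction, while the RISC style needs four simpler ones.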

CPU architecture also determines the size and organization of registers, cache memory, and other internal components. The memory hierarchy, including the size and levels of cache memory, is vital in improving efficiency and reducing memory access time.

Furthermore, advancements in CPU architecture have led to innovations such as pipelining, which overlaps the fetch, decode, and execute stages of successive instructions, and superscalar processors, which can issue and execute multiple instructions in the same clock cycle.
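Pipelining’s benefit is easiest to see by counting cycles. The sketch below assumes an ideal five-stage pipeline using the classic textbook stage names (IF fetch, ID decode, EX execute, MEM memory access, WB write-back) with no stalls or hazards, which real pipelines cannot always achieve. Without pipelining, n instructions take n times the number of stages in cycles; with it, they take the number of stages plus n minus 1, because once the pipeline is full one instruction completes every cycle.

```python
def cycles_unpipelined(n_instructions: int, stages: int) -> int:
    """Each instruction passes through every stage before the next one starts."""
    return n_instructions * stages

def cycles_pipelined(n_instructions: int, stages: int) -> int:
    """Ideal pipeline: fill it once, then one instruction completes per cycle."""
    if n_instructions == 0:
        return 0
    return stages + (n_instructions - 1)

def pipeline_diagram(n_instructions: int, stage_names: list[str]) -> list[str]:
    """One text row per instruction showing which stage it occupies in each cycle."""
    total = cycles_pipelined(n_instructions, len(stage_names))
    rows = []
    for i in range(n_instructions):
        cells = ["  .  "] * total
        for s, name in enumerate(stage_names):
            cells[i + s] = f"{name:^5}"  # instruction i is in stage s at cycle i + s
        rows.append(f"I{i}: " + "".join(cells))
    return rows

for row in pipeline_diagram(4, ["IF", "ID", "EX", "MEM", "WB"]):
    print(row)
print(cycles_unpipelined(100, 5), cycles_pipelined(100, 5))  # 500 104
```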

The choice of CPU architecture is dependent on the intended use and the specific requirements of a computing system. Different architectures are optimized for different applications, such as high-performance computing, embedded systems, or mobile devices.

It is also worth noting that software compatibility plays a crucial role in CPU architecture. Different instruction sets may require software to be compiled or optimized specifically for that architecture. This impacts the compatibility and performance of software applications on different CPUs.

Ultimately, CPU architecture and instruction set have a profound impact on the performance, efficiency, and compatibility of a CPU. The choice of architecture is driven by factors such as the intended application, system requirements, and the need for specific features and capabilities.

Overclocking a CPU

Overclocking a CPU (Central Processing Unit) is the process of running it at a clock speed higher than the manufacturer’s specification in order to gain performance. This practice involves raising the CPU’s clock multiplier or its base clock frequency, resulting in faster processing speeds and improved system performance.
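The relationship between the base clock and the multiplier is a simple product. The figures below are assumptions for illustration: a 100 MHz base clock, which is common on current desktop platforms, and a stock multiplier of 36. The function names are hypothetical. On many platforms the base clock also feeds other parts of the system, which is one reason adjusting the multiplier is the more common approach where the CPU allows it.

```python
def core_clock_mhz(base_clock_mhz: float, multiplier: float) -> float:
    """Core frequency = base (reference) clock x CPU multiplier."""
    return base_clock_mhz * multiplier

def overclock_percent(stock_mhz: float, new_mhz: float) -> float:
    """How far above the stock frequency a new setting runs, in percent."""
    return (new_mhz / stock_mhz - 1.0) * 100.0

stock = core_clock_mhz(100, 36)          # 3600 MHz = 3.6 GHz (assumed stock setting)
by_multiplier = core_clock_mhz(100, 45)  # raise the multiplier: 4500 MHz
by_base_clock = core_clock_mhz(105, 36)  # raise the base clock instead: 3780 MHz
print(overclock_percent(stock, by_multiplier))  # 25.0
```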

Overclocking offers several potential benefits, such as increased system responsiveness, faster program execution, and improved gaming performance. It can give a significant performance boost, especially for demanding tasks that require high computational power, such as video editing, 3D rendering, or scientific simulations.

However, it’s important to note that overclocking also comes with some risks and considerations. Higher clock speeds, and the higher voltages often needed to keep them stable, increase power consumption, which results in more heat generation. This heat must be effectively dissipated to prevent overheating, which can damage the CPU or other system components.
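A common first-order model explains why overclocking raises heat output so quickly: the dynamic (switching) power of CMOS logic is roughly proportional to C × V² × f, where C is the switched capacitance, V the supply voltage, and f the clock frequency. The sketch compares power ratios under that model. The voltages are hypothetical, and the model ignores static (leakage) power, which also grows with voltage and temperature. The same relation shows why lowering the voltage (undervolting) cuts power disproportionately.

```python
def relative_dynamic_power(f_old: float, f_new: float, v_old: float, v_new: float) -> float:
    """Ratio of new to old dynamic power, assuming P ~ C * V^2 * f
    with the switched capacitance and activity factor unchanged."""
    return (f_new / f_old) * (v_new / v_old) ** 2

# Hypothetical settings: 3.6 GHz at 1.20 V stock.
overclock = relative_dynamic_power(3.6, 4.5, 1.20, 1.35)  # +25% clock, +12.5% voltage
undervolt = relative_dynamic_power(3.6, 3.6, 1.20, 1.10)  # same clock, lower voltage
print(f"overclock: {overclock:.2f}x power, undervolt: {undervolt:.2f}x power")
# overclock: 1.58x power, undervolt: 0.84x power
```

Under these assumptions, a 25% clock increase that needs a 12.5% voltage increase costs roughly 58% more dynamic power, all of which must be removed as heat.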

To manage the increased heat, adequate cooling solutions are necessary. This can include improved air cooling with larger heatsinks and fans, or more advanced cooling methods like liquid cooling systems. It’s crucial to monitor the CPU temperature regularly to ensure it remains within safe limits.

Overclocking may also require adjustments to other system settings, such as memory timings and voltage levels. These adjustments are necessary to maintain system stability and prevent crashes or instability caused by the increased clock speed. Knowledge and understanding of the system’s limitations and proper configuration are essential for successful overclocking.

While overclocking can provide performance benefits, it’s important to consider the potential drawbacks. Depending on the manufacturer’s terms, overclocking can void the CPU’s warranty, so it’s essential to understand the risks involved and make an informed decision. Additionally, excessive overclocking or improper configuration can lead to instability, system crashes, data corruption, or even permanent damage to the CPU.

Overclocking is often favored by computer enthusiasts, gamers, and professionals who require maximum performance. It allows them to push the limits of their systems and extract extra performance when needed. However, for average users or those with stability concerns, it may be more prudent to stick to the manufacturer’s recommended specifications.

It’s worth noting that not all processors are created equal, and individual CPUs may have varying limits for overclocking. Some CPUs may overclock more easily and achieve higher speeds, while others may have limitations due to factors such as manufacturing variances or architectural constraints.

Overall, overclocking a CPU can provide a significant boost in performance for those who are willing to take the risks and manage the necessary considerations. With proper cooling, system stability monitoring, and careful configurations, overclocking can enhance the performance of a CPU and provide a more powerful computing experience.

CPU Cooling Techniques

CPU (Central Processing Unit) cooling is a critical aspect of maintaining optimal performance and preventing overheating, which can lead to system instability and damage. Various cooling techniques and technologies are employed to dissipate heat and ensure that the CPU operates within safe temperature limits.

1. Air Cooling: Air cooling is the most common and cost-effective method of CPU cooling. It involves the use of a heatsink and fan (HSF) combination. The heatsink helps absorb and disperse the heat generated by the CPU, while the fan blows cool air over the heatsink, aiding the heat dissipation process. Proper airflow within the computer case is crucial for efficient air cooling.

2. Liquid Cooling: Liquid cooling can offer more effective cooling than air cooling, making it popular among enthusiasts and overclockers. In this method, a closed-loop system or custom setup circulates liquid coolant through a CPU water block, which transfers heat away from the processor. The heated liquid then passes through a radiator, where it is cooled by fans before returning to the CPU water block.

3. Direct Die Cooling: Direct die cooling involves removing the integrated heat spreader (IHS) from the CPU, a process known as delidding, and applying the cooling solution directly to the CPU die. This method eliminates the thermal resistance introduced by the heat spreader, allowing for more efficient heat transfer. It requires careful installation and is typically used by experienced overclockers seeking maximum cooling performance.

4. Phase-Change Cooling: Phase-change cooling uses a refrigeration cycle, much like a refrigerator, to cool the CPU. A liquid refrigerant evaporates in an evaporator mounted on the CPU, absorbing heat; a compressor then pressurizes the vapor, and a condenser releases the heat and turns the refrigerant back into liquid before the cycle repeats. Phase-change cooling can provide exceptionally low temperatures, but it is costly, complex, and often more suitable for extreme overclocking or specialized applications.

5. Thermal Paste: Thermal paste or thermal compound is a material applied between the CPU and the heatsink. It helps fill microscopic imperfections and air gaps, improving heat transfer between the two surfaces and enhancing cooling efficiency. Proper application of thermal paste ensures maximum contact and conductivity, minimizing thermal resistance.

6. Undervolting: Undervolting is a technique where the CPU’s operating voltage is lowered below its stock setting. This reduces power consumption and heat generation, and many CPUs remain stable at a modestly reduced voltage. Undervolting requires careful monitoring and testing, because a voltage set too low leads to crashes or instability.

7. Passive Cooling: Passive cooling techniques rely solely on heat dissipation through natural convection. This method typically utilizes large heatsinks with extended fin arrays, allowing for efficient heat transfer without the need for active cooling components like fans. Passive cooling is commonly found in small form factor systems or in low-power CPUs that generate less heat.

It’s crucial to select a cooling technique based on the specific requirements of the CPU, system configuration, and intended usage. Proper airflow management, regular cleaning of cooling components, and monitoring of temperatures are essential to maintain optimal cooling performance and ensure the longevity of the CPU.

Choosing the Right CPU for Your Needs

When selecting a CPU (Central Processing Unit), there are several considerations to keep in mind to ensure it meets your specific requirements and provides optimal performance for your needs. Here are some factors to consider when choosing the right CPU:

1. Performance: Assess your computing needs and determine the required level of performance. Consider factors such as clock speed, number of cores, and cache size. Higher clock speeds and more cores generally result in better performance for tasks that require multitasking, gaming, video editing, or other CPU-intensive activities.

2. Budget: Set a budget for your CPU purchase. Understanding your financial constraints will help narrow down the options and focus on CPUs that provide the best value for your money. Remember to allocate an appropriate portion of your budget to the CPU as it is a crucial component for overall system performance.

3. Compatibility: Ensure that the CPU is compatible with your motherboard and other system components. Check the socket type and chipset compatibility to ensure seamless integration with your existing hardware; a CPU that does not match the motherboard’s socket or chipset may not work at all.

4. Power Efficiency: Evaluate the power requirements of the CPU. Power-efficient CPUs can help reduce electricity consumption and heat generation, which can result in lower energy costs and better system longevity. CPUs with lower TDP (Thermal Design Power) ratings generally draw less power under sustained load and produce less heat, though true efficiency also depends on how much work a CPU gets done per watt.

5. Future Proofing: Consider your long-term needs and future-proof your CPU choice to some extent. Look for CPUs that offer a good balance of performance and compatibility with evolving technologies. This can help ensure that your system remains relevant and capable of handling future software and hardware advancements.

6. Workload Specificity: Different CPUs excel at specific tasks. If your work or hobbies involve specific software or applications that are highly optimized for certain CPU architectures, consider choosing a CPU that is well-suited for those specific workloads. For example, certain CPUs may be better for gaming, while others may be more suitable for content creation or scientific computing.

7. Reviews and Benchmarks: Research and read reviews or benchmarks to gain insights into the performance and reliability of different CPU models. Professional and user-generated reviews can provide valuable information and help you make an informed decision based on real-world experiences.

Remember that the perfect CPU choice depends on your unique computing needs and priorities. By considering factors such as performance, budget, compatibility, power efficiency, future-proofing, workload specificity, and reviews, you can make an informed decision and select the right CPU that will meet your requirements and deliver optimal performance.

Future Trends in CPU Technology

CPU (Central Processing Unit) technology continues to evolve, driven by the demand for increased performance, power efficiency, and new computing capabilities. Here are some future trends that are shaping the direction of CPU technology:

1. More Cores and Multithreading: CPUs are expected to incorporate even more cores in the future. With advancements in manufacturing processes, higher numbers of cores can be integrated onto a single chip. This will enable more parallel processing and enhanced multitasking capabilities. Additionally, multithreading technologies such as Simultaneous Multithreading (SMT), which Intel markets as Hyper-Threading, are likely to remain widely used, allowing each core to handle multiple threads simultaneously.

2. Increased Power Efficiency: Power efficiency has become a critical consideration for CPU design. Future CPUs are expected to focus on improving power efficiency, reducing energy consumption, and minimizing heat generation. This will lead to more environmentally friendly computing solutions and longer battery life for mobile devices.

3. Advanced Manufacturing Processes: Advances in manufacturing, such as smaller process nodes and new transistor designs like FinFET and Gate-All-Around (GAA), will enable CPUs that pack more transistors into the same area and are faster and more power-efficient. These advances help overcome the physical limits of shrinking traditional planar transistors and pave the way for further innovation in CPU design.

4. Artificial Intelligence (AI) Integration: AI is becoming increasingly pervasive in various applications. Future CPUs are expected to incorporate dedicated AI accelerators or specialized processing units to enhance AI-related tasks, such as machine learning, deep learning, and neural network computations. These AI-focused components will improve AI performance and enable more efficient AI processing.

5. Quantum Computing: Quantum computing holds the potential to revolutionize certain kinds of computation by using quantum bits (qubits). While still in its early stages, research and development of quantum processors is gaining momentum. For certain classes of problems, quantum processors could find solutions much faster than classical CPUs, leading to advances in fields such as cryptography, drug discovery, and optimization.

6. Heterogeneous Computing: Heterogeneous computing employs different types of processors (CPU, GPU, FPGA, etc.) working together to handle diverse workloads efficiently. In the future, CPUs are expected to integrate more heterogeneous computing elements, enabling seamless cooperation between different processors. This integration will optimize performance for different applications and workloads, improving overall system efficiency.

7. Neuromorphic Computing: Inspired by the structure and function of the human brain, neuromorphic computing focuses on developing processors that mimic networks of biological neurons and synapses. These processors would excel at tasks such as pattern recognition, sensory perception, and cognitive computing. Neuromorphic processors have the potential to revolutionize AI and enable advances in areas such as robotics, autonomous vehicles, and natural language processing.

The future of CPU technology holds exciting possibilities, driven by the ongoing demand for faster, more efficient, and intelligent computing solutions. Advancements in core count, power efficiency, manufacturing processes, AI integration, quantum computing, heterogeneous computing, and neuromorphic computing are set to shape the CPUs of tomorrow.