What Does Cache Mean?

A cache is a small, fast, temporary store that holds copies of frequently or recently accessed data so that future requests for that data can be served more quickly. The best-known example is the CPU cache, which sits between the processor and main memory. More generally, a cache sits between any consumer of data and a slower source, reducing latency and improving overall system performance.

In simple terms, cache is like a shortcut that allows your computer or device to access data quickly without having to retrieve it from the original source every time it’s needed. It stores copies of data that the system anticipates will be used again, based on past behavior or programming logic.

Cache is commonly used in various computing systems, from web browsers and operating systems to databases and CPU architectures. It helps optimize performance by reducing the time and resources required to retrieve data.

When you visit a website, for example, your browser stores elements like images, scripts, and style sheets in its cache. The next time you visit the same website, instead of downloading these elements again, your browser retrieves them from the cache. This results in faster page loading times and less strain on the network.

Search engines also rely on caching. They can store the results of popular queries so that repeated requests for the same query are answered quickly, without recomputing the results.

Overall, cache allows systems to work more efficiently by reducing latency and optimizing resource usage. It saves time, improves user experience, and enhances system performance.

How Does Cache Work?

Cache works by exploiting the principle of locality. Programs tend to access data they have used recently again in the near future (temporal locality), and to access data stored near recently used data (spatial locality). Caches are often organized as a hierarchy of levels with varying speeds and sizes.
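To make locality concrete, here is a toy Python model in which memory is fetched in fixed-size blocks, similar to cache lines. It does not describe how real hardware is built. The block size of 8 elements and the assumption that loaded blocks are never evicted are simplifications chosen for illustration, and `count_misses` is a hypothetical helper.

```python
# Toy model: memory is loaded into the cache one block (cache line) at a time.
# BLOCK_SIZE and the "never evict" behavior are simplifying assumptions.
BLOCK_SIZE = 8  # elements per block (hypothetical value)

def count_misses(addresses, block_size=BLOCK_SIZE):
    """Count misses, assuming every block stays cached once it is loaded."""
    loaded_blocks = set()
    misses = 0
    for addr in addresses:
        block = addr // block_size
        if block not in loaded_blocks:
            misses += 1              # first touch of this block: fetch it
            loaded_blocks.add(block)
    return misses

n = 64
sequential = list(range(n))                     # neighbors share a block
strided = [i * BLOCK_SIZE for i in range(n)]    # every access hits a new block

print(count_misses(sequential))      # 8
print(count_misses(sequential * 2))  # 8: the second pass reuses cached blocks
print(count_misses(strided))         # 64
```

Sequential access misses only once per block because neighboring elements share a block (spatial locality), and replaying the same addresses causes no new misses (temporal locality). The strided pattern defeats spatial locality, so every access misses.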

When a system needs to access data, it first checks the cache hierarchy for the presence of that data. If the data is found in the cache, it is known as a cache hit, and the system retrieves the data from there, resulting in faster access times.

On the other hand, if the data is not present in the cache, it is known as a cache miss. In this case, the system needs to retrieve the data from the slower main memory or the original source, which takes more time and resources.

CPU caches are typically organized into levels such as L1, L2, and L3. L1 cache, also known as the primary cache, is the smallest and fastest level and sits closest to the processor core. It is often split into separate caches for instructions and data. If the data is not found in L1, the processor checks the next level, L2, which is larger but slower. If L2 also misses, it checks L3 (where present), and only if every level misses is the data fetched from main memory.
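The lookup order through the cache levels can be sketched as a chain of progressively larger stores. In this Python toy model, the `Level` class, the `lookup` function, the capacities, and the policy of filling every level on each access are all illustrative assumptions. Real processors do this in hardware, and their inclusion and fill policies vary by design.

```python
from collections import OrderedDict

class Level:
    """One cache level: a small store with LRU eviction (a simplifying assumption)."""
    def __init__(self, name, capacity):
        self.name = name
        self.capacity = capacity
        self.store = OrderedDict()

    def put(self, key, value):
        self.store[key] = value
        self.store.move_to_end(key)          # mark as most recently used
        if len(self.store) > self.capacity:
            self.store.popitem(last=False)   # evict the least recently used entry

def lookup(levels, memory, key):
    """Check each level from fastest to slowest, falling back to main memory.
    Returns the value and the name of whichever level served it."""
    for level in levels:
        if key in level.store:
            value, source = level.store[key], level.name
            break
    else:
        value, source = memory[key], "memory"  # missed in every level
    for level in levels:
        level.put(key, value)  # fill all levels so the next access hits L1
    return value, source

memory = {addr: addr * 10 for addr in range(100)}  # stand-in for main memory
levels = [Level("L1", 2), Level("L2", 4), Level("L3", 8)]

print(lookup(levels, memory, 5))  # (50, 'memory'): cold miss everywhere
print(lookup(levels, memory, 5))  # (50, 'L1'): hit in the fastest level
lookup(levels, memory, 1)
lookup(levels, memory, 2)         # L1 (capacity 2) evicts address 5
print(lookup(levels, memory, 5))  # (50, 'L2'): L1 miss, L2 hit
```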

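Write policies determine how changes made in the cache reach main memory. A write-through cache updates main memory on every write, while a write-back cache marks modified entries as dirty and writes them to main memory only when they are evicted. In multi-core systems, where each core has its own private caches, cache coherence protocols (such as MESI) keep copies of the same data in different caches consistent with one another.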
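Whether, and when, a write to the cache also reaches main memory depends on the cache’s write policy. The two hypothetical classes below contrast the write-through and write-back policies in simplified form. They ignore capacity limits and use a plain dict as a stand-in for main memory.

```python
class WriteThroughCache:
    """Every write updates both the cache and main memory immediately."""
    def __init__(self, memory):
        self.memory = memory
        self.lines = {}

    def write(self, addr, value):
        self.lines[addr] = value
        self.memory[addr] = value


class WriteBackCache:
    """Writes stay in the cache, marked dirty, until the entry is evicted."""
    def __init__(self, memory):
        self.memory = memory
        self.lines = {}
        self.dirty = set()

    def write(self, addr, value):
        self.lines[addr] = value
        self.dirty.add(addr)

    def evict(self, addr):
        if addr in self.dirty:
            self.memory[addr] = self.lines[addr]  # write modified data back
            self.dirty.discard(addr)
        self.lines.pop(addr, None)


mem_a = {0: 0}
WriteThroughCache(mem_a).write(0, 42)
print(mem_a[0])  # 42

mem_b = {0: 0}
wb = WriteBackCache(mem_b)
wb.write(0, 42)
print(mem_b[0])  # 0: memory is stale until the entry is evicted
wb.evict(0)
print(mem_b[0])  # 42
```

Write-back saves memory traffic when the same location is written repeatedly, at the cost of main memory being temporarily out of date.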

Cache management techniques, such as replacement policies, determine which data should be evicted from the cache when it becomes full. Common replacement policies include Least Recently Used (LRU), First In, First Out (FIFO), and Most Recently Used (MRU).
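A Least Recently Used policy can be sketched in a few lines of Python with `collections.OrderedDict`, which keeps keys in order and provides `move_to_end` and `popitem`. The `LRUCache` class is a minimal illustration, not a production implementation. For example, it is not thread-safe.

```python
from collections import OrderedDict

class LRUCache:
    """Fixed-capacity cache that evicts the least recently used key."""
    def __init__(self, capacity):
        self.capacity = capacity
        self.data = OrderedDict()  # ordered from least to most recently used

    def get(self, key, default=None):
        if key not in self.data:
            return default
        self.data.move_to_end(key)  # mark as most recently used
        return self.data[key]

    def put(self, key, value):
        if key in self.data:
            self.data.move_to_end(key)
        self.data[key] = value
        if len(self.data) > self.capacity:
            self.data.popitem(last=False)  # evict the least recently used key

cache = LRUCache(2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")           # "a" becomes the most recently used key
cache.put("c", 3)        # cache is over capacity, so "b" is evicted
print(list(cache.data))  # ['a', 'c']
```

Changing the policy means changing which entry is evicted. Dropping both `move_to_end` calls turns this into FIFO, because entries then leave in insertion order. For caching function results, Python’s standard library already provides `functools.lru_cache`.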

Overall, cache plays a vital role in improving system performance by reducing the time and resources required to retrieve data. It helps overcome the speed mismatch between the processor and the main memory, resulting in faster computation and improved efficiency.

Types of Cache

There are several types of cache used in computing systems, each designed for different purposes and levels within the memory hierarchy. Let’s explore some of the most common types:

- Processor Cache: Also known as CPU cache, this type of cache is built directly into the processor itself. It is further divided into multiple levels, including L1, L2, and sometimes L3 caches. These caches are designed to store frequently accessed data and instructions, helping to alleviate the speed mismatch between the fast processor and the slower main memory.

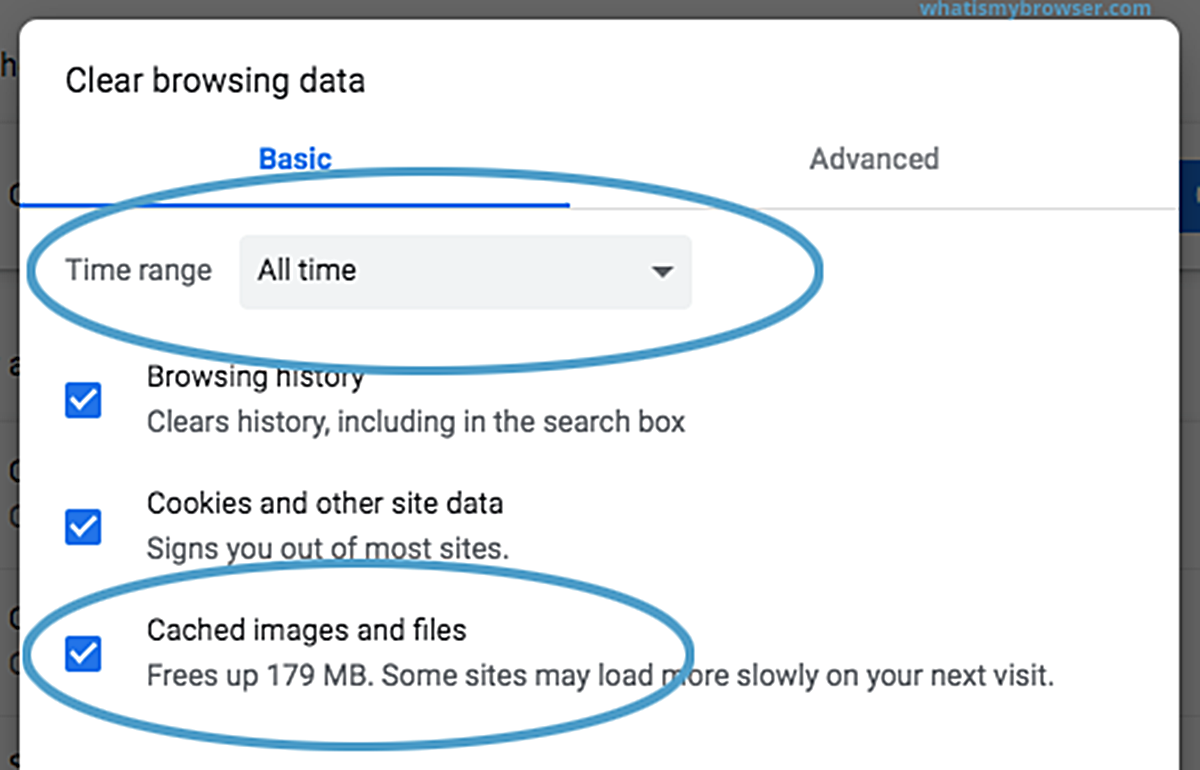

- Web Browser Cache: Web browsers use cache to store elements of web pages, such as images, scripts, and style sheets. This type of cache helps speed up website loading times by retrieving the stored elements instead of downloading them again from the server. Clearing the browser cache can resolve issues related to outdated or incorrect data.

- Disk Cache: Disk cache stores recently accessed data from a hard disk drive (HDD) or solid-state drive (SSD) in faster memory. The term covers both the small buffer built into the drive itself and the buffer cache that the operating system keeps in main memory. It improves disk read and write performance by reducing the number of physical disk accesses, holding data that is likely to be accessed again in the near future.

- Database Cache: Database systems cache frequently accessed data and query results in memory. This reduces the need to access the underlying storage, leading to improved query response times and overall system performance.

- Operating System Cache: The operating system uses caching to keep recently used data and system files in memory. This includes the file system cache (called the page cache on Linux), which holds recently read and written file data in RAM, as well as caches for file system metadata such as directory entries. By serving these from memory, the operating system cache reduces disk access and puts otherwise idle RAM to use.

These are just a few examples of cache types used in different computing systems. Each type serves a specific purpose and contributes to improving system performance by reducing latency, optimizing resource usage, and enhancing overall efficiency.

Benefits of Using Cache

The use of cache in computing systems brings several benefits that enhance performance, efficiency, and user experience. Let’s explore some of the key advantages:

- Faster Data Access: Cache significantly reduces the time taken to retrieve data by keeping frequently accessed information in faster storage closer to where it is used, such as the processor or the user’s device. This results in faster access times and improved system responsiveness.

- Reduced Latency: By providing quicker access to data, cache reduces the latency or delay in retrieving information from the main memory or external sources. This leads to faster computation and improved overall system performance.

- Improved Resource Usage: Cache helps optimize resource utilization by reducing the number of times data needs to be fetched from slower storage devices or network sources. This results in more efficient use of system resources and better scalability.

- Enhanced User Experience: With cache, applications, websites, and other computing systems can load and respond faster, providing a smoother and more seamless user experience. Faster page loading times and reduced network strain contribute to improved user satisfaction.

- Cost Savings: Using cache can help reduce costs associated with hardware upgrades or investments in high-speed storage devices. By leveraging cache to improve performance, organizations can get more out of their existing infrastructure.

- Improved Scalability: Cache helps support scalability by reducing the load on primary data sources. As the number of users or requests grows, serving repeated requests from the cache helps keep data retrieval fast without overwhelming the system or causing bottlenecks.

- Better System Availability: Cache can also improve availability by reducing the impact of network outages or temporary unavailability of data sources. While a source is unreachable, the cache can continue to serve previously retrieved information, although that data may be stale.

Overall, the benefits of using cache are multifaceted, ranging from improved performance and efficiency to cost savings and enhanced user experience. By leveraging cache effectively, computing systems can achieve faster data access, reduced latency, and optimized resource usage, resulting in a more seamless and responsive computing experience.

Common Uses of Cache

Cache is extensively used in various computing systems to improve performance, reduce latency, and enhance user experience. Let’s explore some of the most common applications of cache:

- Web Browsing: Web browsers utilize cache to store elements of web pages, such as images, style sheets, and scripts. This allows subsequent visits to the same website to load faster as the browser retrieves the cached elements instead of downloading them again from the internet.

- Search Engines: Search engines use cache to store the results of previous queries. When the same query is made again, the search engine can return the cached results instead of processing the query from scratch. This speeds up search result delivery and enhances user experience.

- Operating Systems: Operating systems employ cache to store frequently accessed data and system files. This includes the file system cache, which keeps recently accessed data from storage devices in memory, and metadata caches for structures such as directory entries. Caching in the operating system improves overall system performance and responsiveness.

- Database Systems: Database cache is used to store frequently accessed data and query results. By caching frequently requested data, database systems can reduce the need to access the underlying storage system, resulting in improved query response times and overall database performance.

- Content Delivery Networks (CDNs): CDNs use cache to store copies of website content at strategically distributed edge server locations. This allows users to access content from a nearby server, reducing latency and improving load times, especially for static content like images, videos, and documents.

- File Sharing Applications: File sharing applications often utilize cache to store recently accessed files or file parts. This helps reduce the need to re-download files, improving transfer speeds and overall user experience.

- Virtual Memory Management: Virtual memory effectively uses main memory as a cache for pages stored in secondary storage. Keeping frequently accessed pages resident in RAM reduces the performance impact of page swaps between main memory and secondary storage. In addition, the processor’s translation lookaside buffer (TLB) caches recent virtual-to-physical address translations.

These are just a few examples of how cache is commonly used in various computing systems. By leveraging cache effectively, organizations can achieve faster data access, improved system performance, and an overall enhanced user experience.

Challenges and Limitations of Cache

While cache provides significant benefits in terms of improving performance and reducing latency, it is important to be aware of its challenges and limitations. Let’s explore some of the common challenges associated with cache:

- Cache Coherency: Maintaining cache coherency, ensuring that data in the cache is consistent with the data in the main memory or other caches, can be a complex task. The use of multiple caches and parallel processing can introduce possible inconsistencies, requiring careful synchronization and coordination mechanisms.

- Cache Invalidation: Cache invalidation, the process of marking cached data as outdated or no longer valid, can be a significant challenge. Ensuring that the cache reflects the most recent and accurate data requires effective cache invalidation strategies, such as time-based invalidation or invalidation based on specific events or triggers.

- Cache Size Limitations: Cache size limitations can pose challenges, especially when dealing with large amounts of data. Limited cache capacity can result in higher cache miss rates, reducing the effectiveness of cache in improving performance.

- Cache Pollution: In some cases, cache pollution can occur when less frequently accessed data occupies valuable cache space, displacing more frequently accessed data. This can impact cache hit rates and degrade overall system performance.

- Cache Consistency: In distributed systems with multiple caches, maintaining cache consistency across different nodes can be challenging. Ensuring that all caches have the same view of data and are synchronized requires sophisticated cache coherence protocols and careful coordination.

- Temporal and Spatial Locality: Cache performance heavily relies on the presence of temporal and spatial locality in data access patterns. If the data access pattern lacks locality, cache efficiency may be reduced, resulting in increased cache miss rates and degraded performance.

- Cache Warm-up and Cold-start: When a cache is initially empty or does not contain frequently accessed data, a cache warm-up period is required for data to be populated in the cache. During this period, cache miss rates may be high, resulting in slower performance until the cache becomes fully populated and effective.
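Cache pollution is easy to reproduce with an LRU cache. In the sketch below, a hypothetical `lru_hits` helper replays access sequences through a three-entry LRU cache. The key names and sizes are made up for illustration.

```python
from collections import OrderedDict

def lru_hits(accesses, capacity):
    """Replay an access sequence through an LRU cache and count the hits."""
    cache = OrderedDict()
    hits = 0
    for key in accesses:
        if key in cache:
            hits += 1
            cache.move_to_end(key)          # refresh recency on a hit
        else:
            cache[key] = True               # miss: load the key
            if len(cache) > capacity:
                cache.popitem(last=False)   # evict the least recently used key
    return hits

hot = ["a", "b", "c"] * 3            # small, frequently reused working set
scan = [f"s{i}" for i in range(3)]   # one-off keys, e.g. from a bulk scan

print(lru_hits(hot + hot, capacity=3))         # 15
print(lru_hits(hot + scan + hot, capacity=3))  # 12
```

The three scanned keys are never reused, yet they evict the entire hot working set. As a result, the first pass over the hot keys after the scan misses three times, even though those keys were in heavy use a moment earlier. This is one reason some systems bypass or limit the cache for bulk scans.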

It is important for developers and system architects to be aware of these challenges and limitations when designing and implementing cache solutions. Understanding the trade-offs and implementing appropriate strategies can help mitigate these limitations and optimize cache performance.

Tips for Optimizing Cache Performance

To maximize the benefits of cache and improve system performance, it is important to optimize cache utilization. Here are some tips for optimizing cache performance:

- Cache Size Selection: Carefully consider the cache size based on the application’s needs and the available memory resources. A larger cache can hold more data, reducing cache miss rates, but it may also consume more memory and incur higher costs.

- Cache Replacement Policies: Choose an appropriate cache replacement policy that best suits the application’s data access patterns. Common policies include Least Recently Used (LRU), First In, First Out (FIFO), and Most Recently Used (MRU). Analyze the application’s behavior to determine the most effective policy.

- Cache Partitioning: Consider partitioning the cache, dividing its capacity among applications, processor cores, or categories of data, so that one workload cannot evict another’s frequently accessed data. Partition sizes can then be adjusted based on access patterns and data importance to optimize cache hit rates.

- Cache Preloading: Preload frequently accessed data into the cache during system startup or warm-up periods to minimize cache misses. This can be done by identifying and prefetching data based on historical usage patterns or predicted future access.

- Data Locality Optimization: Optimize software algorithms to increase data locality. For example, store data that is used together contiguously in memory and traverse it in the order it is laid out. This reduces cache miss rates and improves cache efficiency.

- Cache Invalidation Strategies: Implement effective cache invalidation strategies to ensure that stale or outdated data is promptly removed from the cache. This can be based on time-based expiration, event-based invalidation, or explicit invalidation mechanisms.

- Cache-aware Data Structures: Use data structures designed to take advantage of cache behavior. Examples include contiguous arrays, which have good spatial locality, and B-trees, whose wide nodes map well onto cache lines or disk pages. These structures reduce the number of cache misses and can improve performance as a result.

- Cache Monitoring and Analysis: Monitor cache hit and miss rates, as well as cache utilization, to identify potential bottlenecks or areas for improvement. Analyzing cache behavior can help fine-tune cache configurations and optimize performance.

- Cache Hierarchy Analysis: Understand the cache hierarchy and the interaction between different cache levels. Analyze the data access patterns and determine the optimal placement of frequently accessed data across different cache levels to maximize performance.
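Time-based expiration and explicit invalidation can be combined in a small time-to-live (TTL) cache. In this Python sketch, `TTLCache` and its methods are hypothetical names. The clock is injectable so that expiry can be demonstrated without waiting, and expired entries are removed lazily when they are next read.

```python
import time

class TTLCache:
    """Cache whose entries expire a fixed number of seconds after being stored."""
    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock   # injectable so expiry can be tested
        self.entries = {}    # key -> (value, expiry_time)

    def put(self, key, value):
        self.entries[key] = (value, self.clock() + self.ttl)

    def get(self, key, default=None):
        item = self.entries.get(key)
        if item is None:
            return default
        value, expires_at = item
        if self.clock() >= expires_at:
            del self.entries[key]  # time-based invalidation: drop the stale entry
            return default
        return value

    def invalidate(self, key):
        """Explicit or event-based invalidation, e.g. when the source data changes."""
        self.entries.pop(key, None)

now = [0.0]  # fake clock for the demonstration
cache = TTLCache(ttl_seconds=30, clock=lambda: now[0])
cache.put("user:1", {"name": "Ada"})
print(cache.get("user:1"))  # {'name': 'Ada'}
now[0] = 31.0
print(cache.get("user:1"))  # None: the entry has expired
```

Choosing the TTL is a trade-off. A shorter TTL reduces how long stale data can be served, while a longer TTL yields more hits.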

By implementing these optimization strategies and continuously monitoring cache performance, developers and system administrators can ensure that the cache is effectively utilized, resulting in improved system performance, reduced latency, and an enhanced user experience.
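For function-level caching in Python, `functools.lru_cache` reports its own statistics through `cache_info()`, which makes hit-rate monitoring straightforward. The `square` function below is only a stand-in for an expensive computation or remote lookup.

```python
from functools import lru_cache

@lru_cache(maxsize=128)
def square(n):
    # Stand-in for an expensive computation or remote lookup (hypothetical).
    return n * n

for n in [1, 2, 3, 1, 2, 1]:
    square(n)

info = square.cache_info()
hit_rate = info.hits / (info.hits + info.misses)
print(info)               # CacheInfo(hits=3, misses=3, maxsize=128, currsize=3)
print(f"{hit_rate:.0%}")  # 50%
```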