Supervised Learning Algorithms

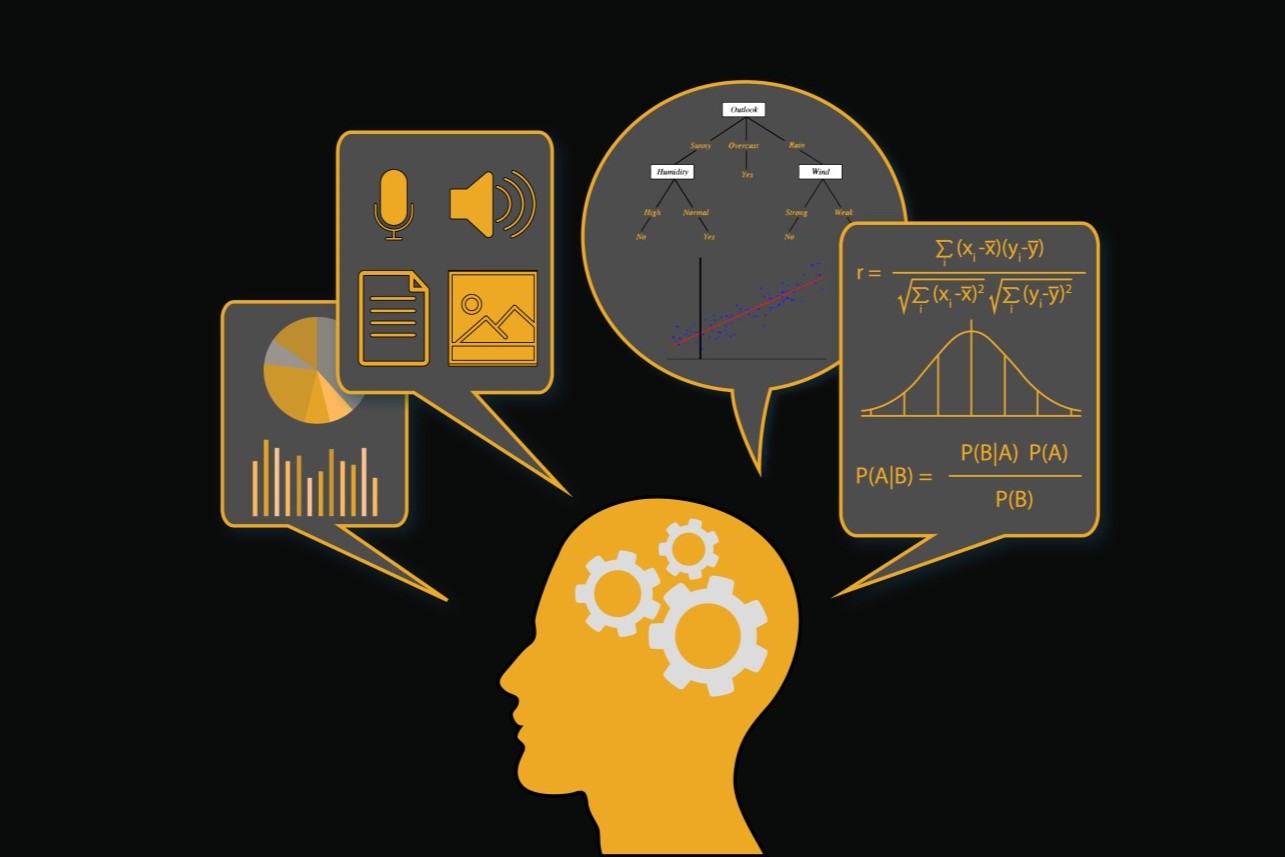

Supervised learning algorithms are a fundamental category of machine learning algorithms. They rely on labeled training data to learn patterns and relationships between input variables (features) and output variables (labels or targets).

These algorithms are called “supervised” because during the training process, they are provided with a set of input-output pairs, with the desired output already known. The goal is for the algorithm to learn from these examples and then be able to predict the correct output for new, unseen input instances.

There are several types of supervised learning algorithms, each with its own strengths and purposes:

- Linear Regression: This algorithm models the relationship between input variables and a continuous output variable by fitting the linear equation that best matches the data, usually in the least-squares sense.

- Logistic Regression: Used for binary classification problems, logistic regression estimates the probability of an instance belonging to a specific class.

- Support Vector Machines (SVM): SVMs try to find the best hyperplane that separates data points from different classes, maximizing the margin between them.

- Decision Trees: Decision trees create a tree-like model of decisions and their possible consequences, making them interpretable and useful for classification and regression tasks.

- Random Forest: This ensemble algorithm combines the predictions of multiple decision trees to improve accuracy and reduce overfitting.

- Naive Bayes: Based on Bayes’ theorem, this algorithm calculates the probability that an input instance belongs to a certain class, assuming that the features are conditionally independent given the class.

- K-Nearest Neighbors (KNN): KNN classifies an instance by finding the majority class among its k nearest neighbors in the feature space.

These are just a few examples of supervised learning algorithms. The choice of algorithm depends on the nature of the problem and the characteristics of the data. It is crucial to select the most appropriate algorithm and tune its hyperparameters to achieve the best results.
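To make one of these concrete, here is a minimal pure-Python sketch of KNN classification. It assumes Euclidean distance and an unweighted majority vote; the function name `knn_predict` and the toy data are illustrative, not taken from any library.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k=3):
    """Label `query` with the majority class among its k nearest training points."""
    # Euclidean distance from the query to every training point, paired with its label
    distances = [(math.dist(x, query), label) for x, label in zip(train_X, train_y)]
    # Keep the k closest points
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # Majority vote over their labels (ties go to the label counted first)
    return Counter(label for _, label in nearest).most_common(1)[0][0]


train_X = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (6.0, 6.0), (6.5, 7.0), (7.0, 6.5)]
train_y = ["a", "a", "a", "b", "b", "b"]
print(knn_predict(train_X, train_y, (1.2, 1.4)))   # a
print(knn_predict(train_X, train_y, (6.8, 6.1)))   # b
```

Because KNN simply stores the training set and does all of its work at prediction time, each query here costs one distance computation per training point; practical implementations often use spatial indexes such as k-d trees to speed this up.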

Unsupervised Learning Algorithms

Unsupervised learning algorithms are a type of machine learning algorithm where the training data is unlabeled, meaning there are no target variables or predefined outputs. The goal of these algorithms is to discover patterns, structures, and relationships present in the data without any prior knowledge.

Here are some commonly used unsupervised learning algorithms:

- k-Means Clustering: This algorithm aims to partition the data into k clusters, with each data point assigned to the cluster whose centroid it is closest to.

- Hierarchical Clustering: Hierarchical clustering creates a hierarchy of clusters by merging or splitting them based on the proximity between data points.

- DBSCAN: Density-Based Spatial Clustering of Applications with Noise (DBSCAN) groups together densely connected data points and identifies outliers as noise points.

- Principal Component Analysis (PCA): PCA is a dimensionality reduction technique that projects the data into a lower-dimensional space while preserving as much of the data’s variance as possible.

- Independent Component Analysis (ICA): ICA attempts to separate a multivariate signal into additive subcomponents that are statistically independent.

- Association Rule Mining: This technique discovers interesting patterns or associations among items in large datasets, often used for market basket analysis.

- Self-Organizing Maps (SOM): SOM is a type of artificial neural network that generates a low-dimensional representation of the input data, preserving the underlying structure.
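Association rule mining is typically built on two measures: the support of an itemset (the fraction of transactions that contain it) and the confidence of a rule A → B (the support of A ∪ B divided by the support of A). The sketch below computes both by brute force on a hypothetical four-basket dataset; real miners such as Apriori or FP-Growth add an efficient search over candidate itemsets, which is not shown here.

```python
def support(transactions, itemset):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = set(itemset)
    return sum(1 for t in transactions if itemset <= set(t)) / len(transactions)


def confidence(transactions, antecedent, consequent):
    """Estimated P(consequent | antecedent); assumes the antecedent occurs at least once."""
    joint = support(transactions, set(antecedent) | set(consequent))
    return joint / support(transactions, antecedent)


transactions = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
]
print(support(transactions, {"bread", "milk"}))        # 0.5
print(confidence(transactions, {"bread"}, {"milk"}))   # 2/3, about 0.667
```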

Unsupervised learning algorithms are valuable in exploratory data analysis, data preprocessing, and pattern discovery. They can help identify hidden structures, detect anomalies, and group similar instances together without any prior knowledge or guidance.

It’s important to note that evaluating the output of unsupervised learning algorithms is often subjective: without ground-truth labels, it usually relies on human interpretation. The insights gained from these algorithms can still provide valuable information for decision-making and further analysis in various domains.

Semi-Supervised Learning Algorithms

Semi-supervised learning algorithms represent a middle ground between supervised and unsupervised learning. They are used when a limited amount of labeled data is available, and a larger amount of unlabeled data is present.

The main idea behind semi-supervised learning is to leverage both labeled and unlabeled data to improve the performance of the learning algorithm. By combining these two types of data, the algorithm can learn better representations and make more accurate predictions.

Here are a few commonly used semi-supervised learning algorithms:

- Self-Training: This approach first trains a model on the small labeled dataset and then uses it to predict labels (pseudo-labels) for the unlabeled data. The most confident predictions are added to the training set, and the model is retrained iteratively.

- Co-Training: Co-training relies on multiple views (feature sets) of the data. Two or more classifiers are trained on different sets of features and exchange information with each other: each classifier labels the unlabeled instances it is most confident about, and those labels are used to train the others.

- Multi-View Learning: In multi-view learning, each view represents a different perspective or representation of the data. The labeled and unlabeled data from different views are used to create a more comprehensive picture of the underlying patterns.

- Generative Models: Generative models, such as generative adversarial networks (GANs) or variational autoencoders (VAEs), can be utilized for semi-supervised learning by learning the underlying distribution of the data and generating new instances.
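The self-training loop described above can be sketched in a few lines. To keep it self-contained, this sketch assumes a deliberately tiny nearest-centroid classifier on 1-D data and a margin-based confidence score; the helper names (`fit_centroids`, `predict_with_confidence`, `self_train`) and the 0.5 threshold are hypothetical choices, and it needs at least two labeled classes.

```python
def fit_centroids(X, y):
    """Nearest-centroid 'model': the mean of the 1-D points in each class."""
    sums, counts = {}, {}
    for x, label in zip(X, y):
        sums[label] = sums.get(label, 0.0) + x
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def predict_with_confidence(centroids, x):
    """Return (label, confidence); confidence is a margin score in [0, 1]."""
    ranked = sorted(centroids.items(), key=lambda item: abs(x - item[1]))
    best_label = ranked[0][0]
    d1 = abs(x - ranked[0][1])   # distance to the closest centroid
    d2 = abs(x - ranked[1][1])   # distance to the runner-up
    # 1.0 when x sits on a centroid, 0.0 when it is equidistant from the top two
    confidence = (d2 - d1) / (d2 + d1) if d1 + d2 > 0 else 0.0
    return best_label, confidence


def self_train(X_lab, y_lab, X_unlab, threshold=0.5, max_rounds=10):
    X_lab, y_lab, pool = list(X_lab), list(y_lab), list(X_unlab)
    for _ in range(max_rounds):
        model = fit_centroids(X_lab, y_lab)
        confident, rest = [], []
        for x in pool:
            label, conf = predict_with_confidence(model, x)
            if conf >= threshold:
                confident.append((x, label))
            else:
                rest.append(x)
        if not confident:
            break  # no pseudo-label passes the threshold, so stop early
        # Add the confident pseudo-labels to the training set; retrain next round
        for x, label in confident:
            X_lab.append(x)
            y_lab.append(label)
        pool = rest
    return fit_centroids(X_lab, y_lab), pool


model, leftover = self_train([0.0, 10.0], ["low", "high"], [1.0, 2.0, 8.0, 9.0, 5.0])
print(model, leftover)   # {'low': 1.0, 'high': 9.0} [5.0]
```

The ambiguous point 5.0 is never pseudo-labeled because it stays equidistant from both centroids; the confidence threshold is what keeps such uncertain guesses from contaminating the training set.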

Semi-supervised learning algorithms are especially valuable in scenarios where obtaining labeled data is expensive, time-consuming, or requires domain expertise. By utilizing the available unlabeled data, these algorithms can leverage the additional information to improve the model’s performance.

However, it’s important to note that the success of semi-supervised learning algorithms heavily relies on the quality and reliability of the labeled data, as well as the assumption that the structure of the unlabeled data is similar to that of the labeled data. Careful exploration and validation are required to ensure the effectiveness and generalizability of the semi-supervised learning approach.

Reinforcement Learning Algorithms

Reinforcement learning algorithms are designed to enable an agent to learn by interacting with an environment and receiving feedback in the form of rewards or penalties. The goal of reinforcement learning is for the agent to maximize its cumulative reward over time by discovering the optimal actions to take in different situations.

In reinforcement learning, the agent learns through a process of trial and error. It explores the environment, takes actions, and receives feedback in the form of rewards based on its actions. The agent then uses this feedback to adjust its strategy and improve its future actions. The aim is to develop an optimal policy that leads to the maximum possible reward.

Here are some commonly used reinforcement learning algorithms:

- Q-Learning: Q-Learning is a model-free algorithm that uses a table, known as a Q-table, to estimate the expected cumulative (discounted) reward of taking each action in each state. The agent learns by updating the Q-values through repeated interactions with the environment.

- Deep Q-Networks (DQN): DQN is an extension of Q-Learning that utilizes deep neural networks as function approximators to handle high-dimensional state spaces. It has been successful in solving complex problems in domains such as robotics and game playing.

- Policy Gradient Methods: These methods directly optimize the agent’s policy by estimating the gradient of the expected cumulative reward. They are particularly useful in continuous action spaces and have shown great success in tasks like robotics and autonomous driving.

- Actor-Critic Methods: Actor-Critic methods combine the advantages of both value-based and policy-based methods. They maintain both a value function for evaluation (the critic) and a policy for action selection (the actor). Using the critic’s estimates to guide the actor’s updates can reduce their variance, which often leads to more stable and faster learning.
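The core of Q-Learning is the update Q(s, a) ← Q(s, a) + α [r + γ · max over a′ of Q(s′, a′) - Q(s, a)]. The sketch below applies it on a hypothetical five-state corridor in which the only reward is 1.0 for reaching the right-hand end. The environment, the ε-greedy exploration rate, and the values of α (learning rate) and γ (discount factor) are illustrative assumptions.

```python
import random

N_STATES = 5        # corridor states 0..4; state 4 is the goal (terminal)
ACTIONS = (0, 1)    # 0 = move left, 1 = move right


def step(state, action):
    """Hypothetical environment: reward 1.0 only on reaching the goal."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]   # Q-table: q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore at random (also when the estimates are tied)
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])
            nxt, reward, done = step(state, action)
            # Move Q(s, a) toward r + gamma * max_a' Q(s', a'); no bootstrap from the goal
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q


q = q_learning()
greedy_policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(greedy_policy)   # [1, 1, 1, 1]: always move right toward the goal
```

The Q-table here has one row per state and one column per action, which is exactly what stops scaling for large or continuous state spaces and motivates function approximators such as the neural networks used in DQN.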

Reinforcement learning algorithms have been successful in solving complex problems that involve sequential decision-making, such as controlling robots, optimizing resource allocation, and playing games. The ability to learn from interactions with the environment without explicit supervision makes reinforcement learning a powerful tool in various domains.

However, reinforcement learning can be challenging due to the trade-off between exploration and exploitation, long training times, and the need for a well-defined reward function. Careful design and exploration of reward structures, as well as efficient exploration strategies, are crucial for achieving optimal performance.

Classification Algorithms

Classification algorithms are widely used in machine learning to categorize or classify data into predefined classes or categories. The goal of these algorithms is to train a model that can accurately assign new, unseen data instances to the correct class based on their features or attributes.

Classification is a supervised learning task, meaning that it requires labeled training data, where each instance is associated with the correct class. The classifier learns patterns and relationships in the labeled data and uses them to make predictions on new, unseen instances.

Here are some commonly used classification algorithms:

- Logistic Regression: Logistic regression models the relationship between input variables and binary or categorical output variables. It estimates the probability of an instance belonging to a particular class.

- Naive Bayes: Naive Bayes is based on Bayes’ theorem and assumes that features are conditionally independent given the class. It is simple, fast, and effective for text categorization and spam detection tasks.

- Decision Trees: Decision trees create a tree-like model of decisions and their possible consequences. Each internal node represents a test on a feature, each branch an outcome of that test, and each leaf node a class or outcome.

- Random Forest: Random Forest is an ensemble algorithm that combines multiple decision trees and makes predictions based on the majority vote or averaging of the individual trees. It improves accuracy and reduces the risk of overfitting.

- Support Vector Machines (SVM): SVMs find the optimal hyperplane that separates data points from different classes, maximizing the margin between them. They can handle both linear and non-linear classification tasks.

- K-Nearest Neighbors (KNN): KNN classifies an instance by finding the majority class among its k nearest neighbors in the feature space. It is simple and effective, especially with small datasets.

- Neural Networks: Neural networks consist of interconnected nodes (neurons) organized in layers. They can learn complex patterns and relationships and are often used for image classification and natural language processing tasks.

These algorithms have different characteristics and are suitable for various types of data and classification problems. The choice of algorithm depends on factors such as the nature of the data, the desired interpretability, the computational resources available, and the size of the dataset.

It is important to evaluate and fine-tune the performance of classification algorithms by considering metrics such as accuracy, precision, recall, and F1 score. Choosing the right evaluation metric helps in selecting the most appropriate algorithm and optimizing its parameters for the task at hand.
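Precision, recall, and F1 all derive from counts of true positives (TP), false positives (FP), and false negatives (FN): precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 is their harmonic mean. A minimal binary-classification sketch, assuming a single chosen positive class and returning 0.0 when a ratio is undefined (a common convention); the function name is hypothetical, and libraries such as scikit-learn's `sklearn.metrics` module provide equivalent functions.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy plus precision, recall and F1 for one chosen positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false positives
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # false negatives
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0   # share of predicted positives that are right
    recall = tp / (tp + fn) if tp + fn else 0.0      # share of actual positives that are found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 1, 1, 0]
print(classification_metrics(y_true, y_pred))
# accuracy 0.625, precision 0.6, recall 0.75, F1 about 0.667
```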

Regression Algorithms

Regression algorithms are a type of supervised learning algorithm used to predict continuous numerical values based on input variables. They are commonly used in various domains, such as finance, economics, healthcare, and engineering, to estimate and forecast numerical outcomes.

Regression models aim to capture the relationship between the input variables (also known as features or predictors) and the output variable (also called the target or response variable). The goal is to find the best-fitting line or curve that can predict the target variable based on the given set of input features.

Here are some commonly used regression algorithms:

- Linear Regression: Linear regression is a simple yet powerful algorithm that assumes a linear relationship between the input variables and the output variable. It calculates the best-fit line that minimizes the sum of squared errors.

- Multiple Linear Regression: Multiple linear regression extends linear regression to handle multiple input variables. It models the linear relationship between multiple predictors and the target variable.

- Polynomial Regression: Polynomial regression fits a polynomial equation to the data, allowing for non-linear relationships between the predictors and the target variable.

- Ridge Regression: Ridge regression is a regularized form of linear regression that adds an L2 penalty (the sum of squared coefficients) to the loss function. This shrinks the coefficients and reduces the impact of irrelevant or highly correlated features.

- Lasso Regression: Lasso regression, like ridge regression, adds a regularization term, but it uses an L1 penalty (the sum of absolute coefficient values). This can set some coefficients exactly to zero, so lasso also performs feature selection.

- Elastic Net Regression: Elastic net regression combines ridge and lasso regression, providing a balance between the two by controlling the contribution of the L1 and L2 penalties.

- Decision Tree Regression: Decision tree regression constructs a tree-like model that predicts the target variable based on a series of decisions and conditions.

- Random Forest Regression: Random forest regression combines the predictions of multiple decision trees to improve accuracy and minimize overfitting.

- Support Vector Regression (SVR): Support vector regression extends SVM to regression problems by fitting a function that keeps as many training points as possible within a tolerance margin (epsilon) around it, penalizing only the points that fall outside that margin.
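For a single input feature, the least-squares line has a closed form: slope b = Σ(x - x̄)(y - ȳ) / Σ(x - x̄)² and intercept a = ȳ - b·x̄. Adding a ridge penalty λb² to the squared error changes the slope to Σ(x - x̄)(y - ȳ) / (Σ(x - x̄)² + λ), which shrinks it toward zero. The sketch below implements both, assuming (as is common) that the intercept is not penalized; the function name and data are illustrative, and with several features the same idea is usually expressed with matrix algebra instead.

```python
def fit_line(xs, ys, ridge_lambda=0.0):
    """Fit y = a + b*x by least squares; ridge_lambda > 0 adds an L2 penalty on b only."""
    n = len(xs)
    x_mean, y_mean = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / (sxx + ridge_lambda)    # ridge_lambda = 0 gives ordinary least squares
    intercept = y_mean - slope * x_mean   # the intercept is left unpenalized
    return intercept, slope


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]
print(fit_line(xs, ys))                    # about (1.09, 1.97)
print(fit_line(xs, ys, ridge_lambda=5.0))  # slope shrunk to about 1.31
```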

These regression algorithms have different strengths and assumptions, which make them suitable for different types of datasets and target variables. Choosing the right algorithm and optimizing its parameters can lead to accurate and reliable predictions.

Common metrics for evaluating regression models include mean squared error (MSE), root mean squared error (RMSE), mean absolute error (MAE), and the coefficient of determination (R-squared). These metrics provide insights into the model’s performance and its ability to capture the variation in the target variable.
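These metrics follow directly from the residuals e = y - ŷ: MSE is the mean of e², RMSE is its square root (in the same units as the target), MAE is the mean of |e|, and R² = 1 - SS_res / SS_tot compares the model’s squared error with that of always predicting the mean. A small sketch using a hypothetical helper, assuming the true values are not all equal (otherwise R² is undefined):

```python
import math


def regression_metrics(y_true, y_pred):
    """MSE, RMSE, MAE and R^2 (assumes y_true is not constant)."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)                 # residual sum of squares
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total sum of squares
    mse = ss_res / n
    return {
        "mse": mse,
        "rmse": math.sqrt(mse),
        "mae": sum(abs(e) for e in errors) / n,
        "r2": 1 - ss_res / ss_tot,
    }


print(regression_metrics([3.0, 5.0, 7.0, 9.0], [2.5, 5.0, 7.5, 10.0]))
# mse 0.375, rmse about 0.612, mae 0.5, r2 about 0.925
```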

Clustering Algorithms

Clustering algorithms are unsupervised learning techniques that group similar data points into clusters based on their characteristics or proximity to one another. These algorithms help uncover hidden patterns and structures within datasets without prior knowledge of the class labels or target variables.

Clustering is useful for various applications, such as customer segmentation, anomaly detection, image segmentation, and document categorization. It helps in identifying groups or clusters of data points that share common characteristics or exhibit similar behavior.

Here are some commonly used clustering algorithms:

- K-Means: K-Means is one of the most popular clustering algorithms. It partitions the data into k clusters by minimizing the total within-cluster sum of squares. Each cluster is represented by its centroid, which is the mean of the data points in that cluster.

- Hierarchical Clustering: Hierarchical clustering builds a hierarchy of clusters by repeatedly merging or splitting them based on the similarity or distance between data points. It can be agglomerative (bottom-up) or divisive (top-down).

- DBSCAN: Density-Based Spatial Clustering of Applications with Noise (DBSCAN) groups together dense areas of data points and identifies outliers as noise points. It does not require the number of clusters to be specified in advance.

- Gaussian Mixture Models (GMM): GMM assumes that the data points are generated from a mixture of Gaussian distributions. It assigns each data point a probability of belonging to each cluster (a soft assignment), with the mixture parameters usually estimated by the expectation-maximization (EM) algorithm.

- Agglomerative Clustering: Agglomerative clustering starts by considering each data point as a separate cluster and then iteratively merges the most similar clusters until a stopping criterion is met.

- Spectral Clustering: Spectral clustering uses the eigenvectors of a similarity matrix to embed the data points and then divides them into clusters. It is effective for clusters with non-convex shapes that are not linearly separable.

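- Mean Shift: Mean Shift iteratively moves each point toward the mean of the points in its neighborhood, so that it climbs toward a mode (a local maximum of the data density). Points that converge to the same mode form a cluster, so the number of clusters does not need to be specified in advance.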
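The K-Means procedure described above is usually run as Lloyd’s algorithm, which alternates an assignment step and a centroid-update step until the centroids stop moving. A minimal sketch, assuming Euclidean distance and initialization from k randomly chosen data points (practical libraries often default to the smarter k-means++ initialization instead); the function name and toy data are illustrative.

```python
import math
import random


def kmeans(points, k, iterations=100, seed=0):
    """Lloyd's algorithm: alternate assignment and centroid-update steps."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)   # initialise with k distinct data points
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its assigned points
        new_centroids = []
        for i, members in enumerate(clusters):
            if members:
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[i])   # keep an empty cluster's centroid
        if new_centroids == centroids:
            break   # converged: the assignments can no longer change
        centroids = new_centroids
    return centroids, clusters


points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.5)]
centroids, clusters = kmeans(points, k=2)
print(sorted(centroids))   # [(1.5, 1.5), (8.5, 8.5)]
```

Each step can only lower (or keep) the within-cluster sum of squares, so the loop terminates, but only at a local optimum; running it from several random seeds and keeping the best result is a common safeguard.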

These clustering algorithms have different strengths and assumptions, making them suitable for different types of datasets and clustering objectives. The choice of algorithm depends on factors such as the shape of the clusters, the dimensionality of the data, and the desired interpretability.

Clustering algorithms are evaluated based on metrics like silhouette coefficient, cohesion, separation, and the visual inspection of cluster properties. However, it’s important to note that the evaluation of clustering results is often subjective and requires domain knowledge for meaningful interpretation.
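The silhouette coefficient of a single point is s = (b - a) / max(a, b), where a is its mean distance to the other points in its own cluster and b is its mean distance to the points of the nearest other cluster. Values near 1 indicate a well-placed point, while negative values suggest it may belong to a different cluster. A minimal sketch of the average over all points, assuming Euclidean distance, at least two clusters, and a score of 0 for points in singleton clusters (a common convention); the function name `mean_silhouette` is hypothetical.

```python
import math


def mean_silhouette(points, labels):
    """Average silhouette coefficient (Euclidean distance); needs at least two clusters."""
    clusters = {}
    for p, label in zip(points, labels):
        clusters.setdefault(label, []).append(p)
    scores = []
    for p, label in zip(points, labels):
        own = clusters[label]
        if len(own) == 1:
            scores.append(0.0)   # convention: singleton clusters score 0
            continue
        # a: mean distance to the other members of p's own cluster (p itself adds 0)
        a = sum(math.dist(p, q) for q in own) / (len(own) - 1)
        # b: smallest mean distance from p to the members of any other cluster
        b = min(
            sum(math.dist(p, q) for q in members) / len(members)
            for other, members in clusters.items()
            if other != label
        )
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return sum(scores) / len(scores)


points = [(0.0, 0.0), (0.0, 1.0), (10.0, 0.0), (10.0, 1.0)]
print(mean_silhouette(points, [0, 0, 1, 1]))   # well separated: about 0.90
print(mean_silhouette(points, [0, 1, 0, 1]))   # poor grouping: negative
```

A score like this is still only a proxy: it favors compact, well-separated clusters, which may or may not match the grouping that is meaningful in a given domain.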

Dimensionality Reduction Algorithms

Dimensionality reduction algorithms are techniques used to reduce the number of input variables or features in a dataset while retaining most of the relevant information. By reducing the dimensionality, these algorithms make it easier to analyze and visualize the data, improve computational efficiency, and mitigate the curse of dimensionality.

High-dimensional data often suffers from issues such as increased complexity, overfitting, and difficulty in interpretation. Dimensionality reduction methods address these challenges by capturing the most important underlying structure and patterns in the data, while discarding the less relevant or redundant features.

Here are some commonly used dimensionality reduction algorithms:

- Principal Component Analysis (PCA): PCA is a widely used linear dimensionality reduction technique. It transforms the data into a new set of uncorrelated variables called principal components, ordered by the amount of variance they explain.

- t-SNE (t-Distributed Stochastic Neighbor Embedding): t-SNE is a non-linear technique that focuses on preserving the local structure of data points in a lower-dimensional space. It is often used for visualizing high-dimensional data clusters.

- Autoencoders: Autoencoders are neural network-based models that learn to reconstruct the input data from a compressed representation or bottleneck layer. By training the model to minimize the reconstruction error, the autoencoder effectively learns a lower-dimensional representation of the data.

- Linear Discriminant Analysis (LDA): LDA is a supervised technique that can be used for both dimensionality reduction and classification. It seeks projections that maximize the separation between class means while minimizing the within-class variance.

- Isomap: Isomap is a non-linear dimensionality reduction method that preserves the geodesic distances between data points. It constructs a low-dimensional representation of the data by considering the intrinsic geometry of the dataset.

- Locally Linear Embedding (LLE): LLE is a technique that seeks to preserve the local relationships between neighboring data instances. It captures the underlying manifold structure by reconstructing each data point as a linear combination of its neighboring points.
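The first principal component in PCA is the leading eigenvector of the data’s covariance matrix, and its eigenvalue is the variance captured along that direction. The sketch below finds it with power iteration (repeatedly multiplying a vector by the covariance matrix and renormalizing). This is an illustrative choice under the assumption of a small, dense dataset; production PCA implementations typically use a singular value decomposition instead.

```python
import math


def first_principal_component(rows, iterations=100):
    """Leading principal component via power iteration on the sample covariance matrix."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # d x d sample covariance matrix
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)] for i in range(d)]
    v = [1.0] * d   # start vector (assumed not orthogonal to the leading eigenvector)
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient v^T C v: the variance explained by this component
    variance = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
    return v, variance


rows = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.9), (5.0, 5.1)]
component, variance = first_principal_component(rows)
print(component, variance)   # direction close to (0.71, 0.71), variance about 5.01
```

Projecting each centered row onto `component` reduces this 2-D data to one dimension while keeping almost all of its variance, since the points lie close to a line.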

Dimensionality reduction algorithms can help in data visualization, noise reduction, feature extraction, and preprocessing for downstream tasks. However, it’s important to note that the reduction in dimensionality comes at the cost of losing some information, and the interpretability of the reduced features should be carefully considered.

Choosing the right dimensionality reduction algorithm depends on factors such as the data distribution, the desired level of preservation of global or local relationships, and the computational resources available.

Ensemble Algorithms

Ensemble algorithms are machine learning techniques that combine multiple individual models to make more accurate and robust predictions. By aggregating the predictions of multiple models, ensemble algorithms can overcome the limitations of individual models and achieve higher performance.

The idea behind ensemble methods is to leverage the diversity and complementary strengths of different models by combining their predictions. Ensemble algorithms can improve generalization, reduce overfitting, and increase the stability of the predictions.

Here are some commonly used ensemble algorithms:

- Bagging (Bootstrap Aggregating): Bagging is an ensemble technique that trains multiple models on different bootstrap samples of the training data (random samples drawn with replacement). The final prediction is obtained by aggregating the predictions of individual models, typically through majority voting (for classification) or averaging (for regression).

- Random Forest: Random Forest is an ensemble algorithm that combines the predictions of multiple decision trees. Each tree is trained on a bootstrap sample of the data and considers only a random subset of features at each split; this decorrelates the trees, reducing overfitting and improving accuracy.

- Boosting: Boosting is a sequential ensemble technique that trains models iteratively, with each subsequent model focusing on the errors of the previous models (for example, the instances they misclassified). The final prediction is typically a weighted combination of the individual models, with the weights determined during training.

- AdaBoost (Adaptive Boosting): AdaBoost is a popular boosting algorithm that assigns higher weights to misclassified instances, increasing their importance in subsequent iterations. It combines the predictions of weak learners (e.g., decision trees) to obtain the final prediction.

- Gradient Boosting: Gradient Boosting is another boosting algorithm that builds an ensemble of models sequentially, with each new model trained to reduce the errors of the current ensemble. It minimizes the loss function by gradient descent in function space: each new model is fitted to the negative gradient of the loss with respect to the ensemble’s current predictions (for squared error, these are simply the residuals).

- Voting Classifiers: Voting classifiers combine the predictions of different classification models by majority voting or weighted voting. They can be hard voting (based on majority) or soft voting (based on probabilities).

- Stacking: Stacking is a meta-ensemble technique that combines the predictions of multiple models by training a higher-level model, often called a meta-model, on the predictions of individual models. It learns to make final predictions based on the outputs of the base models.
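To illustrate the gradient boosting idea above, here is a minimal sketch for 1-D regression under squared loss, where the negative gradient with respect to the current predictions is just the residual. It assumes single-split regression stumps as weak learners, at least two distinct input values, and a fixed learning rate; the function names, the 50 rounds, and the 0.1 learning rate are hypothetical choices.

```python
def fit_stump(xs, targets):
    """Best single-split regression stump on 1-D inputs, minimising squared error."""
    best = None
    for threshold in sorted(set(xs))[:-1]:      # candidate splits (needs >= 2 distinct xs)
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        err = sum((t - left_mean) ** 2 for t in left) + sum((t - right_mean) ** 2 for t in right)
        if best is None or err < best[0]:
            best = (err, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.1):
    """Gradient boosting for squared loss L = (y - F)^2 / 2 with stumps as weak learners."""
    base = sum(ys) / len(ys)          # F_0: the constant that minimises squared loss
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        # Negative gradient of L w.r.t. the current prediction F(x) is the residual y - F(x)
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Take a small step in function space toward the fitted negative gradient
        preds = [p + learning_rate * stump(x) for p, x in zip(preds, xs)]
    return lambda x: base + learning_rate * sum(s(x) for s in stumps)


xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
model = gradient_boost(xs, ys)
print(round(model(1), 3), round(model(6), 3))   # close to 1.0 and 5.0
```

The small learning rate means each stump corrects only part of the remaining error, which is why many rounds are needed; this shrinkage is a common way to trade training time for better generalization.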

Ensemble algorithms have proven to be highly effective in various domains, including classification, regression, and anomaly detection. Their ability to leverage the diversity and complementary strengths of individual models leads to improved performance and more robust predictions.

However, it’s important to note that ensemble algorithms can be computationally expensive and require careful tuning of hyperparameters. The choice of ensemble algorithm depends on the type of data, the size of the dataset, the model types being combined, and the desired balance between accuracy and computational complexity.

Deep Learning Algorithms

Deep learning algorithms are a subset of machine learning algorithms loosely inspired by the structure and function of the human brain. These algorithms use artificial neural networks with many layers (deep neural networks) to model and learn complex patterns and relationships in data.

Deep learning algorithms have shown remarkable success in various domains such as computer vision, natural language processing, speech recognition, and recommendation systems. They are capable of automatically learning hierarchical representations from the data, allowing them to extract high-level features and make accurate predictions.

Here are some commonly used deep learning algorithms:

- Feedforward Neural Networks: Feedforward neural networks, also known as multi-layer perceptrons (MLPs), consist of multiple layers of interconnected neurons. Information flows in one direction, from the input layer through one or more hidden layers to the output layer, with no cycles.

- Convolutional Neural Networks (CNNs): CNNs are designed for analyzing visual data and have revolutionized computer vision tasks. They consist of convolutional layers that extract features from images and pooling layers that downsample the features.

- Recurrent Neural Networks (RNNs): RNNs are used for processing and modeling sequence data. Their recurrent connections carry a hidden state from one time step to the next, making them suitable for tasks like language modeling and speech recognition.

- Long Short-Term Memory (LSTM): LSTM is a type of RNN designed to mitigate the vanishing gradient problem. Its memory cell, regulated by input, forget, and output gates, can maintain information over long periods, making it effective for tasks that involve long-term dependencies.

- Generative Adversarial Networks (GANs): GANs consist of two neural networks, a generator and a discriminator, that are trained in a competitive manner. GANs are used for tasks such as image generation, style transfer, and data augmentation.

- Deep Reinforcement Learning: Deep reinforcement learning combines deep neural networks with reinforcement learning techniques. It has been successful in learning policies for complex tasks, such as playing games and controlling robots, based on trial and error.
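A feedforward network, the first architecture in the list above, is a composition of layers, each applying an activation function to a weighted sum of its inputs. The sketch below runs a forward pass through a tiny 2-2-1 network whose weights are set by hand (not trained) so that it computes XOR, a function that no single linear layer can represent. The helper names `dense` and `forward` and the specific weights are illustrative assumptions.

```python
def relu(z):
    return max(0.0, z)


def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: activation(W x + b), with one row of W per output unit."""
    outputs = [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]
    return [activation(z) for z in outputs] if activation else outputs


# Hand-set weights (not learned) for a 2-2-1 network that computes XOR
LAYERS = [
    ([[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0], relu),   # hidden layer with ReLU units
    ([[1.0, -2.0]], [0.0], None),                    # linear output layer
]


def forward(x):
    """Pass the input through each layer in turn; no cycles, so no state is kept."""
    for weights, biases, activation in LAYERS:
        x = dense(x, weights, biases, activation)
    return x[0]


for x in ([0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]):
    print(x, forward(x))   # outputs 0.0, 1.0, 1.0, 0.0
```

In practice these weights would be learned by backpropagation rather than chosen by hand; the example only shows how the hidden ReLU layer creates a non-linear representation that a final linear layer can then separate.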

Deep learning algorithms excel at handling high-dimensional data, learning complex patterns, and achieving state-of-the-art performance in various tasks. However, they often require large amounts of labeled training data and computational power for training.

Choosing the right deep learning algorithm and architecture depends on the nature of the data, the specific task or problem, and the available resources. Fine-tuning the hyperparameters and optimizing the training process are crucial for obtaining optimal performance.