Overview of Machine Learning

Machine learning is a rapidly growing field that focuses on enabling computers to learn and make predictions or decisions without being explicitly programmed. It is a subset of artificial intelligence (AI) that empowers machines to automatically learn and improve from experience.

The goal of machine learning is to develop algorithms and models that can analyze and interpret vast amounts of data to recognize patterns, make predictions, or take actions. By leveraging statistical and computational techniques, machine learning enables computers to uncover insights, make predictions, and automate complex tasks.

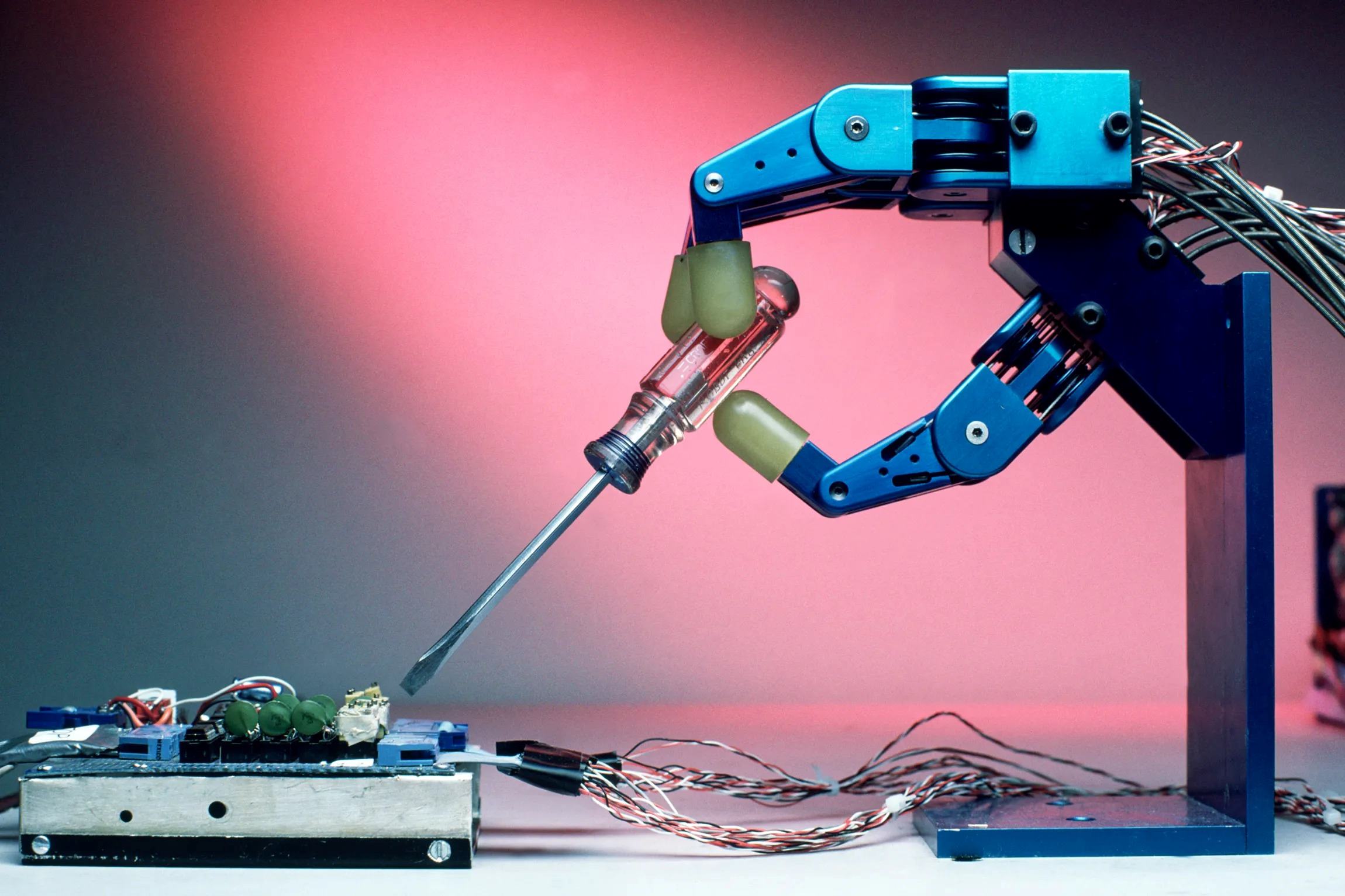

Machine learning is applied in various domains, including image recognition, speech recognition, natural language processing, recommender systems, fraud detection, autonomous vehicles, and robotics. It has revolutionized industries such as healthcare, finance, marketing, and manufacturing, enhancing decision-making processes and enabling organizations to gain a competitive edge.

The two most widely used types of machine learning are supervised learning and unsupervised learning; reinforcement learning, described later, is a third major paradigm. In supervised learning, models are trained on labeled data, where inputs are paired with their corresponding outputs. The model learns from these examples and can make predictions on new, unseen data. Unsupervised learning, on the other hand, deals with unlabeled data and focuses on finding patterns or structures within the data.

To develop machine learning models, a variety of algorithms and techniques are used. These include decision trees, random forests, support vector machines, naive Bayes, neural networks, and deep learning algorithms. Each algorithm has its strengths and weaknesses, and the choice depends on the specific problem and the available data.

Machine learning requires a solid understanding of mathematics, statistics, and programming. Proficiency in programming languages like Python, R, or Java is crucial for implementing machine learning algorithms and working with libraries and frameworks specifically designed for machine learning tasks.

Overall, machine learning plays a pivotal role in extracting meaningful insights from complex data, automating processes, and making accurate predictions. It continues to push the boundaries of what is possible and is set to shape the future of technology and innovation.

Importance of Machine Learning

Machine learning has become increasingly important in today’s data-driven world. Its ability to extract insights, make predictions, and automate complex tasks has made it an invaluable tool across various industries. Here, we explore the key reasons why machine learning is of paramount importance.

Firstly, machine learning enables organizations to harness the vast amounts of data they generate and collect. In today’s digital age, data is being generated at an unprecedented rate, and traditional methods of data analysis are often inadequate. Machine learning algorithms can analyze large datasets quickly and efficiently, uncovering patterns and trends that might otherwise go unnoticed. This newfound knowledge can drive better decision-making, optimize business processes, and identify lucrative opportunities.

Secondly, machine learning is integral to the development of AI-driven applications and technologies. By leveraging machine learning algorithms, developers can build intelligent systems that can understand and interpret human language, recognize images and objects, and even mimic human behavior. This has widespread applications in natural language processing, computer vision, and robotics, enabling advancements in various fields such as healthcare, finance, and transportation.

Another area where machine learning has significant impact is predictive analytics. By analyzing historical data and identifying patterns, machine learning models can forecast future outcomes. This has profound implications in industries such as finance, where machine learning algorithms are used to attempt to forecast stock market movements, detect fraud, and optimize investment strategies. In healthcare, machine learning can assist in diagnosing diseases, forecasting patient outcomes, and personalizing treatment plans.

Furthermore, machine learning plays a crucial role in automating repetitive and complex tasks, freeing up humans to focus on more strategic and creative endeavors. From self-driving cars to chatbots and virtual assistants, machine learning enables automation and streamlines processes, resulting in increased productivity and efficiency.

Machine learning also holds the potential to address societal challenges such as climate change, healthcare accessibility, and poverty. By leveraging machine learning to analyze data related to these issues, policymakers and researchers can gain valuable insights and devise effective strategies to tackle these problems at a larger scale.

In summary, the importance of machine learning cannot be overstated. Its ability to analyze large datasets, automate tedious tasks, predict future outcomes, and drive innovation makes it an indispensable tool across various sectors. As technology continues to advance, machine learning will continue to shape the way we live, work, and interact with the world around us.

Understanding the Basics of Machine Learning

Machine learning is a branch of artificial intelligence that focuses on building algorithms and models that enable computers to learn and make predictions or decisions without being explicitly programmed. To grasp the basics of machine learning, it’s important to understand key concepts and terms associated with this field.

One fundamental concept in machine learning is training data: the collection of examples from which a model learns. In supervised learning, each training example pairs known inputs with a known output (a label). Trained on this labeled data, the model learns patterns and relationships that let it make predictions or decisions on unseen data.

A crucial distinction in machine learning is between supervised learning and unsupervised learning. In supervised learning, the model is trained on labeled data, where the inputs are paired with their corresponding outputs. The model uses this data to learn patterns and relationships, allowing it to make predictions on new, unseen data. Unsupervised learning, on the other hand, deals with unlabeled data and focuses on finding patterns or structures within the data without any guidance.
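To make the supervised case concrete, here is a minimal sketch that learns from labeled examples using a nearest-centroid classifier, chosen only because it is short. The function names and the small dataset are hypothetical illustrations, not part of any library.

```python
import math
from collections import defaultdict


def fit_nearest_centroid(X, y):
    """Learn one centroid (mean feature vector) per class label from labeled data."""
    sums = defaultdict(lambda: [0.0] * len(X[0]))
    counts = defaultdict(int)
    for features, label in zip(X, y):
        counts[label] += 1
        for i, value in enumerate(features):
            sums[label][i] += value
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def predict(centroids, features):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


# Hypothetical labeled training data: (hours studied, hours slept) -> exam outcome.
X_train = [[1.0, 4.0], [2.0, 5.0], [8.0, 7.0], [9.0, 8.0]]
y_train = ["fail", "fail", "pass", "pass"]

centroids = fit_nearest_centroid(X_train, y_train)
print(predict(centroids, [7.5, 6.0]))  # prediction for an unseen input
```

Because the labels are part of the training data, the model's answers can be checked against known outcomes; an unsupervised method would receive only `X_train` and would have to discover structure on its own.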

Machine learning algorithms can be further categorized into different types based on their functionality. Classification algorithms are used when the output is a category or label. For example, email spam filtering is a common application of classification, where emails are classified as spam or not spam. Regression algorithms are employed when the output is a continuous value, such as predicting housing prices based on various features like location and size.
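For the regression case, one of the smallest useful examples is a single-feature linear regression fitted by ordinary least squares. The sketch below assumes hypothetical data (floor area in square meters and price in thousands) and uses the standard closed-form formulas for the slope and intercept rather than any particular library.

```python
def fit_simple_linear_regression(xs, ys):
    """Return (slope, intercept) minimizing the squared error of y ≈ slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # Least-squares slope: sum of cross-deviations over sum of squared x-deviations.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical training data: floor area (m²) -> price (thousands).
sizes = [50.0, 80.0, 100.0, 120.0]
prices = [150.0, 240.0, 300.0, 360.0]

slope, intercept = fit_simple_linear_regression(sizes, prices)
print(slope * 90.0 + intercept)  # predicted price for an unseen 90 m² home
```

The output is a continuous number rather than a category, which is what distinguishes regression from classification. This toy data is perfectly linear; real data contains noise, so the fitted line only approximates it.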

Clustering algorithms are used in unsupervised learning to group similar data points together based on their characteristics. This can uncover hidden structures in the data and provide insights into different clusters or segments. Reinforcement learning is a separate learning paradigm rather than an algorithm type: an agent learns to take actions in an environment so as to maximize a cumulative reward. This type of learning is commonly used in robotics and game-playing applications.
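Clustering can be sketched with k-means (Lloyd's algorithm), which alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points. This version assumes Euclidean distance and, for simplicity and reproducibility, uses the first k points as initial centroids; production implementations typically use better initialization such as k-means++. The data points are hypothetical.

```python
import math


def k_means(points, k, iterations=100):
    """Group unlabeled points into k clusters using Lloyd's algorithm."""
    # Simple deterministic initialization: the first k points become the centroids.
    centroids = [list(p) for p in points[:k]]
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for i, members in enumerate(clusters):
            if members:
                new_centroids.append([sum(dim) / len(members) for dim in zip(*members)])
            else:
                new_centroids.append(centroids[i])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: centroids stopped moving
        centroids = new_centroids
    return centroids, clusters


# Hypothetical unlabeled 2-D data with two visible groups.
points = [[1.0, 1.0], [8.0, 8.0], [1.5, 2.0], [9.0, 8.5], [0.5, 1.5], [8.5, 9.0]]
centroids, clusters = k_means(points, k=2)
print(centroids)
```

No labels are supplied; the grouping emerges only from distances between points, which is what makes this an unsupervised method.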

Machine learning models rely on features, which are individual measurable properties or characteristics of the data. Feature selection identifies the features that contribute most to the model's accuracy, while feature engineering creates new features or transforms existing ones. Dimensionality reduction techniques can also be used to discard irrelevant features or compress redundant ones into fewer dimensions.
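As a simple illustration of removing uninformative features, the sketch below drops any column whose variance does not exceed a threshold, since a constant column cannot help a model distinguish between examples. The function name and dataset are hypothetical; scikit-learn provides a comparable `VarianceThreshold` transformer in `sklearn.feature_selection`.

```python
from statistics import pvariance


def drop_low_variance_features(rows, names, threshold=0.0):
    """Keep only the feature columns whose population variance exceeds `threshold`."""
    columns = list(zip(*rows))  # transpose rows into per-feature columns
    keep = [i for i, column in enumerate(columns) if pvariance(column) > threshold]
    return [[row[i] for i in keep] for row in rows], [names[i] for i in keep]


# Hypothetical housing data; every row has the same country code, so it carries no signal.
names = ["size_m2", "rooms", "country_code"]
rows = [
    [50, 2, 1],
    [80, 3, 1],
    [120, 4, 1],
]
filtered_rows, kept_names = drop_low_variance_features(rows, names)
print(kept_names)
```

Variance depends on each feature's units, so a non-zero threshold is only meaningful after features have been put on comparable scales.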

Evaluation is a critical step in machine learning. It involves assessing the performance of the model on data it did not see during training. Common metrics for classification include accuracy, precision, recall, and F1-score, while regression problems typically use metrics such as mean squared error. It is important to select metrics that align with the specific problem and the desired outcome.
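The classification metrics named above can be computed directly from counts of true positives, false positives, and false negatives. The sketch below assumes a binary task with the positive class encoded as `1`; the labels and predictions are hypothetical spam-filter outputs.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false positives
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # false negatives
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical spam-filter results: 1 = spam, 0 = not spam.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(classification_metrics(y_true, y_pred))
```

On these hypothetical labels, accuracy is 0.8 while precision, recall, and F1 are all 0.75, a reminder that accuracy alone does not show how well the positive class is handled.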

To implement machine learning algorithms, programming languages such as Python or R are commonly used. These languages provide extensive libraries and frameworks that make it easier to work with machine learning models and handle large datasets.

In summary, understanding the basics of machine learning involves familiarizing oneself with concepts such as training data, supervised and unsupervised learning, algorithm types, feature selection, evaluation metrics, and programming languages. By grasping these fundamentals, you will have a solid foundation to delve deeper into the world of machine learning and explore its vast possibilities.

Choosing the Right Programming Language for Machine Learning

Choosing the right programming language is crucial when embarking on a journey into the world of machine learning. The programming language you choose will determine the ease of implementation, the availability of libraries and frameworks, and the overall development experience. Here, we explore some popular programming languages for machine learning and their key features to help you make an informed choice.

Python is widely regarded as one of the best languages for machine learning. Its simplicity, readability, and extensive ecosystem make it an ideal choice for beginners and experts alike. Python is equipped with numerous libraries for scientific computing and machine learning, such as NumPy, Pandas, matplotlib, and scikit-learn. These libraries provide efficient tools for data manipulation, numerical computations, and implementing machine learning algorithms. Additionally, with frameworks like TensorFlow and PyTorch, Python offers powerful deep learning capabilities, enabling the development of complex neural networks.

R is another popular language for machine learning, particularly in the field of statistics. It provides a wide array of packages dedicated to data analysis and machine learning, such as caret, mlr, and randomForest. R’s strong statistical capabilities and visualization tools make it a preferred choice for researchers and data scientists working on analytical tasks. However, compared to Python, R can be more challenging for beginners due to its syntax and learning curve.

Java is a versatile and widely used programming language that is well-suited for building enterprise-level machine learning applications. It offers excellent scalability and performance, making it a preferred choice for large-scale data processing and distributed computing. Java provides libraries like Weka and Deeplearning4j, which offer a range of machine learning algorithms and tools. However, Java may require more code compared to Python or R, making it slightly less user-friendly for rapid prototyping and experimentation.

Scala is a general-purpose programming language that runs on the Java Virtual Machine (JVM). It blends functional and object-oriented programming paradigms, which makes it a powerful language for machine learning. Scala's seamless interoperability with Java libraries allows you to leverage the vast ecosystem of Java tools for machine learning. Additionally, Apache Spark, which is written in Scala, includes MLlib, a distributed machine learning library that scales well to big data workloads.

Other programming languages, such as Julia and C++, also have dedicated libraries and frameworks for machine learning. Julia aims to provide a high-performance environment for numerical computing, while C++ offers the advantage of being a low-level language with excellent speed and memory efficiency. These languages are often chosen for specific machine learning tasks that require utmost performance and optimization.

Ultimately, the choice of programming language for machine learning depends on several factors, including your familiarity with the language, the requirements of your project, the availability of libraries and frameworks, and the performance needs. Python and R are widely recommended for their ease-of-use and extensive ecosystem, making them excellent choices for most machine learning applications. Java and Scala are preferred for enterprise-level applications, while Julia and C++ offer superior performance for specialized use cases.

Regardless of the programming language you choose, keep in mind that mastering the underlying principles and techniques of machine learning is as important as mastering the syntax of the language itself.

Setting up Your Machine Learning Environment

Setting up a proper machine learning environment is essential for efficient development and experimentation. A well-configured environment ensures that you have the necessary tools and libraries to implement and test machine learning algorithms effectively. Here are the key steps to set up your machine learning environment.

1. Choose your operating system: One of the first decisions is to select an operating system that best suits your needs. Most machine learning libraries and frameworks are compatible with Windows, macOS, and Linux distributions. It’s important to consider factors like ease of installation, community support, and compatibility with specific tools when making this choice.

2. Install Python: Python is the most widely used programming language for machine learning. Install a recent Python 3 release that is supported by the libraries you plan to use. Python 2 reached its end of life in January 2020 and is not supported by current versions of the major machine learning libraries, so it should not be used for new projects.

3. Set up a virtual environment: Creating a virtual environment is crucial for managing dependencies and ensuring project isolation. Tools like conda (included with Anaconda), venv, and virtualenv allow you to create separate Python environments for each project, preventing conflicts between different libraries and versions.

4. Install machine learning libraries: Install the necessary libraries for machine learning. The most commonly used libraries include NumPy, Pandas, matplotlib, and scikit-learn. These libraries provide tools for mathematical computations, data manipulation, visualization, and implementing machine learning algorithms. Additionally, consider installing deep learning frameworks like TensorFlow or PyTorch if you plan to work on neural networks.

5. Explore integrated development environments (IDEs) and notebooks: These tools offer a more streamlined development experience for machine learning. Popular options include PyCharm and Visual Studio Code, along with Jupyter Notebook, a browser-based notebook environment well suited to interactive experimentation. They provide features like code auto-completion, debugging, and visualization, making it easier to write, test, and debug machine learning code.

6. Set up additional tools: Depending on your specific requirements, you may need to install additional tools like Apache Spark for big data processing, Docker for containerization, or SQL databases for data storage. These tools enhance your machine learning capabilities and enable you to handle larger datasets efficiently.

7. Access cloud-based platforms: Consider utilizing cloud-based platforms like Google Cloud AI, Microsoft Azure, or Amazon Web Services (AWS) for more advanced machine learning capabilities. These platforms provide pre-configured environments, powerful computing resources, and convenient access to machine learning tools and services.

8. Learn version control: Version control systems like Git are essential for tracking changes and collaborating on machine learning projects. Familiarize yourself with version control concepts and choose a platform like GitHub or GitLab to manage your code repository.
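After working through the steps above, a short script can confirm that the setup behaves as expected. The sketch below reports the Python version, whether it runs inside a venv (or virtualenv 20+) environment, detected by comparing `sys.prefix` with `sys.base_prefix`, the active conda environment name if the `CONDA_DEFAULT_ENV` variable is set, and which packages can be imported. The package list is an assumption to adjust for your project; note that scikit-learn is imported as `sklearn`.

```python
import importlib.util
import os
import sys


def in_virtual_environment():
    """True inside a venv (or virtualenv 20+), where sys.prefix differs from sys.base_prefix."""
    return sys.prefix != sys.base_prefix


def check_environment(packages=("numpy", "pandas", "matplotlib", "sklearn")):
    """Summarize the interpreter, environment, and package availability."""
    return {
        "python": ".".join(str(part) for part in sys.version_info[:3]),
        "python3": sys.version_info.major >= 3,
        "virtualenv": in_virtual_environment(),
        # conda sets CONDA_DEFAULT_ENV when an environment is activated; None otherwise.
        "conda_env": os.environ.get("CONDA_DEFAULT_ENV"),
        # find_spec returns None when a top-level package cannot be located.
        "packages": {name: importlib.util.find_spec(name) is not None for name in packages},
    }


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

Conda environments are separate installations rather than venvs, which is why the script checks the conda variable separately instead of relying on the prefix comparison.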

By following these steps, you can ensure a well-equipped machine learning environment that enables efficient development, experimentation, and deployment of your machine learning projects. Remember to keep your environment up-to-date and stay informed about the latest updates and advancements in the field to make the most out of your machine learning journey.

Learning Machine Learning Algorithms

To become proficient in machine learning, it is crucial to gain a solid understanding of the different algorithms used in this field. A thorough knowledge of machine learning algorithms enables you to select the appropriate technique for a given problem, understand their strengths and weaknesses, and effectively implement them. Here are key steps to learning machine learning algorithms.

1. Start with the basics: Begin by learning the fundamental algorithms that form the building blocks of machine learning. These include linear regression, logistic regression, decision trees, and k-nearest neighbors (KNN). Familiarize yourself with the underlying concepts and mathematics behind each algorithm.

2. Explore supervised learning algorithms: Supervised learning algorithms learn from labeled data to make predictions or decisions. Dive deeper into algorithms like support vector machines (SVM), random forests, and gradient boosting. Understand how each algorithm works, what kind of problems they are suitable for, and their respective advantages and limitations.

3. Delve into unsupervised learning algorithms: Unsupervised learning algorithms uncover hidden patterns or structures in unlabeled data. Learn about algorithms such as k-means clustering, hierarchical clustering, and dimensionality reduction techniques like principal component analysis (PCA). Gain insights into how unsupervised learning can be utilized for tasks like customer segmentation, anomaly detection, and data visualization.

4. Explore deep learning: Deep learning focuses on neural networks with multiple layers, capable of learning complex representations from data. Study architectures such as convolutional neural networks (CNNs) for image recognition, recurrent neural networks (RNNs) for sequential data, and generative adversarial networks (GANs) for generating realistic data. These models have revolutionized areas such as computer vision, natural language processing, and speech recognition.

5. Understand ensemble methods: Ensemble methods combine multiple models to improve prediction accuracy. Learn about techniques like bagging, boosting, and stacking, which leverage the wisdom of the crowd to create powerful models. In broad terms, bagging mainly reduces variance (and with it overfitting), boosting mainly reduces bias, and stacking trains a meta-model to combine the predictions of several base models.

6. Implement algorithms in practice: Practice implementing machine learning algorithms in programming languages like Python or R. Implement models from scratch, as well as utilize popular machine learning libraries like scikit-learn or TensorFlow. Apply these algorithms to real-world datasets and familiarize yourself with the data preprocessing steps, model training, evaluation metrics, and hyperparameter tuning.

7. Learn from practical examples and projects: Engage in practical exercises and projects that showcase the application of machine learning algorithms. Work on projects like predicting house prices, classifying images, or analyzing the sentiment of text. Participate in Kaggle competitions or join open-source projects to gain valuable hands-on experience and learn from the machine learning community.

8. Stay updated with the latest trends: Machine learning is a rapidly evolving field, with new algorithms and techniques being developed regularly. Stay abreast of the latest research papers, attend conferences, and follow reputable machine learning blogs to keep up with advancements in the field.
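In the spirit of steps 1 and 6, here is a from-scratch sketch of k-nearest neighbors: to classify a new point, find the k closest training examples and take a majority vote among their labels. It assumes Euclidean distance and hypothetical two-dimensional data; scikit-learn's `KNeighborsClassifier` (in `sklearn.neighbors`) is the library counterpart you would typically use in practice.

```python
import math
from collections import Counter


def knn_predict(X_train, y_train, query, k=3):
    """Classify `query` by majority vote among its k nearest training examples."""
    # Sort training examples by Euclidean distance to the query point.
    neighbors = sorted(zip(X_train, y_train), key=lambda pair: math.dist(pair[0], query))
    votes = Counter(label for _, label in neighbors[:k])
    # most_common(1) returns [(label, count)]; tied labels keep first-seen (nearest) order.
    return votes.most_common(1)[0][0]


# Hypothetical labeled points forming two groups.
X_train = [[1.0, 1.0], [1.5, 2.0], [2.0, 1.0], [6.0, 6.0], [7.0, 7.5], [6.5, 5.5]]
y_train = ["A", "A", "A", "B", "B", "B"]

print(knn_predict(X_train, y_train, [2.0, 2.0]))
print(knn_predict(X_train, y_train, [6.0, 6.5]))
```

Choosing an odd `k` avoids ties in two-class problems. Because every prediction scans the full training set, this naive version is slow on large datasets; library implementations use spatial index structures to speed up the search.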

By following these steps and continuously practicing and expanding your knowledge, you will develop a strong foundation in machine learning algorithms. Remember that gaining expertise in machine learning is an ongoing process, as new algorithms and techniques continue to emerge. Embrace the learning journey and focus on building a deep understanding of the algorithms and their practical implementations.

Implementing Machine Learning Models

Implementing machine learning models involves translating the theoretical concepts into practical code. It requires understanding the steps involved in model creation, data preprocessing, feature engineering, model selection, model training, and evaluation. Mastering the implementation process is essential to make accurate predictions and derive insights from data. Here are the key steps to effectively implement machine learning models.

1. Define the problem and gather data: Clearly define the problem you want to solve with machine learning. Identify the type of problem, whether it's classification, regression, clustering, or recommendation. Gather and prepare the relevant data, ensuring it is clean, representative of the problem, and labeled if the task is supervised.

2. Preprocess the data: Data preprocessing involves transforming the raw data into a format suitable for machine learning algorithms. This includes handling missing values, handling outliers, feature scaling, and encoding categorical variables. Ensure that the data is ready for training and that it follows the input requirements of the chosen algorithm.

3. Feature engineering: Feature engineering involves selecting the most relevant features from the available data and creating new features that may improve the model’s performance. This process requires domain knowledge and creativity. Consider feature selection techniques, such as recursive feature elimination or feature importance analysis, to identify the most informative features.

4. Select the appropriate machine learning algorithm: Based on the problem type and data characteristics, select the most suitable machine learning algorithm. Consider factors such as the algorithm’s assumptions, complexity, interpretability, and performance on similar problems. Common algorithms include decision trees, random forests, support vector machines, neural networks, and ensemble methods.

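5. Split the data: Split the data into training and testing sets. The training set is used to train the model, while the testing set is used to evaluate its performance on unseen data. Strike a balance between a training set large enough to learn from and a test set large enough to give a meaningful estimate of performance. Fit any preprocessing that learns from the data, such as feature scaling, on the training set only and then apply it to the test set; otherwise information from the test set leaks into training and inflates the evaluation.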

6. Train the model: Train the selected machine learning model using the training set. Feed the features and labels into the algorithm and let it learn the patterns and relationships from the data. Adjust hyperparameters, such as learning rate or regularization parameter, to optimize the model’s performance. Consider techniques like cross-validation to robustly assess the model’s performance during training.

7. Evaluate the model: Evaluate the trained model’s performance on the testing set using appropriate evaluation metrics. Common metrics include accuracy, precision, recall, F1-score, or mean squared error, depending on the problem type. Ensure that the model’s performance is satisfactory and meets the defined objectives.

8. Iterate and improve: Analyze the model’s performance and iteratively improve your approach. Experiment with different algorithms, feature engineering techniques, or hyperparameter configurations to optimize the model’s performance. Keep a record of the experiments and the corresponding results to identify the best approaches.

9. Make predictions: Once you have a trained and evaluated model, use it to make predictions on new, unseen data. Feed the unseen data into the model and obtain the model’s predictions or decision outputs. Assess the model’s performance on real-world data and iterate further if necessary.
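Steps 5 through 7 can be sketched end to end with the standard library. The detail worth noticing is that the scaler's mean and standard deviation are computed from the training rows only and then reused for the test rows, which keeps test information out of training. The dataset, function names, and the choice of a 1-nearest-neighbor model are hypothetical simplifications; in scikit-learn the corresponding pieces would typically be `train_test_split`, `StandardScaler`, and a `Pipeline`.

```python
import math
import random
from statistics import mean, pstdev


def split_train_test(X, y, test_fraction=0.25, seed=42):
    """Shuffle row indices reproducibly, then hold out a fraction of rows for testing."""
    indices = list(range(len(X)))
    random.Random(seed).shuffle(indices)
    n_test = max(1, round(len(X) * test_fraction))
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    return ([X[i] for i in train_idx], [X[i] for i in test_idx],
            [y[i] for i in train_idx], [y[i] for i in test_idx])


def fit_scaler(X_train):
    """Learn each feature's mean and standard deviation from the training rows only."""
    # A zero standard deviation is replaced by 1.0 to avoid dividing by zero.
    return [(mean(col), pstdev(col) or 1.0) for col in zip(*X_train)]


def transform(X, params):
    """Standardize rows with previously fitted (mean, std) pairs."""
    return [[(value - m) / s for value, (m, s) in zip(row, params)] for row in X]


def predict_1nn(X_train, y_train, row):
    """Return the label of the single nearest training example (Euclidean distance)."""
    nearest = min(range(len(X_train)), key=lambda i: math.dist(X_train[i], row))
    return y_train[nearest]


# Hypothetical listings: (floor area in m², rooms) -> 1 if sold within a month, else 0.
X = [[45, 1], [50, 2], [55, 2], [60, 2], [120, 4], [130, 5], [140, 5], [150, 6]]
y = [1, 1, 1, 1, 0, 0, 0, 0]

X_train, X_test, y_train, y_test = split_train_test(X, y)      # step 5: split
params = fit_scaler(X_train)                                   # fit scaling on train only
X_train_s, X_test_s = transform(X_train, params), transform(X_test, params)
# Step 6: a 1-NN "model" simply stores the scaled training data.
predictions = [predict_1nn(X_train_s, y_train, row) for row in X_test_s]
accuracy = sum(p == t for p, t in zip(predictions, y_test)) / len(y_test)  # step 7
print(f"test accuracy: {accuracy:.2f}")
```

Swapping in a different model only changes the prediction step; the split-then-fit ordering stays the same.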

Implementing machine learning models requires a deep understanding of the chosen algorithms, data preprocessing techniques, feature engineering methods, and evaluation metrics. Continuously improve your skills through practice, explore different algorithms and techniques to expand your toolkit, and stay updated with the latest advancements in the field. The implementation process is iterative and dynamic, so be prepared to experiment, learn from failures, and refine your approaches to achieve accurate and impactful machine learning models.

Finding and Preparing Data for Machine Learning

Data is the foundation of any successful machine learning project. Finding and preparing suitable and high-quality data is crucial to build accurate and reliable machine learning models. Here are the key steps to effectively find and prepare data for machine learning.

1. Determine the data requirements: Define the specific data requirements for your machine learning project. Identify the type of data needed, such as structured or unstructured, numerical or categorical, and the desired size and quality of the dataset. This will help you narrow down the sources and focus your efforts.

2. Access public datasets: Explore public datasets that are freely available and relevant to your project. Websites like Kaggle, UCI Machine Learning Repository, and government data portals provide access to a wide range of datasets across various domains. Many of these datasets are already cleaned and labeled, which can save valuable time and effort, but review their documentation and quality before relying on them.

3. Utilize web scraping: Web scraping allows you to extract data from websites relevant to your project. In Python, the BeautifulSoup library and the Scrapy framework can help you extract structured or unstructured data from HTML pages; when a site offers an official API, retrieving data through it is usually more reliable than scraping. Ensure you comply with the website's terms of service and respect any legal or privacy considerations.

4. Collect your own data: In some cases, you may need to collect your own data to meet the specific requirements of your project. This could involve surveys, questionnaires, sensor data, or user activity logs. Ensure that you have the necessary permissions and safeguards in place, and take into account ethical issues such as data privacy and consent.

5. Perform data cleaning: Clean the collected or obtained data to remove inconsistencies, duplicates, and erroneous entries. Handle missing data by either imputing values or removing incomplete records. Investigate outliers and decide, based on the context of your project, whether to correct, keep, remove, or transform them. Data cleaning is essential to ensure the data is reliable and suitable for machine learning.

6. Perform exploratory data analysis: Get familiar with the data through exploratory data analysis (EDA). Visualize the data, identify patterns, and gain insights into its distribution, correlations, and outliers. EDA helps you understand the characteristics of the data and guides your feature selection and preprocessing strategies.

7. Split the data: Split the data into training, validation, and testing sets. The training set is used to train the machine learning model, the validation set is used to fine-tune hyperparameters, and the testing set is used to evaluate the model’s performance on unseen data. The typical split is around 70-80% for training, 10-15% for validation, and 10-15% for testing, but the split can be adjusted based on the dataset size and project requirements.

8. Normalize and transform the data: Normalize or scale the data so that all features have a similar scale. Common techniques include standardization, min-max scaling, and logarithmic transformations. Fit scaling parameters (such as the mean and standard deviation) on the training set only and reuse them for the validation and test sets. You can also apply feature engineering, deriving new features from the existing data to capture more meaningful information or relationships.

9. Handle class imbalance: In classification problems, class imbalance occurs when one class is significantly more prevalent than others. Address the issue with techniques such as oversampling the minority class, undersampling the majority class, generating synthetic minority examples with SMOTE (Synthetic Minority Over-sampling Technique), or hybrid approaches that combine oversampling and undersampling. Apply resampling to the training set only, so that the validation and test sets still reflect the real class distribution.

10. Document and annotate the data: Document the data preprocessing steps you have taken, the data sources, and any transformations or cleaning procedures applied. Annotate the data with appropriate labels or tags for supervised learning tasks. This documentation ensures transparency and reproducibility, allowing others to understand and reproduce your results if needed.
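Items 7 and 9 interact: resampling should happen after the split and only on the training set, so that duplicated rows cannot appear in the validation or test data. The sketch below uses plain random oversampling as a simpler stand-in for SMOTE, which synthesizes new minority examples instead of duplicating existing ones (a common Python implementation is in the imbalanced-learn package). The 70/15/15 proportions, helper names, and fraud dataset are hypothetical.

```python
import random
from collections import Counter


def three_way_split(rows, train=0.70, val=0.15, seed=0):
    """Shuffle reproducibly, then cut rows into train/validation/test partitions."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    # Whatever remains after the train and validation cuts becomes the test set.
    return shuffled[:n_train], shuffled[n_train:n_train + n_val], shuffled[n_train + n_val:]


def random_oversample(rows, label_of, seed=0):
    """Duplicate randomly chosen rows of smaller classes until all classes match the largest."""
    rng = random.Random(seed)
    by_class = {}
    for row in rows:
        by_class.setdefault(label_of(row), []).append(row)
    target = max(len(group) for group in by_class.values())
    balanced = []
    for group in by_class.values():
        balanced.extend(group)
        balanced.extend(rng.choices(group, k=target - len(group)))  # sample with replacement
    rng.shuffle(balanced)
    return balanced


# Hypothetical transactions: (transaction_id, is_fraud), where fraud is the rare class.
data = [(i, 1 if i % 10 == 0 else 0) for i in range(100)]
train_set, val_set, test_set = three_way_split(data)
balanced_train = random_oversample(train_set, label_of=lambda row: row[1])  # training set only
print(len(train_set), len(val_set), len(test_set))
print(Counter(label for _, label in balanced_train))
```

With very rare classes, a plain random split can leave few or no minority examples in the smaller partitions; a stratified split, which preserves class proportions in each partition, is a common refinement.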

Finding and preparing suitable data for machine learning requires careful consideration and attention to detail. It is essential to source and clean the data relevant to your project, perform exploratory data analysis to gain insights, and preprocess the data to ensure its quality and compatibility with machine learning algorithms. By following these steps, you lay the foundation for successful machine learning projects and accurate model predictions.

Data Cleaning and Feature Engineering

Data cleaning and feature engineering are crucial steps in preparing datasets for machine learning. Data cleaning involves identifying and addressing inconsistencies, duplicates, missing values, and outliers, while feature engineering focuses on creating new features or transforming existing ones to improve the predictive power of the data. These steps play a critical role in ensuring accurate and reliable machine learning models. Let’s explore them in more detail.

Data Cleaning:

Data cleaning is the process of identifying and rectifying issues in the dataset. It involves the following steps:

1. Handling missing values: Missing values can negatively impact machine learning models. Techniques for handling missing data include imputing the missing values using mean, median, mode, or more advanced methods such as regression, or removing rows or columns with missing values if deemed appropriate based on the dataset.

2. Removing duplicates: Duplicate records can distort analysis and model performance. Removing duplicates ensures that each observation in the dataset is unique and representative of the data.

3. Handling outliers: Outliers are data points that significantly deviate from the average or expected values. Outliers can be investigated and either corrected if they are errors or kept if they are valid and meaningful observations. Alternatively, outliers can be removed or transformed using techniques like winsorization or log transformation, based on the context of the problem and the impact of the outliers on the model.

4. Addressing inconsistent data: Inconsistent data can arise due to human errors or different data sources. It’s important to identify and address inconsistencies in formats, units, or encoding to ensure data integrity and accuracy.
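The first three cleaning steps can be sketched with the standard library. The record layout and helper name are hypothetical, missing values are assumed to be stored as `None`, and outliers are capped at Tukey's fences (1.5 × IQR beyond the quartiles), a common rule of thumb used here in place of percentile-based winsorization. Whether to cap, keep, or drop a given outlier still depends on the problem, as described above.

```python
from statistics import median, quantiles


def clean_numeric_column(records, column):
    """Deduplicate records, median-impute missing values, and cap outliers at the IQR fences."""
    # 1. Remove exact duplicate records, keeping the first occurrence (copies avoid mutating input).
    seen, rows = set(), []
    for rec in records:
        key = tuple(sorted(rec.items()))
        if key not in seen:
            seen.add(key)
            rows.append(dict(rec))

    # 2. Impute missing values (None) with the median of the observed values.
    fill = median(r[column] for r in rows if r[column] is not None)
    for r in rows:
        if r[column] is None:
            r[column] = fill

    # 3. Cap values outside Tukey's fences (1.5 * IQR beyond the first and third quartiles).
    q1, _, q3 = quantiles([r[column] for r in rows], n=4, method="inclusive")
    low, high = q1 - 1.5 * (q3 - q1), q3 + 1.5 * (q3 - q1)
    for r in rows:
        r[column] = min(max(r[column], low), high)
    return rows


records = [
    {"id": 1, "price": 200.0},
    {"id": 2, "price": None},     # missing value
    {"id": 3, "price": 220.0},
    {"id": 3, "price": 220.0},    # exact duplicate of the previous record
    {"id": 4, "price": 210.0},
    {"id": 5, "price": 5000.0},   # suspicious value, possibly a data-entry error
    {"id": 6, "price": 190.0},
]
for row in clean_numeric_column(records, "price"):
    print(row)
```

The median is used for imputation because, unlike the mean, it is barely affected by the suspicious 5000.0 value.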

Feature Engineering:

Feature engineering involves transforming and creating new features from the existing dataset to enhance the model’s predictive power. Here are some common techniques:

1. Handling categorical variables: Most machine learning models require categorical variables to be converted into numerical form. One-hot encoding suits unordered categories; ordinal (label) encoding suits categories with a natural order, because the integers it assigns imply one; target encoding replaces each category with a statistic of the target variable and should be computed on training data only to avoid leakage.

2. Creating interaction and polynomial features: Interaction features capture relationships between two or more existing features, while polynomial features introduce non-linear relationships. These techniques help capture more complex patterns and improve the model’s ability to learn.

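3. Scaling and normalization: Scaling gives features similar ranges, which prevents features with large values from dominating models that rely on distances or gradient-based optimization; tree-based models are largely insensitive to feature scale. Techniques like standardization (subtracting the mean and dividing by the standard deviation, which yields zero mean and unit variance) or min-max scaling (mapping values to a fixed range, typically [0, 1]) can be employed based on the requirements of the model.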

4. Time-based features: If time is a relevant factor in the dataset, extracting time-based features like day of the week, hour of the day, or seasonality can provide valuable insights and improve predictive performance.

5. Domain-specific feature engineering: Incorporating domain knowledge to create relevant features specific to the problem domain can significantly enhance the model’s predictive power. This includes capturing meaningful statistics, ratios, or aggregations that are specific to the problem at hand.
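Several of these techniques fit in a few lines each. The helpers below are hypothetical illustrations of one-hot encoding, standardization, min-max scaling, and time-based feature extraction. They compute statistics from whatever list they receive; in a real pipeline you would compute those statistics on the training set and reuse them for new data.

```python
from datetime import datetime
from statistics import mean, pstdev


def one_hot(values):
    """Map each categorical value to a 0/1 indicator vector (categories sorted for stability)."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]


def standardize(values):
    """Rescale to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


def min_max(values, new_min=0.0, new_max=1.0):
    """Linearly rescale values into [new_min, new_max]."""
    lo, hi = min(values), max(values)
    return [new_min + (v - lo) * (new_max - new_min) / (hi - lo) for v in values]


def time_features(timestamp):
    """Extract day of week (Monday=0), hour of day, and a weekend flag from an ISO 8601 string."""
    dt = datetime.fromisoformat(timestamp)
    return {"day_of_week": dt.weekday(), "hour": dt.hour, "is_weekend": dt.weekday() >= 5}


cities = ["Paris", "Lyon", "Paris", "Nice"]   # hypothetical categorical feature
print(one_hot(cities))
print(standardize([10.0, 20.0, 30.0]))
print(min_max([10.0, 20.0, 30.0]))
print(time_features("2024-03-16T14:30:00"))
```

Neither scaling helper guards against constant input (zero spread), which would cause a division by zero; a production version would handle that case explicitly.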

Data cleaning and feature engineering are iterative processes that require careful analysis and knowledge of the dataset. It is essential to experiment with different techniques, assess their impact on the model’s performance, and iterate accordingly. These steps help ensure that the data is clean, meaningful, and well suited to producing accurate and reliable machine learning models.

Evaluating Machine Learning Models

Evaluating machine learning models is a crucial step in assessing their performance and determining their effectiveness in solving the problem at hand. It involves using appropriate evaluation metrics and techniques to measure how well the model is performing on unseen data. By thoroughly evaluating machine learning models, you can gain valuable insights and make informed decisions about model selection, parameter tuning, and generalization ability. Here are key steps to effectively evaluate machine learning models.

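1. Split the data: Split the dataset into training and testing sets. The training set is used to train the model, and the testing set is used to evaluate the model’s performance on unseen data. A 70-80% training and 20-30% testing split is typical, but the split can be adjusted based on the dataset size and the problem’s requirements. Shuffle the data before splitting, or split chronologically for time-series data. Consider stratifying by class for imbalanced classification problems, and fit any preprocessing steps on the training set only to avoid data leakage.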

2. Choose appropriate evaluation metrics: Select evaluation metrics that align with the problem’s objectives. For classification problems, common metrics include accuracy, precision, recall, F1-score, or area under the receiver operating characteristic curve (AUC-ROC). Accuracy can be misleading on imbalanced datasets, where precision, recall, and F1-score are usually more informative. For regression, metrics like mean squared error (MSE), mean absolute error (MAE), or R-squared are commonly used. Choose metrics that measure what is most important for your specific problem.

3. Evaluate baseline models: Compare the performance of your model against baseline models. Baseline models can be simple algorithms or heuristics that provide a benchmark for comparison, such as always predicting the most frequent class or the mean of the target. This helps you understand whether your model significantly outperforms basic approaches and shows the added value of using machine learning.

4. Cross-validation: Perform k-fold cross-validation to assess the model’s robustness and ability to generalize across different subsets of the data. The data is partitioned into k folds of roughly equal size. The model is trained k times, each time on k-1 folds, and evaluated on the remaining fold, so every sample is used for validation exactly once. Average the evaluation metrics across the folds to obtain a more reliable estimate of the model’s performance.

5. Explore performance on different data subsets: Evaluate the model’s performance on different subsets of the data to gain insights into potential variations. For example, you could examine performance across different time periods, demographics, or geographical locations. This analysis helps identify any bias or limitations of the model and provides useful feedback for model improvement.

6. Assess overfitting and underfitting: Overfitting occurs when the model performs very well on the training data but poorly on unseen data. This indicates that it has learned noise and idiosyncrasies of the training set rather than general patterns. Underfitting occurs when the model lacks the complexity to learn the underlying patterns, so it performs poorly even on the training data. Compare the model’s performance on the training and testing sets: a large gap suggests overfitting, while poor scores on both suggest underfitting.

7. Use validation data for hyperparameter tuning: When fine-tuning the model’s hyperparameters, use a separate validation set (or cross-validation on the training data) to assess the model’s performance for different parameter configurations. Select the configuration that performs best on the validation data. Keep the testing set untouched until the end, because tuning on it would make the final performance estimate overly optimistic.

8. Compare different models: Assess the performance of multiple models on the same dataset to determine which one performs best. Compare their evaluation metrics, and also consider their computational complexity, interpretability, and other factors relevant to the problem. Use statistical tests or confidence intervals to determine whether differences in performance are statistically significant.

9. Visualize and interpret the results: Present the evaluation results in a concise and meaningful way. Visualize performance metrics using ROC curves, precision-recall curves, or confusion matrices. Interpret the results to gain insights into the strengths and weaknesses of the model and identify areas for improvement.
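Several of the steps above can be sketched with the Python standard library to make the computations concrete. The sketch covers splitting, k-fold cross-validation, a majority-class baseline, common metrics, and McNemar’s test for comparing two classifiers on the same test set. It is illustrative, and the helper names are hypothetical. In practice, scikit-learn provides `train_test_split`, `KFold`, `DummyClassifier`, `precision_recall_fscore_support`, and `roc_auc_score` for these tasks. The AUC here uses its ranking interpretation: the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one.

```python
import math
import random
from collections import Counter

def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and split it into (train, test)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

def k_fold_indices(n, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs; each index is in exactly one validation fold."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]

def majority_baseline(y_train):
    """Baseline: always predict the most frequent training label."""
    return Counter(y_train).most_common(1)[0][0]

def precision_recall_f1(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def roc_auc(y_true, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count as half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))

def regression_metrics(y_true, y_pred):
    """Return (MSE, MAE, R-squared)."""
    n = len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    mae = sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n
    mean = sum(y_true) / n
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return ss_res / n, mae, 1 - ss_res / ss_tot

def mcnemar_p_value(y_true, pred_a, pred_b):
    """McNemar's test with continuity correction on two models' paired predictions."""
    b = sum(pa == t != pb for t, pa, pb in zip(y_true, pred_a, pred_b))  # only A correct
    c = sum(pb == t != pa for t, pa, pb in zip(y_true, pred_a, pred_b))  # only B correct
    if b + c == 0:
        return 1.0
    chi2 = max(0, abs(b - c) - 1) ** 2 / (b + c)
    return math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with 1 df

print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # → 0.75
```

In a real workflow, the model is refit inside each fold and the metrics are averaged across folds. The majority baseline gives the score any useful classifier should beat.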

By following these steps and adopting a systematic approach to evaluating machine learning models, you can make informed decisions about their performance, select the best model for the problem, and optimize its performance through further iterations and improvement.

Fine-Tuning and Optimizing Machine Learning Models

Fine-tuning and optimizing machine learning models are key steps in maximizing their performance and improving their predictive capabilities. By fine-tuning and optimizing models, you can enhance their accuracy, generalization ability, and overall effectiveness. Here are the steps to effectively fine-tune and optimize machine learning models.

1. Hyperparameter tuning: Hyperparameters are settings chosen before training rather than learned from the data, and they affect the learning process. Examples include the learning rate, the regularization strength, and the number of hidden layers in a neural network. Use techniques like grid search, random search, or Bayesian optimization to find the combination of hyperparameters that optimizes the model’s performance on the validation set.

2. Feature selection: Analyze the importance of each feature using feature importance scores, correlation analysis, or domain expertise. Remove features that contribute little or hurt the model’s performance, and keep (or add) those that contribute significantly. Feature selection helps reduce dimensionality, improve interpretability, and prevent overfitting.

3. Algorithm selection: Experiment with different algorithms that are suitable for the problem at hand. Compare their performances using appropriate evaluation metrics. Each algorithm has different strengths and weaknesses, and selecting the most appropriate one can significantly impact the model’s performance.

4. Model ensembles: Combine multiple models into an ensemble to improve predictive accuracy and robustness. Bagging trains models independently on bootstrap samples of the data and averages their predictions, which mainly reduces variance. Boosting trains models sequentially, with each one focusing on the errors of its predecessors, which mainly reduces bias. Stacking trains a meta-model on the predictions of several base models.

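5. Regularization: Regularization techniques such as L1 and L2 regularization help prevent overfitting by adding a penalty on the size of the model’s weights to the loss function. L1 regularization can drive some weights exactly to zero, effectively performing feature selection, while L2 regularization shrinks all weights toward zero. Both encourage simpler models and reduce the impact of irrelevant or noisy features. Experiment with different regularization strengths to balance model complexity and performance.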

6. Cross-validation: Perform cross-validation to obtain a more reliable estimate of the model’s performance. By splitting the data into multiple folds and evaluating the model on each fold, you can better understand its generalization ability and identify potential issues related to data bias or limited training samples.

7. Regular monitoring and retraining: Continuously monitor the model’s performance on new data and track its accuracy over time. Incorporate newer data into the training process to keep the model up to date. Periodic retraining keeps the model aligned with evolving patterns and characteristics in the data.

8. Optimization techniques: Explore optimization algorithms such as gradient descent, stochastic gradient descent (SGD), or adaptive learning rate methods (for example, Adam) to improve convergence and training speed. A well-chosen optimizer and learning rate can help the model reach better performance while reducing computational cost.

9. Interpretability and explainability: In certain domains, interpretability and explainability of the model’s predictions are important. Consider using techniques like feature importance analysis, SHAP (SHapley Additive exPlanations) values, or LIME (Local Interpretable Model-agnostic Explanations) to understand and interpret the model’s decision-making process.
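Hyperparameter tuning, L2 regularization, and gradient-based optimization fit together in a small example. The sketch below is an illustrative assumption rather than a recommended setup. It fits a one-feature linear model by full-batch gradient descent on mean squared error plus an L2 penalty on the weight. It then grid-searches the penalty strength `l2` and the learning rate `lr` against a separate validation set. The toy data is hypothetical. For real workloads, scikit-learn offers `Ridge` and `GridSearchCV`.

```python
import itertools

def fit_linear_gd(data, l2=0.0, lr=0.05, epochs=2000):
    """Fit y = w*x + b by gradient descent on MSE + l2 * w**2 (the bias is not penalized)."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        errors = [(w * x + b - y, x) for x, y in data]
        grad_w = sum(2 * e * x for e, x in errors) / n + 2 * l2 * w
        grad_b = sum(2 * e for e, _ in errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def mse(model, data):
    w, b = model
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def grid_search(param_grid, train, val):
    """Try every combination in param_grid and keep the one with the lowest validation MSE."""
    names = sorted(param_grid)
    best_params, best_err = None, float("inf")
    for values in itertools.product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        err = mse(fit_linear_gd(train, **params), val)
        if err < best_err:
            best_params, best_err = params, err
    return best_params, best_err

# Hypothetical noise-free data generated from y = 2x + 1.
train = [(x, 2 * x + 1) for x in range(5)]
val = [(x, 2 * x + 1) for x in (5, 6)]
best, err = grid_search({"l2": [0.0, 0.1, 1.0], "lr": [0.01, 0.05]}, train, val)
print(best)  # → {'l2': 0.0, 'lr': 0.05}
```

With noise-free data, the unregularized model wins, as expected. On noisy data, a moderate `l2` often generalizes better, and the validation-based search is meant to detect exactly that. Computing each gradient on a random mini-batch instead of the full training set would turn this into stochastic gradient descent.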

By following these steps, continually experimenting, and fine-tuning the model, you can optimize its performance, improve accuracy, reduce overfitting, and ensure that it stays relevant to solve the problem efficiently. Remember, model optimization is an iterative process that requires careful evaluation and consideration of various techniques and trade-offs to find the best balance between model complexity and predictive power.

Deploying and Monitoring Machine Learning Models

Deploying and monitoring machine learning models is a critical step in putting your models into production and ensuring they perform optimally over time. This involves setting up a production environment, deploying the model, and continuously monitoring its performance. Here are the key steps to effectively deploy and monitor machine learning models.

1. Set up a production environment: Prepare the infrastructure needed to deploy your machine learning model in a production environment. This includes selecting the appropriate hardware, setting up servers or cloud instances, and installing the necessary software dependencies.

2. Build a reliable and efficient model serving system: Develop an API or a model serving system that allows users or other applications to interact with the deployed model. Consider using frameworks like Flask or Django to build a RESTful API for easy integration and communication.

3. Automate the deployment process: Streamline the deployment process by automating tasks such as data preprocessing, feature engineering, and retraining the model. Utilize tools like CI/CD pipelines to ensure smooth and consistent deployment of updated or new models.

4. Monitor model performance: Continuously monitor the performance of the deployed model to detect any anomalies or performance degradation. Implement logging and error handling mechanisms to capture relevant information about the model’s predictions, including input data, output data, and any errors encountered.

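5. Establish model versioning and management: Keep track of different versions of your models to enable easy rollback or switching between versions. Use version control systems like Git to manage the model code, configuration, and documentation associated with each version. Large model artifacts are typically stored in a model registry or data versioning tool such as MLflow Model Registry or DVC.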

6. Implement drift detection: Monitor incoming data and compare its distribution with the data used during training to check that the model’s assumptions still hold. A change in the input distribution is called data drift (or data shift). A change in the relationship between inputs and the target is called concept drift, and it usually shows up as declining performance once ground-truth labels become available. Detecting either allows you to take appropriate action, such as retraining the model or updating the input data pipeline.

7. Ensure data privacy and security: Consider data privacy and security measures when deploying machine learning models. Implement encryption techniques and access controls to protect sensitive data. Regularly conduct security audits to identify and address any vulnerabilities in the deployment environment.

8. Continuously retrain and update the model: Models need to adapt to changes in the data and the problem they are solving. Periodically retrain the model using new data to ensure it remains accurate and up to date. Implement a pipeline that automatically incorporates new data and retrains the model as needed.

9. Gather feedback and user insights: Collect feedback from users or stakeholders to gain insights into the model’s performance and impact on the problem it is addressing. Regularly solicit feedback and conduct user surveys to identify areas for improvement and gather real-world insights to refine the model further.

10. Iterate and improve: Continuously iterate and improve the deployed model based on feedback, monitoring results, and business requirements. Keep an eye on the performance metrics, monitor user satisfaction, and leverage user feedback to refine and optimize the model as the problem evolves.
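Drift detection for a single numeric feature can be sketched with the two-sample Kolmogorov-Smirnov (KS) statistic. The statistic measures the largest gap between the empirical distribution functions of the training (reference) data and recent production data. This is a minimal sketch: the 0.2 alert threshold and the feature values are hypothetical assumptions. It detects data drift only, because concept drift requires comparing predictions against ground-truth labels. For p-values, `scipy.stats.ks_2samp` implements the same test.

```python
from bisect import bisect_right

def ks_statistic(reference, current):
    """Two-sample KS statistic: the maximum gap between the two empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    gap = 0.0
    for v in ref + cur:  # the maximum gap occurs at one of the observed values
        f_ref = bisect_right(ref, v) / len(ref)  # share of reference values <= v
        f_cur = bisect_right(cur, v) / len(cur)
        gap = max(gap, abs(f_ref - f_cur))
    return gap

def check_drift(reference, current, threshold=0.2):
    """Return (statistic, drifted) for one feature; the threshold is a hypothetical choice."""
    stat = ks_statistic(reference, current)
    return stat, stat > threshold

training_ages = list(range(20, 60))                # hypothetical values seen at training time
production_ages = [a + 15 for a in range(20, 60)]  # the same feature, shifted in production
print(check_drift(training_ages, production_ages))
```

In a monitoring job, a check like this would run per feature on a schedule. An alert would then prompt investigation or retraining rather than trigger it automatically.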

By following these steps and establishing a robust deployment and monitoring process, you can ensure the successful deployment of your machine learning models into production. Monitoring performance, detecting drift, and continuously iterating on the model will allow you to provide accurate, reliable, and up-to-date predictions to solve real-world problems.

Staying Up-to-Date with the Latest Developments in Machine Learning

Machine learning is a rapidly evolving field with new techniques, algorithms, and advancements being introduced regularly. Staying up-to-date with the latest developments is crucial to remain competitive and leverage the most cutting-edge tools and methodologies. Here are some essential tips for staying informed and current in the field of machine learning.

1. Follow reputable sources: Regularly follow reputable sources such as research papers, academic journals, and publications devoted to machine learning. Top-tier conferences like NeurIPS, ICML, and ACL often release papers showcasing the latest breakthroughs. Stay updated on new publications and influential authors in the field.

2. Engage with the machine learning community: Join machine learning communities and forums, such as Kaggle, or the machine learning discussions on broader platforms like GitHub and Stack Overflow. Participate in discussions, ask questions, and share insights with fellow practitioners and researchers. Engaging with the community allows you to gain knowledge, exchange ideas, and collaborate on projects.

3. Attend conferences and webinars: Attend machine learning conferences, workshops, and webinars to learn about the latest research, trends, and applications. Conferences like NeurIPS, ICML, CVPR, and ICLR feature presentations and workshops by leading experts in the field. Additionally, webinars and online events hosted by organizations and experts provide insights into emerging topics and techniques.

4. Take online courses and tutorials: Take advantage of online platforms like Coursera, edX, and Udemy that offer machine learning courses. These courses often cover foundational concepts, advanced algorithms, and practical implementation. Regularly refresh your knowledge and skills by enrolling in courses or tutorials specific to the areas of machine learning that interest you.

5. Follow machine learning blogs and podcasts: Subscribe to respected machine learning blogs and podcasts that provide regular updates on the latest advancements, research papers, and trends. Notable blogs and podcasts include Towards Data Science, Machine Learning Mastery, the TWiML podcast, and the Data Skeptic podcast.

6. Participate in competitions and challenges: Engage in machine learning competitions on platforms like Kaggle, which expose you to real-world problems and cutting-edge techniques. Competitions encourage you to explore different approaches, learn from other participants, and push the boundaries of your knowledge.

7. Experiment with cloud-based machine learning services: Cloud-based machine learning services like Google Cloud AI, Microsoft Azure ML, and Amazon SageMaker offer pre-configured environments, datasets, and resources to explore and experiment with the latest tools and technologies. Using these platforms provides practical experience with state-of-the-art machine learning capabilities.

8. Explore research publications and preprint servers: Keep an eye on arXiv and other preprint servers where researchers often share their latest findings before formal publication. Accessing preprints allows you to stay updated on emerging research and discoveries in machine learning.

9. Participate in academic courses and workshops: Academic institutions and research organizations often offer courses and workshops on specific machine learning topics. Enrolling in these programs allows you to learn from experts in the field and gain hands-on experience with the latest methodologies.

10. Follow industry leaders and influencers: Follow leading researchers, experts, and industry leaders on platforms like Twitter and LinkedIn. These individuals often share insights, articles, and updates on their ongoing projects and research, keeping you informed about the latest advancements in the field.

By incorporating these practices into your routine, you can stay up-to-date with the rapid advancements in machine learning. Continuously learning and exploring new developments will enable you to apply the most relevant and state-of-the-art techniques, and stay at the forefront of the ever-evolving field of machine learning.

Common Challenges and Pitfalls in Machine Learning

Machine learning has the potential to be powerful, but practitioners often encounter several challenges and pitfalls. Understanding and addressing these challenges is crucial for building reliable and accurate machine learning models. Here are some common challenges and pitfalls in machine learning:

1. Insufficient or biased data: One of the key challenges is having insufficient or biased data. Limited or imbalanced datasets can lead to poor model performance and biased outcomes. It’s important to carefully collect and curate representative and diverse data to ensure the model’s generalizability and fairness.

2. Overfitting or underfitting: Overfitting occurs when the model performs well on the training data but fails to generalize to unseen data. Underfitting, on the other hand, occurs when the model is too simple and fails to capture the underlying patterns. Balancing the model’s complexity and its ability to generalize is crucial to avoid these pitfalls.

3. Feature selection and engineering: Identifying and selecting the most relevant features from the data can be challenging. The choice of features influences the model’s performance significantly. It’s important to have domain knowledge and creativity in feature engineering to extract meaningful representations from the data.

4. Hyperparameter tuning: Selecting the optimal values for hyperparameters is a challenge. Adjusting hyperparameters such as learning rate, batch size, or regularization strength is critical to optimize the model’s performance. Automated techniques like grid search or Bayesian optimization can help navigate the hyperparameter search space effectively.

5. Data preprocessing and cleaning: Preparing the data for analysis can be time-consuming and error-prone. Handling missing values, outliers, and inconsistent data requires careful attention. Data preprocessing techniques like imputation, outlier removal, and normalization need to be appropriately applied to ensure accurate and reliable model training.

6. Interpretability and explainability: Machine learning models, especially complex ones like deep neural networks, can lack interpretability. Understanding and interpreting the decisions made by the model is challenging. Techniques like feature importance analysis and surrogate models can be used to gain insights into the model’s inner workings.

7. Model selection: Choosing the right algorithm or model architecture for a given problem is not always straightforward. It requires an understanding of the strengths, weaknesses, and assumptions of various models. Experimentation, testing, and comparison of different models are crucial in identifying the best approach.

8. Computational resources and scalability: Training complex models on large datasets can demand substantial computational resources, including processing power and memory. Scaling up the training process and optimizing the code for efficiency become important considerations, especially when dealing with big data or deep learning.

9. Ethical and legal considerations: Machine learning models need to be designed and deployed responsibly, taking into account ethical and legal considerations. Ensuring fairness, transparency, and privacy are critical to avoid unintentional biases, discrimination, or misuse of sensitive data.

10. Model maintenance and updating: Machine learning models are not static and need to be regularly monitored and updated. Changes in the data distribution, concept drift, or evolving user needs require continuous model maintenance, fine-tuning, and retraining to ensure their ongoing accuracy and relevance.
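The first challenge, imbalanced data, is easy to underestimate because a model can score high accuracy while ignoring the minority class entirely. The sketch below uses a hypothetical dataset with 95 negatives and 5 positives. A trivial classifier that always predicts the majority class reaches 95% accuracy but 0% recall on the positive class. The sketch also computes "balanced" class weights, n_samples / (n_classes × class_count). This is the same heuristic scikit-learn uses for `class_weight="balanced"`. Many training algorithms accept such weights so that errors on rare classes cost more.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}

def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def recall(y_true, y_pred, positive=1):
    """Share of actual positives that the model predicted as positive."""
    hits = [p == positive for t, p in zip(y_true, y_pred) if t == positive]
    return sum(hits) / len(hits) if hits else 0.0

labels = [0] * 95 + [1] * 5          # hypothetical, heavily imbalanced labels
always_negative = [0] * len(labels)  # trivial majority-class "model"
print(accuracy(labels, always_negative))  # → 0.95
print(recall(labels, always_negative))    # → 0.0
print(balanced_class_weights(labels))
```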

Understanding and addressing these challenges and pitfalls is essential for successful machine learning projects. By being aware of these difficulties, practitioners can make informed decisions, apply appropriate techniques, and make continuous improvements to develop reliable and high-performing machine learning models.

Best Practices for Learning Machine Learning

Learning machine learning can be an exciting but challenging journey. To make the learning process efficient and effective, it is important to follow best practices that will help you acquire the necessary skills and knowledge. Here are some best practices for learning machine learning:

1. Build a strong foundation in mathematical concepts: Machine learning heavily relies on mathematical principles, including linear algebra, calculus, probability, and statistics. Develop a solid understanding of these concepts as they form the backbone of many machine learning algorithms and techniques.

2. Continuously expand your knowledge: Machine learning is a rapidly evolving field. Stay updated with the latest research papers, publications, and advancements in the field. Engage with the machine learning community through forums, conferences, and online platforms to gain insights into emerging trends and techniques.

3. Practice hands-on coding: Implementing machine learning algorithms and models is essential to solidify your understanding. Apply what you learn by working on practical projects and Kaggle competitions. Practice writing clean, optimized code using popular machine learning libraries and frameworks like scikit-learn, TensorFlow, or PyTorch.

4. Emphasize understanding over memorization: Focus on understanding the core concepts and principles rather than just memorizing algorithms or formulas. Be able to explain why certain techniques work and when they are appropriate. This deeper understanding will enable you to apply your knowledge effectively to new problems.

5. Experiment and explore: Machine learning is an iterative process. Experiment with different algorithms, hyperparameters, and techniques to gain insights and improve your models. Learn from failures and use them as opportunities for growth and understanding.

6. Start with simpler models: Begin by understanding and implementing simpler machine learning models before moving on to more complex ones. This gradual progression will help you grasp the fundamentals and build a solid foundation for understanding more advanced concepts.

7. Work on real-world projects: Apply machine learning to real-world problems to gain practical experience. This will help you understand the challenges faced in real-world scenarios and strengthen your problem-solving and critical-thinking skills.

8. Collaborate and engage with others: Participate in machine learning communities, forums, and meetups. Engage in discussions, ask questions, and contribute to the knowledge-sharing process. Collaborate with others on projects to gain different perspectives and enhance your learning experience.

9. Be patient and persistent: Learning machine learning takes time and practice. Be patient with yourself and persist through challenges and setbacks. Keep an open mindset and be willing to adapt and learn from your experiences.

10. Document and reflect: Keep track of your learning progress, projects, and experiments. Documenting your work helps reinforce your understanding and enables you to revisit and review concepts later. Reflect on your projects and experiments to identify areas for improvement and areas where you have made progress.

By adhering to these best practices, you can accelerate your learning journey in machine learning and cultivate the skills and knowledge necessary to succeed in this evolving field. Stay curious, remain dedicated to continuous learning, and embrace the challenges as opportunities for growth and improvement.