What Is Recall?

Recall is a fundamental metric used in machine learning to measure the effectiveness of a model in identifying all relevant instances of a specific class. It is also known as sensitivity or the true positive rate. Recall quantifies the ability of a model to correctly classify positive instances out of all the actual positive instances present in a dataset.

When working on classification problems, it is crucial to understand the concept of recall to effectively evaluate and compare different models. A binary classifier's predictions fall into four groups: true positives, false positives, true negatives, and false negatives. Recall depends only on the two possible outcomes for instances that are actually positive. A true positive (TP) is an instance correctly labeled as positive by the model. A false negative (FN) is an instance erroneously labeled as negative when it should be positive.

Recall can be calculated using the formula:

Recall = TP / (TP + FN)
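The formula translates directly into code. The following is a minimal sketch, assuming binary labels where `1` marks the positive class; the function name `recall` is illustrative. It counts TP and FN from paired lists of true and predicted labels. When there are no actual positives, recall is undefined, and this sketch returns 0.0 by convention (an assumption; other tools may warn or behave differently).

```python
def recall(y_true, y_pred, positive=1):
    """Recall = TP / (TP + FN) for binary labels."""
    # True positives: actually positive and predicted positive.
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    # False negatives: actually positive but predicted negative.
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    if tp + fn == 0:
        # No actual positives: recall is undefined; return 0.0 by convention here.
        return 0.0
    return tp / (tp + fn)


# Example: 4 actual positives, the model finds 3 of them.
y_true = [1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0]
print(recall(y_true, y_pred))  # 0.75
```

The false positive at index 4 has no effect on the result, because recall never looks at actual negatives.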

The recall value ranges from 0 to 1. A value of 1 indicates that the model successfully identifies all positive instances, and a value of 0 means that the model fails to identify any of them. A higher recall means the model misses fewer of the positive instances.

For example, consider a model that predicts whether an email is spam or not. Out of 100 spam emails, the model correctly identifies 90 as spam and incorrectly labels 10 as not spam. In this case, the model has a recall of 90%. This means that it successfully captures 90% of the spam emails.

Recall is particularly useful in scenarios where missing positive instances is more critical than having false positives. It is commonly employed in various applications such as fraud detection, disease diagnosis, face recognition, and information retrieval.

It is important to note that recall should not be considered in isolation: a model that labels every instance as positive trivially achieves a recall of 1. Recall should be read in combination with other metrics such as precision and accuracy, which together give a fuller picture of a model's performance.

Why Is Recall Important in Machine Learning?

Recall plays a crucial role in machine learning as it directly measures how many of the actual positive instances a model manages to find. Understanding why recall is important can help us grasp its significance in various applications and scenarios.

One of the primary reasons why recall is important is its role in detecting and minimizing false negatives. In certain domains like medical diagnosis or fraud detection, accurately identifying positive instances holds immense value. A high recall score indicates that the model is effectively capturing the positive instances, reducing the chances of missing critical information.

Consider a medical diagnosis scenario where a model is trained to identify malignant tumors. If the model has a low recall, it is missing a significant number of malignant tumors, which can have severe consequences for patients. On the other hand, a high recall means that most of the malignant tumors are correctly identified, allowing for timely medical intervention.

Furthermore, recall is crucial in tasks where the costs of false negatives outweigh the costs of false positives. For instance, in a cybersecurity system, where the objective is to detect malicious activities, missing an actual threat (false negative) can lead to severe consequences. In such cases, optimizing for higher recall becomes paramount to minimize the rate of false negatives.

In fraud detection applications, recall enables the identification of as many fraudulent instances as possible. It ensures that fraudulent activities are effectively flagged and dealt with, protecting individuals, organizations, and financial systems. A low recall score in this context indicates that a significant number of fraudulent transactions are going undetected, leading to potential financial losses.

Additionally, recall is important in information retrieval systems, where the objective is to retrieve relevant documents or data. In these applications, a high recall is desired to ensure that all pertinent information is retrieved, even if it means retrieving some non-relevant information as well. This ensures comprehensive retrieval and minimizes the chances of missing crucial data.
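In retrieval settings, recall is often reported for the top k results ("recall at k"): the number of relevant documents that appear in the first k results, divided by the total number of relevant documents. The sketch below assumes a ranked list of document IDs and a set of known relevant IDs; all IDs are hypothetical.

```python
def recall_at_k(ranked_ids, relevant_ids, k):
    """Fraction of all relevant documents that appear in the top-k results."""
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0  # convention for queries with no relevant documents
    retrieved = set(ranked_ids[:k])
    return len(retrieved & relevant) / len(relevant)


ranked = ["d3", "d7", "d1", "d9", "d4", "d2"]  # system output, best first
relevant = {"d1", "d2", "d3", "d8"}           # ground-truth relevant documents

for k in (1, 3, 6):
    print(k, recall_at_k(ranked, relevant, k))
```

Recall rises from 0.25 at k=1 to 0.5 at k=3 and 0.75 at k=6, even though the longer lists include irrelevant documents. It never reaches 1 here because `d8` is not retrieved at all.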

How Is Recall Calculated?

Recall, also known as sensitivity or the true positive rate, is calculated using a simple formula. It quantifies the ability of a machine learning model to correctly identify positive instances out of all the actual positive instances in a dataset.

The formula to calculate recall is:

Recall = True Positives / (True Positives + False Negatives)

To better understand how recall is calculated, let’s break down the components of the formula:

- True Positives (TP): This refers to the instances that are correctly predicted as positive by the model. For example, in a binary classification problem where the positive class represents a specific condition, true positives are the instances that are correctly identified as having that condition.

- False Negatives (FN): These are the instances that are incorrectly predicted as negative by the model, even though they are actual positive instances. In other words, false negatives are the instances that the model fails to correctly classify as positive.

By dividing the number of true positives by the sum of true positives and false negatives, we obtain the recall score, which represents the proportion of correctly identified positive instances out of all actual positive instances in the dataset.

For example, let’s consider a model that predicts whether an email is spam or not. Out of 100 spam emails, the model correctly identifies 90 as spam (true positives) and incorrectly labels 10 as not spam (false negatives). Using the recall formula, we can calculate the recall as:

Recall = 90 / (90 + 10) = 0.9 or 90%

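A recall score of 0.9 indicates that the model successfully captures 90% of the spam emails. Whether that is good enough depends on the application: a 10% miss rate may be acceptable for a spam filter but far too high for screening a serious disease.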
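The spam calculation can be reproduced from label lists. In this sketch `1` means spam. The 50 legitimate emails added in the second half are hypothetical and serve only to show that recall ignores actual negatives, so wrongly flagging some of them leaves the score unchanged.

```python
def recall(y_true, y_pred):
    """Recall = TP / (TP + FN), with 1 as the positive (spam) label."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp / (tp + fn)


# 100 spam emails: 90 caught (TP), 10 missed (FN).
y_true = [1] * 100
y_pred = [1] * 90 + [0] * 10
print(recall(y_true, y_pred))  # 0.9

# Hypothetical extra data: 50 legitimate emails, 20 of them wrongly flagged.
y_true_all = y_true + [0] * 50
y_pred_all = y_pred + [1] * 20 + [0] * 30
print(recall(y_true_all, y_pred_all))  # 0.9, unchanged
```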

It’s important to note that recall focuses only on how well the positive class is identified. It should be evaluated alongside other performance metrics such as precision, accuracy, and F1 score to get a comprehensive understanding of a machine learning model’s performance.

The Relationship Between Recall and Precision

Recall and precision are two important metrics used in machine learning to evaluate the performance of classification models. While recall focuses on the ability to identify positive instances, precision measures the accuracy of positive predictions. Understanding the relationship between recall and precision is essential in assessing the overall effectiveness of a model.

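Recall and precision typically trade off against each other: for a given model, moving the decision threshold to improve one of these metrics usually worsens the other. They are not strictly inversely related, though, and a genuinely better model can improve both at once. Let’s delve deeper into these metrics to understand their significance and how they can impact model performance.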

Recall: Recall, also known as sensitivity or true positive rate, measures the proportion of actual positive instances that the model correctly identifies. A high recall signifies that the model effectively captures positive instances, minimizing false negatives. However, a high recall does not guarantee a low rate of false positives.

Precision: Precision measures the accuracy of positive predictions made by the model. It calculates the proportion of correctly predicted positive instances from all instances that the model predicted as positive. A high precision score indicates that the positive predictions made by the model are mostly accurate, minimizing false positives. However, a high precision does not assure a low rate of false negatives.

The relationship between recall and precision can be understood with a hypothetical example. Consider a model that predicts whether an email is spam or not. A high recall implies that the model successfully identifies most spam emails, ensuring that few actual spam emails are missed. However, if the model has a low precision, it means that it also misclassifies some non-spam emails as spam, resulting in false positives. On the other hand, a high precision implies that the model accurately identifies spam emails with minimal false positives, but it may miss some actual spam emails, leading to false negatives.
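The two extremes of this trade-off can be made concrete. The sketch below uses a hypothetical inbox of 10 spam and 90 legitimate emails and two hypothetical classifiers: one that flags every email as spam, and a cautious one that flags only 5 emails, all of them genuine spam.

```python
def precision_recall(y_true, y_pred):
    """Return (precision, recall) for binary labels with 1 as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical inbox: 10 spam (1) followed by 90 legitimate (0) emails.
y_true = [1] * 10 + [0] * 90

flag_all = [1] * 100           # flags everything: misses nothing, mostly wrong
cautious = [1] * 5 + [0] * 95  # flags 5 emails, all genuinely spam

print(precision_recall(y_true, flag_all))  # (0.1, 1.0)
print(precision_recall(y_true, cautious))  # (1.0, 0.5)
```

Flagging everything yields perfect recall with 10% precision, while the cautious model is never wrong but misses half of the spam.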

It’s important to strike a balance between recall and precision based on the specific requirements of the application. In scenarios where missing positive instances is more critical, a higher recall is desired, even if it means compromising on precision. For example, in a medical diagnosis application, it is crucial to have a high recall to avoid missing any true positive cases, even if it means having some false positives.

Conversely, in situations where minimizing false positives is more important, a higher precision is preferred, even if it means sacrificing some recall. For instance, in a spam email filtering system, precision is crucial to ensure that few non-spam emails are mistakenly flagged as spam, even if it means a few spam emails may go undetected.

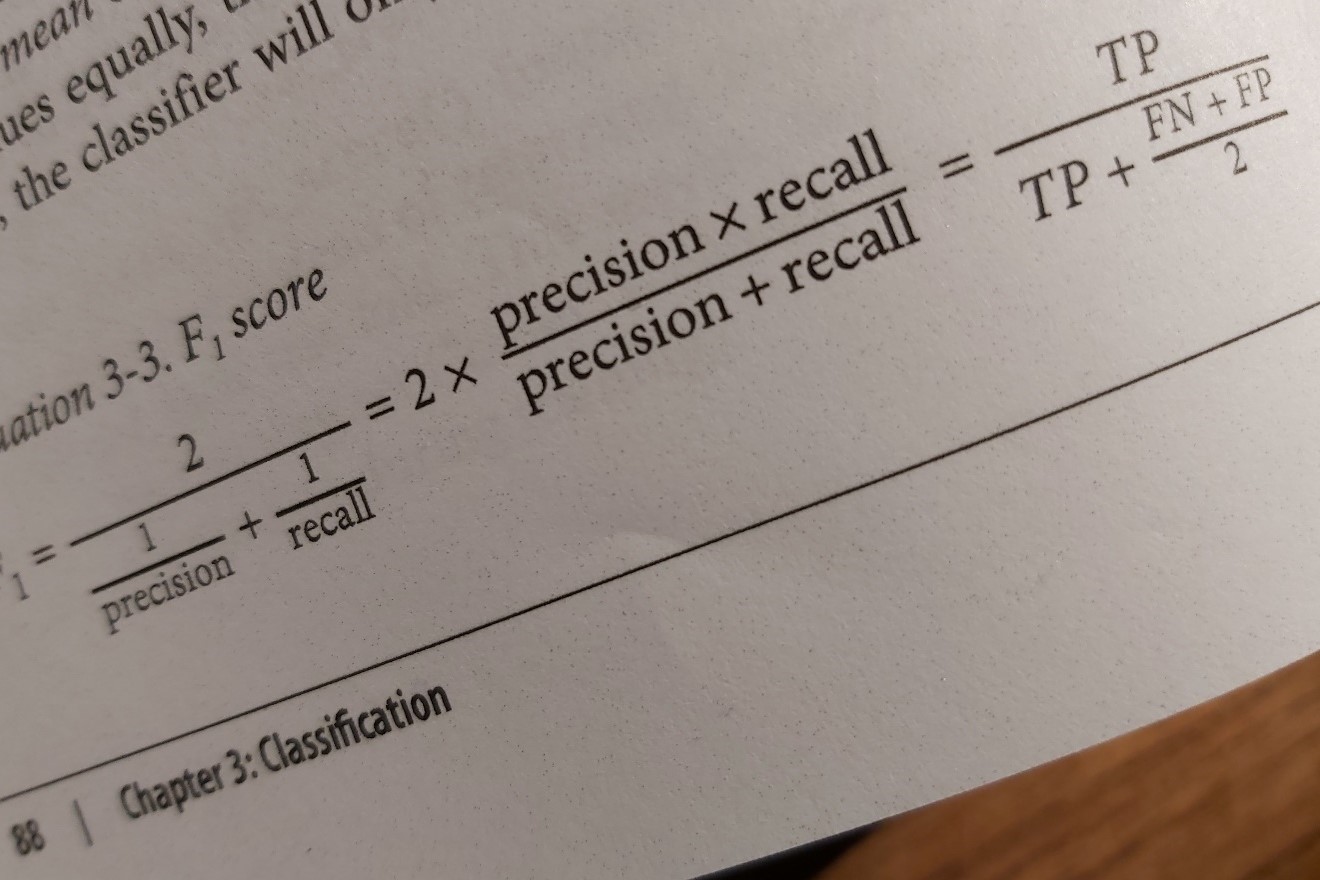

It is worth noting that the F1 score, the harmonic mean of precision and recall (F1 = 2 × Precision × Recall / (Precision + Recall)), is often used as a balanced metric to evaluate the overall performance of a model. Because the harmonic mean is pulled toward the smaller of the two values, a model cannot earn a high F1 score by excelling at only one of them.
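A minimal sketch of the F1 formula, returning 0.0 when both inputs are zero (a common convention, assumed here). Comparing it with the arithmetic mean shows why the harmonic mean is used.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# A "flag everything" classifier: perfect recall, 10% precision.
print(round(f1_score(0.1, 1.0), 4))  # 0.1818 (the arithmetic mean would be 0.55)
# A balanced classifier.
print(round(f1_score(0.9, 0.9), 4))  # 0.9
```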

Balancing Recall and Precision

When working with classification models in machine learning, finding the right balance between recall and precision is crucial. The optimal balance depends on the specific requirements of the application and the relative importance of minimizing false negatives and false positives. Choosing that balance deliberately helps the model meet the objectives of the application.

There are several strategies that can be employed to balance recall and precision:

1. Adjusting the Classification Threshold: The classification threshold determines the point at which a predicted probability is considered positive or negative. By adjusting this threshold, you can control the trade-off between recall and precision. Lowering the threshold increases recall but may decrease precision, while raising the threshold can improve precision but may reduce recall. It’s essential to experiment with different threshold values and evaluate the impact on the desired outcome.

2. Collecting More Data: Insufficient or unrepresentative training data can prevent the model from separating the classes well, which usually shows up as poor recall, poor precision, or both. Collecting more diverse and representative data can help improve the model’s ability to capture both positive and negative instances accurately. More data can provide a better understanding of the underlying patterns and help the model make more informed predictions, resulting in a better balance between recall and precision.

3. Feature Engineering: Analyzing and selecting relevant features can greatly impact the performance of the model. By identifying informative features and discarding redundant or noisy ones, you can improve the model’s ability to accurately capture positive instances, thereby enhancing recall and precision. Careful feature engineering can help achieve a better trade-off between these metrics.

4. Using Different Evaluation Metrics: In addition to recall and precision, there are other evaluation metrics such as accuracy, F1 score, and area under the precision-recall curve (AUPRC) that can provide additional insights into the model’s performance. These metrics consider both false negatives and false positives, enabling a more comprehensive assessment of the model’s effectiveness. Considering multiple metrics can help identify the right balance between recall and precision.

5. Employing Ensemble Methods: Ensemble methods like bagging, boosting, or stacking combine the predictions of multiple models to generate a final prediction. They can often help improve the balance between recall and precision by leveraging the strengths of different models. Ensemble methods can be effective in reducing the impact of individual models that may have a skewed trade-off between these metrics.
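The first strategy, adjusting the classification threshold, can be sketched by sweeping a few thresholds over a model's predicted probabilities. The scores and labels below are hypothetical, and an instance is predicted positive when its score is greater than or equal to the threshold. Precision is set to 0.0 when nothing is predicted positive, a convention chosen for this sketch.

```python
def precision_recall_at(scores, y_true, threshold):
    """Predict positive when score >= threshold, then compute precision and recall."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical predicted probabilities and true labels (5 positives).
scores = [0.95, 0.85, 0.70, 0.60, 0.45, 0.40, 0.30, 0.20, 0.10, 0.05]
y_true = [1, 1, 0, 1, 1, 0, 0, 1, 0, 0]

for threshold in (0.9, 0.5, 0.25):
    p, r = precision_recall_at(scores, y_true, threshold)
    print(f"threshold={threshold:.2f}  precision={p:.2f}  recall={r:.2f}")
```

On this data, lowering the threshold from 0.9 to 0.5 to 0.25 raises recall from 0.20 to 0.60 to 0.80, while precision falls from 1.00 to 0.75 to 0.57.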

It’s important to note that the optimal balance between recall and precision may vary depending on the specific application and the associated costs of false negatives and false positives. Understanding the requirements and priorities of the problem at hand is crucial in making informed decisions to achieve the desired balance between these important metrics.
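One way to make these costs explicit is to assign a cost to each false negative and each false positive and pick the threshold that minimizes the total. The sketch below tries every observed score as a candidate threshold. The scores, labels, and cost values are all hypothetical, and a brute-force search like this is only practical for small datasets.

```python
def total_cost(scores, y_true, threshold, cost_fn, cost_fp):
    """Total error cost when predicting positive for score >= threshold."""
    fn = sum(1 for s, t in zip(scores, y_true) if t == 1 and s < threshold)
    fp = sum(1 for s, t in zip(scores, y_true) if t == 0 and s >= threshold)
    return cost_fn * fn + cost_fp * fp


def best_threshold(scores, y_true, cost_fn, cost_fp):
    """Try each observed score as a threshold and keep the cheapest one."""
    candidates = sorted(set(scores))
    return min(candidates, key=lambda th: total_cost(scores, y_true, th, cost_fn, cost_fp))


scores = [0.95, 0.85, 0.70, 0.60, 0.45, 0.40, 0.30, 0.20, 0.10, 0.05]
y_true = [1, 1, 0, 1, 1, 0, 0, 1, 0, 0]

# Hypothetical costs: a missed positive is 10x as costly as a false alarm.
print(best_threshold(scores, y_true, cost_fn=10, cost_fp=1))  # 0.2
# Hypothetical equal costs.
print(best_threshold(scores, y_true, cost_fn=1, cost_fp=1))   # 0.45
```

When false negatives are expensive, the chosen threshold drops to 0.2, which catches all five positives at the price of three false alarms. With equal costs it settles at 0.45, accepting one missed positive to cut the false alarms to one.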

How to Improve Recall in Machine Learning Models

Improving recall is essential in many machine learning applications, especially in tasks where identifying all positive instances is crucial. By focusing on techniques that enhance the model’s ability to capture positive instances, you can effectively improve recall. Here are some strategies to consider:

1. Address Class Imbalance: Class imbalance occurs when one class significantly outnumbers the other. In such cases, the model may have a bias towards the majority class, leading to lower recall for the minority class. Techniques like oversampling the minority class, undersampling the majority class, or using synthetic data generation methods like SMOTE can help to balance the class distribution and improve recall.

2. Fine-Tune Model Threshold: The classification threshold determines the point at which a predicted probability is considered positive or negative. By adjusting this threshold, you can prioritize recall over precision. Lowering the threshold makes the model more sensitive to positive instances, increasing recall. However, this can also result in more false positives. Finding the optimal threshold requires careful consideration of the trade-off between recall and precision based on the specific application.

3. Use Ensemble Methods: Ensemble methods combine predictions from multiple models to make final predictions. By leveraging the strengths of different models, ensemble methods can boost recall. Techniques like bagging, boosting, or stacking can be employed to improve the model’s ability to capture positive instances and enhance recall.

4. Feature Engineering: Careful feature engineering can significantly impact recall. Analyze and select features that are highly informative for the positive class. Discard irrelevant or redundant features that do not contribute to the model’s ability to capture positive instances. Feature engineering techniques such as dimensionality reduction, feature selection, or creating new features based on domain knowledge can enhance the model’s discriminative power and improve recall.

5. Model Selection and Tuning: The choice of the machine learning algorithm can influence recall. Some algorithms may inherently have better recall performance than others for specific tasks. Experiment with different algorithms to identify the most suitable one for improving recall. Additionally, hyperparameter tuning can fine-tune the model’s performance. Consider optimizing settings that have a direct impact on recall, such as class weights, regularization strength, or the decision threshold.

6. Error Analysis and Feedback Loop: Regularly analyze the model’s errors, particularly false negatives. Understand the patterns and characteristics of these instances to identify areas of improvement. Refine the model based on this analysis, such as revisiting the feature selection process, collecting more informative data, or making adjustments to the model architecture. Continuously iterate and improve the model based on feedback to enhance recall over time.

7. Cross-Validation and Evaluation: Validate the model’s performance using appropriate evaluation techniques such as cross-validation. This helps confirm that an observed improvement in recall is not just the result of a lucky train/test split. Use additional metrics like precision, F1 score, or area under the receiver operating characteristic curve (AUROC) to assess the overall performance of the model and ensure a balanced and reliable evaluation.
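Strategy 2, fine-tuning the threshold, is often framed as a recall target: find the highest threshold that still reaches the required recall. Because raising the threshold can only reduce the number of positive predictions, this choice keeps false positives as low as possible for that recall. The function name, scores, and labels below are hypothetical, and at least one positive label is assumed.

```python
def threshold_for_recall(scores, y_true, target_recall):
    """Highest threshold (predict positive when score >= threshold) meeting the recall target.

    Assumes at least one positive label. Returns None if no threshold reaches the target.
    """
    n_pos = sum(y_true)
    # Scan from the strictest threshold downward; recall can only grow as it drops.
    for th in sorted(set(scores), reverse=True):
        tp = sum(1 for s, t in zip(scores, y_true) if t == 1 and s >= th)
        if tp / n_pos >= target_recall:
            return th
    return None


scores = [0.95, 0.85, 0.70, 0.60, 0.45, 0.40, 0.30, 0.20, 0.10, 0.05]
y_true = [1, 1, 0, 1, 1, 0, 0, 1, 0, 0]

print(threshold_for_recall(scores, y_true, 0.8))  # 0.45
print(threshold_for_recall(scores, y_true, 1.0))  # 0.2
```

In practice the threshold should be chosen on a validation set and then checked on held-out data, since a threshold tuned on the test set will overstate recall.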

By implementing these strategies and continuously refining the model, you can effectively improve recall in machine learning models, leading to better identification of positive instances and enhanced performance in various applications.
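Strategy 1, addressing class imbalance, can be sketched with plain random oversampling, which duplicates randomly chosen minority-class rows until each class is as large as the biggest one. This is a simpler technique than SMOTE, which instead synthesizes new points by interpolating between a minority sample and its nearest minority-class neighbors. The function name and data are hypothetical.

```python
import random
from collections import Counter


def random_oversample(X, y, seed=0):
    """Duplicate random rows of smaller classes until every class matches the largest."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())
    X_out, y_out = list(X), list(y)
    for label, count in counts.items():
        rows = [x for x, lab in zip(X, y) if lab == label]
        for _ in range(target - count):
            X_out.append(rng.choice(rows))  # copy an existing row of this class
            y_out.append(label)
    return X_out, y_out


# Hypothetical imbalanced data: 8 negatives, 2 positives.
X = [[0.1], [0.2], [0.3], [0.4], [0.5], [0.6], [0.7], [0.8], [0.9], [1.0]]
y = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]

X_bal, y_bal = random_oversample(X, y)
print(Counter(y_bal))  # Counter({0: 8, 1: 8})
```

Resampling should be applied to the training split only, so that recall is still measured on data with the original class distribution.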

Real-World Examples of Recall in Machine Learning

Recall plays a critical role in many real-world applications of machine learning, where accurately identifying positive instances is of utmost importance. Let’s explore some practical examples where recall is utilized:

1. Disease Diagnosis: In medical diagnosis, recall is crucial for identifying patients with specific health conditions. For instance, in detecting cancer from medical images or analyzing patient symptoms, high recall ensures that the model correctly identifies individuals who need further evaluation or treatment. Missing positive cases (false negatives) can delay diagnosis and impact patient outcomes.

2. Fraud Detection: In financial transactions, recall is vital for detecting fraudulent activities. By capturing as many instances of fraudulent transactions as possible, a high recall ensures that illegitimate activities are identified and prevented. Minimizing false negatives is crucial to mitigate financial losses and maintain the security of transactions.

3. Sentiment Analysis: In sentiment analysis of customer reviews or social media data, recall is computed per sentiment class. High recall for the negative class, for example, means that few complaints go unnoticed. Capturing the relevant sentiment expressions is critical for brand reputation management, customer satisfaction analysis, and targeted marketing campaigns.

4. Information Retrieval: In search engines and recommendation systems, recall plays a crucial role in retrieving relevant information. By maximizing recall, these systems ensure that users find all the pertinent results, even if it means including some non-relevant ones. This helps enhance user experience and improves the overall effectiveness of information retrieval systems.

5. Email Filtering: In email spam filtering, recall is essential for capturing as many spam emails as possible. Maintaining a high recall value ensures that the majority of spam emails are correctly classified, preventing users from receiving unwanted and potentially harmful content. However, striking a balance with precision is important to avoid misclassifying legitimate emails as spam.

6. Object Detection: In computer vision tasks such as object detection or face recognition, high recall means that few instances of the desired object, or few faces, go undetected. Reducing false negatives lets the model find more of the relevant objects or faces present in an image, which is crucial for applications such as surveillance, image analysis, or biometric security systems.

7. Natural Language Processing: In natural language processing tasks like named entity recognition or information extraction, recall measures how many of the entities or facts present in the text the model actually extracts. High recall means little of the desired information is missed, which benefits downstream NLP applications such as question answering systems, chatbots, or text summarization.
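For named entity recognition, recall is usually computed over entity spans rather than individual tokens. The sketch below assumes exact-match scoring, a common convention in which a predicted entity counts only if its boundaries and type both match a gold entity. The span tuples `(start_token, end_token, type)` are hypothetical.

```python
def entity_recall(gold, predicted):
    """Exact-match entity recall: share of gold spans that also appear in the predictions."""
    gold_set, pred_set = set(gold), set(predicted)
    if not gold_set:
        return 0.0  # convention when the text contains no gold entities
    return len(gold_set & pred_set) / len(gold_set)


# Hypothetical gold and predicted spans for one sentence.
gold = [(0, 2, "PER"), (5, 6, "ORG"), (9, 10, "LOC"), (12, 14, "DATE")]
predicted = [(0, 2, "PER"), (5, 6, "ORG"), (9, 10, "ORG"), (16, 17, "LOC")]

print(entity_recall(gold, predicted))  # 0.5
```

The span `(9, 10)` is found with the wrong type, so it does not count under exact matching, and the spurious `(16, 17, "LOC")` prediction lowers precision but not recall.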

These real-world examples demonstrate the significance of recall in machine learning across diverse fields. By focusing on improving recall in these applications, we can ensure accurate and reliable identification of positive instances and enhance the overall performance and effectiveness of machine learning models.