What Is Noise In Data?

Noise in data refers to any random or unwanted variation, errors, or inconsistencies that are present in a dataset. It is the irregularity in the data that deviates from the expected pattern or signal. Noise can arise from various sources such as measurement errors, data entry mistakes, sensor malfunctions, sampling errors, or external factors that affect the data collection process.

In the context of machine learning, noise can greatly impact the performance and accuracy of models. When noise is present in the data, it can lead to incorrect interpretations, biased predictions, and reduced model effectiveness. Noise can mask the underlying patterns and relationships within the data, making it difficult for machine learning algorithms to learn and make accurate predictions.

Noise can manifest in different forms and can vary in its characteristics. Random noise refers to the unpredictable and irregular fluctuations in data points that occur due to various reasons. Systematic noise, on the other hand, follows a specific pattern or has a consistent bias that affects the entire dataset. Modeling noise is specific to the model itself and can occur when the model’s assumptions or functional form do not align perfectly with the true underlying data distribution. Measurement noise is introduced during the process of collecting or measuring the data and can be caused by instrumentation limitations or external environmental factors.

Understanding and identifying noise in data is crucial for building reliable and accurate machine learning models. By recognizing the sources and types of noise, data scientists can implement strategies to minimize its impact. This may involve cleaning and preprocessing the data to eliminate outliers and erroneous data points, as well as selecting appropriate algorithms and techniques that are robust to noise. Additionally, evaluating the performance of models in the presence of noise and conducting error analysis can provide insights into the noise level and its effect on model predictions.

The Impact of Noise on Machine Learning Models

Noise in data can have a significant impact on the performance and reliability of machine learning models. When noise is present, it introduces unwanted variations and inaccuracies that hinder the learning process and the model’s ability to make accurate predictions.

One of the major impacts of noise is the disturbance it causes in the patterns and relationships within the data. Machine learning algorithms rely on identifying these patterns to learn and generalize from the data. However, noise can distort these patterns, leading to incorrect interpretations and unreliable predictions.

Noise can also introduce bias in the data, which in turn affects the model’s training and prediction process. For example, systematic noise, which follows a specific pattern or bias, can influence the model to learn and make predictions based on this biased information. This can result in skewed results that do not reflect the true underlying patterns.

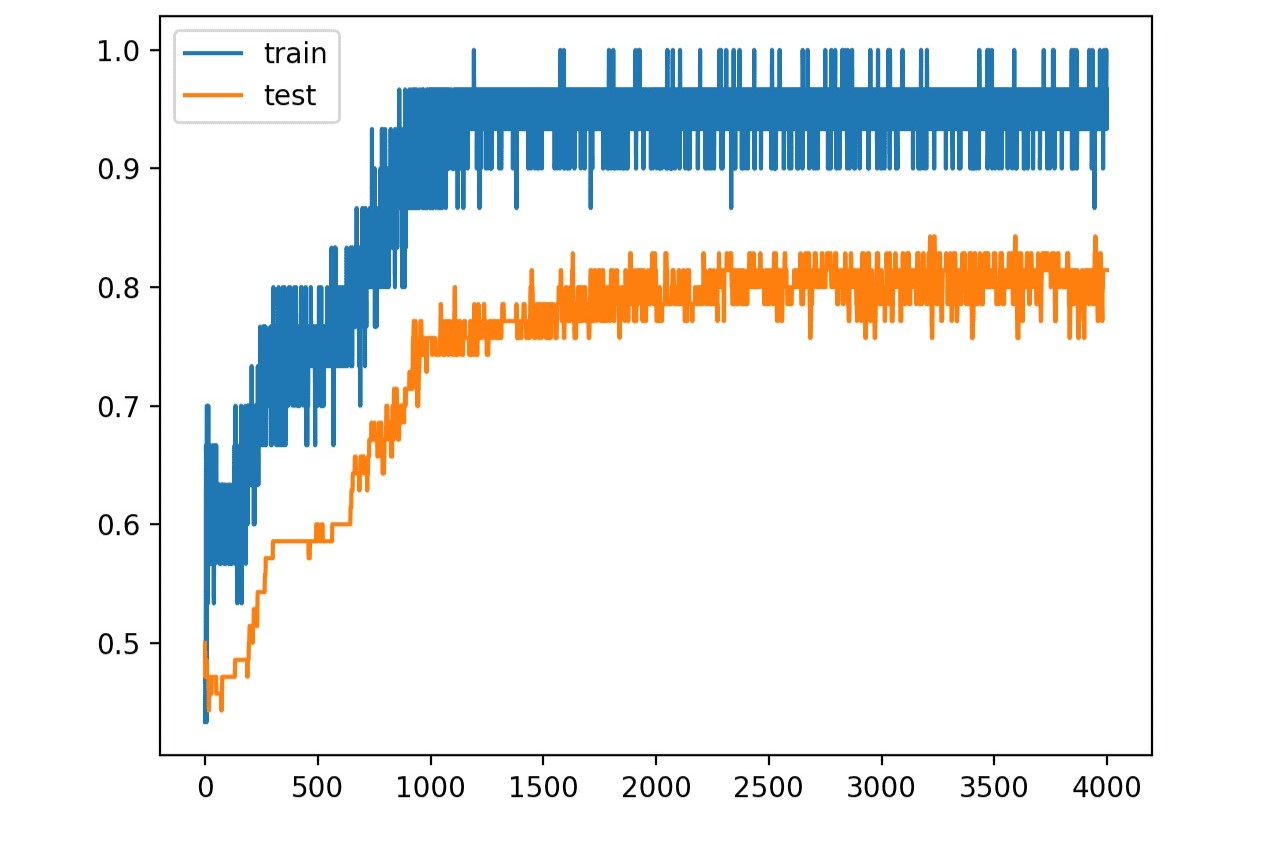

Another consequence of noise is an increase in the complexity of the model. When noise is present, the model tends to overfit the training data by trying to fit every instance, including the noisy ones. This can lead to poor generalization and the inability to accurately predict unseen data.

Moreover, noise can negatively impact the model’s performance metrics, such as accuracy, precision, recall, and F1 score. The presence of noise can cause misclassifications and errors, reducing the overall performance of the model.

It is important for data scientists to understand the impact of noise on machine learning models and take appropriate measures to mitigate its effects. This may involve utilizing robust algorithms and techniques that are less prone to noise, implementing data cleaning and preprocessing methods to remove outliers and erroneous data points, and evaluating the model’s performance in the presence of noise.

Types of Noise in Data

Noise in data can manifest in various forms and can have different characteristics. Understanding the types of noise is crucial for effectively dealing with its impact on machine learning models. Here are some common types of noise found in data:

- Random Noise: Random noise refers to the unpredictable and irregular fluctuations in data points. It occurs due to various factors, such as measurement errors, environmental factors, or inherent variability in the data. Random noise can introduce unpredictable variations that obscure the underlying patterns and relationships in the dataset.

- Systematic Noise: Systematic noise follows a specific pattern or has a consistent bias that affects the entire dataset. It can arise from systematic errors in measurement, data collection, or other external factors. Systematic noise can distort the true signal in the data and lead to biased interpretations and predictions.

- Modeling Noise: Modeling noise is specific to the model itself. It occurs when the assumptions or functional form of the model do not perfectly align with the true underlying data distribution. Modeling noise can lead to deviations between the model’s predictions and the actual observations, impacting its accuracy and reliability.

- Measurement Noise: Measurement noise is introduced during the process of collecting or measuring the data. It can arise due to limitations in the measurement instruments, errors in data entry, or external environmental factors influencing the measurements. Measurement noise can distort the true values of the variables, making it challenging to obtain accurate and reliable data.

Identifying and understanding the different types of noise in data is essential for developing strategies to mitigate its effects. Data preprocessing, cleaning techniques, and robust modeling approaches can help address noise-related challenges in machine learning tasks. Additionally, evaluating the noise level and its impact on model performance through error analysis can provide insights into the reliability and accuracy of the predictions.

Random Noise

Random noise is a type of noise in data that refers to unpredictable and irregular fluctuations in data points. It is often caused by factors such as measurement errors, inherent variability in the data, or environmental influences. Random noise introduces unpredictable variations that can obscure the underlying patterns and relationships in the dataset.

Random noise can significantly impact the performance of machine learning models. It can lead to inaccurate interpretations and unreliable predictions. When noise is present, it can disrupt the learning process of the models by introducing inconsistencies and deviations from the true signal in the data.

In the context of machine learning, random noise can manifest in various ways. It can create outliers, which are data points that significantly deviate from the majority of the dataset. These outliers can have a disproportionate impact on the model’s training process, causing it to learn incorrect patterns from the data.

Additionally, random noise can affect the overall balance and distribution of the data. It can introduce fluctuations in the feature values, making it challenging for the model to identify and learn the true underlying patterns. Random noise can also lead to misclassifications and errors, reducing the accuracy and performance of the model.

To mitigate the impact of random noise on machine learning models, various techniques can be employed. Data cleaning and preprocessing methods, such as outlier detection and removal, can help eliminate the influence of noisy data points. Feature engineering methods, such as transforming or normalizing features, can also help reduce the impact of random noise.

Furthermore, using robust machine learning algorithms that are less sensitive to random noise can be beneficial. Robust algorithms are designed to handle noisy data and can provide more reliable predictions even in the presence of random fluctuations.

Overall, understanding and addressing random noise is essential for building accurate and reliable machine learning models. By effectively dealing with random noise, data scientists can uncover the true underlying patterns in the data and make more informed predictions.

Systematic Noise

Systematic noise is a type of noise in data that follows a specific pattern or exhibits a consistent bias across the entire dataset. It is often caused by systematic errors in the measurement process, data collection biases, or external factors that introduce a consistent distortion into the data. Systematic noise can have a significant impact on machine learning models and can affect their performance and reliability.

One of the main characteristics of systematic noise is that it introduces a consistent bias in the data. This bias can lead to erroneous interpretations and predictions if not properly accounted for. For example, if there is a systematic noise that consistently overestimates the values of a certain feature, the model may learn to make predictions based on this biased information, resulting in inaccurate results.

Systematic noise can also distort the true patterns and relationships within the data. It can mask the underlying signal and make it difficult for machine learning algorithms to learn and accurately predict. The presence of systematic noise can impact the model’s ability to generalize from the training data and may lead to overfitting or underfitting, where the model fails to capture the true patterns or adapts too closely to the noise.

To address the impact of systematic noise, it is important to understand its presence and nature. Analyzing the data and conducting exploratory data analysis can help identify potential sources of systematic noise and detect any patterns or biases. Once identified, appropriate preprocessing techniques can be applied to mitigate the effects of systematic noise.

Data augmentation techniques, such as oversampling or undersampling, can be used to balance the data distribution and compensate for systematic biases. Feature engineering methods, such as scaling or transforming features, can also help reduce the influence of systematic noise and improve the model’s performance.

Moreover, selecting robust machine learning algorithms that are less susceptible to systematic noise can be beneficial. Robust algorithms are designed to handle data variations and outliers, making them more resilient to biases in the data. Training the model with a diverse and representative dataset can also help minimize the impact of systematic noise.

Addressing systematic noise is crucial to ensure the accuracy and reliability of machine learning models. By understanding the nature of systematic noise and employing appropriate techniques, data scientists can mitigate its impact and uncover the true patterns and relationships within the data.

Modeling Noise

Modeling noise refers to the noise that is specific to the model itself. It occurs when the assumptions or functional form of the model do not perfectly align with the true underlying data distribution. Modeling noise can lead to deviations between the model’s predictions and the actual observations, impacting its accuracy and reliability.

There are several factors that can contribute to modeling noise. One common reason is the use of simplified or approximate models that do not fully capture the complexity of the underlying data. If the model fails to capture the true relationships and patterns in the data, it may introduce noise into its predictions.

Another source of modeling noise is the violation of assumptions made by the model. Many machine learning algorithms are based on specific assumptions about the data, such as linearity or normality. If these assumptions are violated, it can lead to modeling noise and inaccurate predictions.

Modeling noise can also arise due to the limitations of the training data. If the training data is not representative of the entire population or lacks diversity, the model may be biased and unable to capture all the variations in the data. This can result in modeling noise and decreased accuracy.

To mitigate the impact of modeling noise, several strategies can be employed. First, it is important to evaluate the appropriateness of the chosen model for the specific problem at hand. Complex problems may require more sophisticated models that can capture the intricacies of the data.

Regularization techniques can also be used to reduce modeling noise. Regularization adds a penalty term to the model’s objective function, discouraging overfitting and promoting simpler models that are less prone to noise.

Additionally, ensembling methods, such as bagging or boosting, can help reduce modeling noise. By combining the predictions of multiple models, ensembles can mitigate the impact of individual models’ noise and improve overall performance.

Furthermore, conducting thorough model evaluation and validation can help identify and diagnose modeling noise. Techniques such as cross-validation and error analysis can provide insights into the sources of noise and potential improvements.

Overall, addressing modeling noise is essential for building accurate and reliable machine learning models. By recognizing its presence and employing appropriate strategies, data scientists can reduce the impact of modeling noise and improve the model’s performance.

Measurement Noise

Measurement noise refers to the noise that is introduced during the process of collecting or measuring the data. It can arise due to various factors such as limitations in the measurement instruments, errors in data entry or recording, or external environmental factors that affect the measurements. Measurement noise can have a significant impact on the accuracy and reliability of the data, as well as the performance of machine learning models.

A common example of measurement noise is sensor noise, which occurs when the sensors used to collect data introduce inaccuracies or variations in the measurements. These inaccuracies can arise from factors such as sensor drift, calibration errors, or interference from other signals.

Measurement noise can also occur during data entry or recording. Human error or inconsistencies in the data collection process can lead to noise in the recorded values. Typos, misinterpretations, or mistakes in recording data can introduce additional variations and distortions in the dataset.

External factors can also contribute to measurement noise. Environmental conditions, such as temperature, humidity, or electromagnetic interference, can affect the measurements and introduce noise. These external factors can be difficult to control and can lead to inconsistent or inaccurate data.

The presence of measurement noise can have several implications for machine learning models. It can lead to incorrect interpretations and predictions, as the noise introduces deviations from the true values or patterns in the data. Measurement noise can also affect the distribution and balance of the data, making it challenging for models to learn the underlying relationships and make accurate predictions.

To mitigate the impact of measurement noise, various techniques can be employed. Data cleaning and preprocessing methods, such as outlier detection and removal, can help eliminate noisy data points caused by measurement noise. It is important to carefully review the data and address any inconsistencies or errors before using it for model training or analysis.

Additionally, techniques like signal filtering or smoothing can help reduce measurement noise. These techniques aim to remove or reduce the high-frequency variations or random fluctuations caused by noise, allowing the underlying patterns to become more apparent.

Moreover, conducting rigorous quality control measures during the data collection process can help reduce measurement noise. Regular calibration of instruments, proper training of data collectors, and double-checking the recorded data can minimize errors and inconsistencies.

It is important for data scientists to be aware of measurement noise and its impact on the data and models. By understanding the sources and characteristics of measurement noise, appropriate steps can be taken to improve the quality of the data and enhance the accuracy and reliability of the machine learning models.

Reducing Noise in Data

Noise in data can have a detrimental impact on the accuracy and reliability of machine learning models. To improve the performance of models and enhance their predictive capabilities, it is important to implement strategies to reduce noise in the data. Here are some techniques that can be used to reduce noise:

- Data Cleaning: Data cleaning involves identifying and removing outliers, errors, and inconsistencies from the dataset. Outliers can significantly affect the model’s training process and overall performance. By detecting and removing these noisy data points, the model can focus on learning patterns and relationships that are more representative of the true data.

- Normalization: Normalizing the data can help reduce the impact of noise by scaling the values to a standard range. This can improve the performance of certain machine learning algorithms, making them more robust to variations caused by noise.

- Feature Selection and Engineering: Selecting relevant features and engineering new meaningful features can help reduce the influence of noise in the dataset. By focusing on the most informative features and creating new ones that capture the underlying patterns, the model can be trained on a more meaningful representation of the data.

- Ensemble Learning: Ensemble learning involves combining the predictions of multiple models to improve overall performance and reduce the impact of noise. By aggregating the outputs of multiple models, the noise present in individual models can be minimized, resulting in more accurate predictions.

- Regularization: Regularization techniques can help mitigate the effects of noise by adding a penalty term to the model’s objective function. This penalty term discourages overly complex models, reducing the likelihood of overfitting and making the model more robust to noise.

- Cross-Validation: Cross-validation can provide a reliable estimate of a model’s performance and help identify the extent of noise in the dataset. By evaluating the model’s performance on different subsets of the data, cross-validation can give insights into how well the model generalizes and how it performs in the presence of noise.

- Data Augmentation: Data augmentation techniques, such as oversampling or undersampling, can help balance the data and increase its diversity. By generating new synthetic data points or selectively reducing the number of samples, data augmentation can reduce the impact of noise and improve the model’s performance.

By employing these techniques, data scientists can effectively reduce the impact of noise in the data and improve the accuracy and reliability of their machine learning models. It is important to keep in mind that the choice of technique may depend on the specific characteristics of the dataset and the underlying problem being addressed.

Cleaning Techniques for Noisy Data

Noisy data can significantly impact the performance and reliability of machine learning models. Cleaning and preprocessing the data is essential to reduce the influence of noise and improve the accuracy of the models. Here are some effective techniques for cleaning noisy data:

- Outlier Detection and Removal: Outliers are data points that significantly deviate from the majority of the dataset. They can introduce noise and bias the model’s training process. Techniques such as statistical methods, distance-based approaches, or clustering can be used to detect and remove outliers from the data.

- Missing Data Imputation: Missing data is a common challenge in real-world datasets. Missing values can introduce noise and affect the model’s performance. Various techniques, such as mean imputation, median imputation, or model-based imputation, can be used to fill in the missing values with reasonable estimates.

- Noise Reduction Techniques: Filtering techniques can be employed to reduce noise in the data. Low-pass filters, such as moving averages or median filters, can help smooth out high-frequency noise and retain the underlying signal. High-pass filters can be used to suppress low-frequency noise.

- Standardization and Normalization: Standardizing and normalizing the data can help reduce the impact of noise. Standardization scales the data to have zero mean and unit variance, ensuring that all features contribute equally to the model. Normalization rescales the data to a specific range, such as [0,1] or [-1,1], making it easier for the model to learn and compare different features.

- Feature Selection: Selecting relevant features can help eliminate noise in the data. Removing irrelevant or redundant features reduces the dimensionality and focuses the model on the most informative attributes. Feature selection techniques, such as recursive feature elimination or statistical methods, can be employed to identify and retain the most significant features.

- Data Transformation: Transforming the data can help reduce noise and improve its distribution. Techniques such as logarithmic transformation, power transformation, or Box-Cox transformation can help achieve a more symmetrical and Gaussian-like distribution, making it easier for the model to learn and make accurate predictions.

- Validation and Cross-Validation: Validation techniques, such as hold-out validation or cross-validation, can assess the performance of the model and identify if it is overfitting or underfitting the data. By using properly validated models, the impact of noise can be minimized, and the model’s generalization capabilities can be improved.

It is important to carefully choose and apply the appropriate cleaning techniques based on the specific characteristics of the dataset and the requirements of the machine learning task. By effectively cleaning the noisy data, data scientists can enhance the performance and reliability of their models, leading to more accurate predictions and valuable insights.

Error Analysis and Noise Evaluation

Error analysis and noise evaluation are critical steps in assessing the impact of noise on machine learning models and identifying potential areas for improvement. Through error analysis, data scientists can gain insights into the sources and characteristics of noise, as well as evaluate the performance of the models in the presence of noise.

Error analysis involves examining the discrepancies between the predicted outputs of the models and the actual ground truth values. By analyzing the errors, data scientists can identify patterns and trends that are influenced by noise. This analysis helps in understanding the specific cases or situations where the models struggle due to the presence of noise.

Error analysis can be conducted at various levels, including individual data points, subsets of the data, or particular classes or categories. By focusing on the most challenging or problematic instances, data scientists can gain a deeper understanding of the impact of noise on model performance and identify potential strategies for improvement.

Noise evaluation involves quantifying the level of noise present in the data and assessing its impact on model outcomes. This evaluation can be done through various measures, including accuracy, precision, recall, F1 score, or other performance metrics. By evaluating the model’s performance on noise-free and noisy subsets of the data, data scientists can determine the extent to which noise affects the model’s predictions.

To evaluate noise, it is important to have a reliable noise-free baseline against which the model’s performance can be compared. This can be achieved by using high-quality, carefully curated datasets or by utilizing expert knowledge to identify instances of ground truth values.

During the error analysis and noise evaluation process, data scientists can gain valuable insights that help guide the next steps in improving the models. This can involve refining data cleaning techniques, exploring new preprocessing methods, or selecting more robust algorithms that are less sensitive to noise.

Additionally, error analysis and noise evaluation can inform the collection of additional data or targeted data acquisition strategies to address the specific sources or types of noise identified. By considering the limitations and biases in the data collection process, data scientists can take steps to ensure the data is more representative and reliable.

Continuous error analysis and noise evaluation are crucial to monitor and assess the performance of machine learning models over time. This process helps identify any changes in the noise characteristics or the model’s sensitivity to noise, enabling data scientists to adapt and refine their approaches accordingly.

By conducting thorough error analysis and noise evaluation, data scientists can gain deeper insights into the impact of noise on their machine learning models and make informed decisions to improve their effectiveness and enhance their performance.