The Definition of Latency

Latency is a term used in many fields, including computer networking, gaming, and telecommunications. In networking, it refers to the time it takes for data to travel from its source to its destination. It is often quoted as a round trip instead: the delay between the moment a request is sent and the moment a response is received.

To understand latency more precisely, consider an analogy. Imagine you are sending a letter to a friend who lives far away. The time your letter takes to reach your friend corresponds to one-way latency, and the time until their reply arrives back with you corresponds to round-trip latency. Similarly, in the digital world, latency represents the time it takes for information to travel from one location to another.

Latency is usually expressed in milliseconds (ms) and varies with several factors, such as the distance between the sender and the receiver, the quality of the network connection, and the processing capabilities of the devices involved. Note that latency is not determined by network connectivity alone: the devices and servers taking part in the communication also contribute to it.

High latency is especially noticeable in real-time applications such as video conferencing, online gaming, and streaming services, where it shows up as lag or buffering and leads to a poor user experience.

Low latency, on the other hand, is essential wherever immediate responses matter. In financial trading or online auctions, for example, a few milliseconds (or, in high-frequency trading, microseconds) can decide whose order or bid arrives first. A low-latency connection delivers each message sooner, which shortens delays and makes applications more responsive.

Latency is therefore a central aspect of network performance with a direct effect on user experience. By monitoring and reducing it, businesses and users can keep data transmission smooth and efficient, which improves productivity and satisfaction and reduces frustration.

The Factors that Impact Latency

Latency can be affected by various factors that contribute to the overall time delay in data transmission. Understanding these factors can help identify and address potential causes of high latency. Let’s explore some of the key factors that impact latency:

- Distance: One of the primary factors influencing latency is the physical distance between the sender and the receiver. Signals travel at a finite speed (in optical fiber, roughly two-thirds of the speed of light in a vacuum), so the greater the distance, the longer data takes to arrive and the higher the latency. This is especially relevant in global networks, where data must traverse many routers and long cable runs.

- Network Congestion: When the network experiences heavy traffic, data transmission slows down, much like rush hour traffic on a highway. As more data packets compete for limited network resources, they wait longer in router queues, and latency increases.

- Bandwidth: Although bandwidth and latency are often confused, they are distinct concepts. Bandwidth refers to the capacity of a network to transmit data, while latency is the time delay. However, limited bandwidth can indirectly affect latency: if a network has insufficient bandwidth to handle the incoming traffic, packets queue up and latency increases.

- Network Equipment: The quality and performance of network equipment, such as routers, switches, and cables, can influence latency. Outdated or faulty equipment may introduce additional latency into the data transmission process. Upgrading and optimizing network infrastructure can help reduce latency.

- Server Response Time: The efficiency of the server in processing and responding to requests also plays a role in latency. Slow server response times can contribute to higher latency. Optimizing server configurations and ensuring adequate server resources can help minimize latency caused by server delays.

- Data Routing: The path that data takes from the sender to the receiver can affect latency. Inefficient routes, such as paths that detour far from the direct line between the endpoints, or congested routes can increase latency. Routing protocols and technologies that favor faster paths can optimize data transmission and reduce latency.
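To put the distance factor in numbers, here is a minimal Python sketch that estimates propagation delay over a fiber path. It assumes signals travel at roughly 200,000 km/s in optical fiber (about two-thirds of the speed of light in a vacuum) and ignores routing detours, queuing, and processing, so the result is a lower bound rather than a prediction. The function names and distances are illustrative.

```python
# Assumption: signal speed in optical fiber is roughly 2/3 of c.
# The exact value depends on the fiber, so treat results as estimates.
FIBER_SPEED_KM_PER_S = 200_000


def propagation_delay_ms(distance_km: float) -> float:
    """One-way propagation delay in milliseconds over a straight fiber run."""
    return distance_km / FIBER_SPEED_KM_PER_S * 1000


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip time: the signal covers the distance twice."""
    return 2 * propagation_delay_ms(distance_km)


if __name__ == "__main__":
    for km in (50, 1_000, 6_000, 15_000):
        print(f"{km:>6} km: one-way >= {propagation_delay_ms(km):.2f} ms, "
              f"RTT >= {min_rtt_ms(km):.2f} ms")
```

Under this assumption, a 6,000 km path cannot deliver an RTT below about 60 ms no matter how much bandwidth is available, and real cable routes are usually longer than the straight-line distance, so measured values come out higher.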

By considering these factors and implementing appropriate strategies, businesses and network administrators can work towards minimizing latency. This, in turn, improves overall network performance, enhances user experience, and ensures smoother data transmission.

Understanding Round Trip Time (RTT)

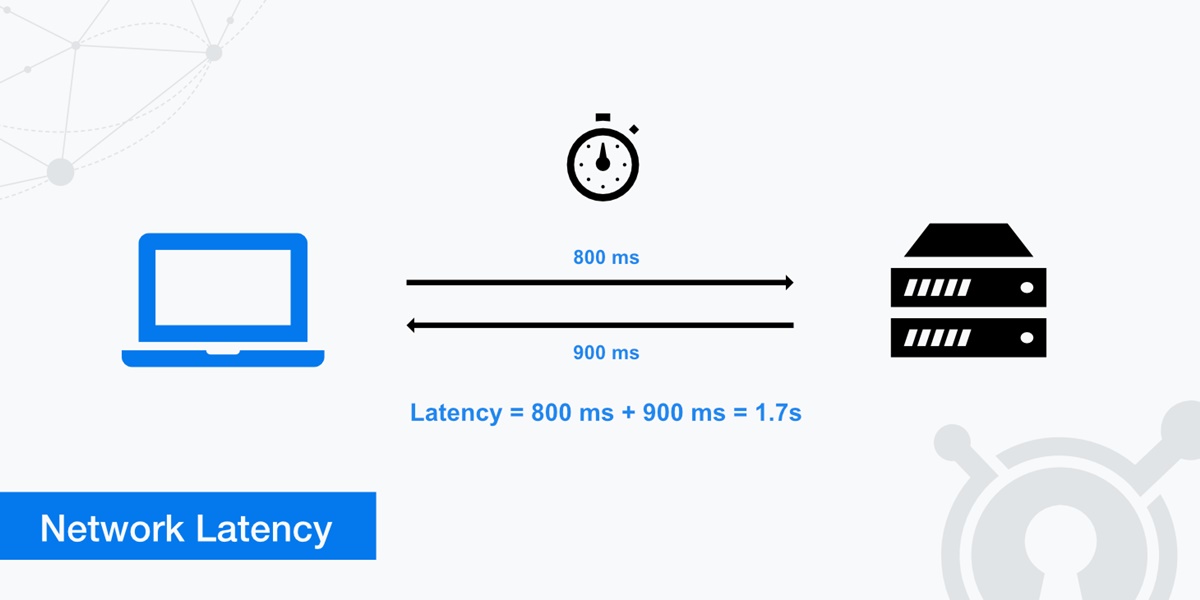

In the context of network latency, Round Trip Time (RTT) is a key metric that measures the time it takes for a data packet to travel from the sender to the receiver and for the response to travel back. RTT is typically expressed in milliseconds (ms).

To understand RTT, let’s consider an example. Suppose you send a request to a web server to load a webpage. The web server receives your request, processes it, and sends a response back to your device. The time it takes for the request to reach the server and the response to return to your device is the RTT.

RTT includes various components that contribute to the overall latency. These components include:

- Propagation Delay: Propagation delay is the time it takes for a signal to travel through a medium, such as a fiber optic cable or wireless connection. It is influenced by the distance between the sender and the receiver and the speed at which the signal travels.

- Transmission Delay: Transmission delay is the time it takes to push all of a packet’s bits onto the link. It equals the packet size divided by the bandwidth of the link, so larger packets and slower links increase it.

- Processing Delay: Processing delay occurs when the device or server needs time to process the received data and generate a response. This delay is influenced by the processing capabilities and workload of the device or server.

- Queuing Delay: Queuing delay can occur when data packets need to wait in a queue before being transmitted. It is influenced by network congestion and the priority assigned to different types of data packets.
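The four components can be combined into a simple one-way delay estimate, with the transmission delay computed as packet size divided by link bandwidth. The sketch below is a minimal Python illustration for a single link; the processing and queuing values are hypothetical placeholders rather than measurements, the fiber speed is an assumed approximation, and a real path crosses several links with different characteristics.

```python
from dataclasses import dataclass

FIBER_SPEED_KM_PER_S = 200_000  # assumption: roughly 2/3 of the speed of light


@dataclass
class LinkDelays:
    distance_km: float    # length of the link
    bandwidth_bps: float  # link capacity in bits per second
    processing_ms: float  # hypothetical time to process the packet
    queuing_ms: float     # hypothetical time spent waiting in a queue


def transmission_delay_ms(packet_bytes: int, bandwidth_bps: float) -> float:
    """Time to push every bit of the packet onto the link."""
    return packet_bytes * 8 / bandwidth_bps * 1000


def one_way_delay_ms(packet_bytes: int, link: LinkDelays) -> float:
    """Sum of propagation, transmission, processing, and queuing delay."""
    propagation = link.distance_km / FIBER_SPEED_KM_PER_S * 1000
    transmission = transmission_delay_ms(packet_bytes, link.bandwidth_bps)
    return propagation + transmission + link.processing_ms + link.queuing_ms


if __name__ == "__main__":
    link = LinkDelays(distance_km=1_000, bandwidth_bps=100e6,
                      processing_ms=0.5, queuing_ms=2.0)
    # A 1,500-byte packet: 12,000 bits / 100 Mbit/s = 0.12 ms of transmission.
    print(f"one-way: {one_way_delay_ms(1500, link):.2f} ms")
```

With these assumed numbers the estimate is about 7.62 ms one way: 5 ms of propagation, 0.12 ms of transmission, and 2.5 ms of processing and queuing. An RTT adds the return path, which may have different delays.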

RTT is an important metric in analyzing network performance and diagnosing latency issues. It provides valuable insights into the time it takes for data to travel between two points in the network. By measuring RTT and comparing it against desired thresholds, network administrators can identify potential latency problems and take appropriate actions to improve performance.

Many network diagnostic tools, such as ping and traceroute, can measure RTT. These tools send specially crafted packets to a target destination and measure the time it takes for the packets to reach the destination and return. The measured RTT can help identify latency hotspots, troubleshoot network connectivity issues, and optimize network configurations.
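Ping relies on ICMP, and sending raw ICMP packets from your own program often requires elevated privileges. A common unprivileged approximation is to time a TCP connection setup: a client’s `connect()` call returns once the server’s SYN-ACK arrives, which takes roughly one round trip. The Python sketch below does this with the standard `socket` module; it assumes the target has a listening TCP service on the given port, and the result includes a little operating-system overhead, so treat it as an estimate rather than a ping replacement.

```python
import socket
import time


def tcp_connect_rtt_ms(host: str, port: int, timeout: float = 2.0) -> float:
    """Estimate RTT by timing a TCP handshake to host:port, in milliseconds.

    connect() returns once the server's SYN-ACK arrives, so the elapsed time
    is roughly one round trip plus a little local overhead.
    """
    # Resolve the name first so DNS lookup time is not counted in the result.
    family, socktype, proto, _, addr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM)[0]
    with socket.socket(family, socktype, proto) as sock:
        sock.settimeout(timeout)
        start = time.perf_counter()
        sock.connect(addr)
        elapsed = time.perf_counter() - start
    return elapsed * 1000
```

For example, `tcp_connect_rtt_ms("example.com", 443)` would time a handshake with a public HTTPS server (substitute a host you are permitted to probe). Taking the median of several runs gives a steadier figure than a single sample.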

By understanding RTT and its underlying components, network professionals can effectively manage and minimize latency, ensuring efficient data transmission and a seamless user experience.

The Role of Network Latency in Internet Connections

Network latency plays a crucial role in determining the quality and responsiveness of internet connections. Whether you’re browsing the web, streaming videos, or engaging in online gaming, latency can significantly impact your online experience.

One of the key ways network latency affects internet connections is through website loading times. When you enter a website URL or click on a link, your computer sends a request to the website’s server, which then sends back the requested data. Loading a page usually takes several of these exchanges (looking up the domain name, setting up the connection, and requesting the page along with its images and scripts), and each one pays the latency again. Higher latency therefore results in slower loading times, while lower latency lets pages render sooner.
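Because a page load involves several sequential exchanges before any content arrives, latency is paid several times over. The sketch below counts round trips for a fresh HTTPS request under simplified assumptions: one round trip for an uncached DNS lookup, one for the TCP handshake, one for a TLS 1.3 handshake (TLS 1.2 typically needs two), and one for the HTTP request itself. It treats the DNS resolver as being as far away as the web server and ignores bandwidth, server processing, and connection reuse, so it is a rough lower bound, not a model of any particular browser.

```python
def time_to_first_byte_ms(rtt_ms: float, dns_cached: bool = False,
                          tls_round_trips: int = 1) -> float:
    """Rough lower bound on time to the first byte of a new HTTPS request.

    Assumed round trips: DNS (skipped if cached), TCP handshake,
    TLS handshake (1 for TLS 1.3, typically 2 for TLS 1.2), HTTP request.
    """
    round_trips = (0 if dns_cached else 1) + 1 + tls_round_trips + 1
    return round_trips * rtt_ms


if __name__ == "__main__":
    for rtt in (10, 50, 150):
        print(f"RTT {rtt:>3} ms -> at least {time_to_first_byte_ms(rtt):.0f} ms")
```

At a 150 ms RTT, four round trips add up to at least 600 ms before the first byte of the page arrives, which is why DNS caching and nearby servers make pages feel noticeably faster.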

Latency also influences online streaming services. When you’re streaming a video, the data is transmitted to your device in small chunks, each of which must arrive before it is needed. If delays (caused by high latency, limited bandwidth, or both) make a chunk arrive late, the video pauses to buffer. Lower latency helps keep chunks arriving on time for a smoother streaming experience.

Online gamers are particularly sensitive to latency, as it directly affects their ability to react to and interact with the game world in real time. In competitive games, even a few milliseconds of delay can mean the difference between winning and losing a match. High latency results in lag, where the game responds slowly to player inputs, making gameplay frustrating and less enjoyable. Low latency, on the other hand, ensures a more responsive and immersive gaming experience.

Another area where network latency is crucial is in VoIP (Voice over Internet Protocol) and video conferencing. With these communication technologies, real-time conversations are transmitted over the internet. High latency can cause delays in audio or video transmission, resulting in disjointed and unreliable communication. Low latency is vital for clear and seamless communication in voice and video conversations.

It is important to note that network latency is not solely dependent on the quality of the internet service provider (ISP). While ISPs do have a role to play in providing stable connections, latency can be influenced by various factors, such as network congestion, the distance between the user and the server, and the quality of the individual devices and networks involved.

Understanding the role of network latency in internet connections allows users to make informed decisions when selecting an ISP and optimizing their network setup. By choosing an ISP with low latency and optimizing their home network configurations, users can enjoy faster website loading times, smooth streaming experiences, responsive gaming, and uninterrupted voice and video calls.

Bandwidth vs. Latency: What’s the Difference?

Bandwidth and latency are two important concepts in the world of networking and internet connections. While they are related, they refer to different aspects of network performance. Understanding the difference between bandwidth and latency can help clarify their roles in determining the quality of your internet experience.

Bandwidth refers to the maximum amount of data that can be transmitted over a network connection in a given time period. It is typically measured in bits per second (bps) or megabits per second (Mbps). Think of bandwidth as a highway with a certain number of lanes. The wider the highway, the more vehicles can pass through simultaneously, so more data can be moved each second.

On the other hand, latency refers to the time delay in data transmission between two points in a network. It is measured in milliseconds (ms) and represents the time it takes for a data packet to travel from the source to the destination, or, when quoted as round-trip time, to the destination and back. Latency is influenced by factors such as the physical distance between the sender and receiver, network congestion, and the processing capabilities of the devices involved.

To better understand the difference between the two, consider an example. Imagine you are transferring a large file from one computer to another. Bandwidth determines how quickly the bulk of the file can be moved: a higher bandwidth completes the transfer in less time. Latency, by contrast, determines the delay between the moment you initiate the transfer and the moment the first bit of data reaches its destination. It reflects the responsiveness of the network and adds to the overall transfer time, especially when the transfer involves multiple requests and responses.
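The interplay in this example can be approximated with a simple formula: transfer time ≈ number of round trips × RTT + file size ÷ bandwidth. The Python sketch below applies it under simplifying assumptions (no TCP slow start, no packet loss, full bandwidth available from the first byte), so it shows the trend rather than exact times. The file sizes and link values are illustrative.

```python
def transfer_time_s(size_bytes: float, bandwidth_bps: float,
                    rtt_ms: float, round_trips: int = 1) -> float:
    """Approximate fetch time: latency cost plus time to serialize the bits."""
    latency_s = round_trips * rtt_ms / 1000
    serialization_s = size_bytes * 8 / bandwidth_bps
    return latency_s + serialization_s


if __name__ == "__main__":
    small, large = 10_000, 1_000_000_000  # 10 kB and 1 GB
    for bw_mbps, rtt in ((100, 20), (1000, 20), (100, 200)):
        bw = bw_mbps * 1e6
        print(f"{bw_mbps:>4} Mbit/s, RTT {rtt:>3} ms: "
              f"10 kB in {transfer_time_s(small, bw, rtt) * 1000:.1f} ms, "
              f"1 GB in {transfer_time_s(large, bw, rtt):.1f} s")
```

For the 10 kB file, a tenfold bandwidth increase barely changes the result (about 20.8 ms versus 20.1 ms), while raising the RTT from 20 ms to 200 ms makes it nearly ten times slower. For the 1 GB file the opposite holds. Small, chatty transfers are latency-bound; large bulk transfers are bandwidth-bound.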

In essence, bandwidth determines how much data can be transmitted per second, while latency determines how long each piece of data takes to arrive. Both factors are important for a smooth and efficient network experience, but they address different aspects of the connection.

When it comes to certain network activities, such as browsing the web or streaming videos, both bandwidth and latency play vital roles. Higher bandwidth allows for faster data downloading, enabling quicker webpage loading and smoother streaming. Meanwhile, low latency makes interactions with websites more responsive and shortens the wait before a stream starts playing.

It’s worth noting that bandwidth and latency are separate properties, although they interact: a saturated link, for example, builds up queues that add latency. A high-bandwidth connection does not guarantee low latency, and a low-latency connection does not guarantee high bandwidth. For an optimal internet experience, a connection needs both sufficient bandwidth for fast data transfer and low latency for responsive interactions.

How Latency Affects Online Gaming

Latency plays a critical role in the world of online gaming. It can significantly impact the gameplay experience, making the difference between a smooth, enjoyable session and a frustrating, lag-filled one. Understanding how latency affects online gaming can help players optimize their connections and enhance their gaming experience.

In gaming, latency is often reported as “ping”: the round-trip time between the player’s device and the game server, which determines how long a player’s actions take to register in the game world. Even a few milliseconds of delay can make a noticeable difference. High latency results in lag, where the game responds slowly to player inputs. This lag can lead to delayed movements, disrupted animations, and unresponsive controls, all of which can affect gameplay precision and reaction time.

One of the primary impacts of latency in online gaming is related to the speed of player actions. For instance, in fast-paced shooter games, players need to react quickly to changing situations and make split-second decisions. High latency can cause delays between when a player pulls the trigger and when the shot is registered in the game. This delay can result in missed shots, hits that look successful on screen but are not registered, and an overall frustrating experience.

Latency also affects real-time interactions in multiplayer games, where players rely on swift communication and coordination. High latency creates discrepancies between what each player sees, so other players may see someone in a different position from where they actually are. This makes gameplay feel incoherent and renders cooperative or competitive strategies less effective.
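A quick calculation shows why positions drift apart. If another player’s updates reach you after a one-way delay, you see where they were rather than where they are, and the gap is their speed multiplied by that delay. The sketch below uses a hypothetical running speed and deliberately ignores the prediction and interpolation techniques that many games use to hide this gap.

```python
def position_error_m(speed_m_per_s: float, one_way_latency_ms: float) -> float:
    """Distance a moving player covers before their update reaches you."""
    return speed_m_per_s * one_way_latency_ms / 1000


if __name__ == "__main__":
    run_speed = 6.0  # hypothetical running speed in metres per second
    for latency in (20, 50, 150):
        print(f"{latency:>3} ms behind -> "
              f"{position_error_m(run_speed, latency):.2f} m off")
```

At the assumed 6 m/s, a 150 ms delay puts the displayed position about 0.9 m behind the real one, which is easily enough to miss a target.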

Timing-based games are affected as well. In fighting games or rhythm games, for example, precise timing is essential. Even slight delays caused by latency can throw off the timing and disrupt the intended gameplay experience. This can be frustrating for players who rely on split-second reactions and precise execution of game mechanics.

It’s important to note that latency is not solely dependent on the player’s internet connection. Other factors, such as the game server’s performance, network congestion, and the quality of the connections of other players in the game, can also contribute to latency. Therefore, it’s essential for game developers to optimize their servers and for players to consider their own network conditions and server locations to minimize latency.

To mitigate the impact of latency in online gaming, players can take several measures. One option is to choose servers that are geographically closer to their location, as shorter distances can reduce latency. Additionally, ensuring a stable and reliable internet connection, utilizing wired connections instead of wireless, and closing unnecessary background applications can help minimize latency.
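Choosing the closest server can be based on measurement rather than geography alone. The sketch below picks the server with the lowest median RTT from a set of samples; the server names and sample values are hypothetical, and in practice the samples would come from a ping-style measurement. The median is used so that one unusually slow sample does not disqualify an otherwise good server.

```python
from statistics import median


def best_server(rtt_samples_ms: dict[str, list[float]]) -> str:
    """Return the server whose median round-trip time is lowest."""
    return min(rtt_samples_ms, key=lambda name: median(rtt_samples_ms[name]))


if __name__ == "__main__":
    # Hypothetical measurements (milliseconds) for three game regions.
    samples = {
        "eu-west": [38.0, 41.5, 39.2, 120.0],  # one spike, still fast overall
        "us-east": [95.1, 97.3, 96.0, 94.8],
        "ap-south": [180.2, 176.9, 182.4, 179.0],
    }
    print(best_server(samples))  # eu-west
```

Here "eu-west" wins with a median of about 40 ms even though its single worst sample is slower than every "us-east" sample.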

By understanding how latency affects online gaming and implementing strategies to minimize its impact, players can enhance their gameplay experience, improve their performance, and fully enjoy the immersive world of online gaming.

The Effect of Latency on Video Streaming

Latency has a significant impact on the quality of video streaming experiences. When it comes to streaming platforms like Netflix, YouTube, or Hulu, latency can determine whether users enjoy smooth playback or face frustrating buffering delays. Understanding how latency affects video streaming can help users optimize their setups for seamless and uninterrupted streaming.

Latency in video streaming refers to the delay between when a video is requested and when it starts playing on the user’s device. High latency, especially combined with limited bandwidth, can also lead to buffering, where the video pauses to load more content and disrupts the viewing experience. These problems are more noticeable during high-resolution playback, where more data must be transferred for every second of video.

One of the primary effects of latency on video streaming is the time it takes for the video to start playing after hitting the play button. High latency can result in a longer wait time before the video begins, causing frustration and impatience for viewers. Conversely, low latency allows for faster video start times, providing a more seamless and engaging streaming experience.

Latency also impacts the occurrence of buffering during video playback. Buffering occurs when the video playback temporarily stops to allow more data to be loaded. High latency can prolong buffering times, significantly interrupting the flow of the video. This can lead to a disjointed viewing experience and hinder the ability to fully engage with the content.

In addition to buffering, delivery delays can affect video quality. When chunks arrive too slowly, whether because of high latency, limited bandwidth, or both, adaptive video players lower the resolution or bitrate so that each chunk can still be downloaded in time. This can reduce image quality, causing pixelation and reduced sharpness. Lower latency helps the player maintain high-quality playback, providing viewers with a visually pleasing and immersive streaming experience.
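The bitrate decision can be sketched as a simple rule: pick the highest bitrate whose next chunk, including the request’s round trip, downloads comfortably faster than it plays. The Python below is a deliberately simplified illustration; the bitrate ladder, chunk length, and safety margin are hypothetical, and real players also weigh buffer level and throughput history.

```python
# Hypothetical bitrate ladder in bits per second, highest first.
BITRATES_BPS = [8_000_000, 5_000_000, 2_500_000, 1_000_000, 400_000]


def chunk_fetch_s(bitrate_bps: float, chunk_s: float,
                  throughput_bps: float, rtt_ms: float) -> float:
    """Request round trip plus time to download one chunk at this bitrate."""
    chunk_bits = bitrate_bps * chunk_s
    return rtt_ms / 1000 + chunk_bits / throughput_bps


def choose_bitrate(throughput_bps: float, rtt_ms: float,
                   chunk_s: float = 4.0, safety: float = 0.8) -> int:
    """Highest bitrate whose chunk downloads in under `safety` x its duration."""
    for bitrate in BITRATES_BPS:
        if chunk_fetch_s(bitrate, chunk_s, throughput_bps, rtt_ms) < safety * chunk_s:
            return bitrate
    return BITRATES_BPS[-1]  # fall back to the lowest rung


if __name__ == "__main__":
    print(choose_bitrate(throughput_bps=12e6, rtt_ms=30))               # 8000000
    print(choose_bitrate(throughput_bps=6e6, rtt_ms=30))                # 2500000
    print(choose_bitrate(throughput_bps=12e6, rtt_ms=300, chunk_s=1.0))  # 5000000
```

The last call shows the latency effect: with short 1-second chunks, paying a 300 ms round trip on every request is enough to push the choice down a rung even though throughput is unchanged.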

Furthermore, latency affects the responsiveness of seeking or skipping through video content. When users jump to a specific point in the video, the player often has to request new data for that position, so high latency delays the moment playback resumes at the chosen timestamp. Low latency makes seeking feel immediate, allowing for efficient navigation within the video content.

Reducing latency in video streaming can be achieved by optimizing the internet connection and network setup. Using a high-speed and reliable internet connection, ensuring a stable Wi-Fi or wired connection, and minimizing network congestion can all help to reduce latency. Additionally, selecting video streaming servers that are geographically closer to the user can improve streaming performance and minimize delays.

Ultimately, understanding the effects of latency on video streaming and implementing strategies to reduce it are vital for a seamless streaming experience. By minimizing latency, users can enjoy uninterrupted playback, higher-quality video streaming, and a more immersive viewing experience.

Latency in Cloud Computing

Latency plays a significant role in cloud computing, which refers to the delivery of computing services, including storage, processing power, and applications, over the internet. Understanding latency’s impact on cloud computing is essential for optimizing performance and ensuring a seamless user experience.

Cloud computing involves the use of remote servers that are located in data centers, often geographically distant from the end-users. Latency comes into play when data is transferred between the user’s device and the cloud servers. The time taken for data to travel back and forth can significantly affect the performance and responsiveness of cloud-based applications and services.

One major factor leading to latency in cloud computing is the physical distance between the user and the cloud server. The longer the distance, the higher the latency. For example, if a user is accessing cloud services hosted in a data center located in another country, the latency will be greater compared to accessing services hosted in a nearby data center.

Another source of latency in cloud computing is the network infrastructure connecting the user and the cloud server. Network congestion, router limitations, and other bottlenecks can cause delays in data transmission and increase latency. Ensuring high-quality network connections and robust network infrastructure is crucial for minimizing latency in cloud computing.

Latency can affect different aspects of cloud computing. In storage services, higher latency slows down data retrieval and uploads. The effect is especially significant when an operation involves many separate requests, such as backing up thousands of small files, because every request pays the round-trip delay. For example, retrieving files or performing backups from cloud storage may take noticeably longer when latency is high.
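The cost of many small requests can be demonstrated with a small simulation. The Python sketch below uses `asyncio.sleep` as a stand-in for a hypothetical 50 ms round trip per request (no real network is involved) and compares fetching ten items one after another with issuing them concurrently. Concurrency is one common way clients soften the impact of latency on bulk operations; whether a given storage service permits it, and how many requests at once, is an assumption to check for that service.

```python
import asyncio
import time
from typing import Callable, Coroutine

SIMULATED_RTT_S = 0.05  # hypothetical 50 ms round trip per request


async def fetch(item: int) -> int:
    """Stand-in for a storage request that costs one round trip."""
    await asyncio.sleep(SIMULATED_RTT_S)
    return item


async def sequential(n: int) -> list[int]:
    # Each request waits for the previous one: n round trips in total.
    return [await fetch(i) for i in range(n)]


async def concurrent(n: int) -> list[int]:
    # All requests are in flight at once: roughly one round trip in total.
    return list(await asyncio.gather(*(fetch(i) for i in range(n))))


def timed(coro_fn: Callable[[int], Coroutine], n: int) -> float:
    """Run one of the strategies above and return elapsed wall-clock seconds."""
    start = time.perf_counter()
    asyncio.run(coro_fn(n))
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"sequential: {timed(sequential, 10):.2f} s")
    print(f"concurrent: {timed(concurrent, 10):.2f} s")
```

The sequential version takes about ten simulated round trips, while the concurrent one finishes in roughly the time of a single round trip.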

Similarly, latency affects the performance of cloud-based applications. In remote desktop scenarios, where the graphical user interface of an application is delivered over the network, high latency can result in noticeable delays between user actions and the response of the application. These delays can result in reduced productivity, decreased user satisfaction, and hindered collaboration in cloud-based work environments.

Latency also impacts real-time communication and collaboration tools offered through cloud-based services. Voice and video calls, screen sharing, and real-time document collaboration can suffer from delays and disruptions if latency is high. High-quality real-time interactions require low latency to maintain smooth and seamless communication.

To mitigate latency in cloud computing, several approaches can be taken. Cloud providers often employ content delivery networks (CDNs) that distribute data to geographically dispersed servers, reducing latency by bringing the data closer to the end-user. Additionally, optimizing the network connection, implementing caching mechanisms, and using edge computing technologies can all help reduce latency and improve cloud computing performance.

Understanding the impact of latency in cloud computing is crucial for businesses and individuals relying on cloud services. By identifying latency bottlenecks and implementing measures to minimize latency, users can optimize their cloud computing experience, enhance productivity, and ensure efficient utilization of cloud resources.

Strategies for Reducing Latency

Reducing latency is essential for optimizing network performance and ensuring a smooth user experience. Whether it’s browsing the web, streaming videos, or online gaming, implementing strategies to minimize latency can significantly improve responsiveness and overall satisfaction. Here are some effective strategies for reducing latency:

1. Choose a Reliable Internet Service Provider (ISP): Selecting a reliable ISP that offers stable and high-quality internet connections is crucial. ISPs with good network infrastructure and minimal network congestion can help reduce latency and provide a more reliable connection.

2. Optimize Your Network Setup: Ensure your home or office network is properly configured for optimal performance. Use a wired network connection instead of a wireless one whenever possible, as wired connections generally have lower latency. Additionally, make sure your router is up to date and properly configured for efficient data transmission.

3. Minimize Network Congestion: Limit the number of devices using the network simultaneously, especially for bandwidth-intensive activities. Congested networks can lead to higher latency due to increased competition for network resources. Prioritize latency-sensitive traffic, such as online gaming or video streaming, over less time-critical activities.

4. Optimize DNS Resolution: Domain Name System (DNS) resolution can introduce latency when translating domain names into IP addresses. Consider using a fast and reliable DNS resolver or employing a DNS caching mechanism to reduce DNS lookup times and improve overall latency.
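A DNS cache simply remembers recent answers so repeated lookups skip the network. The sketch below wraps Python’s `socket.getaddrinfo` in a small time-limited cache. Because `getaddrinfo` does not expose the record’s real TTL, a fixed 60-second lifetime is assumed here; operating systems and resolvers normally cache for you, so this only illustrates the idea. The `CachingResolver` class name is hypothetical.

```python
import socket
import time
from typing import Callable


class CachingResolver:
    """Caches name lookups for a fixed time to avoid repeated DNS round trips."""

    def __init__(self, ttl_s: float = 60.0,
                 resolver: Callable = socket.getaddrinfo) -> None:
        self.ttl_s = ttl_s        # assumed lifetime, not the record's real TTL
        self.resolver = resolver  # injectable so the cache can be tested offline
        self._cache: dict[tuple[str, int], tuple[float, list]] = {}

    def lookup(self, host: str, port: int) -> list:
        key = (host, port)
        now = time.monotonic()
        hit = self._cache.get(key)
        if hit is not None and now - hit[0] < self.ttl_s:
            return hit[1]                   # fresh cached answer, no lookup
        result = self.resolver(host, port)  # cache miss: query the resolver
        self._cache[key] = (now, result)
        return result
```

With `r = CachingResolver()`, the first `r.lookup("example.com", 443)` goes to the system resolver and a repeat call within 60 seconds returns the stored answer immediately.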

5. Utilize Content Delivery Networks (CDNs): CDNs distribute content across multiple servers geographically closer to end-users, reducing latency by minimizing the distance data needs to travel. CDN caching ensures that popular content is readily available, resulting in faster data retrieval and reducing latency for users.

6. Utilize Edge Computing: Edge computing brings computation and data storage closer to the end-user, reducing the need for data to travel long distances to centralized servers. By processing data closer to the edge of the network, latency can be significantly reduced, resulting in faster response times and improved application performance.

7. Consider Server Location: For businesses hosting their own servers or utilizing cloud services, selecting server locations closer to the target audience can reduce latency. The physical distance between the server and the users plays a significant role in determining latency, so choosing server locations strategically can lead to improved performance.

8. Implement QoS (Quality of Service): Quality of Service mechanisms prioritize network traffic based on specific requirements. By assigning higher priority to latency-sensitive activities, such as video conferencing or online gaming, latency for these activities can be reduced, resulting in a smoother and more responsive experience.
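Applications can request priority treatment by marking their packets, for example with the DSCP Expedited Forwarding value (46) commonly used for voice traffic. The Python sketch below sets that marking on a UDP socket through the `IP_TOS` socket option. This works on Linux and macOS, while Windows typically ignores the option when set this way. A marking only helps if the routers and switches along the path are configured to honor it, and networks often reset or ignore markings from outside, so treat this as an illustration of the mechanism rather than a guaranteed speed-up.

```python
import socket

DSCP_EF = 46           # Expedited Forwarding, commonly used for voice
TOS_EF = DSCP_EF << 2  # DSCP occupies the top 6 bits of the TOS byte: 0xB8


def make_marked_udp_socket(tos: int = TOS_EF) -> socket.socket:
    """Create a UDP socket whose outgoing packets carry the given TOS/DSCP byte."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, tos)
    return sock


if __name__ == "__main__":
    with make_marked_udp_socket() as s:
        print(hex(s.getsockopt(socket.IPPROTO_IP, socket.IP_TOS)))  # 0xb8 on Linux
```

The shift by two bits is needed because the TOS byte keeps its lowest two bits for congestion signaling (ECN), with the DSCP value stored above them.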

9. Monitor and Optimize Network Performance: Regularly monitor your network performance using diagnostic tools, such as ping or traceroute, to identify latency hotspots. Analyze the results and take necessary steps to optimize network configurations, resolve bottlenecks, and reduce latency for improved overall performance.

Implementing these strategies can help reduce latency, enhance network performance, and improve user experience across a range of activities, from gaming and streaming to general web browsing. By actively managing and minimizing latency, users can enjoy faster response times, reduced delays, and a more seamless and efficient network experience.

Testing Latency: Ping and Traceroute

Testing latency is crucial for assessing the performance of a network connection and identifying potential latency issues. Two commonly used tools for testing latency are Ping and Traceroute. These tools provide valuable insights into the network’s responsiveness and help diagnose latency-related problems. Let’s take a closer look at Ping and Traceroute:

Ping: Ping is a simple and widely used network diagnostic tool that measures the round-trip time (RTT) between two devices on a network. It sends small ICMP Echo Request packets to a target destination and measures how long each Echo Reply takes to come back. The measured RTT indicates the latency between the sender and receiver: the lower the RTT, the lower the latency.

Ping also reports packet loss and, on many systems, a measure of how much the RTT varies, which is closely related to jitter. Packet loss is the percentage of packets that never receive a reply; protocols such as TCP must retransmit lost data, which adds delay and hurts network performance. Jitter refers to variation in latency over time and matters most for real-time applications, such as voice and video calls or online gaming, where consistently low latency is crucial for a smooth user experience.
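The summary figures that ping prints can be reproduced from a list of RTT samples. The Python sketch below reports packet loss, minimum, average, and maximum RTT, plus two variation measures: the population standard deviation (similar in spirit to the `mdev` value printed by ping on Linux) and a simple jitter figure, the mean absolute difference between consecutive replies. Jitter has several definitions (RTP, for instance, uses a smoothed running estimate), so this is one reasonable choice rather than the standard one. Lost probes are represented as `None`, and the sample data is hypothetical.

```python
from statistics import mean, pstdev
from typing import Optional


def ping_summary(samples_ms: list[Optional[float]]) -> dict[str, float]:
    """Summarise RTT probes; None marks a probe that got no reply."""
    replies = [s for s in samples_ms if s is not None]
    loss_pct = 100 * (len(samples_ms) - len(replies)) / len(samples_ms)
    if not replies:
        return {"loss_pct": loss_pct}
    # Jitter here: mean absolute change between consecutive replies.
    diffs = [abs(b - a) for a, b in zip(replies, replies[1:])]
    return {
        "loss_pct": loss_pct,
        "min": min(replies),
        "avg": mean(replies),
        "max": max(replies),
        "stdev": pstdev(replies),
        "jitter": mean(diffs) if diffs else 0.0,
    }


if __name__ == "__main__":
    probes = [21.0, 23.0, None, 22.0, 30.0]  # hypothetical RTTs, one lost
    print(ping_summary(probes))
```

For these samples the loss is 20% and the average is 24 ms, but the 30 ms outlier raises the jitter to about 3.7 ms. A connection with a low average and high jitter is fast on average yet inconsistent, which real-time applications handle poorly.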

Traceroute: Traceroute is another useful network diagnostic tool that traces the route packets take from the source device to the destination. It sends probe packets with a steadily increasing time-to-live (TTL) value; each router that discards a probe because its TTL has expired replies with an ICMP Time Exceeded message, revealing its address and the round-trip time to that hop. The resulting hop-by-hop breakdown helps identify specific points where latency might be occurring.

Traceroute lists each router or device in the path together with its response time. By analyzing the Traceroute results, network administrators can identify network segments or devices where latency is particularly high, which makes latency issues easier to pinpoint and resolve.
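Finding the problem segment usually means looking for the hop where latency rises and stays high for every later hop. The Python sketch below takes hypothetical traceroute results (three probe times per hop, as traceroute sends by default) and reports the hop that adds the most persistent latency. Each hop’s "floor" is the lowest median RTT from that hop onward, because routers often answer traceroute probes at low priority: a slow reply from a middle hop that later hops do not share is usually not a real delay. Hops that did not answer (shown as `*`) would need to be filtered out first, and at least one hop is assumed.

```python
from statistics import median


def worst_segment(hops: list[list[float]]) -> tuple[int, float]:
    """Return (hop_number, added_ms) for the hop where latency rises most.

    hops[i] holds the probe RTTs in ms for hop i + 1. A hop's floor is the
    lowest median RTT seen from that hop onward, so isolated spikes that
    later hops do not share are ignored.
    """
    medians = [median(probes) for probes in hops]
    floors = medians[:]
    for i in range(len(floors) - 2, -1, -1):
        floors[i] = min(floors[i], floors[i + 1])
    best_hop, best_jump = 1, floors[0]  # first hop: rise from the source (0 ms)
    for i in range(1, len(floors)):
        jump = floors[i] - floors[i - 1]
        if jump > best_jump:
            best_hop, best_jump = i + 1, jump
    return best_hop, best_jump


if __name__ == "__main__":
    trace = [
        [1.1, 0.9, 1.0],    # home router
        [8.2, 7.9, 8.5],    # ISP edge
        [60.4, 9.1, 58.7],  # slow replies to probes only, not a real delay
        [9.8, 10.2, 10.0],
        [85.3, 84.9, 86.1],  # large rise that persists to the end
        [86.0, 85.7, 86.4],
    ]
    hop, added = worst_segment(trace)
    print(f"hop {hop} adds about {added:.1f} ms")
```

In this example hop 3 looks slow on its own, but hop 4 is fast again, so the sketch instead flags hop 5, where about 75 ms is added and carried through to the destination.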

Ping and Traceroute are typically run from the command line on a computer and are available on various operating systems, including Windows, macOS, and Linux (on Windows, the traceroute command is named tracert). Ping and Traceroute can also be used through online diagnostic tools, where the user enters the destination IP address or domain name, and the tool performs the tests and displays the results.

When testing latency with Ping and Traceroute, it’s important to remember that the results provide a snapshot of latency at a specific point in time. Latency can fluctuate based on various factors, such as network congestion or server load. Running multiple tests over different time periods and comparing the results can provide a more comprehensive assessment of latency performance.

By utilizing Ping and Traceroute, network administrators can gain valuable insights into latency, packet loss, and network routing. This information helps diagnose latency issues, optimize network configurations, and ensure an efficient and responsive network environment.